Published online Sep 20, 2023. doi: 10.5662/wjm.v13.i4.210

Peer-review started: January 27, 2023

First decision: April 20, 2023

Revised: June 9, 2023

Accepted: July 6, 2023

Article in press: July 6, 2023

Published online: September 20, 2023

Processing time: 235 Days and 10.6 Hours

Online surveys can align with youth’s increased use of the internet and can be a mechanism for expanding youth participation in research. This is particularly important during the coronavirus disease 2019 (COVID-19) pandemic, when in-person interactions are limited. However, the advantages and drawbacks of online systems used for research need to be carefully considered before utilizing such methodologies.

To describe and discuss the strengths and limitations of an online system developed to recruit adolescent girls for a sexual health research study and conduct a three-month follow-up survey.

This methodology paper examines the use of an online system to recruit and follow participants three months after their medical visit to evaluate a mobile sexual and reproductive health application, Health-E You/Salud iTu™, for adolescent girls attending school-based health centers (SBHCs) across the United States. SBHC staff gave adolescent girls a web link to an online eligibility and consent survey. Participants were then asked to complete two online surveys (baseline and 3-month follow-up). Surveys, reminders, and incentives to complete them were distributed through short message service (SMS) text messages. Upon completing each survey, participants were also sent an email with a link to an electronic gift card as a thank-you for their participation. Barriers to implementing this system were discussed with clinicians and staff at each participating SBHC.

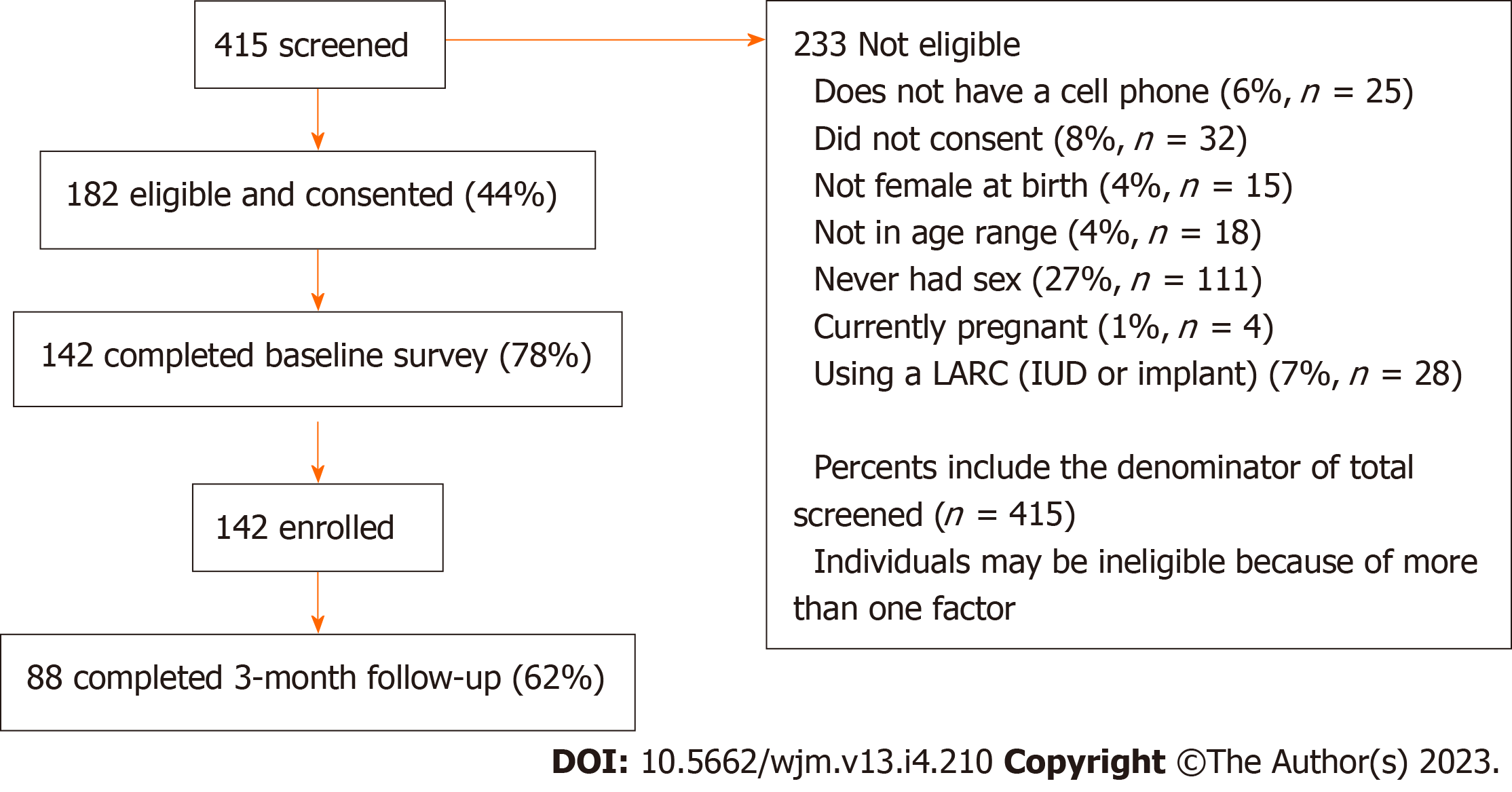

This online recruitment and retention system enabled participant recruitment at 26 different SBHCs in seven states across the United States. Between September 2021 and June 2022, 415 adolescent girls were screened using the Qualtrics online survey platform, and 182 were eligible to participate. Of those eligible, 78.0% (n = 142) completed the baseline survey. Participants were racially, geographically, and linguistically diverse. Most of the participants (89.4%) were non-White, and 40.8% spoke Spanish. A total of 62.0% (n = 88) completed the 3-month follow-up survey. Limitations of this system included reliance on internet access (via Wi-Fi or cell service), which was not universally available or reliable. In addition, an individual unrelated to the study obtained the survey link, filled out multiple surveys, and received multiple gift cards before the research team discovered and stopped this activity. As a result, additional security protocols were instituted.

Online systems for health research can increase the reach and diversity of study participants, reduce costs for research personnel time and travel, allow for continued study operation when in-person visits are limited (such as during the COVID-19 pandemic), and connect youth with research using technology. However, there are challenges and limitations to online systems, which include limited internet access, intermittent internet connection, data security concerns, and the potential for fraudulent users. These challenges should be considered prior to using online systems for research.

Core Tip: Online systems for health research have the potential to reach larger and more diverse audiences than traditional in-person recruitment methods. They can also decrease the cost and time needed for recruitment. This paper provides a case study of the online system developed and used to evaluate Health-E You/Salud iTu™, an interactive mobile sexual and reproductive health application (app) for adolescent females used in conjunction with school-based health centers. This study demonstrates the strengths and limitations of online systems used for research.

- Citation: Salem M, Pollack L, Zepeda A, Tebb KP. Utilization of online systems to promote youth participation in research: A methodological study. World J Methodol 2023; 13(4): 210-222

- URL: https://www.wjgnet.com/2222-0682/full/v13/i4/210.htm

- DOI: https://dx.doi.org/10.5662/wjm.v13.i4.210

Online systems were widely used prior to the coronavirus disease 2019 (COVID-19) pandemic; however, the pandemic has increased the need for researchers to consider alternative methods to continue their study activities remotely[1,2]. Online systems to recruit and consent participants in research studies can be more time-efficient and reduce costs associated with traditional, in-person approaches[3,4]. More recently, online recruitment efforts for health research have been conducted with targeted web-based strategies on social media platforms[2,5,6]. However, online recruitment can also be useful for clinic-based sampling to evaluate interventions aimed at improving clinical care. In addition, online recruitment methods may be particularly effective for adolescents, given their increased use of mobile technologies, including smartphones, tablets, and laptops[7,8]. There is value beyond recruitment; online systems can be used to automatically send participants links to follow-up surveys and reminders to complete them, and to distribute e-gift card incentives[9,10].

The utilization of mobile technologies can extend the geographical reach and promote the participation of diverse adolescent populations in health research. Our study specifically focuses on adolescents aged 13-19 years, as that is the common age of high school students utilizing our participating school-based health centers (SBHCs). It is important to note that there is variation in the definition of adolescents. For instance, the World Health Organization defines adolescence as between ages 10-19[11], while the American Academy of Pediatrics considers adolescence as between ages 11-21[12]. Almost all adolescents in the United States (US) (95%) have access to smartphones, and 45% said that they are “almost constantly” online[8]. This is true for youth from lower socio-economic and diverse racial/ethnic backgrounds. Specifically, 93% of low-income adolescents, 96% of Hispanic, and 91% of Black adolescents have access to a smartphone[8]. Further, among US smartphone owners, young adults, those with no college education, and those with lower income levels are most likely to use their mobile phone as their main source to access the internet[13]. At the same time, studies show that youth from diverse racial/ethnic, sexual orientation, gender identity, and low-income backgrounds are often underrepresented in research[14-16]. Thus, using these technologies can potentially expand youth participation, increase the diversity of participants[8,17,18], and reach youth from historically under-resourced communities[18]. A recent study comparing virtual recruitment studies to in-person recruitment for medical research found that virtual studies were able to enroll participants from more geographically diverse regions and recruit higher percentages of females[19]. Online recruitment is more cost-effective and time-efficient than in-person recruitment. 
In-person recruitment involves greater costs associated with travel to clinic study sites and study staff time spent on recruitment activities[5]. Online surveys can also be translated and accessed in multiple languages, further expanding accessibility for non-English speaking participants. They can also include audio features for groups with lower literacy levels[20]. Online surveys are, therefore, an opportunity to expand youth participant reach for populations who have been historically excluded from health research and, in doing so, they can help reduce inequities in research participation related to gender, race, ethnicity, education, location, age, and language spoken.

Using mobile technology for health research data collection can also improve data quality. Online surveys can increase participants’ comfort in completing health surveys, especially those on sensitive topics (e.g., sexual health and behaviors)[18]. Research shows that adolescents are more comfortable disclosing health information on a computer than to a trained health educator, even when they know it will be shared with their clinician[21]. Additionally, online surveys have been shown to reduce social desirability biases compared to interviewer-administered modes[20,22]. In one study that analyzed social desirability for a sexual health survey, respondents who completed the survey over the internet were more likely than those who responded over the telephone to report more than one sexual partner, indicating that online surveys can decrease social desirability bias[22].

Despite the promise of technology in promoting access to research for diverse populations, barriers remain. While adolescents’ use of smartphones has increased for all income levels and races/ethnicities, approximately 5% of adolescents still do not have access[8]. The “digital divide” persists in many groups. In particular, those from rural regions[23] and low-income communities[24] across the US have unequal access to reliable internet. Although recent studies show that mobile phone ownership is becoming more evenly distributed among diverse populations[8], divides in internet access exist among school-aged youth of different household incomes[24]. Thus, the “digital divide” has the potential to create barriers to online recruitment efforts and can perpetuate inequalities in health research for some populations.

Online recruitment methods can also create barriers to obtaining informed consent, risk the inclusion of fraudulent users, and hinder participant retention over time. These barriers have been identified in a national study that used online surveys for sexual and gender minority adolescent health research[16]. Obtaining consent online may make it difficult for some participants to fully understand their rights, risks, and benefits of participating in research. Even when the language is simplified, consent can be complicated. When informed consent is done online, there is no research staff present and readily available to answer questions, provide clarification, and ensure the potential participants’ understanding[16]. While potential participants can be encouraged to contact research staff with questions, they are not required to do so. To compensate for this limitation and increase comprehension of the consent and study processes, researchers have used videos, consent quizzes, and interactive follow-up methods[16]. Online surveys are also prone to fraudulent or repeat users, especially when incentives are distributed for survey completion[16,25]. For example, an online study on COVID-19’s impact on LGBTQ+ populations resulted in 62% fraudulent survey responses due to the infiltration of bots[25]. Actions such as robust built-in data safety measures, requiring the same data point throughout the survey, and avoiding automatic incentive payments when the survey is complete, can decrease fraudulent users. However, implementing these safeguards is time-consuming for researchers and may decrease participation from authorized participants due to increased survey time and potential time delays for incentive distribution after survey completion[25]. 
In addition, participant retention over time may also be problematic when online recruitment strategies are used due to a lack of personal contact, and participants’ early interest in the study may fade over time, especially without a personal connection to the study[26,27].

Despite these technology-related limitations, there are several advantages to online research methodologies. They can allow research to continue during COVID isolation periods[1,2], reduce research-related personnel and travel costs for researchers and participants[5], and improve the feasibility and efficiency of conducting research across multiple geographic regions, thereby increasing access to research for diverse populations of youth[8,17,19]. Due to ever-changing technology landscapes, there is a need for additional research on best practices for online survey recruitment, data collection, and participant retention strategies.

There are only a few papers that describe online study processes used in conducting adolescent sexual and reproductive health (SRH) research; however, these papers are not primarily focused on methodologies. These studies include an online human immunodeficiency virus (HIV) prevention study called YouthNet[27], an online adolescent and young adult HIV study called Just/Us[28], and an adolescent SRH study focused on online social media recruitment called SpeakOut[29]. There are a few gaps in this literature that our methodology paper seeks to address. The papers describing YouthNet[27], Just/Us[28], and SpeakOut[29] are focused on online recruitment and enrollment methods, but do not go into detail about survey programming, settings, security, monitoring, and tracking. In addition, our study differs from these studies because it: (1) uses a hybrid approach of recruiting in person and collecting data online; and (2) engages with a youth advisory board that informed our research methods. Specifically, we engaged youth input to ensure the inclusivity of genders and improve participant retention. This methodology paper aims to address these gaps and expand the literature on online data collection processes for adolescent health research.

This methodology paper provides a case study of an online system that we developed to evaluate Health-E You/Salud

The eligibility, baseline and follow-up surveys were programmed into the Qualtrics online survey platform[31]. The eligibility survey took less than 5 minutes to complete, and the baseline and 3-month follow-up surveys took approximately 10 minutes. These surveys can be taken on any device (e.g., smartphone, tablet, or computer) connected to the internet.

Clinic staff were asked to provide all adolescents coming into the participating SBHC with a link to the online eligibility survey. Clinic staff distributed the eligibility survey link via business cards that had a QR code. In addition, clinics hung posters that included information about the study along with the survey QR code and bit.ly shortened survey link. This link directed adolescents to information about the study, and it then asked about their interest in participating, assessed eligibility, and obtained informed consent.

Consented participants were then asked to provide their cell phone number, which was saved securely in Qualtrics and used to distribute subsequent survey links through SMS text messages[9]. To link the eligibility, baseline, and follow-up surveys and to protect participants’ confidentiality, we created a unique participant identifier (ID) that included the participant’s first letter of their first name, the first letter of their last name, birth date, and birth month.
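As a rough illustration of the identifier described above, the following Python sketch assembles an ID from the four components named in the text. The function name, the uppercase initials, and the zero-padded day-then-month layout are assumptions made for this example; the paper does not specify the exact format used in Qualtrics.

```python
def make_participant_id(first_name: str, last_name: str,
                        birth_day: int, birth_month: int) -> str:
    """Build an ID from two initials, day of birth, and month of birth."""
    first = first_name.strip()
    last = last_name.strip()
    if not first or not last:
        raise ValueError("first and last name are required")
    if not (1 <= birth_month <= 12 and 1 <= birth_day <= 31):
        raise ValueError("birth day or month out of range")
    # Assumed layout: uppercase initials, then zero-padded day and month.
    return f"{first[0].upper()}{last[0].upper()}{birth_day:02d}{birth_month:02d}"


print(make_participant_id("maria", "Lopez", 7, 11))  # ML0711
```

An identifier built this way is not guaranteed to be unique: two participants with the same initials and birthday would share an ID, so a repeated ID should be reviewed rather than automatically treated as one person.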

After creating a unique ID, participants were immediately texted a link to the baseline survey prior to the visit with their SBHC clinician. This SMS text was generated and distributed through Qualtrics. We also set up the Qualtrics system to distribute texts at 1 and 2 months after baseline to remind participants about when they would receive the link for the 3-month follow-up survey with the goal of increasing retention rates. In addition, 3 months after baseline, participants received an SMS text with the link to the 3-month follow-up survey. Participants received SMS text reminders to complete the 3-month survey beginning 24 hours after receiving the link and every other week for up to 2 months (for a maximum of three text reminders and two email reminders) as part of our retention efforts.
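The text-message timeline above can be sketched as simple date arithmetic. This is only an approximation under stated assumptions: a "month" is treated as 30 days, "every other week" as 14 days, and the function and key names are hypothetical. The timing of the two email reminders is not described in the text, so they are left out.

```python
from datetime import date, timedelta

DAYS_PER_MONTH = 30  # assumption: the paper does not define a "month" in days


def followup_schedule(baseline: date, max_text_reminders: int = 3) -> dict:
    """Return the assumed dates for each SMS touchpoint after baseline."""
    link_date = baseline + timedelta(days=3 * DAYS_PER_MONTH)
    first_reminder = link_date + timedelta(days=1)  # 24 hours after the link
    return {
        # Texts at 1 and 2 months announcing the upcoming follow-up survey.
        "heads_up": [baseline + timedelta(days=m * DAYS_PER_MONTH) for m in (1, 2)],
        # Text containing the 3-month follow-up survey link.
        "followup_link": link_date,
        # Reminders: one day after the link, then every other week.
        "text_reminders": [first_reminder + timedelta(weeks=2 * i)
                           for i in range(max_text_reminders)],
    }


schedule = followup_schedule(date(2021, 9, 1))
print(schedule["followup_link"])  # 2021-11-30
```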

The National School-Based Health Alliance Youth Advisory Board (YAB) informed the language on the SMS texts and schedule of the SMS reminders to maximize recruitment and retention. The YAB recommended that the name of the principal investigator be included to increase the “friendliness” of the text and decrease the risk of it appearing as spam. They reviewed the language of the messages to ensure that they were gender-inclusive, reduced the length of the text messages, and provided language about the incentives for completing the survey. They also provided input on the look of the cards and posters used at the SBHCs to promote the study.

Participant incentives were also distributed through an online system. Qualtrics was used in conjunction with an electronic gift card (e-gift card) system. For this study, we used Rewards Genius, operated by Tango Card[32]. The Qualtrics/Tango integration was included with our University’s Qualtrics license and allowed for the automatic distribution of digital rewards (e-gift cards) to survey respondents. The Rewards Genius system allowed us to select the monetary amount of the gift card for each specific survey, limit the maximum amount of gift cards distributed, and included a setting to prevent the distribution of multiple gift cards to the same email for the same survey. Rewards Genius has a self-serve online portal to track how many incentives have been sent and monitor the incentive budget. At the end of each survey, the participant was asked how they would like to receive their gift card. If they provided their email, the participant immediately received an email from Tango with a link to redeem a gift card of their choice. If a participant did not have (or did not provide) an email, they could request that their gift card be texted to them. For the SMS option, gift cards were sent manually (as there was no automated option available in this system). To do this, the research assistant downloaded survey data from Qualtrics every other week and used Stata to export the participants’ cell phone numbers, without a corresponding email, into an Excel sheet. Research staff manually texted each of these participants with a link to a gift card.
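The manual step at the end of this process, listing the phone numbers of respondents who gave no email, was done by the study team in Stata. The sketch below shows the same filtering idea in Python rather than the authors' code; the field names `email` and `phone` are assumed for illustration and are not taken from the Qualtrics export.

```python
def phones_needing_manual_sms(responses):
    """Return unique phone numbers for respondents who gave no email."""
    phones = []
    seen = set()
    for row in responses:
        email = (row.get("email") or "").strip()
        phone = (row.get("phone") or "").strip()
        # Keep only rows with a phone number and no email, once per number.
        if phone and not email and phone not in seen:
            seen.add(phone)
            phones.append(phone)
    return phones


rows = [
    {"email": "a@example.org", "phone": "555-0100"},
    {"email": "", "phone": "555-0101"},
    {"email": None, "phone": "555-0101"},  # repeated number, listed once
]
print(phones_needing_manual_sms(rows))  # ['555-0101']
```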

Qualtrics has a variety of setting options that are important to consider for each survey. In this study, Qualtrics was set to record incomplete surveys for partial data after 1 week for the eligibility survey and 1 month for the baseline and 3-month follow-up surveys. The eligibility survey was open for 1 week to decrease the chance of an individual using the QR codes multiple times. The 3-month follow-up survey remained open for 1 month after the initial completion date to increase participant response rates. Each survey included a back button for participants to change or review their responses, skip logic to route participants to different questions based on their prior responses, and a Qualtrics setting to ensure that email and phone numbers were entered correctly.

Data were securely stored in the Qualtrics database. Qualtrics is General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA) compliant and provides technology for users to be compliant as well[33]. As mentioned previously, to further protect participants’ confidentiality, data from the eligibility survey were stored separately from the baseline and follow-up surveys. The baseline and follow-up surveys included the participant’s unique ID, so there was no way to link an individual to their survey responses, even in the rare event that the back-end database was hacked. Rewards Genius and Tango Card also protect data and privacy through GDPR and the CCPA in addition to multi-factor authentication[32]. Only authorized research staff had access to these systems. We also set up a code within Qualtrics that includes reCAPTCHA (a CAPTCHA, or Completely Automated Public Turing Test to Tell Computers and Humans Apart) data to identify bots and relevant ID data to prevent duplicate responses, fraudulent users, and the distribution of multiple gift cards to any individual user. The security system in Qualtrics was vital because our online surveys were attached to incentive gift card distribution. Participants or hackers may be motivated to complete the surveys multiple times with inaccurate responses to obtain e-gift cards[33].

Monitoring participant enrollment, follow-up survey completion, and gift card distribution was critical to the integrity of this study. Data, including the participant variables included in the unique ID and participant contact information from the eligibility, baseline, and 3-month surveys, were downloaded from Qualtrics by SBHC in each state. The participant’s unique ID was used to match the baseline and follow-up surveys using Stata programming. The Stata code identified potential duplicates, participant identification data errors, and participants without emails who needed manual (SMS) gift card incentive deliveries. Fraudulent and repeat users were identified during this data monitoring process through duplicate unique IDs, emails, or phone numbers. The code also produced Excel files of unmatched surveys, including participants who were eligible but did not complete a baseline survey and/or participants who completed the baseline but not the 3-month follow-up survey. This monitoring system allowed us to manually send unmatched participants an SMS text reminder with the survey link. As part of data monitoring efforts, enrollment and 3-month survey completion rates were provided to each participating SBHC on a monthly basis. The investigators discussed these data with clinicians and staff champions from each site, along with implementation successes and challenges.
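The matching and duplicate checks described above were implemented by the authors in Stata; that code is not reproduced in the paper. As a minimal sketch of the same logic in Python, the two hypothetical helpers below flag repeated values (IDs, emails, or phone numbers) and list participants who are missing a later survey.

```python
from collections import Counter


def find_duplicates(values):
    """Return the values that appear more than once, ignoring blanks."""
    counts = Counter(v for v in values if v)
    return sorted(v for v, n in counts.items() if n > 1)


def unmatched(eligible_ids, baseline_ids, followup_ids):
    """List IDs that reached one stage but not the next."""
    eligible = set(eligible_ids)
    baseline = set(baseline_ids)
    followup = set(followup_ids)
    return {
        "eligible_no_baseline": sorted(eligible - baseline),
        "baseline_no_followup": sorted(baseline - followup),
    }


print(find_duplicates(["ML0711", "AR2304", "ML0711", ""]))  # ['ML0711']
print(unmatched(["A", "B", "C"], ["A", "B"], ["A"]))
# {'eligible_no_baseline': ['C'], 'baseline_no_followup': ['B']}
```

Running `find_duplicates` separately on IDs, emails, and phone numbers mirrors the three signals the text lists; which matches count as fraud remains a judgment for study staff.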

We worked with SBHCs in diverse locations that largely serve youth who are underserved by the broader health care system. To increase the representation and diversity in our sampling, we asked SBHC staff to provide all adolescents coming for care the opportunity to participate in the study and use the app (when in intervention mode). In addition, we used data monitoring to ensure that the online sampling was representative of youth at the SBHCs. For each school year, we compared the demographics of the survey responses with the retention rates. While we encouraged the distribution to all adolescents and had an incentive system to encourage participation, our sample is one of convenience and relied on the youth’s willingness to participate.

The statistical methods of this study were reviewed by Lance Pollack, PhD at the University of California San Francisco.

Informed consent was obtained from all participants through an online consent process. The research protocols and study were approved by ethics review boards at the University of San Francisco.

In the academic year between September 2021 and June 2022, 26 SBHCs across seven states (California, Illinois, Massachusetts, Michigan, Minnesota, New York, and Texas) agreed to participate in this study. A total of 415 adolescents were screened to determine eligibility. The number screened reflects participants who at least clicked on the link that the SBHC staff provided them. Of these, 43.9% (n = 182) were eligible based on study inclusion criteria. There were no significant differences between ineligible and eligible participants when comparing age, race/ethnicity, and language spoken at home. Of the 182 eligible participants, 78.0% (n = 142) enrolled and completed the baseline survey (Figure 1). Of the enrolled participants, all were assigned female sex at birth (per inclusion criteria); 95.1% (n = 135) identified as female, 2.8% (n = 4) as non-binary, 1.4% (n = 2) as male or transgender male, and 0.7% (n = 1) as gender fluid (Table 1). The mean age was 16.7 (SD ± 1.1) years. Nearly half (49.3%) of the participants identified as Hispanic/Latin, 15.5% as Black/African American, 12.7% as Asian, 10.6% as White/Caucasian, and 12.0% as multi-racial/ethnic. Many (40.8%) spoke Spanish with their family, either solely or in addition to English.

| Demographics | n (%) |
| --- | --- |
| Gender | |
| Female | 135 (95.1) |
| Male | 1 (0.7) |
| Transgender male | 1 (0.7) |
| Non-binary | 4 (2.8) |
| Gender fluid | 1 (0.7) |
| Sex assigned at birth | |
| Female | 142 (100) |
| Age (years), mean ± SD | 16.7 ± 1.1 |
| Race/ethnicity | |
| Asian | 18 (12.7) |
| Black/African/African Amer | 22 (15.5) |
| Hispanic/Latinx/o/a | 70 (49.3) |
| White/Caucasian | 15 (10.6) |
| Multi-racial/ethnic | 17 (12.0) |
| Speaks Spanish with family | 58 (40.8) |

Of those who were enrolled in the study, 62.0% (n = 88) completed the 3-month follow-up survey. There were no significant differences in age, race/ethnicity, and languages spoken at home between those who completed the 3-month follow-up and those who did not (Table 2). Among the 3-month follow-up survey participants, 95.5% identified as female, and the mean age was 16.8 years (SD ± 1.04). Nearly half (46.6%) identified as Hispanic/Latin, 11.4% as Black/African American, 14.8% as Asian, 12.5% as White/Caucasian, and 14.8% as multi-racial/ethnic.

| Demographics | n (%) |
| --- | --- |
| Gender | |
| Female | 84 (95.5) |
| Transgender male | 1 (1.1) |
| Non-binary | 3 (3.4) |
| Sex assigned at birth | |
| Female | 88 (100) |
| Age (years), mean ± SD | 16.8 ± 1.04 |
| Race/ethnicity | |
| Asian | 13 (14.8) |
| Black/African/African Amer | 10 (11.4) |
| Hispanic/Latinx/o/a | 41 (46.6) |
| White/Caucasian | 11 (12.5) |
| Multi-racial/ethnic | 13 (14.8) |
| Speaks Spanish with family | 34 (38.6) |
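The percentages reported in this section follow directly from the four counts given in the text (415 screened, 182 eligible, 142 enrolled at baseline, 88 at 3-month follow-up). The short Python check below recomputes them; the variable and key names are illustrative only.

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, to one decimal place."""
    return round(100 * part / whole, 1)


screened, eligible, enrolled, followed_up = 415, 182, 142, 88

rates = {
    "eligible_of_screened": pct(eligible, screened),    # 43.9
    "enrolled_of_eligible": pct(enrolled, eligible),    # 78.0
    "followup_of_enrolled": pct(followed_up, enrolled), # 62.0
}
print(rates)
```

Each rate uses the previous stage as its denominator, which is why the follow-up figure describes retention among enrolled participants rather than among everyone screened.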

A few challenges arose with this online data system. As part of ongoing data monitoring efforts, we provided SBHC clinicians and staff with monthly data and discussed implementation barriers. Some staff reported that it was difficult to remember to distribute the link because they had other responsibilities, were short-staffed, or forgot when the adolescent was being seen for a non-reproductive health visit. A total of 21 out of the 26 SBHCs reported being short-staffed or overworked due to ongoing challenges with COVID-19 that increased rates of illness for staff and their family members, exacerbated youth mental health issues, and contributed to burn-out. Additionally, some clinicians and staff reported that internet access and connectivity problems were barriers to youth accessing and completing the surveys. There were internet connectivity issues for cellular data at five SBHCs, limiting access for youth to use their phones to complete the surveys. The high schools affiliated with two SBHCs did not allow students to use their cell phones on campus, so youth could not access the internet until these sites were able to connect tablets to their clinic Wi-Fi, which involved setting up a secure guest Wi-Fi network. As a result, not all potential participants received the eligibility survey link.

Despite protections against duplicate and fraudulent users, there were some duplicate users that we identified and removed, and one individual, not related to the study, hacked the system and obtained multiple e-gift cards. Duplicate users came from SBHCs that provided adolescents with iPads to access the online eligibility survey. Since multiple youth were using the same device, a generic link had to be used to access the online system. Thus, it was not possible to set up Qualtrics to limit the number of times that a survey was completed for respondents using the same device. Duplicates were removed as part of the data monitoring and cleaning process. Additionally, one SBHC posted the survey link on their social media website. An individual, not involved with this SBHC, obtained the survey link to access the survey, identified the study eligibility criteria, and generated multiple, fictitious participant contacts to complete multiple baseline surveys and obtain incentives. The hacker used the system over the weekend, and by Monday, when study staff returned to work and discovered the problem through routine data monitoring, the hacker had completed 668 surveys and obtained $6680 in gift cards. This was the only time in which this issue occurred. All SBHCs were advised to distribute the link only to individual patients coming to their clinic and avoid posting the link on social media or other clinic websites. There was no breach of any participant data during this incident.
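The incident above was caught through routine monitoring after a weekend of activity. One simple check that such monitoring could include is a count of completions per calendar day, flagging any day above a chosen ceiling. The Python sketch below is illustrative only: the function name and threshold are assumptions, and it is not a description of the additional security protocols the study team instituted.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, List


def flag_burst_days(completed_at: Iterable[datetime], max_per_day: int) -> List:
    """Return the calendar dates with more completions than `max_per_day`."""
    per_day = Counter(ts.date() for ts in completed_at)
    return sorted(day for day, n in per_day.items() if n > max_per_day)


# Ten completions on one Saturday and a single completion the following Monday.
stamps = [datetime(2022, 3, 5, hour) for hour in range(10)]
stamps.append(datetime(2022, 3, 7, 9))
print(flag_burst_days(stamps, max_per_day=5))  # [datetime.date(2022, 3, 5)]
```

A sensible ceiling depends on expected clinic volume; for context, this study enrolled 142 participants over roughly ten months, while the fraudulent activity produced 668 surveys in a single weekend.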

This methodological paper provides a case study of the online system used to evaluate the Health-E You/Salud iTu™ app. We found that this system enabled us to recruit and retain a diverse study population without having to deploy study personnel to the 26 SBHC sites across seven states. We found no statistically significant differences between eligible and non-eligible participants based on age, race/ethnicity, and language spoken at home. There were also no significant differences between participants who completed the baseline and participants who completed the 3-month follow-up survey based on age, race/ethnicity, and language spoken at home.

A major strength of this online research system was its ability to reach a diverse group of youth at multiple SBHCs across the nation, including Northern and Southern California, Illinois, Massachusetts, Michigan, Minnesota, New York, and Texas. In addition to geographic diversity, this study recruited a racially diverse sample, with almost half coming from Hispanic/Latin backgrounds and the balance comprising roughly equal proportions of Black, Asian, White, and multi-racial/ethnic participants. Additionally, 40.8% of respondents reported speaking Spanish with their family. The online system allowed participants to participate in their preferred language (English or Spanish), and they could toggle between languages throughout the process. Increasing the diversity of participants’ region, race, and language spoken is vital to increasing the inclusivity and representation of underrepresented populations in health research.

Online research systems can reduce costs and increase efficiency for researchers. They allow for better organization, centrally located documents and materials, and an efficient tracking system that saves researchers time. The study recruitment and surveys were set up online with automatic survey distributions and reminders using Qualtrics settings, which gave our research staff more time to track and communicate with participants individually, meet with clinics more frequently, create and meet with the youth council, and make adaptations to improve the study. Online surveys saved costs on transportation, survey materials, and data collection staff. In this study, these aspects would have been costly due to the number of participating SBHCs across multiple states in the US. However, it should be noted that there can be research costs when a study uses paid ads to recruit via social media or through paid online survey platforms[5] (neither of these was used in this study). Our hybrid approach, which included initial in-person contact (by SBHC staff) followed by online data collection and monitoring, cost less than other online studies[27,29,35]. An online HIV study spent $13,000 on banner advertising[27] and a smoking-cessation study spent $172.76 per participant on social media[35]. In contrast, our study spent approximately $32.77/clinic on promotional materials for direct study recruitment.

Online surveys can also increase the accuracy of survey responses from adolescent participants as well as their comfort in responding to sensitive questions about their SRH. In previous SRH studies, adolescents have reported concerns about clinician judgment, power differential, and a lack of confidentiality[34]. Research also shows that adolescents feel more comfortable answering SRH questions online than in a clinician or researcher interview[21]. In addition, while not examined in the current study, prior research found that social desirability bias occurred when research was conducted in person or on a phone call rather than online[22].

Despite the advantages of online systems, such as those used in the current study, there are important challenges to consider. Although asking clinic staff to distribute the link did not seem burdensome, staff commonly forgot to do so. Clinics reported being short-staffed, overworked, and focused on other pressing priorities at their clinic and/or with their patients, most of which were related to ongoing challenges associated with the COVID-19 pandemic.

Consistent with prior research[16,25], our study found that online recruitment and follow-up surveys can increase the possibility of duplicate survey responses and of invalid survey participants or hackers. When online surveys are automatically linked to gift card incentive distributions (participants receive an e-gift card directly after survey completion), hackers can exploit the incentive system by using code that searches the internet for surveys linked to gift cards. To prevent unsolicited participants from finding online surveys, survey links should not be posted on public online platforms. However, restricting access to survey links can also limit broader recruitment through online platforms such as social media sites. To maximize reach and limit fraud, gift cards could be manually distributed via text or email after each survey is verified, and/or researchers could validate the authenticity of each participant through a follow-up phone call prior to study enrollment. However, both of these methods can increase the burden on research staff and delay the distribution of participants’ gift cards. To limit duplicate and fraudulent users, we implemented robust security systems in Qualtrics (described in the methods section); however, these systems could still fail to detect some fraudulent or duplicate users. Researchers as well as potential participants need to better understand the risks of online research, such as fraudulent users, duplicate users, and potential data security breaches, especially in the context of rapidly evolving computer-based and online technologies.
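As an illustration of the manual verification step discussed above, the following is a minimal sketch of how survey responses might be screened for repeated contact information before a gift card is released. It is not the Qualtrics configuration used in this study; the field names (`response_id`, `email`, `phone`) and the function `flag_for_review` are hypothetical, and the 10-digit phone normalization assumes US numbers.

```python
def normalize_email(email: str) -> str:
    """Lowercase and trim an email address so trivial variants compare equal."""
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    """Keep digits only and retain the last 10 (assumes US phone numbers)."""
    digits = "".join(ch for ch in phone if ch.isdigit())
    return digits[-10:]


def flag_for_review(responses):
    """Return the IDs of responses that reuse an email or phone number
    already seen in an earlier response (hypothetical field names)."""
    seen_emails = set()
    seen_phones = set()
    flagged = []
    for response in responses:
        email = normalize_email(response["email"])
        phone = normalize_phone(response["phone"])
        if email in seen_emails or phone in seen_phones:
            flagged.append(response["response_id"])
        seen_emails.add(email)
        seen_phones.add(phone)
    return flagged


# Illustrative data only; these are not study records.
example_responses = [
    {"response_id": "R1", "email": "a@example.com", "phone": "(415) 555-0101"},
    {"response_id": "R2", "email": "b@example.com", "phone": "+1 415 555 0101"},
    {"response_id": "R3", "email": "c@example.com", "phone": "415-555-0199"},
    {"response_id": "R4", "email": " A@Example.com ", "phone": "415-555-0123"},
]
held_for_review = flag_for_review(example_responses)
```

In a workflow like this, flagged responses would be held for staff review rather than rejected automatically, since siblings or friends may legitimately share a phone or email address. This reflects the trade-off noted above between fraud prevention and staff burden.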

While the retention rates in this study are comparable to those of other online studies focused on SRH, there is a need to improve retention rates in online studies. The recruitment and 3-month retention rates in this study were higher than those of the original cluster RCT (recruitment: 78% vs 57%; 3-month retention: 62% vs 50%)[30]. These improvements may be due to lessons learned from the original trial, additional input from the YAB, and the expanded diversity of the study population. One advantage of this study’s online system, compared to the original RCT, was the use of automatic survey reminders through Qualtrics. Our retention rates were also greater than those of another online HIV prevention study, which had a 53% retention rate at the 2-month follow-up[27]. That HIV study relied heavily on online recruitment through banner advertisements, which is advantageous for reaching a larger and more diverse study sample; however, this approach resulted in approximately 20% of participants being potentially fraudulent. Our study was able to overcome this issue because the intervention, the Health-E You app, is by its very nature designed to be used in conjunction with a health care visit. While data collection was done via our online system, SBHC clinic staff gave the eligibility link directly to real patients, who then entered the online system. In addition, we verified participants by requiring phone numbers to receive future surveys and matched responses across surveys using the match ID and email addresses. In another online HIV study with adolescents and young adults, retention rates were 69% at the 2-month follow-up and 50% at the 6-month follow-up[28]. While these rates are more comparable to those in our study, they are lower than those found in other SRH studies conducted in person[28].

In contrast, the retention rates in the current study are lower than those found in a study that recruited adolescents via social media[29], whose retention rates ranged from 71% (control group) to 79% (intervention group). This is likely because, in that study, once participants were deemed eligible via the online system, a research assistant conducted a follow-up phone call to verify eligibility and randomize them to the intervention or control condition. This approach was not possible in our study, since participants were recruited in clinic and the baseline survey had to be completed before they saw their clinician. Recruiting via social media is advantageous for recruiting a greater number of individuals over a shorter time period, as was demonstrated in the SpeakOut study[29]. In that study, high retention rates can be attributed to the work of research assistants who called each participant to verify their eligibility. This verification process improved the integrity of the data; however, it is a time-consuming and costly approach (in terms of staff time). Our retention rates were higher than those of a smoking cessation study conducted online[35]. That study had an overall 52% 3-month follow-up retention rate and did not find any significant differences in retention rates between the online methods (including social media, ads, and standard media) and the traditional recruitment methods that it used[35]. Despite these variations in retention rates, some research suggests that the overwhelming majority (82%) of young adults prefer online surveys over mail-in surveys and that this preference is greater not only among younger participants but also among those from higher socioeconomic backgrounds[36]. Ongoing efforts to improve retention rates for all participants are needed.
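For readers who wish to reproduce the recruitment and retention percentages compared above, the following short sketch recomputes them from the participant counts reported in the Results (182 eligible, 142 baseline completions, and 88 follow-up completions). The function and variable names are illustrative only.

```python
def percent(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage rounded to one decimal."""
    return round(100 * numerator / denominator, 1)


eligible = 182            # screened and found eligible
completed_baseline = 142  # completed the baseline survey
completed_follow_up = 88  # completed the 3-month follow-up survey

# Recruitment rate: baseline completions among eligible participants.
recruitment_rate = percent(completed_baseline, eligible)           # 78.0

# Retention rate: follow-up completions among baseline completers.
retention_rate = percent(completed_follow_up, completed_baseline)  # 62.0
```

Note that the retention rate uses baseline completers, not all eligible participants, as its denominator. Published retention figures from other studies may use different denominators, so comparisons across studies should be interpreted with that in mind.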

To improve retention rates, researchers have used immediate incentives, continual collection of contact information, and consistent reminders[28,29,35,36]. Our study also used these strategies and achieved retention rates comparable to or slightly better than those found in other studies using similar online methods. Thus, it is important to identify and further investigate additional approaches to recruit and retain participants when using online methodologies. While the use of online systems for research can increase the reach and diversity of study populations, relatively low retention rates can limit the generalizability of study findings.

Researchers using online systems also need to make special efforts to include participants who do not have internet access. Online surveys require internet access through cellular data, a Wi-Fi connection, or an ethernet cable connection, and this requirement can prevent individuals without such access from participating in research. Some may not have cell phones that can access the internet or may have limited data plans with their smartphones. Others may not have household internet access, especially if they live in rural and other underserved areas. Data from the National Center for Education Statistics show that the percentage of households (with youth aged 3 to 18 years old) with home internet access was highest among those who were Asian (99%) and White (97%), and lowest among those who were American Indian/Alaska Native (83%), Pacific Islander (90%), and Black (91%)[37]. Reasons for lack of access included “did not need it/not interested” (50%) and “internet too expensive” (26%)[37]. Rural US residents also have lower access to home internet (72%) than their suburban (79%) and urban (77%) counterparts[23]. In 2021, the United Nations adopted a resolution on the internet as a human right and encouraged countries to adopt “national internet-related public policies that have at their core the objective of universal access”[38]. However, the US lacks a national policy guaranteeing universal access, and internet access disparities remain. Because online survey research systems depend on internet access, it is important for future research to explore ways to recruit and retain participants at more equitable rates rather than excluding populations with limited access. This may require hybrid approaches that combine internet and in-person recruitment methods, as well as partnering with community-based organizations to reach and include groups underrepresented in research.

Another limitation of online-only research studies is the lack of in-person interaction. Although the online system can decrease the time that clinics are required to dedicate to the study, it also limits the interpersonal connection that researchers make while in the clinics. To compensate for this limitation, our research team met with the clinic staff every few months via Zoom; however, a few clinics were unresponsive over email. In-person interaction could potentially increase clinic staff engagement in the study. The lack of in-person contact with participants may also contribute to lower follow-up rates, as participants may feel less of a connection to the researchers and the study.

Lastly, our study is limited because its findings are not generalizable to all adolescents. Our study participants were female at birth, attended SBHCs, were between the ages of 13 and 19 years, and were not currently pregnant or using a LARC device. However, our online methods are still relevant to the overall literature on adolescent health.

This case study of an online system for health research has implications for future research. Ensuring the security of participants’ data is a top priority, as is maintaining the authenticity of participants and data quality. Although there is increasing information available to the public about data security in settings such as the workplace, internet browsing[39], and social media[40], there is a lack of research describing how to ensure data security in research settings. As shown in our study, added measures are critical to safeguard the distribution of electronic gift cards. Researchers need to be fully aware of these risks and vigilant in protecting and monitoring data security[41]. However, health researchers are often not trained in or aware of all of the details involved in data security and need to partner with experts within and/or outside of their institution to ensure that the best possible security protections are in place, such as separately storing data, securing public Wi-Fi networks, and using data leak prevention and protection systems[42,43]. In this study, we worked closely with Qualtrics representatives and our UCSF Salesforce team. As cyber-attacks continue to evolve, new technologies, such as data storage programs and security-protected online files, are emerging to address this growing threat[44]. It is vital for future studies to specifically address ways to maximize outreach and recruitment while ensuring the authenticity of the participants and their responses. This involves exploring online and social media recruitment while instituting robust security measures to verify participants, such as contact information validation and mobile device authentication[25].

Although this study showed ways that online systems can reach more racially, geographically, and linguistically diverse populations, there are still disparities in online access due to limited Wi-Fi and/or cell service[24]. It is important for studies to continue to identify strategies that include diverse populations through online systems and to consider hybrid approaches when online inclusion is not possible. This includes conducting research activities in person with populations that have limited device or internet access. Lastly, there is a need to research the limitations of online systems related to engagement and the connection between the researcher and the participant or clinic staff. It would be helpful for future studies to analyze the extent to which participant engagement is lost in online studies as opposed to in-person studies. As these limitations are addressed, online systems can be a powerful strategy for increasing the reach and diversity of populations included in health research.

Overall, this methodological paper demonstrates the importance of online systems for youth participation in health research and provides examples of methods used to maximize the efficiency of these systems. This case study of the Health-E You/Salud iTu™ app discusses the use of online systems that helped recruit youth via their mobile devices, distribute surveys, monitor participants, and deliver reminders. These systems improved the geographic reach of the study and the inclusion of diverse youth. Additionally, in an era of COVID-19 remote work, increased use of telehealth, and youth’s use of technology, online systems are crucial for health research. Future studies should examine how to leverage technology to further improve reach, diversity, and inclusion of underrepresented groups in research.

Online surveys can align with youth’s increased use of the internet and can be a mechanism for expanding youth participation in research. The utilization of mobile technologies can extend the geographical reach and promote the participation of diverse adolescent populations in health research. Using mobile technology for health research data collection can also improve data quality. However, the advantages and drawbacks of online systems used for research need to be carefully considered before utilizing such methodologies.

There are few methodology papers that describe online study processes in the same field as our study, adolescent sexual and reproductive health (SRH). Those that do include an online human immunodeficiency virus (HIV) prevention study called YouthNet, an online adolescent and young adult HIV study called Just/Us, and an adolescent SRH study focused on online social media recruitment called SpeakOut. However, there is a lack of research on how to specifically involve adolescents in research and on the methods used to ensure diversity, retain adolescents, and maintain data security.

The purpose of this study is to describe and discuss the strengths and limitations of an online system developed to recruit adolescent girls for a sexual health research study and follow them for 3 months. It aims to address the gap in methodology papers and expand the literature on online data collection processes for adolescent health research.

This methodology paper examines the use of an online system to recruit and follow participants to evaluate a mobile SRH application, Health-E You/Salud iTu™, for adolescent females attending school-based health centers (SBHCs) across the US. The paper details the following methodologies for our online study: data collection and survey processes, the electronic gift card incentive system, survey settings, data storage and security, and data monitoring.

This online recruitment and retention system enabled participant recruitment at 26 different SBHCs in seven states across the United States. Between September 2021 and June 2022, 415 adolescent girls were screened using the Qualtrics online survey platform, and 182 were eligible to participate. Participants were racially, geographically, and linguistically diverse; most of the participants (89.4%) were non-White, and 40.8% spoke Spanish. Limitations of this system included reliance on internet access (via Wi-Fi or cell service), which was not universally available or reliable, and issues with individuals outside the study discovering the survey link and completing multiple surveys.

Online systems for health research can increase the reach and diversity of study participants, reduce costs for research personnel time and travel, allow for continued study operation when in-person visits are limited (such as during the coronavirus disease 2019 pandemic), and connect youth with research using technology. The methods detailed here, which use online surveys, online gift card distribution, and online data monitoring and tracking, are new and help address the lack of methodology papers. However, there are challenges and limitations to online systems, which include limited internet access, intermittent internet connection, data security concerns, and the potential for fraudulent users. These challenges should be considered prior to using online systems for research.

This case study of an online system for health research has implications for future research. Ensuring the security of participants’ data is a top priority, as is maintaining the authenticity of participants and data quality. As shown in our study, added measures are critical to safeguard the distribution of electronic gift cards. It is important for studies to continue to identify strategies that include diverse populations through online systems and to consider hybrid approaches when online inclusion is not possible. Lastly, there is a need to research the limitations of online systems related to engagement and the connection between the researcher and the participant or clinic staff.

We thank the adolescent participants in this study, school-based health center staff and clinicians, the National School-Based Health Alliance, and our National Youth Advisory Board for their time, effort, and dedication to this study.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Health care sciences and services

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B, B

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Liu XQ, China; Moshref RH, Saudi Arabia; Wang D, Thailand. S-Editor: Liu JH. L-Editor: Wang TQ. P-Editor: Yu HG.

| 1. | Saberi P. Research in the Time of Coronavirus: Continuing Ongoing Studies in the Midst of the COVID-19 Pandemic. AIDS Behav. 2020;24:2232-2235. |

| 2. | Barney A, Rodriguez F, Schwarz EB, Reed R, Tancredi D, Brindis CD, Dehlendorf C, Tebb KP. Adapting to Changes in Teen Pregnancy Prevention Research: Social Media as an Expedited Recruitment Strategy. J Adolesc Health. 2021;69:349-353. |

| 3. | Amon KL, Campbell AJ, Hawke C, Steinbeck K. Facebook as a recruitment tool for adolescent health research: a systematic review. Acad Pediatr. 2014;14:439-447.e4. |

| 4. | Hoffmann SH, Paldam Folker A, Buskbjerg M, Paldam Folker M, Huber Jezek A, Lyngsø Svarta D, Nielsen Sølvhøj I, Thygesen L. Potential of Online Recruitment Among 15-25-Year Olds: Feasibility Randomized Controlled Trial. JMIR Form Res. 2022;6:e35874. |

| 5. | Fenner Y, Garland SM, Moore EE, Jayasinghe Y, Fletcher A, Tabrizi SN, Gunasekaran B, Wark JD. Web-based recruiting for health research using a social networking site: an exploratory study. J Med Internet Res. 2012;14:e20. |

| 6. | Darko EM, Kleib M, Olson J. Social Media Use for Research Participant Recruitment: Integrative Literature Review. J Med Internet Res. 2022;24:e38015. |

| 7. | Moreno MA, Waite A, Pumper M, Colburn T, Holm M, Mendoza J. Recruiting Adolescent Research Participants: In-Person Compared to Social Media Approaches. Cyberpsychol Behav Soc Netw. 2017;20:64-67. |

| 8. | Vogels EA, Gelles-Watnick R, Massarat N. Teens, social media and technology 2022. Pew Research Center; 2022. Available from: https://www.pewresearch.org/internet/2022/08/10/teens-social-media-and-technology-2022/. |

| 9. | Qualtrics. SMS Distributions: Qualtrics; 2022. Available from: https://www.qualtrics.com/support/survey-platform/distributions-module/mobile-distributions/sms-surveys/. |

| 10. | Tango Card Inc. Rewards Genius Seattle, Washington: Washington State Department of Financial Institutions; 2022. Available from: https://www.rewardsgenius.com. |

| 11. | World Health Organization. Adolescent Health. Geneva, Switzerland: World Health Organization; 2023. Available from: https://www.who.int/health-topics/adolescent-health#tab=tab_1. |

| 12. | Hardin AP, Hackell JM; Committee on practice and ambulatory medicine. Age Limit of Pediatrics. Pediatrics. 2017;140. |

| 13. | Zickuhr K, Smith A. Digital differences. Pew Research Center; Washington, DC; 2012. Available from: https://www.pewresearch.org/internet/2012/04/13/digital-differences/. |

| 14. | Nguyen TT, Jayadeva V, Cizza G, Brown RJ, Nandagopal R, Rodriguez LM, Rother KI. Challenging recruitment of youth with type 2 diabetes into clinical trials. J Adolesc Health. 2014;54:247-254. |

| 15. | Smart A, Harrison E. The under-representation of minority ethnic groups in UK medical research. Ethn Health. 2017;22:65-82. |

| 16. | Sterzing PR, Gartner RE, McGeough BL. Conducting Anonymous, Incentivized, Online Surveys With Sexual and Gender Minority Adolescents: Lessons Learned From a National Polyvictimization Study. J Interpers Violence. 2018;33:740-761. |

| 17. | McInroy LB. Pitfalls, Potentials, and Ethics of Online Survey Research: LGBTQ and Other Marginalized and Hard-to-Access Youths. Soc Work Res. 2016;40:83-94. |

| 18. | Das M, Ester P, Kaczmirek L. Social and behavioral research and the internet: Advances in applied methods and research strategies: Routledge; 2018. |

| 19. | Moseson H, Kumar S, Juusola JL. Comparison of study samples recruited with virtual vs traditional recruitment methods. Contemp Clin Trials Commun. 2020;19:100590. |

| 20. | Cantrell J, Hair EC, Smith A, Bennett M, Rath JM, Thomas RK, Fahimi M, Dennis JM, Vallone D. Recruiting and retaining youth and young adults: challenges and opportunities in survey research for tobacco control. Tob Control. 2018;27:147-154. |

| 21. | Jasik CB, Berna M, Martin M, Ozer EM. Teen Preferences for Clinic-Based Behavior Screens: Who, Where, When, and How? J Adolesc Health. 2016;59:722-724. |

| 22. | Jones MK, Calzavara L, Allman D, Worthington CA, Tyndall M, Iveniuk J. A Comparison of Web and Telephone Responses From a National HIV and AIDS Survey. JMIR Public Health Surveill. 2016;2:e37. |

| 23. | Vogels EA. Some digital divides persist between rural, urban and suburban America. Pew Research Center. 2021. Available from: https://www.pewresearch.org/short-reads/2021/08/19/some-digital-divides-persist-between-rural-urban-and-suburban-america/. |

| 24. | Vogels EA. Digital divide persists even as Americans with lower incomes make gains in tech adoption. Pew Research Center. 2021; 22. Available from: https://www.pewresearch.org/short-reads/2021/06/22/digital-divide-persists-even-as-americans-with-lower-incomes-make-gains-in-tech-adoption/. |

| 25. | Griffin M, Martino RJ, LoSchiavo C, Comer-Carruthers C, Krause KD, Stults CB, Halkitis PN. Ensuring survey research data integrity in the era of internet bots. Qual Quant. 2022;56:2841-2852. |

| 26. | Lane TS, Armin J, Gordon JS. Online Recruitment Methods for Web-Based and Mobile Health Studies: A Review of the Literature. J Med Internet Res. 2015;17:e183. |

| 27. | Bull SS, Vallejos D, Levine D, Ortiz C. Improving recruitment and retention for an online randomized controlled trial: experience from the Youthnet study. AIDS Care. 2008;20:887-893. |

| 28. | Bull SS, Levine D, Schmiege S, Santelli J. Recruitment and retention of youth for research using social media: Experiences from the Just/Us study. Vulnerable Children and Youth Studies. 2013;8:171-181. |

| 29. | Tebb KP, Dehlendorf C, Rodriguez F, Fix M, Tancredi DJ, Reed R, Brindis CD, Schwarz EB. Promoting teen-to-teen contraceptive communication with the SpeakOut intervention, a cluster randomized trial. Contraception. 2022;105:80-85. |

| 30. | Tebb KP, Rodriguez F, Pollack LM, Adams S, Rico R, Renteria R, Trieu SL, Hwang L, Brindis CD, Ozer E, Puffer M. Improving contraceptive use among Latina adolescents: A cluster-randomized controlled trial evaluating an mHealth application, Health-E You/Salud iTu. Contraception. 2021;104:246-253. |

| 31. | Qualtrics. Qualtrics Provo, Utah, USA 2022. Available from: https://www.qualtrics.com. |

| 32. | Tango Card Inc. Data Protection Addendum Seattle, Washington: Washington State Department of Financial Institutions; 2022. Available from: https://www.tangocard.com/data-protection-addendum/. |

| 33. | Qualtrics. Fraud Detection: Qualtrics; 2022. Available from: https://www.qualtrics.com/support/survey-platform/survey-module/survey-checker/fraud-detection/. |

| 34. | Hoopes AJ, Benson SK, Howard HB, Morrison DM, Ko LK, Shafii T. Adolescent Perspectives on Patient-Provider Sexual Health Communication: A Qualitative Study. J Prim Care Community Health. 2017;8:332-337. |

| 35. | Heffner JL, Wyszynski CM, Comstock B, Mercer LD, Bricker J. Overcoming recruitment challenges of web-based interventions for tobacco use: the case of web-based acceptance and commitment therapy for smoking cessation. Addict Behav. 2013;38:2473-2476. |

| 36. | Larson N, Neumark-Sztainer D, Harwood EM, Eisenberg ME, Wall MM, Hannan PJ. Do young adults participate in surveys that 'go green'? Response rates to a web and mailed survey of weight-related health behaviors. Int J Child Health Hum Dev. 2011;4:225-231. |

| 37. | Irwin V, Zhang J, Wang X, Hein S, Wang K, Roberts A, York C, Barmer A, Mann FB, Dillig R, Parker S. Report on the Condition of Education 2021. NCES 2021-144. National Center for Education Statistics. 2021. Available from: https://nces.ed.gov/pubs2021/2021144.pdf. |

| 38. | United Nations. The promotion, protection and enjoyment of human rights on the Internet. In: Office of the High Commissioner for Human Rights, editor. Geneva, Switzerland: United Nations; 2021. Available from: https://digitallibrary.un.org/record/3937534?ln=en. |

| 39. | Soofi AA, Khan MI, Amin F-e. A review on data security in cloud computing. International Journal of Computer Applications. 2017;96:95-96. |

| 40. | Saravanakumar K, Deepa K. On privacy and security in social media–a comprehensive study. Procedia Comput Sci. 2016;78:114-119. |

| 41. | Keshta I, Odeh A. Security and privacy of electronic health records: Concerns and challenges. Egyptian Informatics Journal. 2021;22:177-183. |

| 42. | Filkins BL, Kim JY, Roberts B, Armstrong W, Miller MA, Hultner ML, Castillo AP, Ducom JC, Topol EJ, Steinhubl SR. Privacy and security in the era of digital health: what should translational researchers know and do about it? Am J Transl Res. 2016;8:1560-1580. |

| 43. | Cheng L, Liu F, Yao D. Enterprise data breach: causes, challenges, prevention, and future directions. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery. 2017;7:e1211. |

| 44. | Anandarajan M, Malik S. Protecting the Internet of medical things: A situational crime-prevention approach. Cogent Medicine. 2018;5:1513349. |