Published online Nov 26, 2018. doi: 10.12998/wjcc.v6.i14.735

Peer-review started: August 24, 2018

First decision: October 9, 2018

Revised: October 15, 2018

Accepted: October 31, 2018

Article in press: November 1, 2018

Published online: November 26, 2018

Processing time: 94 Days and 16.6 Hours

Endosonography (EUS) has an estimated long learning curve including the acquisition of both technical and cognitive skills. Trainees in EUS must learn to master intraprocedural steps such as echoendoscope handling and ultrasonographic imaging with the interpretation of normal anatomy and any pathology. In addition, there is a need to understand the periprocedural parts of the EUS-examination such as the indications and contraindications for EUS and potential adverse events that could occur post-EUS. However, the learning process and progress vary widely among endosonographers in training. Consequently, the performance of a certain number of supervised procedures during training does not automatically guarantee adequate competence in EUS. Instead, the assessment of EUS-competence should preferably be performed by the use of an assessment tool developed specifically for the evaluation of endosonographers in training. Such a tool, covering all the different steps of the EUS-procedure, would better depict the individual learning curve and better reflect the true competence of each trainee. This mini-review will address the issue of clinical education in EUS with respect to the evaluation of endosonographers in training. The aim of the article is to provide an informative overview of the topic. The relevant literature of the field will be reviewed and discussed. The current knowledge on how to assess the skills and competence of endosonographers in training is presented in detail.

Core tip: Endosonography (EUS) has an estimated long learning curve including the acquisition of both technical and cognitive skills. However, the learning process and progress vary widely among trainees in EUS. Therefore, the performance of a certain number of EUS-procedures during training does not automatically guarantee adequate competence. Instead, assessment tools developed for the evaluation of endosonographers in training should better reflect the true competence of each individual trainee. This mini-review addresses the issue of clinical education in EUS and describes in detail the current knowledge on how to assess the skills and competence of endosonographers in training.

- Citation: Hedenström P, Sadik R. The assessment of endosonographers in training. World J Clin Cases 2018; 6(14): 735-744

- URL: https://www.wjgnet.com/2307-8960/full/v6/i14/735.htm

- DOI: https://dx.doi.org/10.12998/wjcc.v6.i14.735

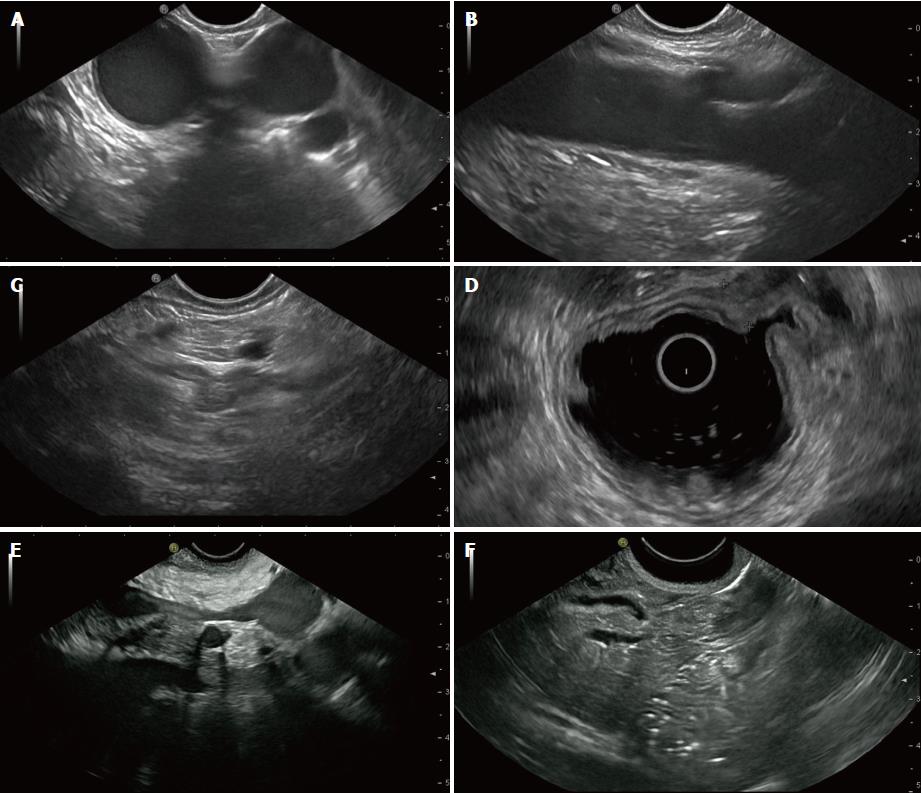

Endosonography (EUS) has become an important diagnostic and therapeutic tool for medical gastroenterologists, surgeons, and oncologists worldwide. The learning of EUS is a rewarding but demanding task with an estimated long learning curve[1]. The long learning curve is partly explained by the fact that EUS has several different clinical indications[2,3]. Moreover, many of the lesions examined by EUS include a wide range of possible diagnostic entities[4,5]. Consequently, the competent endosonographer needs to master not only multiple maneuvers with the echoendoscope and its accessories, but also endosonographic interpretation of the normal anatomy and any pathologic lesions (Figure 1). In the end, both cognitive and technical skills are essential to perform a safe EUS-examination of high quality.

In advanced endoscopy, the learning process and progress vary widely among trainees[1,6]. Therefore, the performance of a certain number of procedures during training does not automatically guarantee adequate competence in EUS. It is likely that an assessment tool that covers the different steps of the EUS-procedure and that is developed for the evaluation of endosonographers in training would be more appropriate than the count of procedures for assessing competence. Such tools would likely better depict the learning curve of EUS and reflect the true competence of each individual trainee[6].

This mini-review addresses the issue of clinical education in EUS with respect to the evaluation of endosonographers training in basic, diagnostic EUS with or without fine needle aspiration (EUS-FNA). The aim of this mini-review is to provide an informative, up-to-date overview of the topic. The relevant literature of the field is reviewed and discussed. The current knowledge on how to assess the skills and competence of endosonographers in training is presented in detail.

It is recommended that the EUS-trainee should have completed a minimum of two years of training or practice in routine endoscopy before initiating training in EUS[7]. However, the experience in advanced, therapeutic endoscopy might not be a prerequisite for successful, basic EUS-training[8]. Likewise, previous competence in transabdominal ultrasound is probably not vital for learning EUS[9].

There is limited data on the number of centers providing supervised training in EUS[10]. Although it is frequently reported[10], learning EUS by self-teaching without supervision is discouraged[7,9,11]. A large number of learning procedures are expected. Therefore, training in EUS should only be performed in centers that can provide a reasonably high volume of procedures along with experienced and motivated instructors[11]. This type of focused training is highlighted by a study published in 2005, which found that trainees in an advanced endoscopy fellowship in an academic center performed a larger number of supervised procedures compared with endosonographers trained in other types of practice[10]. Furthermore, it is important that the endosonographic findings of the trainee are co-evaluated by the supervisor in the early phase of training[11].

Animal models can probably work as a facilitating tool for beginners or for trainees with little experience in EUS[12-14]. A live porcine model was evaluated by Bhutani et al[14] in a survey among 38 trainees with little experience in EUS who participated in either of two EUS courses organized by the American Society for Gastrointestinal Endoscopy (ASGE) in 1997 and 2000. Over 90% of the respondents found the model helpful in enhancing their EUS skills, but the effect on the learning curve of EUS was not measured. Similar models have also been evaluated and have been found to be useful for the purpose of learning EUS-FNA[15,16].

Even though ex vivo models could be helpful tools in early EUS-training, they may not be available in all centers and cannot replace supervised training in true patients[7,11]. Regarding the equipment, the linear array echoendoscope can probably be introduced to trainees at the onset of training. According to one study published in 2015, a period of initial training with a radial echoendoscope did not improve the performance of subsequent scanning with the linear array echoendoscope[17]. Further recommendations on the design of training programs in EUS can be found in the guidelines issued by the ASGE[11,18].

The decision as to when to introduce the trainee to EUS-FNA has been a matter of debate. Some authors advocate long previous experience with basic EUS and a thorough knowledge of normal and abnormal anatomy before the introduction of EUS-FNA[19]. Others consider early trainee-performed EUS-FNA both appropriate and safe for patients[20,21]. In a study by Coté et al[20], supervisor-directed, trainee-performed EUS-FNA executed from the onset of training resulted in no recorded complications in a total of 305 patients. In addition, the performance characteristics of EUS-FNA, including the diagnostic accuracy, were found to be comparable between trainees and supervisors. In another study by Mertz et al[21], the first 50 EUS-FNAs of pancreatic masses performed by an inexperienced endosonographer were found to be safe, with no adverse events detected. However, in this study, the diagnostic sensitivity for cancer was significantly higher after the first 30 EUS-FNA procedures. Therefore, at least from a patient safety point of view, supervisors could consider introducing EUS-FNA as early as the onset of training.

An important issue merits some attention: “How to ascertain that the obtained competence in EUS will be maintained after the completion of training in EUS?”. One way of ascertaining the maintenance of competence is to follow the recommendations issued by the ASGE[7], which encourage the trained endosonographer to log the annual number of EUS-procedures and, like all other endosonographers, to regularly review the quality and outcome of the procedures. Educational activities, such as scientific meetings and hands-on workshops, should also be attended.

The simple answer to the difficult question of how many procedures are required for competence in EUS is “we do not know”. Therefore, the competence of an EUS-trainee can hardly be assessed only by the numeric count of performed procedures.

According to guidelines published in 2001, a suggested minimum of 125 supervised procedures should be performed before acceptable competence in EUS can be expected[7]. For comprehensive competence in all aspects of EUS, the same guidelines recommend a minimum of 150 supervised trainee-performed EUS-procedures. Of these 150 procedures, at least 75 should focus on the pancreaticobiliary area and at least 50 should include EUS-guided sampling (EUS-FNA)[7]. These recommended numbers should be considered an absolute minimum and not a guarantee that the necessary skills will be acquired.
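As a small illustration of how the comprehensive-competence minimums quoted above (150 procedures in total, 75 pancreaticobiliary, 50 with EUS-FNA) could be checked against a trainee's logbook, consider the following sketch. The `ProcedureLog` structure and the function name are hypothetical and are not taken from the guidelines. Meeting the thresholds only shows that the absolute minimum has been reached; it does not show that competence has been achieved.

```python
from dataclasses import dataclass

# Minimums for comprehensive competence as quoted in the text from the 2001 guidelines[7].
MIN_TOTAL = 150
MIN_PANCREATICOBILIARY = 75
MIN_FNA = 50


@dataclass
class ProcedureLog:
    """Hypothetical tally of one trainee's supervised EUS procedures."""
    total: int
    pancreaticobiliary: int
    fna: int


def unmet_minimums(log: ProcedureLog) -> dict[str, int]:
    """Return the number of procedures still missing for each unmet threshold."""
    shortfalls = {
        "total": MIN_TOTAL - log.total,
        "pancreaticobiliary": MIN_PANCREATICOBILIARY - log.pancreaticobiliary,
        "fna": MIN_FNA - log.fna,
    }
    # Keep only the thresholds that have not yet been reached.
    return {name: gap for name, gap in shortfalls.items() if gap > 0}
```

An empty result means only that the numeric minimums are met; the assessment of actual skill still has to come from supervised evaluation.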

A few clinical studies[1,8,22] have investigated the number of training procedures required to become a competent endosonographer. These publications are summarized in Table 1. As is discussed below, there is a significant variation in the methodologies of the studies, in the variables measured, and in the criteria for competence, when comparing the studies included in Table 1. This variation makes the results of these studies somewhat difficult to compare to each other.

| Ref. | Procedural step or scanning position | | | | | | |
|---|---|---|---|---|---|---|---|
| | Intubation1 | Esophageal view | Gastric view | Duodenal view | | | |
| Meenan et al[8] 2003 | NA2 | 25–CNR3 | 35–CNR | 78–CNR | | | |
| | Intubation | Mediastinum | Celiac axis | Gastric wall | Pancreas (body) | Pancreas (head) | CBD |
| Hoffman et al[22] 2005 | 1–23 | 1–33 | 8–36 | 1–47 | 1–34 | 15–74 | 13–134 |
| | Intubation | AP window | Celiac axis | Pancreas (body) | Pancreas (head) | CBD | |
| Wani et al[1] 2013 | 245–CNR | 315–CNR | 235–CNR | 226–CNR | 166–CNR | CNR–CNR4 | |

In the early era of EUS, examinations were mainly performed with the purpose of tumor staging without sampling. Today a majority of EUS-procedures include diagnostic sampling of lesions (EUS-FNA/B) or therapeutic interventions such as drainage of pancreatic pseudocysts. Therefore, to a large extent, radial echoendoscopes have been replaced by linear ones[11]. Consequently, the number of required cases for competence in EUS that were recommended many years ago should be interpreted with some caution since it might not be completely valid today.

Before independent performance of EUS-FNA, the ESGE and the ASGE both recommend a minimum of 50 supervised, trainee-performed EUS-FNAs of which 25-30 should be pancreatic EUS-FNA[7,9]. No specific number of EUS-FNA-procedures has been identified before full competence can be expected[9], but the learning curve most likely continues long after the initial period of supervised training[23]. In a retrospective study by Mertz et al[21], the sensitivity for the detection of pancreatic cancer by trainee-performed EUS-FNAs was compared in quintiles of procedures. A significant increase in sensitivity after the third quintile was detected. Consequently, the authors concluded that the ASGE guideline of 25 supervised EUS-FNA procedures in solid pancreatic lesions seemed reasonable.

In a prospective, Japanese study including only subepithelial lesions[24] the accuracy and safety of EUS-FNA performed by two trainees were compared with those of two experts. Before the study period, both trainees had performed 50 EUSs without sampling and attended 20 EUS-FNAs performed by experts. In the study, a total of 51 cases were performed alternately by the trainee and the expert, and there was no difference in the acquisition of an adequate specimen. No major complications were recorded.

In a study by Wani et al[1], five EUS-trainees performing a total of 1412 examinations were assessed with regard to both basic EUS and EUS-FNA. The number of examinations required for acceptable competence varied significantly among the trainees. One trainee required 255 procedures, while another trainee was still in need of continued training after 402 procedures (Table 1). The authors concluded that, compared with the recommended minimum of 150 supervised cases, all five trainees needed a much larger number of training procedures to be competent[7]. Consequently, it is likely that > 200 procedures are required for the majority of trainees. This estimation is supported by others who argue that the number of recommended EUS-procedures may be a significant underestimation of the true number of procedures that are needed[25].

Logically, the competence of the trainee is reflected by the quality of the EUS being performed. Consequently, in EUS, what is good quality and what quality is good enough? One definition of adequate competence is suggested in the guidelines by the ASGE: “The minimum level of skill, knowledge, and/or expertise derived through training and experience, required to safely and proficiently perform a task or procedure”[7]. Nevertheless, there is no consensus on the exact definition of competence in EUS or with what tools, and on what scale, it should be measured[1]. It also remains to be agreed which specific indicators should be used as quality measures in EUS.

In 2006, the American College of Gastroenterology (ACG)/ASGE task force aimed to establish quality indicators in EUS to aid in the recognition of high-quality examinations[26]. An updated and extended version including 23 quality indicators was published in 2015[27]. The 23 indicators were divided into three categories – Preprocedure (n = 9), Intraprocedure (n = 5), Postprocedure (n = 9). The three most prioritized indicators should be the frequency of adequate staging of GI malignancies, the diagnostic sensitivity of EUS-FNA in pancreatic masses, and the frequency of adverse events post-EUS-FNA[27]. However, these documents are basically intended for trained endosonographers working in clinical practice and not specifically for the situation of evaluating trainees in EUS. Naturally, the fully-trained endosonographer should ultimately aim to meet these quality indicators. Interestingly, the authors stressed that a subject for future research is the amount of training required for obtaining “diagnostic FNA yields comparable to those of published literature”.

The European Society of Gastrointestinal Endoscopy (ESGE) has published technical guidelines on EUS[28]; however, these guidelines do not include any quality indicators. A recent initiative launched by the ESGE aims to address this specific issue. A working group has been formed[29,30], but to date no report has been published.

Thus, one way of assessing endosonographers in training would be to apply some of the quality indicators for EUS and to record the outcome on an arbitrary scale over time. However, the assessment of endoscopy trainees should not necessarily only focus on the quality indicators, but also focus on other parameters. The sensible approach would be to use the pre-defined and validated assessment criteria as well as the direct observation of an expert[11].

There are several validated assessment tools for measuring the learning curve in endoscopy such as the Mayo Colonoscopy Skills Assessment Tool (MCSAT)[31], the Assessment of Competency in Endoscopy (ACE)[32], the British Direct Observation of Procedural Skills (DOPS)[33], and the Global Assessment of Gastrointestinal Endoscopic Skills (GAGES)[34]. Technical skills such as scope navigation, tip control, and loop reduction together with cognitive skills such as pathology identification and management of patient discomfort are assessed and scored to a varying degree. However, the above tools were primarily designed for colonoscopy and not for EUS, which is why the ASGE has encouraged the development of objective criteria for the assessment of endosonographers in training[35].

The ASGE standards of practice committee has authored guidelines for credentialing and for granting privileges for EUS[7], with these guidelines stating that the competence in EUS should be evaluated independently from other endoscopic procedures. As further specified in this publication, the competent endosonographer should acquire skills including, among others, safe intubation of the esophagus, appropriate sonographic visualization of various organs, recognition of abnormal findings, and appropriate documentation of the EUS-procedure[7].

Assessment tools for trainee performance in porcine EUS models have been investigated[15]. However, it might be challenging to interpret trainee competence based on performance in an animal model, which is a quite different experience compared with everyday clinical EUS practice. To date, there is no clear recommendation on what parameters to include in the assessment of endosonographers in training performing EUS in humans. Although there is a lack of a uniform standard, some assessment tools, developed for EUS-trainees and for use in real patients, have been proposed.

Assessment tools that rate specific steps or maneuvers of the EUS-procedure have been investigated by some authors. As an example, in 2012 Konge et al[36] presented the EUS Assessment Tool (EUSAT), designed exclusively to measure EUS-FNA competence for the specific situation of mediastinal staging of non-small cell lung cancer. Other examples include assessment tools for the accurate staging of esophageal cancer[37,38], for the diagnostic EUS-FNA of pancreatic masses[21] or submucosal lesions[24], and for the adequate on-site trainee-assessment of the EUS-FNA-specimens[39]. These studies are limited to a certain scenario and they do not cover the complete examination including all organs and structures within reach for upper GI EUS.

Only a handful of groups have presented tools aimed at assessing the complete EUS-procedure including visualization of all the standard views. In an older study by Meenan et al[8], five EUS-trainees were evaluated in performing radial EUS, i.e., no EUS-FNA. In the beginning of training the trainees observed supervisor-performed examinations (range: 55-170 cases). Afterwards, the trainees performed the examinations themselves (range: 25-124 cases). In this study, a study-specific data collection tool (Table 2) was designed to assess the ability of the trainees to use the ultrasound controls and to visualize a number of predetermined anatomic stations via the esophagus, the stomach, and the duodenum. Esophageal intubation with the echoendoscope was not assessed. Using the assessment tool and a point score system (maximum score: 40 points, Table 2), each trainee was evaluated at the end of training during five examinations. For each position (esophagus/stomach/duodenum) an arbitrary minimum score was set to determine adequate competence. The authors concluded that the assessment tool was applicable in clinical practice and could identify trainees with a need for continued training. Difficult maneuvers could be identified, such as the dynamic visualization of the aortic outflow, of the splenic vein, and of the common bile duct. A drawback of the study, which limits its implications, is that linear EUS was not performed and that only five procedures per trainee were scored.

| Site | Structures | Points |
|---|---|---|
| Esophagus | Liver, inferior vena cava/hepatic veins, crus, abdominal aorta, spine, right pleura, thoracic aorta, left atrium, aortic outflow, left pulmonary vein, azygous vein, thoracic duct, right/left bronchus, carina, aortic arch, carotids, trachea, thyroid | 18 points (minimum score for competence: 12 points) |
| Stomach | Stomach wall layer pattern, celiac axis, left adrenal, portal confluence, splenic vein, splenic artery, follow course of splenic vein | 8 points (following course of splenic vein: 2 points; minimum score for competence: 5 points) |
| Duodenum | Gall bladder, portal vein, pancreatic duct, abdominal aorta, inferior vena cava, uncinate process, superior mesenteric vein, superior mesenteric artery, follow course of common bile duct | 11 points (following course of common bile duct: 3 points; minimum score for competence: 7.5 points) |
| Use of ultrasound controls | Frequency, range, brightness/contrast | 3 points |
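The point system of Table 2 can be expressed as a short check of per-site scores against the stated minimums. The sketch below is only an illustration: the function name and the dictionary layout are assumptions, and the original study does not describe any software implementation. Only the maximum and minimum point values are taken from the table.

```python
# Maximum points per item and minimum points for competence, as listed in Table 2.
# The ultrasound-controls item has a maximum but no stated minimum.
MAX_POINTS = {"esophagus": 18, "stomach": 8, "duodenum": 11, "controls": 3}
MIN_FOR_COMPETENCE = {"esophagus": 12, "stomach": 5, "duodenum": 7.5}


def sites_below_minimum(scores: dict[str, float]) -> list[str]:
    """Return the scanning sites whose score is below the minimum for competence.

    `scores` is a hypothetical mapping with the same keys as MAX_POINTS.
    """
    for site, maximum in MAX_POINTS.items():
        if not 0 <= scores[site] <= maximum:
            raise ValueError(f"score for {site} must be between 0 and {maximum}")
    return [
        site
        for site, minimum in MIN_FOR_COMPETENCE.items()
        if scores[site] < minimum
    ]
```

The four maxima add up to the 40-point total mentioned in the text, and a trainee scoring, for example, 4 points in the stomach would be flagged for that site only.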

In another older study, only published in abstract form, twelve EUS-trainees were evaluated and rated by an expert[22]. According to the text of the abstract, the trainee-performed EUSs were assessed and rated with respect to the separate steps of the procedure (Table 1). Each step was scored and categorized as follows: 0 = Failed; 1 = Unsatisfactory; 2 = Satisfactory; and 3 = Excellent. Competency was defined as consistent achievement of a score of 2. Unfortunately, any further details and comments on this assessment tool cannot be provided due to the lack of a full article publication.
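The abstract does not state how "consistent" achievement was operationalized. Purely as an illustration, the sketch below assumes that competence in one procedural step is reached once a fixed number of consecutive examinations are scored 2 or higher. The window length of five and the function name are assumptions, not details from the study.

```python
from typing import Optional, Sequence


def procedure_reaching_consistency(
    scores: Sequence[int], window: int = 5, threshold: int = 2
) -> Optional[int]:
    """Return the 1-based procedure number that completes the first run of
    `window` consecutive scores >= `threshold`, or None if no such run exists.

    Scores use the 0-3 scale described in the text
    (0 = Failed, 1 = Unsatisfactory, 2 = Satisfactory, 3 = Excellent).
    """
    run = 0
    for number, score in enumerate(scores, start=1):
        if score not in (0, 1, 2, 3):
            raise ValueError("scores must be integers from 0 to 3")
        # Extend the current run on a satisfactory score, otherwise reset it.
        run = run + 1 if score >= threshold else 0
        if run == window:
            return number
    return None
```

A stricter or looser definition of consistency would shift the resulting procedure number, which is one reason why results from different studies are hard to compare.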

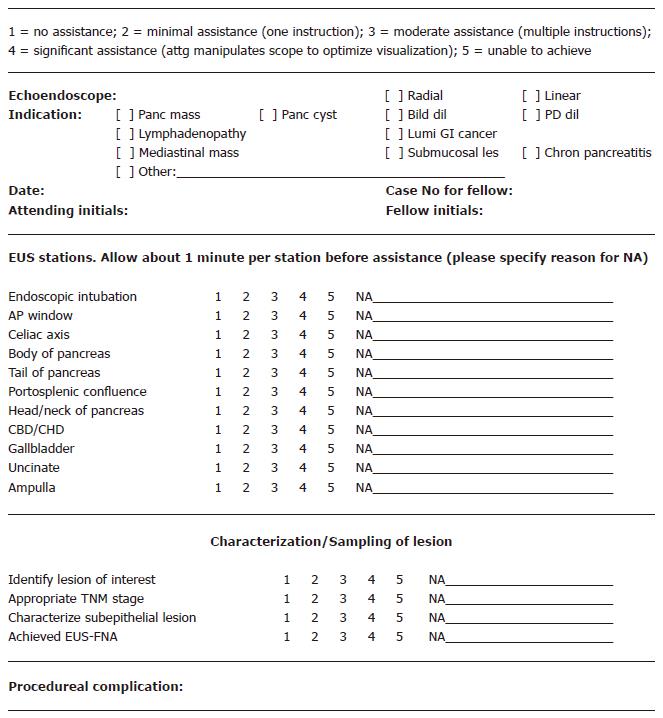

In a more recent study by Wani et al[1], five EUS-trainees performing a total of 1412 EUS-examinations were assessed by an EUS-expert. Beginning at the 25th examination, every 10th examination was assessed. Similar to the work by Meenan, the authors developed a standardized data collection tool including different steps of the EUS-procedure (Figure 2). Each step was scored on a 5-grade scale. Then the score of each step and the overall score were recorded. Finally, the assessment of competence was based on the trend and inclination of the score, and the learning curve was calculated by cumulative sum (CUSUM) analysis[1]. The authors found the suggested assessment method to be both feasible and valuable for identifying trainees who needed continued training. The method also identified the anatomic stations, such as the pancreas and the ampulla, that were more difficult to master for trainees. A weak point of this study was that only every 10th examination was assessed.
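For readers unfamiliar with CUSUM, the following sketch shows a commonly used textbook formulation for learning curves. Each assessed examination is reduced to success or failure, a success lowers the running sum by s, and a failure raises it by 1 - s. The acceptable and unacceptable failure rates (p0, p1), the error levels (alpha, beta), and the rule for turning a graded score into success or failure are assumptions chosen for illustration. They are not taken from the text above, and the exact settings used in the cited study should be checked in the original publication.

```python
import math
from typing import Iterable


def cusum_parameters(p0: float, p1: float, alpha: float, beta: float):
    """Return (s, h0, h1) for a standard learning-curve CUSUM.

    p0: acceptable failure rate, p1: unacceptable failure rate (p1 > p0),
    alpha/beta: type I and type II error levels. All four are assumptions.
    """
    a = math.log((1 - beta) / alpha)
    b = math.log((1 - alpha) / beta)
    p = math.log(p1 / p0)
    q = math.log((1 - p0) / (1 - p1))
    s = q / (p + q)        # decrement applied for every success
    h0 = -b / (p + q)      # lower decision limit (acceptable performance)
    h1 = a / (p + q)       # upper decision limit (unacceptable performance)
    return s, h0, h1


def cusum_curve(successes: Iterable[bool], s: float) -> list[float]:
    """Return the running CUSUM after each assessed examination."""
    total = 0.0
    curve = []
    for success in successes:
        total += -s if success else (1 - s)
        curve.append(total)
    return curve
```

With p0 = 0.1, p1 = 0.2 and alpha = beta = 0.1, s is about 0.145 and the decision limits are about ±2.71. A curve falling below the lower limit suggests that the failure rate is no worse than the acceptable rate, whereas a curve rising above the upper limit suggests unacceptable performance and a need for continued training.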

The identical study methodology and assessment tool (Figure 2) were used in an enlarged study by Wani et al[40] published in 2015. This study included 17 trainees who performed a total of 4257 examinations in 15 tertiary centers. The results were similar to those presented in the first publication, with the learning curves showing a high degree of inter-trainee variation.

In 2017, the same author published another study[41] evaluating trainees in EUS and ERCP using The EUS and ERCP Skills Assessment Tool (TEESAT). Every third trainee-performed EUS was scored with an assessment tool (Figure 2) nearly identical to the one used in the two previous studies[1,40]. Twenty-two trainees participated in the study and 3786 examinations were graded. A centralized database was used and was found feasible for the collection of data. The authors concluded that TEESAT was a more time-consuming tool than any global rating scale but that it had the clear advantage of monitoring the learning curve and providing precise feedback to trainees. TEESAT, therefore, could facilitate the improvement of certain steps or maneuvers. Finally, this study confirmed that there was significant variability among the trainees concerning the time and number of procedures needed to achieve competence in EUS.

The safe and competent performance of advanced endoscopy procedures such as EUS is cognitively and technically demanding. Therefore, there is a definite need for the evaluation and assessment of EUS trainees both during and at the completion of training.

Some assessment tools have been evaluated in clinical studies but only some of those tools cover all the steps and aspects of a complete, diagnostic EUS-procedure. Moreover, the few extensive assessment tools that have been studied thus far have not yet been fully validated by external and independent investigators. The small number of publications within the field is somewhat troublesome, meaning that today, there is no standardized measurement protocol and assessment tool regarding trainee-performance in EUS. Consequently, no specific recommendation can be put forward on the most appropriate assessment tool to use for the evaluation of endosonographers learning basic, diagnostic EUS[6]. The assessment of endosonographers learning therapeutic EUS was not an aim of this article.

Nevertheless, EUS is a rapidly expanding field with a growing number of diagnostic and therapeutic indications[42-44]. Therefore, supervisors should be prepared to include new and additional parameters for assessment with respect to the type of EUS-procedure being trained. It may also be that the trainee-performed EUS-FNA should be assessed more profoundly than previously attempted and include parameters such as diagnostic accuracy. Similar tools already exist for the purpose of assessing competence in polypectomy during colonoscopy[45].

Clinical research addressing the issue of assessing endosonographers in training should be encouraged. Studies presenting new assessment tools and studies validating suggested tools would be valuable. Such initiatives could be a great support in the education and training of future endosonographers. Although attempts are not lacking[27], there is an urgent need to establish an international consensus on the benchmarks for high-quality performance and competence in EUS.

Manuscript source: Invited manuscript

Specialty type: Medicine, research and experimental

Country of origin: Sweden

Peer-review report classification

Grade A (Excellent): A

Grade B (Very good): 0

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P- Reviewer: Fusaroli P, Yeh HZ S- Editor: Ji FF L- Editor: A E- Editor: Song H

| 1. | Wani S, Coté GA, Keswani R, Mullady D, Azar R, Murad F, Edmundowicz S, Komanduri S, McHenry L, Al-Haddad MA. Learning curves for EUS by using cumulative sum analysis: implications for American Society for Gastrointestinal Endoscopy recommendations for training. Gastrointest Endosc. 2013;77:558-565. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 108] [Cited by in RCA: 114] [Article Influence: 9.5] [Reference Citation Analysis (0)] |

| 2. | Dumonceau JM, Deprez PH, Jenssen C, Iglesias-Garcia J, Larghi A, Vanbiervliet G, Aithal GP, Arcidiacono PG, Bastos P, Carrara S. Indications, results, and clinical impact of endoscopic ultrasound (EUS)-guided sampling in gastroenterology: European Society of Gastrointestinal Endoscopy (ESGE) Clinical Guideline - Updated January 2017. Endoscopy. 2017;49:695-714. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 264] [Cited by in RCA: 238] [Article Influence: 29.8] [Reference Citation Analysis (0)] |

| 3. | ASGE Standards of Practice Committee, Early DS, Ben-Menachem T, Decker GA, Evans JA, Fanelli RD, Fisher DA, Fukami N, Hwang JH, Jain R, Jue TL, Khan KM, Malpas PM, Maple JT, Sharaf RS, Dominitz JA, Cash BD. Appropriate use of GI endoscopy. Gastrointest Endosc. 2012;75:1127-1131. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 156] [Cited by in RCA: 179] [Article Influence: 13.8] [Reference Citation Analysis (0)] |

| 4. | Hedenström P, Marschall HU, Nilsson B, Demir A, Lindkvist B, Nilsson O, Sadik R. High clinical impact and diagnostic accuracy of EUS-guided biopsy sampling of subepithelial lesions: a prospective, comparative study. Surg Endosc. 2018;32:1304-1313. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 23] [Cited by in RCA: 37] [Article Influence: 4.6] [Reference Citation Analysis (1)] |

| 5. | Jabbar KS, Verbeke C, Hyltander AG, Sjövall H, Hansson GC, Sadik R. Proteomic mucin profiling for the identification of cystic precursors of pancreatic cancer. J Natl Cancer Inst. 2014;106:djt439. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 39] [Cited by in RCA: 42] [Article Influence: 3.8] [Reference Citation Analysis (0)] |

| 6. | Ekkelenkamp VE, Koch AD, de Man RA, Kuipers EJ. Training and competence assessment in GI endoscopy: a systematic review. Gut. 2016;65:607-615. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 124] [Cited by in RCA: 116] [Article Influence: 12.9] [Reference Citation Analysis (0)] |

| 7. | Eisen GM, Dominitz JA, Faigel DO, Goldstein JA, Petersen BT, Raddawi HM, Ryan ME, Vargo JJ 2nd, Young HS, Wheeler-Harbaugh J, Hawes RH, Brugge WR, Carrougher JG, Chak A, Faigel DO, Kochman ML, Savides TJ, Wallace MB, Wiersema MJ, Erickson RA; American Society for Gastrointestinal Endoscopy. Guidelines for credentialing and granting privileges for endoscopic ultrasound. Gastrointest Endosc. 2001;54:811-814. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 154] [Cited by in RCA: 153] [Article Influence: 6.4] [Reference Citation Analysis (0)] |

| 8. | Meenan J, Anderson S, Tsang S, Reffitt D, Prasad P, Doig L. Training in radial EUS: what is the best approach and is there a role for the nurse endoscopist? Endoscopy. 2003;35:1020-1023. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 22] [Cited by in RCA: 22] [Article Influence: 1.0] [Reference Citation Analysis (0)] |

| 9. | Polkowski M, Larghi A, Weynand B, Boustière C, Giovannini M, Pujol B, Dumonceau JM; European Society of Gastrointestinal Endoscopy (ESGE). Learning, techniques, and complications of endoscopic ultrasound (EUS)-guided sampling in gastroenterology: European Society of Gastrointestinal Endoscopy (ESGE) Technical Guideline. Endoscopy. 2012;44:190-206. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 196] [Cited by in RCA: 216] [Article Influence: 16.6] [Reference Citation Analysis (0)] |

| 10. | Wasan SM, Kapadia AS, Adler DG. EUS training and practice patterns among gastroenterologists completing training since 1993. Gastrointest Endosc. 2005;62:914-920. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 14] [Cited by in RCA: 15] [Article Influence: 0.8] [Reference Citation Analysis (0)] |

| 11. | Van Dam J, Brady PG, Freeman M, Gress F, Gross GW, Hassall E, Hawes R, Jacobsen NA, Liddle RA, Ligresti RJ. Guidelines for training in electronic ultrasound: guidelines for clinical application. From the ASGE. American Society for Gastrointestinal Endoscopy. Gastrointest Endosc. 1999;49:829-833. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 48] [Cited by in RCA: 45] [Article Influence: 1.7] [Reference Citation Analysis (0)] |

| 12. | Bhutani MS, Aveyard M, Stills HF Jr. Improved model for teaching interventional EUS. Gastrointest Endosc. 2000;52:400-403. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 34] [Cited by in RCA: 32] [Article Influence: 1.3] [Reference Citation Analysis (0)] |

| 13. | Bhutani MS, Hoffman BJ, Hawes RH. A swine model for teaching endoscopic ultrasound (EUS) imaging and intervention under EUS guidance. Endoscopy. 1998;30:605-609. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 28] [Cited by in RCA: 33] [Article Influence: 1.2] [Reference Citation Analysis (0)] |

| 14. | Bhutani MS, Wong RF, Hoffman BJ. Training facilities in gastrointestinal endoscopy: an animal model as an aid to learning endoscopic ultrasound. Endoscopy. 2006;38:932-934. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 12] [Cited by in RCA: 14] [Article Influence: 0.7] [Reference Citation Analysis (0)] |

| 15. | Barthet M, Gasmi M, Boustiere C, Giovannini M, Grimaud JC, Berdah S; Club Francophone d’Echoendoscopie Digestive. EUS training in a live pig model: does it improve echo endoscope hands-on and trainee competence? Endoscopy. 2007;39:535-539. |

| 16. | Gonzalez JM, Cohen J, Gromski MA, Saito K, Loundou A, Matthes K. Learning curve for endoscopic ultrasound-guided fine-needle aspiration (EUS-FNA) of pancreatic lesions in a novel ex-vivo simulation model. Endosc Int Open. 2016;4:E1286-E1291. |

| 17. | Xu W, Liu Y, Pan P, Guo Y, Wu RP, Yao YZ. Prior radial-scanning endoscopic ultrasonography training did not contribute to subsequent linear-array endoscopic ultrasonography study performance in the stomach of a porcine model. Gut Liver. 2015;9:353-357. |

| 18. | Eisen GM, Dominitz JA, Faigel DO, Goldstein JL, Kalloo AN, Petersen BT, Raddawi HM, Ryan ME, Vargo JJ 3rd, Young HS, Fanelli RD, Hyman NH, Wheeler-Harbaugh J; American Society for Gastrointestinal Endoscopy. Standards of Practice Committee. Guidelines for advanced endoscopic training. Gastrointest Endosc. 2001;53:846-848. |

| 19. | Harewood GC, Wiersema LM, Halling AC, Keeney GL, Salamao DR, Wiersema MJ. Influence of EUS training and pathology interpretation on accuracy of EUS-guided fine needle aspiration of pancreatic masses. Gastrointest Endosc. 2002;55:669-673. |

| 20. | Coté GA, Hovis CE, Kohlmeier C, Ammar T, Al-Lehibi A, Azar RR, Edmundowicz SA, Mullady DK, Krigman H, Ylagan L. Training in EUS-Guided Fine Needle Aspiration: Safety and Diagnostic Yield of Attending Supervised, Trainee-Directed FNA from the Onset of Training. Diagn Ther Endosc. 2011;2011:378540. |

| 21. | Mertz H, Gautam S. The learning curve for EUS-guided FNA of pancreatic cancer. Gastrointest Endosc. 2004;59:33-37. |

| 22. | Hoffman B, Wallace MB, Eloubeidi MA, Sahai AV, Chak A, van Velse A, Matsuda K, Hadzijahic N, Patel RS, Etemad R. How many supervised procedures does it take to become competent in EUS? Results of a multicenter three year study. Gastrointest Endosc. 2000;51:AB139. |

| 23. | Eloubeidi MA, Tamhane A. EUS-guided FNA of solid pancreatic masses: a learning curve with 300 consecutive procedures. Gastrointest Endosc. 2005;61:700-708. |

| 24. | Niimi K, Goto O, Kawakubo K, Nakai Y, Minatsuki C, Asada-Hirayama I, Mochizuki S, Ono S, Kodashima S, Yamamichi N. Endoscopic ultrasound-guided fine-needle aspiration skill acquisition of gastrointestinal submucosal tumor by trainee endoscopists: A pilot study. Endosc Ultrasound. 2016;5:157-164. |

| 25. | Faigel DO. Economic realities of EUS in an academic practice. Gastrointest Endosc. 2007;65:287-289. |

| 26. | Jacobson BC, Chak A, Hoffman B, Baron TH, Cohen J, Deal SE, Mergener K, Petersen BT, Petrini JL, Safdi MA. Quality indicators for endoscopic ultrasonography. Am J Gastroenterol. 2006;101:898-901. |

| 27. | Wani S, Wallace MB, Cohen J, Pike IM, Adler DG, Kochman ML, Lieb JG 2nd, Park WG, Rizk MK, Sawhney MS, Shaheen NJ, Tokar JL. Quality indicators for EUS. Gastrointest Endosc. 2015;81:67-80. |

| 28. | Polkowski M, Jenssen C, Kaye P, Carrara S, Deprez P, Gines A, Fernández-Esparrach G, Eisendrath P, Aithal GP, Arcidiacono P. Technical aspects of endoscopic ultrasound (EUS)-guided sampling in gastroenterology: European Society of Gastrointestinal Endoscopy (ESGE) Technical Guideline - March 2017. Endoscopy. 2017;49:989-1006. |

| 29. | Rutter MD, Senore C, Bisschops R, Domagk D, Valori R, Kaminski MF, Spada C, Bretthauer M, Bennett C, Bellisario C. The European Society of Gastrointestinal Endoscopy Quality Improvement Initiative: developing performance measures. Endoscopy. 2016;48:81-89. |

| 30. | Bretthauer M, Aabakken L, Dekker E, Kaminski MF, Rösch T, Hultcrantz R, Suchanek S, Jover R, Kuipers EJ, Bisschops R. Requirements and standards facilitating quality improvement for reporting systems in gastrointestinal endoscopy: European Society of Gastrointestinal Endoscopy (ESGE) Position Statement. Endoscopy. 2016;48:291-294. |

| 31. | Sedlack RE. The Mayo Colonoscopy Skills Assessment Tool: validation of a unique instrument to assess colonoscopy skills in trainees. Gastrointest Endosc. 2010;72:1125-1133, 1133.e1-1133.e3. |

| 32. | Sedlack RE, Coyle WJ; ACE Research Group. Assessment of competency in endoscopy: establishing and validating generalizable competency benchmarks for colonoscopy. Gastrointest Endosc. 2016;83:516-523.e1. |

| 33. | Barton JR, Corbett S, van der Vleuten CP; English Bowel Cancer Screening Programme; UK Joint Advisory Group for Gastrointestinal Endoscopy. The validity and reliability of a Direct Observation of Procedural Skills assessment tool: assessing colonoscopic skills of senior endoscopists. Gastrointest Endosc. 2012;75:591-597. |

| 34. | Vassiliou MC, Kaneva PA, Poulose BK, Dunkin BJ, Marks JM, Sadik R, Sroka G, Anvari M, Thaler K, Adrales GL. Global Assessment of Gastrointestinal Endoscopic Skills (GAGES): a valid measurement tool for technical skills in flexible endoscopy. Surg Endosc. 2010;24:1834-1841. |

| 35. | ASGE Guidelines for clinical application. Methods of privileging for new technology in gastrointestinal endoscopy. American Society for Gastrointestinal Endoscopy. Gastrointest Endosc. 1999;50:899-900. |

| 36. | Konge L, Vilmann P, Clementsen P, Annema JT, Ringsted C. Reliable and valid assessment of competence in endoscopic ultrasonography and fine-needle aspiration for mediastinal staging of non-small cell lung cancer. Endoscopy. 2012;44:928-933. |

| 37. | Fockens P, Van den Brande JH, van Dullemen HM, van Lanschot JJ, Tytgat GN. Endosonographic T-staging of esophageal carcinoma: a learning curve. Gastrointest Endosc. 1996;44:58-62. |

| 38. | Lee WC, Lee TH, Jang JY, Lee JS, Cho JY, Lee JS, Jeon SR, Kim HG, Kim JO, Cho YK. Staging accuracy of endoscopic ultrasound performed by nonexpert endosonographers in patients with resectable esophageal squamous cell carcinoma: is it possible? Dis Esophagus. 2015;28:574-578. |

| 39. | Harada R, Kato H, Fushimi S, Iwamuro M, Inoue H, Muro S, Sakakihara I, Noma Y, Yamamoto N, Horiguchi S. An expanded training program for endosonographers improved self-diagnosed accuracy of endoscopic ultrasound-guided fine-needle aspiration cytology of the pancreas. Scand J Gastroenterol. 2014;49:1119-1123. |

| 40. | Wani S, Hall M, Keswani RN, Aslanian HR, Casey B, Burbridge R, Chak A, Chen AM, Cote G, Edmundowicz SA. Variation in Aptitude of Trainees in Endoscopic Ultrasonography, Based on Cumulative Sum Analysis. Clin Gastroenterol Hepatol. 2015;13:1318-1325.e2. |

| 41. | Wani S, Keswani R, Hall M, Han S, Ali MA, Brauer B, Carlin L, Chak A, Collins D, Cote GA. A Prospective Multicenter Study Evaluating Learning Curves and Competence in Endoscopic Ultrasound and Endoscopic Retrograde Cholangiopancreatography Among Advanced Endoscopy Trainees: The Rapid Assessment of Trainee Endoscopy Skills Study. Clin Gastroenterol Hepatol. 2017;15:1758-1767.e11. |

| 42. | Iwashita T, Yasuda I, Mukai T, Iwata K, Ando N, Doi S, Nakashima M, Uemura S, Mabuchi M, Shimizu M. EUS-guided rendezvous for difficult biliary cannulation using a standardized algorithm: a multicenter prospective pilot study (with videos). Gastrointest Endosc. 2016;83:394-400. |

| 43. | Khashab MA, Kumbhari V, Kalloo AN, Saxena P. EUS-guided biliary drainage by using a hepatogastrostomy approach. Gastrointest Endosc. 2013;78:675. |

| 44. | Sadik R, Kalaitzakis E, Thune A, Hansen J, Jönson C. EUS-guided drainage is more successful in pancreatic pseudocysts compared with abscesses. World J Gastroenterol. 2011;17:499-505. |

| 45. | Gupta S, Bassett P, Man R, Suzuki N, Vance ME, Thomas-Gibson S. Validation of a novel method for assessing competency in polypectomy. Gastrointest Endosc. 2012;75:568-575. |