INTRODUCTION

Although artificial intelligence (AI) was first conceptually presented approximately 70 years ago as a means for machines to mechanize human actions and cognitive thinking, its current applications are far broader[1,2]. The technology is predicated on the ability of AI to exhibit certain facets of human intelligence, which derives from techniques known as machine learning (ML) and deep learning (DL)[3]. ML involves automatically building mathematical algorithms from data sets and forming decisions with or without human supervision[3,4]. Once an algorithm has learned a predictive model, it can use new inputs to form outputs[3,5,6]. These models can be combined to form artificial neural networks (ANN), which mimic the neural network of a brain. Each algorithm assumes the role of a neuron and, when grouped together, the algorithms form a network in which the neurons interact[5,6]. ANN have pathways from inputs to outputs with hidden layers in between that help make the inner nodes more efficient and improve the overall network[3]. DL is a domain in which AI processes a vast amount of data and self-creates algorithms that interconnect the nodes of ANN with interplay in the hidden neural layers[3,6]. Researchers have been using DL to form computer-aided diagnosis systems (CADS) to aid in polyp detection and characterization[7]. Two major CAD systems have been developed so far: CADe (computer-aided detection) and CADx (computer-aided characterization). CADe uses white-light endoscopy for image analysis with the ultimate goal of increasing the number of adenomas found in each colonoscopy, thereby increasing the adenoma detection rate (ADR) and reducing the rate of missed polyps[8]. CADx is designed to characterize polyps found during colonoscopy, thereby improving the accuracy of optical biopsies and reducing unnecessary polypectomy for non-neoplastic lesions[8]. CADx predominantly uses magnifying narrow band imaging (mNBI) but could also incorporate a variety of other techniques, including white-light endoscopy, magnifying chromoendoscopy, confocal laser endomicroscopy, spectroscopy, and autofluorescence endoscopy[8]. In addition, AI technology is being applied to evaluate the quality of bowel preparation for colonoscopy. In this review, we outline the role of AI in polyp detection and in the characterization of dysplastic and/or neoplastic lesions. We also present the current data on the utility of AI in the evaluation of bowel preparation and discuss future directions of AI in colonoscopy.

POLYP DETECTION AND CADS

Polyps are abnormal tissue growths that arise in the colon and carry malignant potential[9]. Polyps are detected during colonoscopy but can be missed owing to a variety of factors, e.g., age of patient, diminutive polyp size, failure to reach the cecum, quality of bowel preparation, and experience of the endoscopist[10,11]. The ADR is the proportion of screening colonoscopies in which one or more adenomatous polyps are detected, and it is a universal quality metric with the strongest association with the development of interval cancers[11-13]. Owing to growing concerns about increasing rates of colon cancer in adults, CADS have been developed and utilized to aid in polyp detection and ultimately increase ADR[9,14-18].

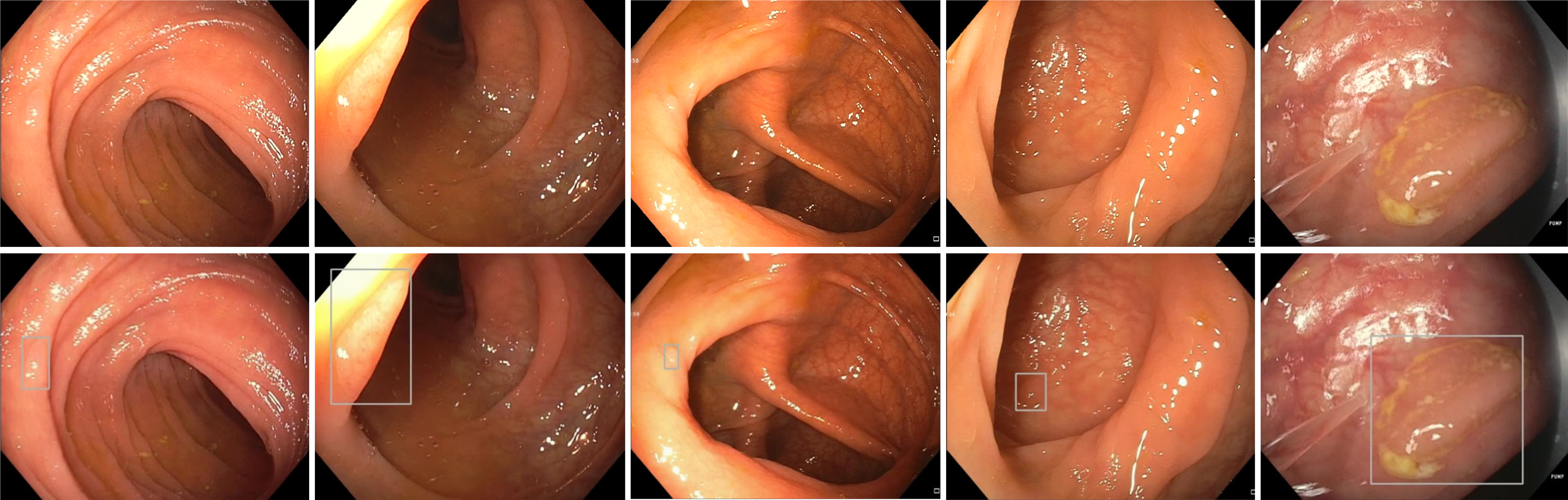

Multiple research groups have created automated computer vision methods to help analyze and detect polyps during colonoscopy[15-19] (Figure 1). One of the first groups to use CADe for polyp detection relied mainly on still images extracted from videos[20]. Their CADe used 24 videos containing a total of 31 polyps, each detectable in at least 1 frame[20]. The study demonstrated a sensitivity and specificity for polyp detection of 70.4% and 72.4%, respectively[20]. Another group created a DL model, using 546 short videos and 73 full-length videos to build the software and train it with polyp-positive and polyp-negative videos[21]. The sensitivity and specificity were 90% and 63.3%, respectively, showing that the model could potentially be used in a clinical setting to help minimize polyp miss rates during colonoscopy[21]. A recent prospective multicenter trial compared a CADe system to trained endoscopists (with a baseline ADR ≥ 35%) and found that the endoscopists and CADe had diagnostic accuracies of 98.2% and 96.5%, respectively[22]. This led the authors to conclude that CADe was non-inferior to expert endoscopists[22].

Figure 1 Polyp detection without artificial intelligence (top) and with artificial intelligence (bottom).

As CADe systems proved to enhance polyp detection, researchers then focused on the role of AI in improving ADR. A prospective, randomized, controlled study of 1058 patients undergoing colonoscopy with or without an automatic polyp detection system (APDS) found a relative ADR increase of 43.3% (29.1% vs 20.3%) with the APDS compared to standard colonoscopy[23]. This increase was most pronounced among diminutive adenomas, which suggests that smaller adenomas are more likely to be missed than larger adenomas[23]. To expand upon the previous study, a double-blinded, randomized, controlled trial was performed with a sham group to control for operational bias[24]. There was a 21.4% relative increase in ADR in the CADe group compared to controls (34% vs 28%)[24]. The increase was larger among endoscopists with a lower baseline ADR than among those with a higher baseline ADR[24]. A recent meta-analysis of 6 randomized controlled trials comparing AI-assisted colonoscopy to non-AI-assisted colonoscopy, totaling 5058 patients, showed a significantly higher ADR in the AI group than in the control group (33.7% vs 22.9%, respectively)[25]. The study also showed an overall increase in the detection of proximal colon adenomas in the AI-assisted group compared to the control group (23.4% vs 14.5%, respectively)[25]. This is important because colorectal cancer (CRC) screening with colonoscopy alone is currently not effective at reducing proximal colon cancers and their mortality[25,26]. Thus, because improving ADR is vital to preventing CRC, particularly in the proximal colon, the use of AI alongside endoscopists can be an ideal starting point. CADe systems could serve as second observers, which have been shown to increase ADR[27].
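
The relative ADR increases quoted for the two trials follow from simple arithmetic on the reported detection rates. The minimal Python sketch below reproduces them; the function name `relative_increase` is illustrative and is not taken from the cited trials.

```python
def relative_increase(intervention_rate: float, control_rate: float) -> float:
    """Relative increase of the intervention rate over the control rate, in percent."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (intervention_rate - control_rate) / control_rate * 100.0


# ADR values (in percent) as reported in the text for the two trials.
apds_trial = relative_increase(29.1, 20.3)  # APDS vs standard colonoscopy [23]
sham_trial = relative_increase(34.0, 28.0)  # CADe vs sham control [24]

print(f"APDS trial: {apds_trial:.1f}% relative increase")
print(f"Sham-controlled trial: {sham_trial:.1f}% relative increase")
```

Rounded to one decimal place, the two values match the 43.3% and 21.4% relative increases reported above; the absolute differences (8.8 and 6 percentage points) are correspondingly smaller.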

POLYP CHARACTERIZATION AND AI

Worldwide, CRC is the third most common cancer diagnosed in men and second in women[28]. Overall incidence of CRC in the United States has decreased due to lower smoking rates, early colonoscopy screenings, and early identification of patient-specific risk factors, but recent studies have reported a global increase in incidence of CRC in the younger population[29,30]. Thus, the latest endoscopic research is aimed towards techniques to better identify polyps and allow for real-time polyp histologic characterization which provides vital information for early intervention through endoscopic or surgical resection[31].

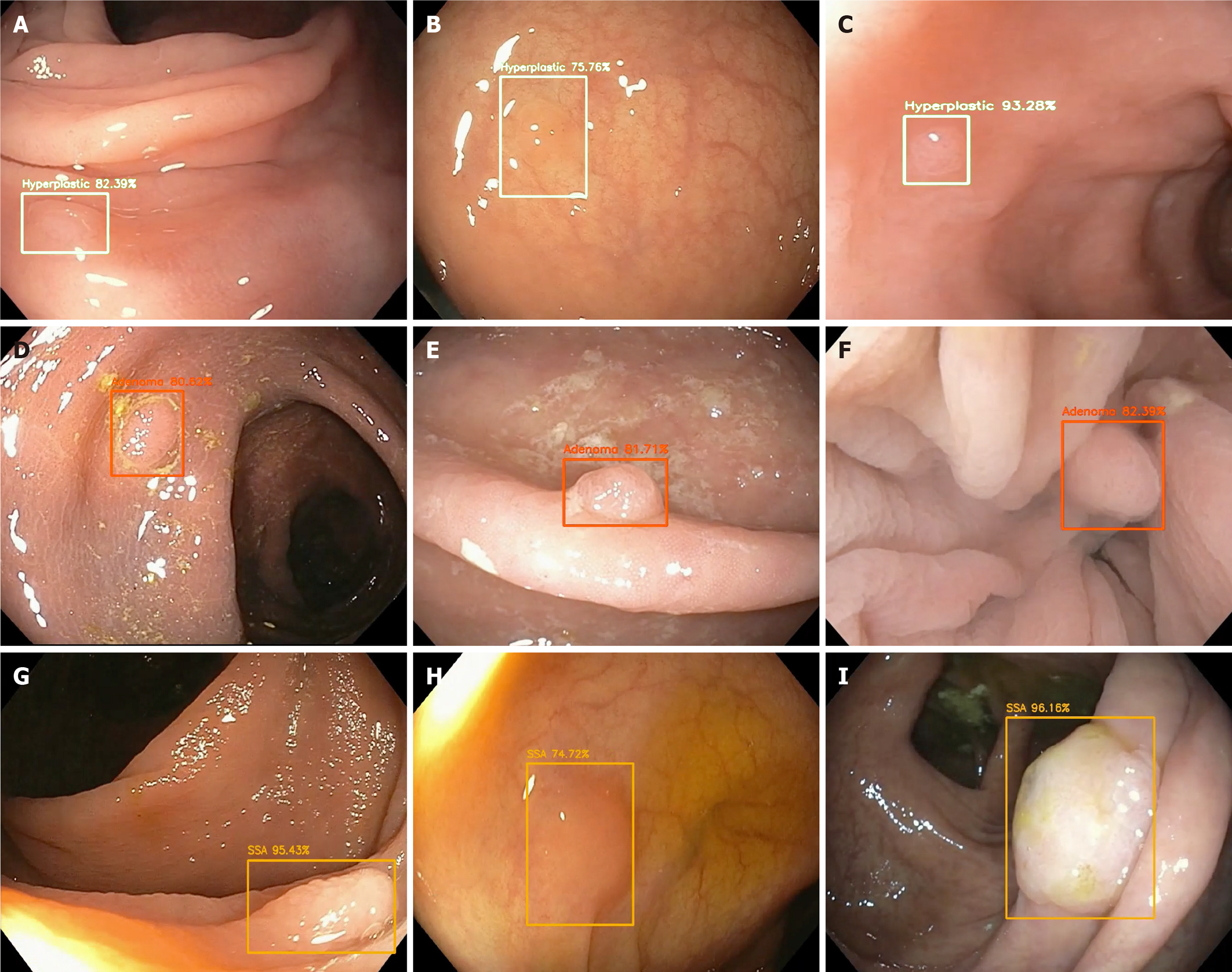

Studies evaluating AI and histologic assessment with optical biopsy have been a targeted focus, in particular for a “resect and discard” strategy for diminutive polyps < 6 mm, which would avoid the costs of pathology for low-risk lesions[32] (Figure 2).

Figure 2 Optical pathology of detected polyps with associated probability utilizing artificial intelligence.

A-C: Hyperplastic polyps; D-F: Adenomas; G-I: Sessile serrated adenomas.

Several studies have found the range of sensitivity and specificity for polyp detection and characterization to be 70%-98% and 63%-98%, respectively[33]. An optical biopsy allows for differentiation of polyp type based on certain features. For example, NBI is an image-enhanced type of endoscopy that is used to identify microstructures and capillaries of the mucosal epithelium and allows for prediction of the histologic features of colorectal polyps. Use of this advanced imaging technique often requires expertise to differentiate hyperplastic polyps from neoplastic polyps with high accuracy. AI systems offer a standardization of polyp characterization that overcomes differences in expertise or training across endoscopists[34]. Analysis of a CAD system with a deep neural network for analyzing NBI of diminutive polyps found that the AI system could identify neoplastic or hyperplastic polyps with 96.3% sensitivity and 78.1% specificity[34]. The system was compared to both novice (in-training) and expert endoscopists, and it was notable that over half of the novice endoscopists classified polyps with a negative predictive value ranging from 73%-84%, compared to 91.5% for the system. The system also had a shorter time-to-classification than both expert and novice endoscopists (P < 0.05)[34]. Other groups have had similar results, showing promise for AI identification. One study compared the evaluation of images of 225 polyps by a CAD system with diagnosis by endoscopists[35]. The polyps were classified using the Kudo and NBI International Colorectal Endoscopic classifications; of the 225 polyps, 142 were dysplastic and 83 were non-dysplastic after endoscopy. The CAD system correctly classified 205 polyps (91.1% of the total), correctly identifying 131/142 (92%) as dysplastic and 74/83 (89%) as non-dysplastic[35]. There were no statistically significant differences in histologic prediction between the CAD system and endoscopic assessment; thus, the authors concluded that a computer vision system based on characterization of the polyp surface could accurately predict polyp histology[35].
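
The percentages reported for the 225-polyp study can be recovered from its counts. The sketch below is a minimal illustration that assumes "dysplastic" is the positive class and that the 142/83 split serves as the reference standard; the class name `ConfusionCounts` is hypothetical. The predictive values it prints are derived here from the counts and are not figures reported in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    true_pos: int   # dysplastic polyps labelled dysplastic by the CAD system
    false_neg: int  # dysplastic polyps labelled non-dysplastic
    true_neg: int   # non-dysplastic polyps labelled non-dysplastic
    false_pos: int  # non-dysplastic polyps labelled dysplastic

    def sensitivity(self) -> float:
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        return self.true_neg / (self.true_neg + self.false_pos)

    def accuracy(self) -> float:
        total = self.true_pos + self.false_neg + self.true_neg + self.false_pos
        return (self.true_pos + self.true_neg) / total

    def ppv(self) -> float:
        return self.true_pos / (self.true_pos + self.false_pos)

    def npv(self) -> float:
        return self.true_neg / (self.true_neg + self.false_neg)


# Counts taken from the text: 131 of 142 dysplastic and 74 of 83
# non-dysplastic polyps were classified correctly.
counts = ConfusionCounts(true_pos=131, false_neg=142 - 131,
                         true_neg=74, false_pos=83 - 74)

print(f"sensitivity: {counts.sensitivity():.1%}")
print(f"specificity: {counts.specificity():.1%}")
print(f"accuracy:    {counts.accuracy():.1%}")
# Derived values (assumption-dependent, not reported in the text):
print(f"PPV: {counts.ppv():.1%}, NPV: {counts.npv():.1%}")
```

Because predictive values depend on the prevalence of dysplasia in the sample (142 of 225 here), they would differ in a population with a different mix of polyps even if sensitivity and specificity stayed the same.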

AI IN INFLAMMATORY BOWEL DISEASE

Inflammatory bowel disease (IBD), which includes Crohn’s disease (CD) and ulcerative colitis (UC), is a chronic inflammatory disorder of the gastrointestinal tract that remains a global concern as its incidence in developing countries continues to grow[36]. Studies with AI and large datasets of endoscopic images have shown that AI can improve the diagnosis of IBD, the evaluation of disease severity, and the follow-up of treatment[37]. Initial diagnosis of IBD through endoscopic evaluation remains a challenge owing to the wide-ranging clinical manifestations of IBD and the overlap across subtypes. Key endoscopic features of IBD include ulcerations and erosions, and AI has shown a role in better predicting the need for further evaluation[38]. Aoki et al[38] demonstrated that a deep convolutional neural network (DCNN) can be trained to detect erosions and ulcerations seen on wireless capsule endoscopy. Their system evaluated 10440 images in 233 s and demonstrated an area under the curve for detection of erosions and ulcerations of 0.958 (95% confidence interval: 0.947-0.968), with sensitivity, specificity, and accuracy of 88%, 90%, and 90%, respectively[38]. Tong et al[39] studied 6399 patients with UC, CD, or intestinal tuberculosis (ITB) who underwent colonoscopy. The colonoscopic images were translated into free-text descriptions, and random forest (RF) and convolutional neural network (CNN) models were used to distinguish the three diseases. The diagnostic sensitivity/specificity of RF for UC, CD, and ITB were 0.89/0.84, 0.83/0.82, and 0.72/0.77, respectively, and those of CNN were 0.99/0.97, 0.87/0.83, and 0.52/0.81, respectively[39]. These studies showed that AI can be employed to discern and diagnose IBD, although real-time diagnostic utility remains an area to develop[39].

Disease severity and activity in IBD can be determined using endoscopic inflammation indices and histologic scores. However, these methodologies have certain flaws, such as intra-observer and inter-observer variability[40]. Studies using AI have been conducted to help control some of these factors. Bossuyt et al[41] developed a red density (RD) system, specific for endoscopic and histologic disease activity in UC patients, to help mitigate observer bias among endoscopists. The study compared 29 UC patients against 6 control patients using the RD score obtained during colonoscopy[41]. The RD score was linked to the Robarts Histopathology Index (RHI) in a multiple regression analysis and was found to be correlated with the RHI (r = 0.65, P < 0.00002) in the patients with UC[41]. The RD score from the control patients was also correlated with the RHI, Mayo endoscopic subscores (r = 0.76, P < 0.0001), and UC Endoscopic Index of Severity scores (r = 0.74, P < 0.0001), showing that it correlated well with the validated tests[41]. Takenaka et al[40] applied their algorithm, the deep neural network for evaluation of UC (DNUC), in 875 UC patients. The DNUC was developed using 40785 images from colonoscopies and 6885 biopsy results from 2012 UC patients[40]. The DNUC identified patients in endoscopic remission with 90.1% accuracy and a kappa coefficient of 0.798, and identified patients in histologic remission with 92.2% accuracy and a kappa coefficient of 0.895 between the biopsy result and the DNUC[40]. The researchers concluded that it could be used to identify patients in remission and potentially avoid mucosal biopsy and analysis[40]. Stidham et al[42] created a 159-layer CNN using 16514 images from 3082 UC patients to help distinguish patients in remission (Mayo subscore 0 or 1) from those with moderate to severe disease (Mayo subscore 2 or 3). The CNN had a positive predictive value of 0.87, sensitivity of 83%, and specificity of 96%[42]. When assigning Mayo scores, the CNN was compared against human reviewers, with a kappa coefficient of 0.84 for the CNN vs 0.86 for the human reviewers[42]. This shows that AI can effectively help categorize patients into their respective severity stages[42].
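
The kappa coefficients cited above measure agreement beyond what chance alone would produce. As a minimal sketch, the code below computes unweighted Cohen's kappa for two raters; this is an assumption made for illustration, since the cited studies may have used a weighted variant, and the Mayo subscores in the example are hypothetical, not study data.

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same cases."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must score the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: fraction of cases with identical scores.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal frequencies per label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical Mayo endoscopic subscores (0-3) for eight cases.
model_scores = [0, 0, 1, 1, 2, 2, 3, 3]
reviewer_scores = [0, 0, 1, 2, 2, 2, 3, 3]

print(f"kappa = {cohen_kappa(model_scores, reviewer_scores):.3f}")
```

In this toy example the raters agree on 7 of 8 cases (87.5%), but because 25% agreement would be expected by chance, kappa is lower than the raw agreement.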

Patients with IBD are at increased risk for CRC, and it is important for these patients to undergo frequent surveillance. Guidelines differ depending on the medical society, but overall the recommended intervals range from 1-5 years[43]. Whether AI has a role in CRC detection during IBD surveillance has yet to be directly studied. A major reason for the lack of studies of AI in IBD is that IBD was an exclusion criterion in many of the early colonoscopic AI studies. A study by Uttam et al[44] was one of the first to examine IBD and cancer risk, utilizing three-dimensional nanoscale nuclear architecture mapping (nanoNAM). In 103 patients with IBD who were undergoing colonoscopy, their system measured submicroscopic alterations in the intrinsic nuclear structure within epithelial cells, and the findings were compared to histologic biopsies after 3 years. They found that nanoNAM could identify colonic neoplasia with an AUC of 0.87, sensitivity of 0.81, and specificity of 0.82[44]. Additional studies on AI in IBD surveillance could help personalize surveillance strategies or guidelines for patients.

AI SYSTEMS IN PRACTICE

The most recent developments in clinical practice have come with the approval of several different devices: EndoBRAIN (Olympus Corporation, Tokyo, Japan), GI Genius (Cosmo Pharmaceuticals N.V., Dublin, Ireland), and WavSTAT4 (SpectraScience, Inc., San Diego, CA)[33,45,46]. EndoBRAIN is an AI-based system that analyzes pathologic features present on endoscopic imaging, and it was developed and approved as a class II medical device[33]. In a multi-center study to determine the diagnostic accuracy of EndoBRAIN, the system was trained using 69142 endocytoscopic images taken from patients who had undergone endoscopy, and it was compared against 30 endoscopists (20 trainees, 10 experts), with the primary outcome being the assessment of neoplastic vs non-neoplastic lesions. EndoBRAIN distinguished neoplastic from non-neoplastic lesions with 96.9% sensitivity and 94.3% specificity, which was higher than trainees and comparable to experts[33].

GI Genius has been approved by the FDA as an AI device to detect colonic lesions. GI Genius was compared to experienced endoscopists for colorectal polyp detection[45]. The system was trained on a data set of white-light endoscopy videos from a randomized controlled trial, and the primary endpoint was reaction time on a lesion. The AI system had a faster reaction time than the endoscopists in 82% of cases[45].

Lastly, laser-induced fluorescence spectroscopy using the WavSTAT4 optical biopsy system was evaluated for its efficacy in accurately assessing the histology of colorectal polyps, with the end goal of reducing the time, costs, and risks of resecting diminutive colorectal polyps[46]. The overall accuracy of predicting polyp histology was 84.7%, with a sensitivity of 81.8%, specificity of 85.2%, and negative predictive value of 96.1%. This suggests that the system is accurate enough to allow distal colorectal polyps to be left in place and nearly reaches the American Society for Gastrointestinal Endoscopy threshold for resecting and discarding without pathologic assessment[46].

REAL-TIME EVALUATION FOR INVASIVE CANCER

AI prediction of invasive cancers through the utilization of real-time identification of colorectal polyps has the potential to improve CRC screening by limiting misses and improving outcomes, especially in geographic regions with less access to highly trained endoscopists.

Advanced imaging techniques (without AI) have been widely used during endoscopy to provide a real-time prediction of lesion pathology and depth of invasion. For example, one study assessed white-light endoscopy, mNBI, magnifying chromoendoscopy, and probe-based confocal laser endomicroscopy in real time to evaluate and classify the depth of invasion of colorectal lesions[31]. Of the 22 colorectal lesions, 7 were adenomas, 10 were intramucosal cancers, and 5 had deep submucosal invasion or deeper involvement. Sensitivity and specificity were 60% and 94%, respectively, for both white-light endoscopy and mNBI, and 80% and 94%, respectively, for both magnifying chromoendoscopy and probe-based confocal laser endomicroscopy[31].

With data showing that advanced imaging techniques can reliably provide real-time information to establish a diagnosis and guide intervention, integration of AI systems with these techniques has been a growing research focus. A recent review assessed 5 retrospective studies, which reported wide-ranging sensitivities of 67.5%-88.2% and specificities of 77.9%-98.9% for finding invasive cancers[47]. The prediction of cancer invasion was made using magnified NBI, confocal laser endomicroscopy, white-light endoscopy, or endocytoscopy. As the numbers reflect, more studies are needed to better evaluate how AI can provide more consistent reliability in the evaluation for invasive cancers[47].

COLON PREPARATION AND AI

Bowel preparation significantly impacts the diagnostic accuracy of colonoscopy. Inadequate colon preparation impairs visualization of the mucosa, leading to missed lesions, extended operative time, and an increased need for repeat colonoscopies[48,49]. Approximately 10%-25% of all colonoscopies have inadequate bowel preparation[50-52]. In addition, studies have shown that suboptimal bowel preparation can result in an adenoma miss rate ranging from 35%-42%[51]. A recent prospective study found that variable bowel preparation quality did not have a measurable effect on the ability of its AI algorithm to accurately identify colonic polyps. However, the applicability of these findings is limited by the study’s small sample size of 50[50]. Therefore, the ability of AI to accurately identify polyps in suboptimal conditions remains unknown.

Currently, several scales are used to assess bowel preparation quality, the most validated and reliable of which is the Boston Bowel Preparation Scale (BBPS)[52]. Scores ranging from 0-3 are individually given to the right, transverse, and left colon during colonoscope withdrawal. A bowel preparation that fails to achieve a BBPS score of ≥ 2 in each segment would mandate a repeat colonoscopy before the recommended 10-year interval (assuming a normal colon)[48,52]. Despite being deemed the most reliable scale, BBPS cannot accurately account for variability in bowel preparation throughout the entire colon or for gradients in the adequacy of cleansing. Although BBPS takes the 3 colonic segments into consideration, regions of the same segment can be variably cleansed[49,52]. Therefore, a single score cannot accurately represent one-third of the colon. This limitation is further exacerbated by the scale’s susceptibility to subjectivity, as individual experience can shape how physicians interpret data[49].

Most studies indicating the efficacy of AI in detecting colonic polyps utilized still images and videos of ideally prepared colons to train and test their AI software[53]. A DCNN known as ENDOANGEL (Wuhan EndoAngel Medical Technology Company, Wuhan, China) provides real-time and objective BBPS scores during colonoscopy. ENDOANGEL circumvents subjective bias via DL using thousands of images scored by different endoscopists[49]. Additionally, the DCNN calculates a real-time BBPS score every 30 s throughout the colonoscopy and provides a cumulative ratio of the different stages, thus providing an accurate assessment of preparation quality throughout the colon[49,52]. Through DL and frequent scoring, ENDOANGEL proved to be far more accurate than endoscopists at BBPS scoring (93.33% vs 75.91%)[49].
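
The idea of scoring every 30 s and reporting a cumulative ratio of the different stages can be illustrated with a short sketch. The code below is not the ENDOANGEL implementation, whose internals are not described here; it assumes only that one integer score from 0-3 is emitted per 30 s window, and the function name `cumulative_ratios` and the sample scores are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable

BBPS_SCORES = (0, 1, 2, 3)  # possible per-window scores on the 0-3 scale


def cumulative_ratios(window_scores: Iterable[int]) -> Dict[int, float]:
    """Fraction of scored windows that received each score so far."""
    counts = Counter(window_scores)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one scored window is required")
    unexpected = set(counts) - set(BBPS_SCORES)
    if unexpected:
        raise ValueError(f"scores outside 0-3: {sorted(unexpected)}")
    return {score: counts[score] / total for score in BBPS_SCORES}


# Hypothetical scores from eight consecutive 30 s windows (4 min of withdrawal).
scores_so_far = [3, 3, 2, 2, 2, 1, 3, 3]

for score, ratio in cumulative_ratios(scores_so_far).items():
    print(f"score {score}: {ratio:.1%} of windows")
```

Reporting the full distribution rather than a single number per segment is what allows variably cleansed regions within one segment to remain visible in the summary.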

Overall, poor bowel preparation quality significantly reduces ADR[51]. Although previous applications of CADe and CADx have been used to optimize endoscopic image quality and mucosal visualization, ENDOANGEL is the first utilization of AI to provide objective, real-time assessments of bowel preparation quality throughout the entire colon[49,54,55]. Another laboratory group has since independently released promising results regarding the use of its AI to assess bowel preparation, indicating the potential of AI to improve the preparatory phase of colonoscopy[56].

FUTURE DIRECTIONS

A significant problem with the advanced imaging trials is that they are performed by experts and, accordingly, show good inter-observer performance characteristics. These results are not reproduced when the techniques are evaluated by less experienced providers[57]. Applying AI as a tool to mitigate the costs and learning curves of these newer techniques across the broad and variable range of providers seems logical and promising.

Although the current CADS provide promising results, larger data sets for training the systems could improve sensitivity and specificity and minimize false positives and false negatives. Larger training data also increase the burden of annotation; however, this can be overcome by annotation software that incorporates a DL module. The precise effects of AI once it is widely available in clinical practice are yet to be determined, but the evidence from EndoBRAIN, GI Genius, and WavSTAT4 gives hope that significant benefits in training gastroenterologists and diagnosing polyps can be expected.

Additional areas of future study include better detection of various polyps (adenomatous, non-adenomatous, dysplastic), evaluation of lesion size and morphology, and identification of invasive involvement. Further study is also necessary to evaluate the adequacy of large polyp resection (i.e., margins free of adenomatous change). Much of the early data to date have come from AI systems based on algorithms using still images and videos[58]. Larger-scale studies can help us better understand the real-time use of AI and how it compares to endoscopists. Owing to the novelty of AI systems in the clinical setting, studies utilizing AI have also largely been conducted in a non-blinded manner, which may influence how the endoscopists perform the procedure and introduce a component of observation bias.

Finally, the future of AI lies in simplifying the tool so that many endoscopists can use it, as well as in achieving the goal of treatment. One way to overcome the complexity is to incorporate the CADS into the colonoscope and display rather than having it exist as a separate entity that needs to be installed. In addition, an improved model for distinguishing polyps and invasion can further facilitate the treatment process for patients.

CONCLUSION

AI is widely applied in endoscopy and continues to be researched to augment the accuracy of screening and the differentiation of neoplastic vs non-neoplastic lesions. Although this wide applicability and active investigation are encouraging, further work is needed to solidify the integration of AI into everyday practice. Real-time diagnosis using AI remains technically challenging; however, these recent studies exemplify promising advancements for enhanced quality assessment and management of colonic disease.

Manuscript source: Invited manuscript

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): 0

Grade C (Good): C, C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Morya AK, Zhao Y S-Editor: Liu M L-Editor: A P-Editor: Wang LYT