Published online Dec 8, 2023. doi: 10.35712/aig.v4.i3.64

Peer-review started: July 27, 2023

First decision: August 31, 2023

Revised: September 13, 2023

Accepted: October 23, 2023

Article in press: October 23, 2023

Published online: December 8, 2023

Processing time: 132 Days and 19.5 Hours

Colorectal cancer is a major public health problem, with 1.9 million new cases and 953000 deaths worldwide in 2020. Total mesorectal excision (TME) is the standard of care for the treatment of rectal cancer and is crucial to prevent local recurrence, but it is a technically challenging surgery. The use of artificial intelligence (AI) could help improve the performance and safety of TME surgery.

To review the literature on the use of AI and machine learning in rectal surgery and potential future developments.

Online scientific databases were searched for articles on the use of AI in rectal cancer surgery between 2020 and 2023.

The literature search yielded 876 results, and only 13 studies were selected for review. The use of AI in rectal cancer surgery and specifically in TME is a rapidly evolving field. There are a number of different AI algorithms that have been developed for use in TME, including algorithms for instrument detection, anatomical structure identification, and image-guided navigation systems.

AI has the potential to revolutionize TME surgery by providing real-time surgical guidance, preventing complications, and improving training. However, further research is needed to fully understand the benefits and risks of AI in TME surgery.

Core Tip: This review provides an overview of the current use of artificial intelligence methods in surgery and the latest findings on their use during total mesorectal excision dissection in rectal cancer procedures. It also discusses the main limitations of artificial intelligence in surgery and the fact that it is not yet used in clinical settings.

- Citation: Mosca V, Fuschillo G, Sciaudone G, Sahnan K, Selvaggi F, Pellino G. Use of artificial intelligence in total mesorectal excision in rectal cancer surgery: State of the art and perspectives. Artif Intell Gastroenterol 2023; 4(3): 64-71

- URL: https://www.wjgnet.com/2644-3236/full/v4/i3/64.htm

- DOI: https://dx.doi.org/10.35712/aig.v4.i3.64

Colorectal cancer is a significant public health concern, with 1.9 million new cases and 953000 deaths worldwide in 2020. It is the third most common cancer and the second leading cause of cancer-related deaths globally, according to GLOBOCAN data[1]. Despite advances in the non-surgical treatment of colorectal cancer, oncologically radical surgical excision of the primary tumor and locoregional lymph nodes remains the mainstay of curative treatment. Total mesorectal excision (TME) is a surgical technique that has become the standard of care for the treatment of rectal cancer and involves the complete removal of the rectum and surrounding tissues, including the mesorectum (the fatty tissue that surrounds the rectum). The technique was first introduced in the 1980s and has since been shown to improve local control of the disease and reduce the risk of recurrence, leading to better long-term outcomes for patients[2]. This surgery requires skill and expertise to achieve both oncological radicality and preservation of the presacral nerves responsible for continence and sexual function.

Incomplete TME is directly linked to local tumor recurrence and decreased overall survival. Curtis et al[3] demonstrated that surgeons in the top skill quartile consistently achieved superior-quality histopathological TME specimens, resulting in improved patient outcomes. Many countries are considering centralizing rectal cancer treatment for this reason. The application of adjunctive measures such as artificial intelligence (AI) could aid surgeons in performing an adequate TME. The effect is likely to be more pronounced in surgeons who are still at the beginning of their learning curve but may also be useful to orientate more experienced surgeons in challenging cases, such as cases of recurrent rectal cancer and patients who have previously received neoadjuvant treatment.

This review provided an overview of the current state of knowledge on the use of AI, specifically in the performance of a TME dissection, focusing on the scientific evidence that supports its use in the management of rectal cancer.

A comprehensive literature search was conducted to identify relevant studies for this review. The search was performed using the PubMed electronic database, using the following search terms: “TME” OR “Total Mesorectal Excision” OR “Rectal Cancer Surgery” AND “Artificial Intelligence” OR “Machine Learning” OR “Deep Learning.” The search was limited to studies published between 2020 and 2023. Only articles published in English were included. Only studies addressing the use of AI in rectal cancer surgery and specifically in TME were selected.
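As written, the search string mixes OR and AND without grouping, so its precedence is ambiguous. The sketch below shows one way such a query could be assembled with explicit parentheses. The grouping, the helper names `or_group` and `build_query`, and the use of the PubMed field tags `[dp]` (publication date) and `[la]` (language) are assumptions made for illustration; they are not the authors' exact query.

```python
# Hypothetical reconstruction of the search string. The parenthesized
# grouping and the field tags are assumptions, not the authors' exact query.
procedure_terms = ["TME", "Total Mesorectal Excision", "Rectal Cancer Surgery"]
ai_terms = ["Artificial Intelligence", "Machine Learning", "Deep Learning"]


def or_group(terms):
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(procedure, ai, year_from, year_to, language="english"):
    """Combine the two OR-groups with AND, then add date and language filters."""
    parts = [
        or_group(procedure),
        or_group(ai),
        f"{year_from}:{year_to}[dp]",  # publication date range
        f"{language}[la]",             # language filter
    ]
    return " AND ".join(parts)


query = build_query(procedure_terms, ai_terms, 2020, 2023)
print(query)
```

Grouping each set of synonyms before combining them with AND makes the intended logic explicit: a record must match at least one procedure term and at least one AI term.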

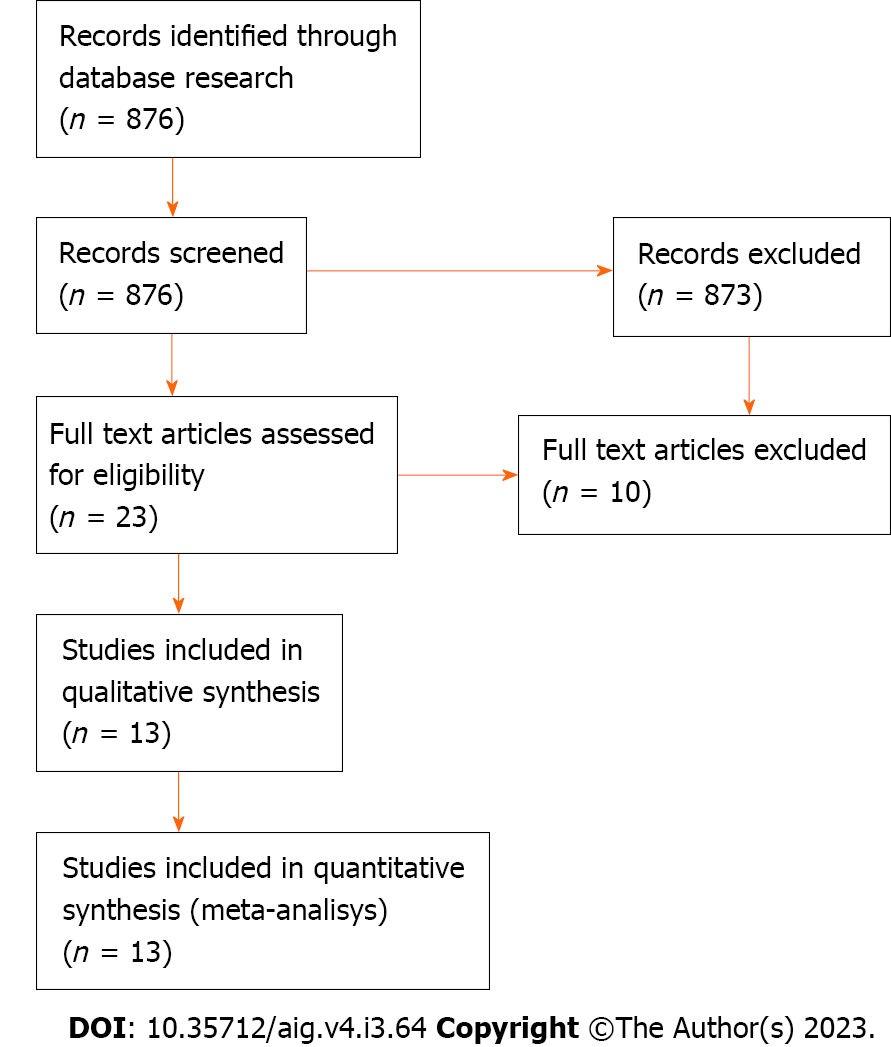

The literature search yielded 876 results. The selection flowchart is illustrated in Figure 1. In an initial screening based on titles and abstracts only, the results were narrowed down to 26 papers. After full-text review, 13 studies met our inclusion criteria and were selected for review.

AI today encompasses several approaches, including machine learning (ML), deep learning (DL), and computer vision (CV). ML is a set of mathematical algorithms that allow computers to learn through experience[4]. Its core concept is to identify patterns and optimize model parameters to better solve a specific problem by analyzing large-scale datasets[5]. ML has shown encouraging results in the analysis of data such as texts, images, and videos. DL is a subfield of ML that uses multilayer artificial neural networks to draw pattern-based conclusions from input data[6]. In medicine, a large amount of data is visualized in the form of images, and CV is another subfield of ML, which trains machines to extract valuable information from images (e.g., radiological and histopathological) and videos (e.g., endoscopic and surgical videos)[7].

Several groups have developed radiological image processing algorithms to enable faster diagnoses, improve the visualization of pathologies, and recognize emergency situations[8-12]. Recent examples include DL-based algorithms for accurate carotid artery stenosis detection and plaque classification using computed tomography angiography[11], 3D convolutional neural networks that automate tumor volume segmentation using positron emission tomography/computed tomography and magnetic resonance images[12], automatic detection of lymph node metastases in colon and head-and-neck cancer[13,14], and DL models for automatic classification of thyroid biopsies based on microscope images taken with a smartphone[15].

Surgical data science describes an emerging field of research concerned with the collection and analysis of surgical data[16]. The application of AI technology in surgery was first studied by Gunn[17] in 1976, when he explored the possibility of diagnosing acute abdominal pain using computer analysis. Over the last two decades, interest in the application of AI in general and in colorectal surgery has increased. AI methods have been applied in multiple areas of colorectal surgery, preoperatively, intraoperatively, and postoperatively[18]. Preoperatively, AI can help diagnose and clinically classify patients as accurately as possible and offer a personalized treatment plan. Postoperatively, it can integrate the pathway to better recovery after surgery, automate pathology assessment, and support research. All these elements contribute to improved patient outcomes and provide promising results.

Intraoperatively, AI could help improve the surgeon’s skills during laparoscopic and robotic procedures. The development of AI-based systems could support anatomy detection and trigger alerts, providing surgical guidance on dangerous actions at crucial stages and improving surgeons’ decision-making. ML algorithms have been used to identify surgical instruments as they enter the surgical field and to identify anatomical landmarks such as vascular and nervous structures and organs[19]. This is achieved using methods that first assess the presence and then analyze the movement pattern of surgical instruments and/or by automatic assessment of surgical phases[20]. In 2022, Mascagni et al[21] developed a DL model that automatically segments the hepatocystic triangle and determines compliance with the critical view of safety criteria during laparoscopic cholecystectomy, with the aim of reducing bile duct injuries.
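The two-stage idea of first assessing instrument presence and then analyzing movement can be illustrated with a small sketch. It assumes that an upstream detector has already produced one instrument centroid per video frame (or `None` when the instrument is not visible). The function names and the toy track are hypothetical and are not taken from the cited studies.

```python
import math


def presence_fraction(centroids):
    """Fraction of frames in which the instrument was detected."""
    if not centroids:
        return 0.0
    return sum(c is not None for c in centroids) / len(centroids)


def path_length(centroids):
    """Total distance travelled between consecutive frames with a detection.

    Gaps (frames without a detection) are skipped rather than interpolated.
    """
    total = 0.0
    for prev, curr in zip(centroids, centroids[1:]):
        if prev is not None and curr is not None:
            total += math.dist(prev, curr)
    return total


# Toy track in pixel coordinates; None marks a frame without a detection.
track = [(0, 0), (3, 4), None, (3, 4), (6, 8)]
print(presence_fraction(track))  # 0.8
print(path_length(track))        # 10.0
```

Summary values of this kind are one possible input for the skill-assessment analyses mentioned above; how gaps in detection are handled is a design choice that would need to be validated on real data.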

Although this is an evolving field, it is important to point out that AI-based assistance during surgery is still at an embryonic stage, and its developments, such as video segmentation and automatic detection of instruments, have so far shown little benefit, as no AI algorithm has yet been approved for clinical use.

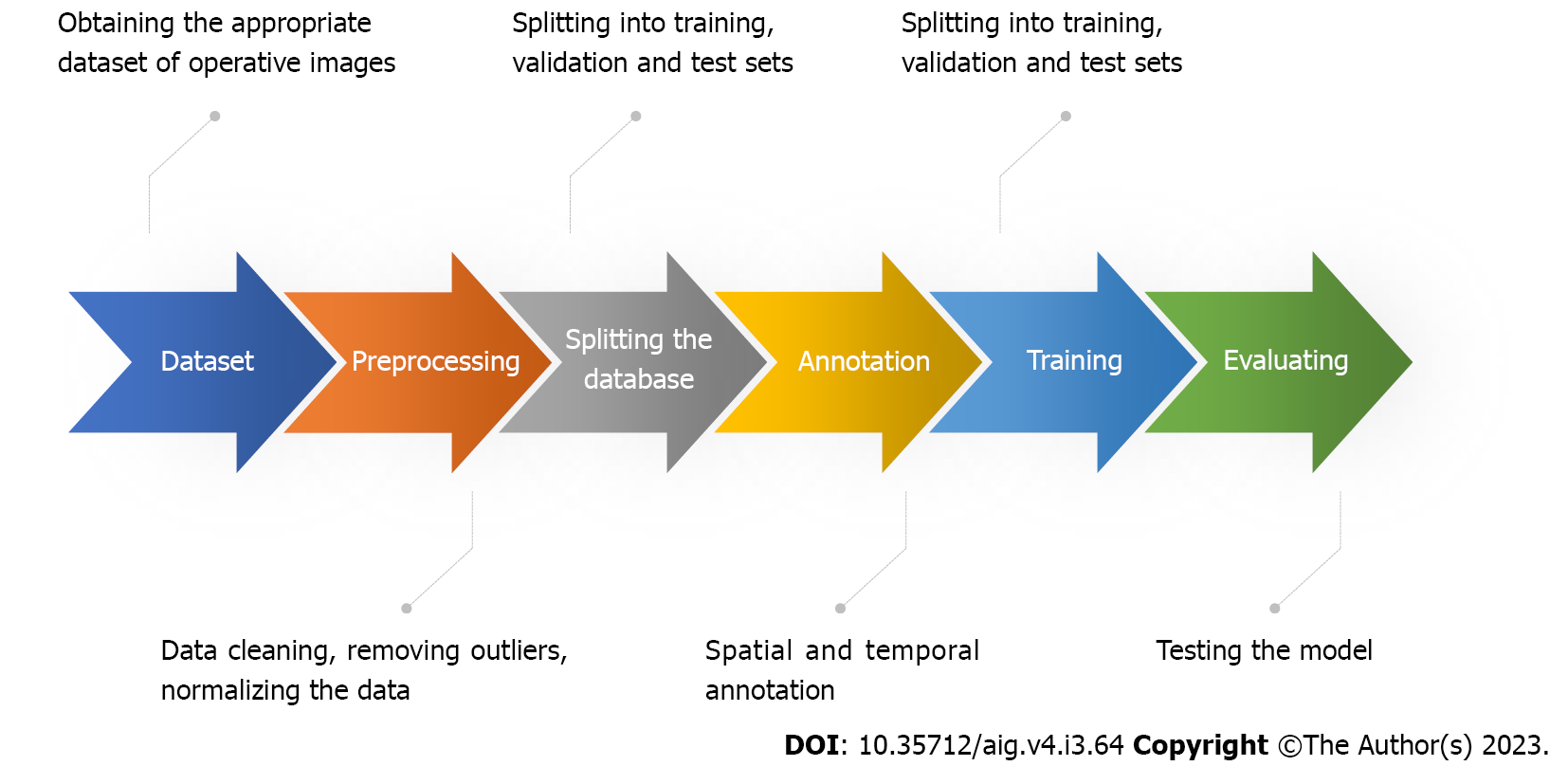

AI in surgery largely relies on training neural networks. This process starts with splitting the data into a training set and a test set. The training data is a predetermined part of the overall data from which the network learns most of its information. The test data is used to see how well the network can apply what it has learned to new, unseen data. A separate validation dataset is used to fine-tune the network by comparing various hyperparameter settings, so that the network can cope with the spectrum of data that it may receive.
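A minimal sketch of this three-way split is shown below. It makes two assumptions that are not stated in the text: the split is performed at the level of whole procedures, so that frames from one video never end up in more than one subset, and the hyperparameter is chosen by a toy validation score. The identifiers, fractions, and score function are hypothetical.

```python
import random


def split_procedures(procedure_ids, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle procedure IDs reproducibly and split into train/val/test."""
    ids = list(procedure_ids)
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_frac)
    n_val = round(len(ids) * val_frac)
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


def select_hyperparameter(candidates, val_score):
    """Return the candidate with the highest score on the validation set."""
    return max(candidates, key=val_score)


procedures = [f"case_{i:02d}" for i in range(20)]
train, val, test = split_procedures(procedures)

# Toy validation score that peaks at a learning rate of 0.01 (made up).
best_lr = select_hyperparameter([0.1, 0.01, 0.001], lambda lr: -abs(lr - 0.01))
print(len(train), len(val), len(test), best_lr)
```

The test subset is touched only once, after the hyperparameters have been fixed on the validation subset, so that the reported performance reflects unseen data.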

The DL task can be divided into three categories depending on the type of output expected from the network: (1) Classification. The task of categorizing a given input into two or more possible classes. For example, a classification model could be used to identify the type of tissue in a medical image; (2) Detection. The task of identifying and localizing an object of interest in an image. For example, a detection model could be used to identify and track the surgical tools in a video; and (3) Segmentation. The task of assigning a label to each pixel in an image. For example, a segmentation model could be used to identify the different organs in a medical image. Surgical phase and tool detection models are two early examples of DL for surgical applications[20]. These models have been used to improve the accuracy and efficiency of surgical procedures.
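The difference between the three output types can be made concrete with a toy example. The sketch below does not train any model. It starts from a small hand-written binary mask (a stand-in for a segmentation output) and derives the coarser detection and classification outputs from it. All names and values are illustrative assumptions.

```python
def classify(mask):
    """Classification output: is the object present anywhere in the image?"""
    return any(any(row) for row in mask)


def detect(mask):
    """Detection output: bounding box (top, left, bottom, right) or None."""
    coords = [(r, c) for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


# Segmentation output: one label per pixel (1 = instrument, 0 = background).
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]

print(classify(mask))  # True
print(detect(mask))    # (1, 1, 2, 2)
```

Segmentation carries the most spatial detail, detection reduces it to a box, and classification reduces it to a single label, which is also why segmentation annotations are the most laborious to produce.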

The ML process used in surgical applications can be generally outlined with the following steps (Figure 2): (1) Obtaining an appropriate dataset. The dataset should contain surgical images linked to clinical outcomes. In some cases, a simple and effective way to verify that the data is sufficiently informative is to ask an expert to look at the data and perform the same task proposed for the model. People without medical education can correctly annotate the presence or absence of tools in images. However, the same cannot be said for annotating surgical phases, as this requires surgical understanding and a common definition of what exactly defines and delineates phases; (2) Pre-processing of data. This may include cleaning the data, removing outliers, and normalizing the data; (3) Splitting the dataset. The database is split into training, testing, and validation sets. It is good scientific practice to keep these sets as independent from each other as possible, as the network may otherwise develop biases; (4) Annotation. Data labeling is a crucial step in the ML pipeline, as it enables supervised training for ML models. Annotations can be temporal or spatial. Temporal annotations are useful when we need to determine surgical phases during an operation. Spatial annotations are used to identify surgical instruments in the surgical scene or anatomical structures (e.g., tool detection); (5) Training the model. This involves feeding the data into the model and allowing it to learn the patterns in the data. The training process can be computationally expensive, depending on the size of the dataset and the complexity of the model; (6) Evaluation of the model. This involves testing the model on a verified dataset and evaluating its performance. This ensures that the model is not overfitted to the training data; and (7) Deployment of the model. This means making the model available for use in real-world applications.
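The distinction between temporal and spatial annotations in step (4) can be sketched with two small data structures. The field names, phase names, and frame numbers below are hypothetical and serve only to illustrate how a temporal annotation is expanded into the per-frame labels needed for supervised training.

```python
from dataclasses import dataclass


@dataclass
class PhaseAnnotation:
    """Temporal annotation: a surgical phase spanning a range of frames."""
    phase: str
    start_frame: int  # inclusive
    end_frame: int    # exclusive


@dataclass
class BoxAnnotation:
    """Spatial annotation: a labeled bounding box in a single frame."""
    frame: int
    label: str
    x: float
    y: float
    width: float
    height: float


def frame_labels(phases, n_frames, default="unlabeled"):
    """Expand temporal annotations into one phase label per frame."""
    labels = [default] * n_frames
    for p in phases:
        for f in range(max(p.start_frame, 0), min(p.end_frame, n_frames)):
            labels[f] = p.phase
    return labels


phases = [
    PhaseAnnotation("mobilization", 0, 2),
    PhaseAnnotation("mesorectal_excision", 3, 6),
]
box = BoxAnnotation(frame=4, label="grasper", x=10.0, y=20.0, width=50.0, height=30.0)
print(frame_labels(phases, 6))
```

Frame 2 is left as `unlabeled` in this toy example, which mirrors the practical problem that phase boundaries need a shared definition before they can be annotated consistently.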

As mentioned in the annotation description, phase recognition is the process of classifying frames in a video or image sequence according to a predetermined surgical phase. It is a CV task in which visual data is analyzed to identify and understand different phases or actions. The goal is to recognize the sequence of frames in a video or image sequence to identify specific actions or events that occur at different points in time. This can be done by observing characteristic visual cues, such as motion, changes in shape, or object interactions, to differentiate between different phases.
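Since phase recognition produces one label per frame, the predictions are typically post-processed into contiguous segments and compared with the annotated phases. The sketch below assumes that per-frame predictions are already available as a list of strings. The phase names are hypothetical, and frame-level accuracy is used only as a simple illustrative metric.

```python
from itertools import groupby


def to_segments(labels):
    """Collapse per-frame labels into (phase, start, end) runs; end is exclusive."""
    segments = []
    start = 0
    for phase, run in groupby(labels):
        length = sum(1 for _ in run)
        segments.append((phase, start, start + length))
        start += length
    return segments


def frame_accuracy(predicted, annotated):
    """Share of frames whose predicted phase matches the annotation."""
    if len(predicted) != len(annotated):
        raise ValueError("label sequences must have the same length")
    if not annotated:
        raise ValueError("label sequences must not be empty")
    return sum(p == a for p, a in zip(predicted, annotated)) / len(annotated)


predicted = ["prep", "prep", "tme", "prep", "tme", "tme"]
annotated = ["prep", "prep", "prep", "tme", "tme", "tme"]

print(to_segments(predicted))
print(frame_accuracy(predicted, annotated))
```

In this toy case the prediction flickers between phases around the transition, producing four segments instead of two, which is why temporal context is usually taken into account in addition to single-frame appearance.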

In semantic segmentation, an image is divided into meaningful regions or segments, and each segment is assigned a semantic label. The goal is to understand what the different parts of an image represent. To accomplish this task, an algorithm analyzes the image at the pixel level and assigns each pixel a label indicating which object or category it belongs to. By observing patterns and features in the training data, the algorithm can generalize its understanding to detect and classify new images.
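The pixel-level labeling step can be sketched as follows, assuming that a model has already produced one score map per class. Each pixel receives the index of the class with the highest score, and the result is compared with a reference annotation using intersection over union (IoU), a commonly used overlap measure that the text itself does not mention. All values are made up for illustration.

```python
def argmax_labels(scores):
    """scores[class][row][col] -> label map with the best class per pixel."""
    n_classes = len(scores)
    n_rows = len(scores[0])
    n_cols = len(scores[0][0])
    return [
        [max(range(n_classes), key=lambda c: scores[c][r][k]) for k in range(n_cols)]
        for r in range(n_rows)
    ]


def iou(predicted, reference, cls):
    """Intersection over union for one class; None if the class is absent in both."""
    intersection = union = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, t in zip(pred_row, ref_row):
            intersection += (p == cls and t == cls)
            union += (p == cls or t == cls)
    return intersection / union if union else None


# Two classes (0 = background, 1 = target tissue) on a 2 x 2 image.
scores = [
    [[0.9, 0.2], [0.6, 0.1]],  # class 0 scores
    [[0.1, 0.8], [0.4, 0.9]],  # class 1 scores
]
reference = [[0, 1], [1, 1]]

labels = argmax_labels(scores)
print(labels)                      # [[0, 1], [0, 1]]
print(iou(labels, reference, 1))
```

Here the prediction misses one of the three reference pixels of class 1, giving an IoU of 2/3 for that class.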

In the context of surgical applications, semantic segmentation can be used to identify different anatomical structures, such as organs, tissues, and blood vessels. This information can be used to guide surgeons during surgery and to improve the accuracy and safety of the procedure. Examples of semantic segmentation in surgical applications include identification of the tumor and surrounding tissue in cancer surgery, localization of the surgical target in minimally invasive surgery, tracking the movement of organs and tissues during surgery, detecting and removing blood clots, and preventing accidental injury to surrounding tissue.

One of the challenges in semantic segmentation for surgical applications is the complexity of the images. Surgical images are often cluttered with noise and artifacts, which can make it difficult for the algorithm to accurately segment the different objects. Another challenge is the variability of surgical procedures. Each procedure is unique, and the objects and tissues involved can differ from patient to patient. This makes it difficult to train a single algorithm that can be used for all surgical procedures. Recent advances in DL have made it possible to develop more accurate and robust semantic segmentation algorithms for surgical applications. These algorithms can learn the complex patterns in surgical images and generalize their understanding to new datasets[22].

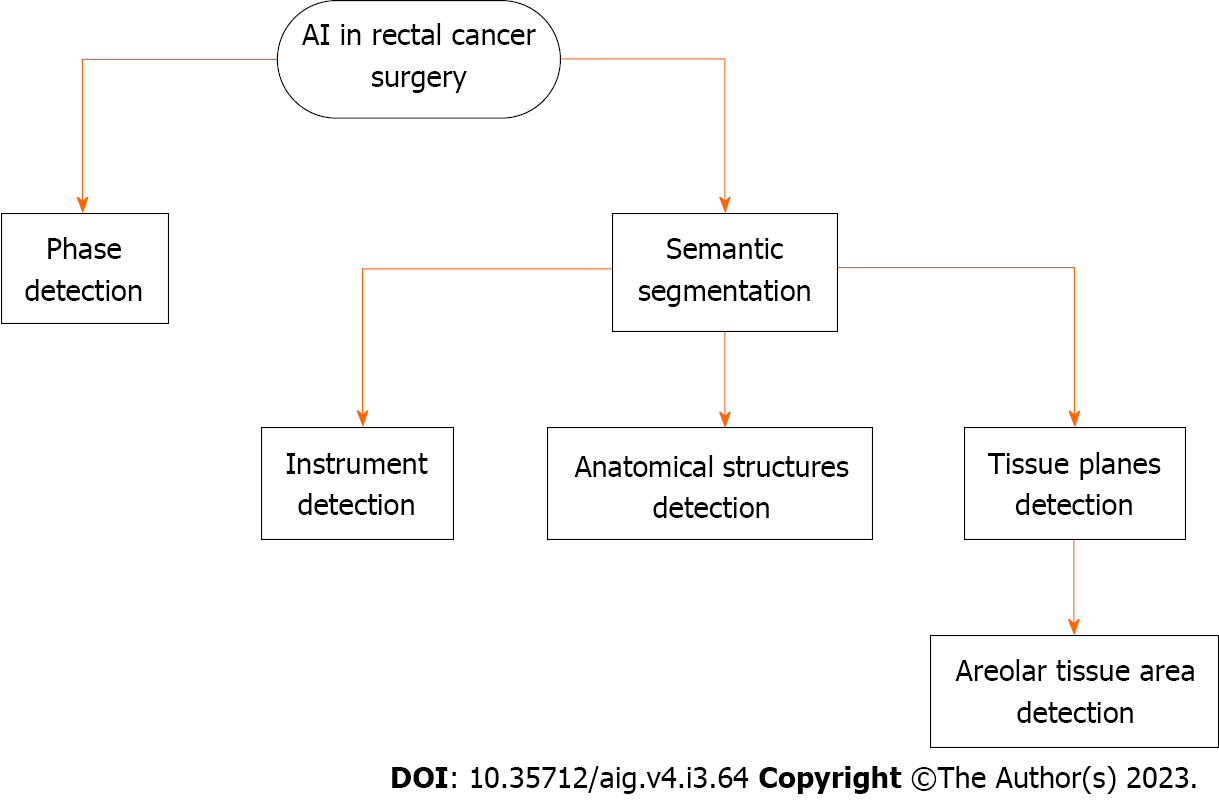

In anterior rectal resection and TME, studies to date have focused on the development of DL-based phase, act, and tool recognition[22] as well as DL-based image-guided navigation systems for areolar tissue at the level of TME[23].

As mentioned earlier, TME is a complex surgical procedure in rectal cancer surgery consisting of the complete resection of the mesorectal envelope, which requires that the resection is performed in the correct plane and preserves vulnerable anatomical structures, such as the autonomic nerve plexus. Injury to these structures could cause major issues such as postoperative incontinence and sexual dysfunction. Robotic-assisted surgery is particularly useful in TME surgery, although no substantial clinical benefit over laparoscopic surgery has been demonstrated[24]. It does, however, offer advantages in terms of acquiring high-quality image data due to the benefits of 3D vision and a more stable camera platform. An additional advantage is that the system recognizes when a new instrument has been connected to the console, making it easy to compare instrument recognition algorithms.

In this context, AI could provide surgical guidance by identifying anatomical structures and helping to improve surgical quality, reduce differences between surgeons, and provide better clinical results. To date, efforts have been made to develop image recognition algorithms using minimally invasive video data, with a particular focus on automated instrument detection, which has only indirect surgical benefits[20,25]. Significant results have also been achieved in the recognition of relevant anatomical structures during less complex surgical procedures such as cholecystectomy[21,26].

The 2022 work by Kolbinger et al[22] (republished in 2023) was based on 57 robot-assisted rectal resections and focused on developing an algorithm for automatic detection of surgical phases and identification of specific anatomical structures. In particular, the algorithm achieved the best results in detecting the mesocolon, mesorectum, Gerota’s fascia, abdominal wall, and dissection planes during mesorectal excision.

In 2022, Igaki et al[23] relied on the idea of the “holy plane,” first proposed by Heald[27] in the 1980s when describing TME dissection. The holy plane lies between the mesorectal fascia and the parietal pelvic fascia through fibroareolar tissue and is an important landmark to follow an avascular pathway, ensuring that TME can be performed safely and effectively. Igaki et al[23] developed a DL algorithm to automatically detect areolar tissue using the open-source DeepLabv3plus software (Figure 3).

One limitation of the studies was the uncertainty of detection. According to the experience of Kolbinger et al[22], automatic recognition of thin and small structures is more difficult, e.g., recognition of the exact position of the dissection line in mesorectal excision. Furthermore, in TME, patient-related aspects such as individual anatomical variations and a history of neoadjuvant (radio)therapy can cause the dissection lines to vary widely across the dataset and therefore make them difficult to detect automatically. These limitations could be overcome by technical improvements, e.g., by displaying the detection uncertainty of the target structures using Bayesian calculation methods, which would increase acceptance among surgeons. Another improvement could be real-time display by minimizing the computational delay, which is currently 4 s[22].
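One way to picture how detection uncertainty could be displayed is sketched below. The text only refers to Bayesian calculation methods, so the specific approach here is an assumption: several stochastic forward passes of a model are averaged per pixel, and the binary entropy of the averaged probability serves as the uncertainty value. The probability maps are made up, and no real model is involved.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a Bernoulli probability p; 0 when p is 0 or 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def uncertainty_map(sample_maps):
    """Per pixel, return (mean probability, entropy) across sampled maps."""
    n_rows = len(sample_maps[0])
    n_cols = len(sample_maps[0][0])
    result = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            mean_p = sum(m[r][c] for m in sample_maps) / len(sample_maps)
            row.append((mean_p, binary_entropy(mean_p)))
        result.append(row)
    return result


# Three hypothetical stochastic passes over a 1 x 2 image:
# the left pixel is consistently confident, the right pixel is not.
samples = [
    [[0.9, 0.5]],
    [[1.0, 0.2]],
    [[0.8, 0.8]],
]

for mean_p, entropy in uncertainty_map(samples)[0]:
    print(round(mean_p, 2), round(entropy, 2))
```

Pixels with high entropy could be rendered with a weaker or differently colored overlay, so that the surgeon can see where the highlighted dissection line is less reliable.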

The essential points for the integration of the above improvements and in general for the development of better algorithms for automatic recognition are the availability of data, the creation of publicly available datasets for complex surgical procedures, and the creation of multicenter studies for these applications.

In TME, identification of embryonic tissue planes and the closely associated line of dissection at the mesorectal fascia can be challenging because of significant variation due to neoadjuvant (radio)therapy and individual factors such as body composition. AI algorithms could improve intraoperative identification and highlight important parts of the anatomy involved in TME, such as the fibroareolar tissue plane and vascular and neural structures.

In robotic surgery, the use of visual aids could be considered more important than in laparoscopic surgery due to the lack of haptic feedback, i.e., the sense of touch and force feedback that surgeons rely on in traditional open or laparoscopic surgery. To compensate for the lack of haptic feedback, visual augmentation plays a crucial role. In addition, advanced technologies such as fluorescence and near-infrared imaging are frequently used in robotic surgery. These techniques allow visualization of blood flow, tissue perfusion, and identification of vital structures that are not readily visible under normal lighting conditions. Combined with AI-assisted visual enhancements, the surgeon’s ability to make critical decisions and perform delicate maneuvers such as TME with the required precision is improved.

Studies on AI-powered surgical guidance, which uses context-aware ML algorithms to automatically identify anatomical structures, surgical instruments, and surgical phases in complex abdominal surgery, require the creation of publicly available datasets and multicenter studies. This is because the datasets need to be large and diverse enough to train AI algorithms that can be generalized to new patients. Additionally, multicenter studies are necessary to ensure that the results are valid and reproducible.

The use of AI in TME is still in its infancy, but it has the potential to revolutionize the procedure. For example, AI algorithms can be used to identify and highlight key anatomical structures such as the mesorectal fascia, the vascular bundle, and the autonomic nervous structure. This could provide real-time identification of surgical structures and allow surgeons to perform complex procedures more accurately and safely, even in cases where the anatomy is challenging.

AI algorithms can also be used to track the movement of instruments, tissues, and organs during surgery. This can help prevent complications, such as accidental injury to surrounding tissue. For example, AI algorithms can track the movement of the rectum during dissection to prevent accidental perforation. AI algorithms can also be used to improve surgeons’ training and help them become familiar with the complex anatomy of the pelvis and the techniques of TME.

While studies have shown that DL-based algorithms in TME are able to identify fibroareolar tissue and several other anatomical structures, these studies have not related the results to postoperative outcomes. This may be because the algorithms were evaluated by experienced surgeons, whereas the true effect is likely to be most apparent among surgeons who are still in the learning phase.

The use of AI in TME is a promising area of research that has the potential to improve the safety and effectiveness of this important surgical procedure. However, more research is needed to fully understand the benefits and risks of this technology, including issues of safety, privacy, and ownership of sensitive data.

Colorectal cancer is a major public health problem, with 1.9 million new cases and 953000 deaths worldwide in 2020. Total mesorectal excision (TME) is the standard of care for the treatment of rectal cancer, but it is a technically challenging surgery. Artificial intelligence (AI) has the potential to improve the performance of TME surgery, especially for surgeons who are still at the beginning of their learning curve.

AI in surgery is a rapidly evolving field with applications in the preoperative, intraoperative, and postoperative settings. In colorectal surgery, AI has been used to automate tasks such as instrument detection and anatomical structure identification. AI has also been used to develop image-guided navigation systems for TME surgery. One of the challenges of AI in surgery is the complexity of the images. Another challenge is the variability of surgical procedures. Recent advances in deep learning have made it possible to develop more accurate and robust AI algorithms for surgical applications.

To investigate the potential of AI in surgery, particularly in colorectal surgery, and the current state of the art. To describe AI algorithms for surgical applications, such as instrument detection, anatomical structure identification, and image-guided navigation systems. To describe their limitations and future developments, such as AI algorithms that can be used in real time. To propose the evaluation of the safety and efficacy of AI in surgery through clinical trials.

A literature search was conducted to identify relevant studies on the use of AI in rectal cancer surgery and specifically in TME. The search was performed using the PubMed electronic database and was limited to studies published between 2020 and 2023. Only articles published in English were included.

The use of AI in rectal cancer surgery and specifically in TME is a rapidly evolving field. There are a number of different AI algorithms that have been developed for use in TME, including algorithms for instrument detection, anatomical structure identification, and image-guided navigation systems.

The results of these studies are promising, but more research is needed to fully evaluate the safety and efficacy of AI in TME. Challenges that need to be overcome before AI can be widely adopted in TME include the need for large datasets of labeled images to train AI algorithms, the need to develop AI algorithms that can be used in real-time, and the need to address the ethical concerns raised by the use of AI in surgery.

AI has the potential to revolutionize TME by providing real-time surgical guidance, preventing complications, and improving training. However, more research is needed to fully understand the benefits and risks of AI in TME.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: Italy

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): 0

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Ma X, China S-Editor: Lin C L-Editor: Filipodia P-Editor: Lin C

| 1. | Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, Bray F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J Clin. 2021;71:209-249. |

| 2. | Heald RJ, Ryall RD. Recurrence and survival after total mesorectal excision for rectal cancer. Lancet. 1986;1:1479-1482. |

| 3. | Curtis NJ, Foster JD, Miskovic D, Brown CSB, Hewett PJ, Abbott S, Hanna GB, Stevenson ARL, Francis NK. Association of Surgical Skill Assessment With Clinical Outcomes in Cancer Surgery. JAMA Surg. 2020;155:590-598. |

| 4. | Ramesh AN, Kambhampati C, Monson JR, Drew PJ. Artificial intelligence in medicine. Ann R Coll Surg Engl. 2004;86:334-338. |

| 5. | Quero G, Mascagni P, Kolbinger FR, Fiorillo C, De Sio D, Longo F, Schena CA, Laterza V, Rosa F, Menghi R, Papa V, Tondolo V, Cina C, Distler M, Weitz J, Speidel S, Padoy N, Alfieri S. Artificial Intelligence in Colorectal Cancer Surgery: Present and Future Perspectives. Cancers (Basel). 2022;14. |

| 6. | Alapatt D, Mascagni P, Srivastav V, Padoy N. Artificial Intelligence in Surgery: Neural Networks and Deep Learning. In: Hashimoto DA (Ed.) Artificial Intelligence in Surgery: A Primer for Surgical Practice. 2020 Preprint. Available from: arXiv:2009.13411. |

| 7. | Mintz Y, Brodie R. Introduction to artificial intelligence in medicine. Minim Invasive Ther Allied Technol. 2019;28:73-81. |

| 8. | Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115-118. |

| 9. | Ehteshami Bejnordi B, Veta M, Johannes van Diest P, van Ginneken B, Karssemeijer N, Litjens G, van der Laak JAWM; the CAMELYON16 Consortium, Hermsen M, Manson QF, Balkenhol M, Geessink O, Stathonikos N, van Dijk MC, Bult P, Beca F, Beck AH, Wang D, Khosla A, Gargeya R, Irshad H, Zhong A, Dou Q, Li Q, Chen H, Lin HJ, Heng PA, Haß C, Bruni E, Wong Q, Halici U, Öner MÜ, Cetin-Atalay R, Berseth M, Khvatkov V, Vylegzhanin A, Kraus O, Shaban M, Rajpoot N, Awan R, Sirinukunwattana K, Qaiser T, Tsang YW, Tellez D, Annuscheit J, Hufnagl P, Valkonen M, Kartasalo K, Latonen L, Ruusuvuori P, Liimatainen K, Albarqouni S, Mungal B, George A, Demirci S, Navab N, Watanabe S, Seno S, Takenaka Y, Matsuda H, Ahmady Phoulady H, Kovalev V, Kalinovsky A, Liauchuk V, Bueno G, Fernandez-Carrobles MM, Serrano I, Deniz O, Racoceanu D, Venâncio R. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women With Breast Cancer. JAMA. 2017;318:2199-2210. |

| 10. | Li R, Zhang W, Suk HI, Wang L, Li J, Shen D, Ji S. Deep learning based imaging data completion for improved brain disease diagnosis. Med Image Comput Comput Assist Interv. 2014;17:305-312. |

| 11. | Fu F, Shan Y, Yang G, Zheng C, Zhang M, Rong D, Wang X, Lu J. Deep Learning for Head and Neck CT Angiography: Stenosis and Plaque Classification. Radiology. 2023;307:e220996. |

| 12. | Bollen H, Willems S, Wegge M, Maes F, Nuyts S. Benefits of automated gross tumor volume segmentation in head and neck cancer using multi-modality information. Radiother Oncol. 2023;182:109574. |

| 13. | Bándi P, Balkenhol M, van Dijk M, Kok M, van Ginneken B, van der Laak J, Litjens G. Continual learning strategies for cancer-independent detection of lymph node metastases. Med Image Anal. 2023;85:102755. |

| 14. | Xu X, Xi L, Wei L, Wu L, Xu Y, Liu B, Li B, Liu K, Hou G, Lin H, Shao Z, Su K, Shang Z. Deep learning assisted contrast-enhanced CT-based diagnosis of cervical lymph node metastasis of oral cancer: a retrospective study of 1466 cases. Eur Radiol. 2023;33:4303-4312. |

| 15. | Assaad S, Dov D, Davis R, Kovalsky S, Lee WT, Kahmke R, Rocke D, Cohen J, Henao R, Carin L, Range DE. Thyroid Cytopathology Cancer Diagnosis from Smartphone Images Using Machine Learning. Mod Pathol. 2023;36:100129. |

| 16. | Maier-Hein L, Eisenmann M, Sarikaya D, März K, Collins T, Malpani A, Fallert J, Feussner H, Giannarou S, Mascagni P, Nakawala H, Park A, Pugh C, Stoyanov D, Vedula SS, Cleary K, Fichtinger G, Forestier G, Gibaud B, Grantcharov T, Hashizume M, Heckmann-Nötzel D, Kenngott HG, Kikinis R, Mündermann L, Navab N, Onogur S, Roß T, Sznitman R, Taylor RH, Tizabi MD, Wagner M, Hager GD, Neumuth T, Padoy N, Collins J, Gockel I, Goedeke J, Hashimoto DA, Joyeux L, Lam K, Leff DR, Madani A, Marcus HJ, Meireles O, Seitel A, Teber D, Ückert F, Müller-Stich BP, Jannin P, Speidel S. Surgical data science - from concepts toward clinical translation. Med Image Anal. 2022;76:102306. |

| 17. | Gunn AA. The diagnosis of acute abdominal pain with computer analysis. J R Coll Surg Edinb. 1976;21:170-172. |

| 18. | Spinelli A, Carrano FM, Laino ME, Andreozzi M, Koleth G, Hassan C, Repici A, Chand M, Savevski V, Pellino G. Artificial intelligence in colorectal surgery: an AI-powered systematic review. Tech Coloproctol. 2023;27:615-629. |

| 19. | Kitaguchi D, Takeshita N, Matsuzaki H, Igaki T, Hasegawa H, Kojima S, Mori K, Ito M. Real-time vascular anatomical image navigation for laparoscopic surgery: experimental study. Surg Endosc. 2022;36:6105-6112. |

| 20. | Jin A, Yeung S, Jopling J, Krause J, Azagury D, Milstein A, Fei-Fei L. Tool Detection and Operative Skill Assessment in Surgical Videos Using Region-Based Convolutional Neural Networks. 2018 Preprint. Available from: arXiv:1802.08774. |

| 21. | Mascagni P, Vardazaryan A, Alapatt D, Urade T, Emre T, Fiorillo C, Pessaux P, Mutter D, Marescaux J, Costamagna G, Dallemagne B, Padoy N. Artificial Intelligence for Surgical Safety: Automatic Assessment of the Critical View of Safety in Laparoscopic Cholecystectomy Using Deep Learning. Ann Surg. 2022;275:955-961. |

| 22. | Kolbinger FR, Bodenstedt S, Carstens M, Leger S, Krell S, Rinner FM, Nielen TP, Kirchberg J, Fritzmann J, Weitz J, Distler M, Speidel S. Artificial Intelligence for context-aware surgical guidance in complex robot-assisted oncological procedures: An exploratory feasibility study. Eur J Surg Oncol. 2023;106996. |

| 23. | Igaki T, Kitaguchi D, Kojima S, Hasegawa H, Takeshita N, Mori K, Kinugasa Y, Ito M. Artificial Intelligence-Based Total Mesorectal Excision Plane Navigation in Laparoscopic Colorectal Surgery. Dis Colon Rectum. 2022;65:e329-e333. |

| 24. | Jayne D, Pigazzi A, Marshall H, Croft J, Corrigan N, Copeland J, Quirke P, West N, Rautio T, Thomassen N, Tilney H, Gudgeon M, Bianchi PP, Edlin R, Hulme C, Brown J. Effect of Robotic-Assisted vs Conventional Laparoscopic Surgery on Risk of Conversion to Open Laparotomy Among Patients Undergoing Resection for Rectal Cancer: The ROLARR Randomized Clinical Trial. JAMA. 2017;318:1569-1580. |

| 25. | Alsheakhali M, Eslami A, Roodaki H, Navab N. CRF-Based Model for Instrument Detection and Pose Estimation in Retinal Microsurgery. Comput Math Methods Med. 2016;2016:1067509. |

| 26. | Madani A, Namazi B, Altieri MS, Hashimoto DA, Rivera AM, Pucher PH, Navarrete-Welton A, Sankaranarayanan G, Brunt LM, Okrainec A, Alseidi A. Artificial Intelligence for Intraoperative Guidance: Using Semantic Segmentation to Identify Surgical Anatomy During Laparoscopic Cholecystectomy. Ann Surg. 2022;276:363-369. |

| 27. | Heald RJ. The 'Holy Plane' of rectal surgery. J R Soc Med. 1988;81:503-508. |