Published online Dec 28, 2022. doi: 10.35712/aig.v3.i5.117

Peer-review started: October 9, 2022

First decision: October 29, 2022

Revised: November 21, 2022

Accepted: December 21, 2022

Article in press: December 21, 2022

Published online: December 28, 2022

Processing time: 79 Days and 8.7 Hours

Artificial intelligence (AI) is a complex concept, broadly defined in medicine as the development of computer systems to perform tasks that require human intelligence. It has the capacity to revolutionize medicine by increasing efficiency, expediting data and image analysis and identifying patterns, trends and associations in large datasets. Within gastroenterology, recent research efforts have focused on using AI in esophagogastroduodenoscopy, wireless capsule endoscopy (WCE) and colonoscopy to assist in diagnosis, disease monitoring, lesion detection and therapeutic intervention. The main objective of this narrative review is to provide a comprehensive overview of the research being performed within gastroenterology on AI in esophagogastroduodenoscopy, WCE and colonoscopy.

Core Tip: Artificial intelligence (AI) is a complex concept that has the capacity to revolutionize medicine. Within gastroenterology, recent research efforts have focused on using AI in esophagogastroduodenoscopy, wireless capsule endoscopy (WCE) and colonoscopy to assist in diagnosis, disease monitoring, lesion detection and therapeutic intervention. This narrative review provides a comprehensive overview of the research being performed within gastroenterology on AI in esophagogastroduodenoscopy, WCE and colonoscopy.

- Citation: Galati JS, Duve RJ, O'Mara M, Gross SA. Artificial intelligence in gastroenterology: A narrative review. Artif Intell Gastroenterol 2022; 3(5): 117-141

- URL: https://www.wjgnet.com/2644-3236/full/v3/i5/117.htm

- DOI: https://dx.doi.org/10.35712/aig.v3.i5.117

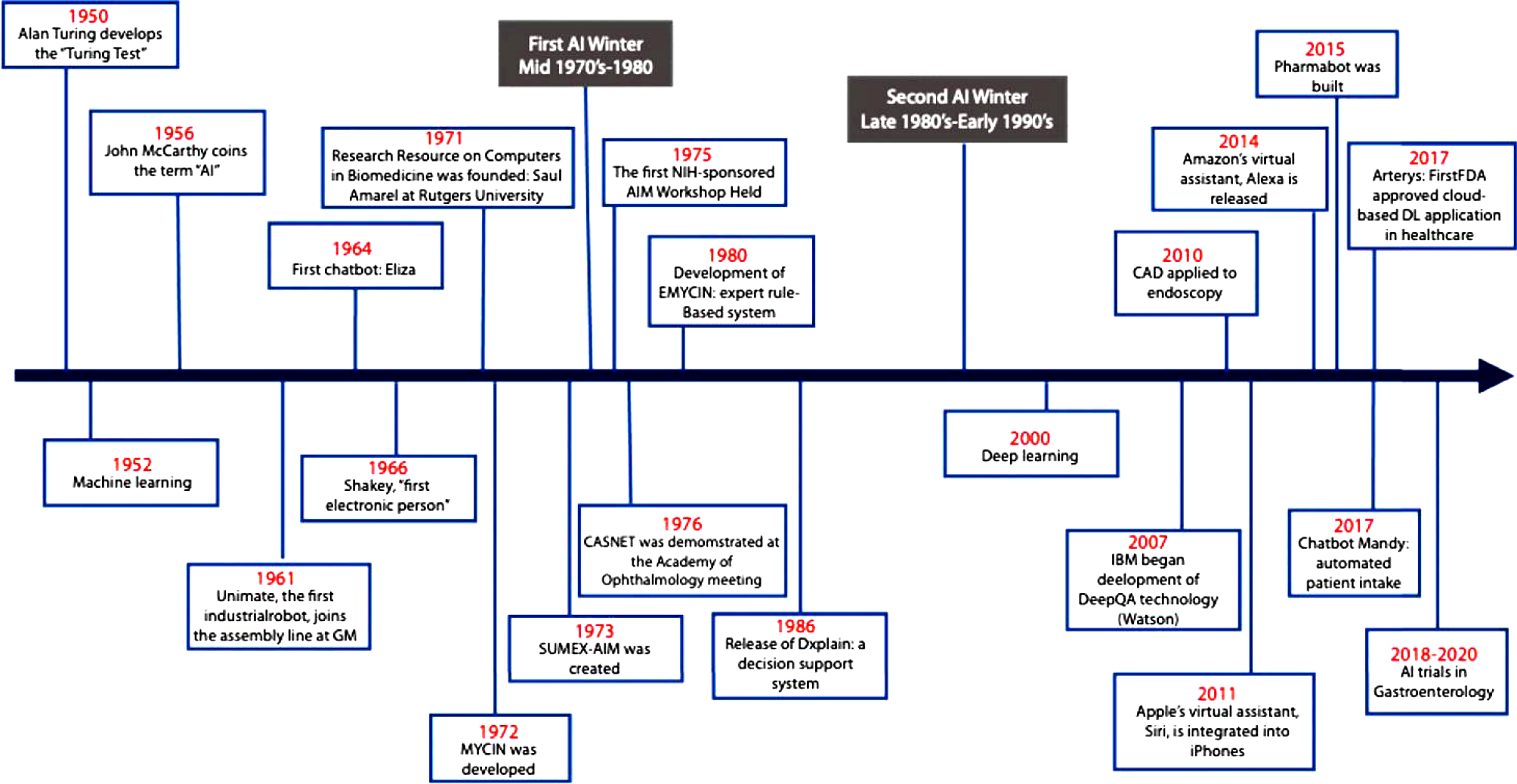

Artificial intelligence (AI) is a complex concept, broadly defined in medicine as the development of computer systems to perform tasks that require human intelligence[1]. Since its inception in the 1950s, the field of AI has grown considerably (Figure 1)[2]. AI is often accompanied by the terms machine learning (ML) and deep learning (DL), techniques used within the field of AI to develop systems that can learn and adapt without explicit instructions. Machine learning uses self-learning algorithms that derive knowledge from data to predict outcomes[1]. There are two main categories within ML: Supervised and unsupervised learning. In supervised learning, the AI is trained on a dataset that humans have previously labeled, which allows the algorithm to learn the differences between data inputs and classify or predict outcomes[3]. In unsupervised learning, the system is provided a dataset that has not been labeled by humans. The algorithm then analyzes the data with the goal of identifying groupings or patterns on its own[3].
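
To make the supervised/unsupervised distinction concrete, the minimal Python sketch below contrasts two textbook algorithms on hypothetical two-dimensional feature vectors. A nearest-centroid classifier stands in for supervised learning because it needs the human-assigned labels. k-means clustering stands in for unsupervised learning because it sees only the unlabeled points. Neither algorithm is claimed to be one used by the studies reviewed here, and the data and function names are illustrative assumptions.

```python
import math
import random

# Hypothetical 2-D feature vectors standing in for image-derived features.
labeled = [((1.0, 1.2), "normal"), ((0.8, 1.0), "normal"),
           ((3.1, 2.9), "lesion"), ((2.9, 3.3), "lesion")]


def centroid(points):
    """Mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Supervised: human-assigned labels let us learn one prototype per class.
def fit_nearest_centroid(data):
    by_class = {}
    for x, y in data:
        by_class.setdefault(y, []).append(x)
    return {y: centroid(xs) for y, xs in by_class.items()}


def predict(model, x):
    """Return the label whose class centroid is closest to x."""
    return min(model, key=lambda y: math.dist(x, model[y]))


# Unsupervised: k-means sees only the points and finds k groups itself.
def kmeans(points, k, iters=10, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    clusters = []
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [centroid(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


model = fit_nearest_centroid(labeled)
print(predict(model, (3.0, 3.0)))  # lesion

centers, clusters = kmeans([x for x, _ in labeled], k=2)
print(sorted(sorted(c) for c in clusters))
```

k-means recovers the same two groups as the labels, but it cannot name them. Attaching a meaning such as "lesion" to a cluster still requires human input.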

Deep learning is a subfield of ML that uses artificial neural networks (ANN) to analyze data. In DL, the system can analyze raw data and determine for itself the features that distinguish between data inputs. ANNs are composed of interconnected nodes arranged in layers, loosely analogous to how neurons are organized in the human brain. The weights of the connections between nodes determine how the system recognizes, classifies, and describes objects within data[3,4]. ANNs with multiple layers of nodes are classified as deep neural networks, which form the backbone of deep learning.
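
The role of connection weights can be illustrated with a forward pass through a tiny fully connected network. The Python sketch below is a minimal illustration under stated assumptions. The weights, layer sizes and activation functions (ReLU, sigmoid) are hypothetical. Training (adjusting the weights, usually by backpropagation) is omitted.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """Fully connected layer: each node applies `activation` to the
    weighted sum of all inputs plus its bias."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hypothetical connection weights: 3 inputs -> 2 hidden nodes -> 1 output node.
W_hidden = [[0.5, -0.2, 0.1],
            [-0.3, 0.8, 0.4]]
b_hidden = [0.0, 0.1]
W_out = [[1.2, -0.7]]
b_out = [0.05]


def forward(x):
    """Propagate one input vector through both layers; returns a score in (0, 1)."""
    hidden = dense(x, W_hidden, b_hidden, relu)
    return dense(hidden, W_out, b_out, sigmoid)[0]


score = forward([0.9, 0.1, 0.4])
print(round(score, 4))
```

Changing any entry of `W_hidden` or `W_out` changes the output score, which is what "learning" adjusts. A deep neural network simply stacks more `dense` layers between input and output.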

Artificial intelligence has the capacity to revolutionize medicine. It can be used to increase efficiency by aiding in appointment scheduling, reviewing insurance eligibility, or tracking patient history. AI can also expedite data and image analysis and detect patterns, trends and associations[5]. Within gastroenterology, AI’s prominence stems from its utility in image analysis[5,6]. Many gastrointestinal diseases rely on endoscopic evaluation for diagnosis, disease monitoring, lesion detection and therapeutic intervention. However, endoscopic evaluation is heavily operator dependent and thus subject to operator bias and human error. As such, recent efforts have focused on using AI in esophagogastroduodenoscopy, wireless capsule endoscopy (WCE) and colonoscopy to mitigate these issues, serving as an additional objective observer of the intestinal tract. The main objective of this narrative review is to provide a comprehensive overview of the research being performed within gastroenterology on artificial intelligence in esophagogastroduodenoscopy, WCE and colonoscopy. While other narrative reviews have been published regarding the use of artificial intelligence in esophagogastroduodenoscopy, WCE and colonoscopy, this narrative review goes a step further by providing a granular and more technical assessment of the literature. As such, this narrative review is intended for medical providers and researchers who are familiar with the use of artificial intelligence in esophagogastroduodenoscopy, WCE and colonoscopy and are interested in obtaining an in-depth review in a specific area.

Electronic databases Embase, Ovid Medicine, and PubMed were searched from inception to September 2022 using multiple search queries. Combinations of the terms “artificial intelligence”, “AI”, “computer aided”, “computer aided detection”, “CADe”, “convolutional neural network”, “deep learning”, “DCNN”, “machine learning”, “colonoscopy”, “endoscopy”, “wireless capsule endoscopy”, “capsule endoscopy”, “WCE”, “esophageal cancer”, “esophageal adenocarcinoma”, “esophageal squamous cell carcinoma”, “gastric cancer”, “gastric neoplasia”, “gastric lesions”, “Barrett’s esophagus”, “celiac disease”, “Helicobacter pylori”, “Helicobacter pylori infection”, “H pylori”, “H pylori infection”, “gastric ulcers”, “duodenal ulcers”, “inflammatory bowel disease”, “IBD”, “ulcerative colitis”, “Crohn’s disease”, “parasitic infections”, “hookworms”, “bleeding”, “gastrointestinal bleeding”, “vascular lesions”, “angioectasias”, “polyp”, “polyp detection”, “tumor”, “gastrointestinal tumor”, “small bowel tumor”, “bowel preparation”, “Boston bowel preparation scale”, “BBPS”, “adenoma”, “adenoma detection”, “adenoma detection rate”, “sessile serrated lesion”, and “sessile serrated lesion rate” were used. We subsequently narrowed the results to clinical trials in humans published within the last 10 years.

Barrett’s esophagus (BE) is a premalignant condition associated with esophageal adenocarcinoma (EAC)[7-9]. It is caused by chronic inflammation and tissue injury of the lower esophagus as a result of gastric reflux[7-9]. Early detection and diagnosis can prevent the progression of BE to EAC[7-9]. Patients with BE should undergo routine surveillance endoscopies to monitor for progression. However, even with surveillance, dysplastic changes can be easily missed[7]. To improve the detection of dysplastic changes in BE, researchers have focused on developing AI systems to assist with the identification of dysplasia and early neoplasia during endoscopic evaluation.

Since 2016, a group of researchers from the Netherlands has developed numerous AI systems to identify neoplastic lesions in BE[10-16]. Their first publication detailed their experience using a support vector machine (SVM), an ML method, to identify early neoplastic lesions in white light endoscopy (WLE) images[10]. Their SVM achieved a sensitivity and specificity of 83% for per-image detection, and a sensitivity of 86% and specificity of 87% for per-patient detection[10]. In their next study, the group trialed several different feature extraction and ML methods using volumetric laser endomicroscopy (VLE) images[11]. They obtained the best results with the feature extraction module “layering and signal decay statistics”, achieving high sensitivity (90%) and specificity (93%) with an area under the curve (AUC) of 0.95 for neoplastic lesion detection[11]. They then conducted a second study, again using ML in VLE to identify neoplastic lesions in BE, but with a multiframe analysis approach that included frames neighboring the region of interest[12]. Multiframe analysis resulted in a significantly higher median AUC than single-frame analysis (0.91 vs 0.83; P < 0.001)[12]. Continuing to use ML methods, the group published their findings from the ARGOS project, a consortium of three international tertiary referral centers for Barrett’s neoplasia[13]. In this study, de Groof et al[13] created a computer-aided detection (CADe) system that used an SVM to classify images. The group tested the CADe on 60 images: 40 from patients with a neoplastic lesion and 20 from patients with non-dysplastic Barrett’s esophagus. The CADe achieved an AUC of 0.92 and a sensitivity, specificity and accuracy of 95%, 85% and 92%, respectively, for detecting neoplastic lesions[13].
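
As a worked check, the de Groof et al[13] figures can be reproduced from a 2 × 2 confusion matrix. The counts below are back-calculated assumptions, not counts reported by the authors: 95% of 40 neoplastic images gives 38 true positives, and 85% of 20 non-dysplastic images gives 17 true negatives. The resulting accuracy of 55/60 ≈ 91.7% matches Table 1 and rounds to the 92% quoted in the text. The sketch also shows that accuracy is a weighted average of sensitivity and specificity, so it depends on the class mix of the test set.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # neoplastic images flagged as neoplastic
    fn: int  # neoplastic images missed
    tn: int  # non-dysplastic images correctly cleared
    fp: int  # non-dysplastic images wrongly flagged

    @property
    def sensitivity(self):
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self):
        return self.tn / (self.tn + self.fp)

    @property
    def accuracy(self):
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)


# Back-calculated (assumed) counts for 40 neoplastic and 20 non-dysplastic images.
cm = Confusion(tp=38, fn=2, tn=17, fp=3)
print(f"{cm.sensitivity:.1%} {cm.specificity:.1%} {cm.accuracy:.1%}")
# 95.0% 85.0% 91.7%
```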

Following their success with ML systems for neoplastic lesion detection, the group of researchers from the Netherlands shifted their focus to DL methods. In their first foray into DL, they developed a hybrid CADe system using architecture from the ResNet and U-Net models. The CADe was trained with 494364 labeled endoscopic images and subsequently refined with a dataset of 1247 WLE images. It was finally tested on a set of 297 images (129 with early neoplasia, 168 with non-dysplastic BE), where it attained a sensitivity of 87.6%, specificity of 88.6% and accuracy of 88.2% for identifying early neoplasia[14]. The system was also tested on two external validation sets, where it achieved similar results. A secondary outcome was whether, for images classified as containing neoplasia, the CADe could delineate the neoplasia and recommend a biopsy site, with expert endoscopists providing the ground truth. In two external datasets (external validation datasets 4 and 5), the CADe identified the optimal biopsy site in 97.2% and 91.9% of cases, respectively[14]. Using a similar hybrid CADe, the group performed a pilot study testing the CADe during live endoscopic procedures[15]. Overall, the CADe achieved a sensitivity of 75.8%, specificity of 86.5% and accuracy of 84% in per-image analyses[15]. Their most recent study again used the hybrid ResNet/U-Net CADe, this time to identify neoplastic lesions in narrow-band imaging (NBI)[16]. For NBI images, the CADe had a sensitivity of 88% (95%CI 86%-94%), specificity of 78% (95%CI 72%-84%), and accuracy of 84% (95%CI 81%-88%) for identifying BE neoplasia[16]. In per-frame and per-video analyses, the CADe achieved sensitivities of 75% and 85%, specificities of 90% and 83%, and accuracies of 85% and 83%, respectively[16].
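
Per-frame and per-video figures differ because a video contains many frames and needs a rule for turning frame-level outputs into a single call. The aggregation rule used by Struyvenberg et al[16] is not described here. The Python sketch below therefore uses a hypothetical rule: a video is flagged when at least a chosen fraction of its frames is flagged. The threshold, `min_fraction` and the scores are all illustrative assumptions.

```python
def frame_calls(scores, threshold=0.5):
    """Binarize per-frame CADe scores at a (hypothetical) decision threshold."""
    return [s >= threshold for s in scores]


def video_call(scores, threshold=0.5, min_fraction=0.3):
    """Hypothetical aggregation rule: flag a video as neoplastic when at
    least `min_fraction` of its frames are flagged."""
    calls = frame_calls(scores, threshold)
    return sum(calls) / len(calls) >= min_fraction


# Hypothetical scores for one neoplastic video in which the lesion is
# only clearly visible in some frames.
scores = [0.2, 0.7, 0.9, 0.4, 0.6, 0.1]
calls = frame_calls(scores)
print(f"frames flagged: {sum(calls)}/{len(calls)}")  # frames flagged: 3/6
print("video flagged:", video_call(scores))          # video flagged: True
```

For this single neoplastic video, per-frame sensitivity is only 50%, yet the video counts as one true positive. This is why per-video sensitivity can exceed per-frame sensitivity.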

Outside of this group from the Netherlands, several other researchers have created DL systems for the detection of BE neoplasia[17-21]. Hong et al[17] created a CNN that could distinguish between intestinal metaplasia, gastric metaplasia and neoplasia in endomicroscopy images from patients with Barrett’s esophagus, with an accuracy of 80.8%. Ebigbo et al[18] created a DL-CADe capable of detecting BE neoplasia with a sensitivity of 83.7%, specificity of 100.0% and accuracy of 89.9%. Two other groups achieved results similar to those of Ebigbo et al[18]. Hashimoto et al’s CNN detected early neoplasia with a sensitivity of 96.4%, specificity of 94.2%, and accuracy of 95.4%. Hussein et al’s CNN detected early neoplasia with a sensitivity of 91%, specificity of 79%, and area under the receiver operating characteristic curve (AUROC) of 0.93[19,20]. An overview of these studies is provided in Table 1.
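
Unlike sensitivity and specificity, which depend on a chosen decision threshold, the AUROC summarizes performance across all thresholds on a 0 to 1 scale. One standard way to compute it is as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (the Mann-Whitney formulation). The Python sketch below implements that definition. The scores are hypothetical and not taken from any study in Table 1.

```python
def auroc(pos_scores, neg_scores):
    """AUROC as the probability that a random positive outscores a random negative.

    Ties count as half a win (Mann-Whitney U statistic divided by n_pos * n_neg).
    """
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical CADe scores for neoplastic (positive) and non-dysplastic (negative) images.
pos = [0.95, 0.80, 0.70, 0.40]
neg = [0.60, 0.30, 0.20, 0.10]
print(auroc(pos, neg))  # 0.9375
```

Fifteen of the 16 positive/negative pairs are ranked correctly; only the positive scored 0.40 falls below the negative scored 0.60, giving 15/16 = 0.9375. An AUROC of 0.5 corresponds to chance-level ranking.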

| Ref. | Country | Study design | AI classifier | Lesions | Training dataset | Test dataset | Sensitivity (%) | Specificity (%) | Accuracy (%) | AUROC |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Swager et al[11], 2017 | Netherlands | Retrospective | ML2 methods | NPL | - | 60 VLE images | 90 | 93 | - | 0.95 |
| van der Sommen et al[10], 2016 | Netherlands | Retrospective | SVM | NPL | - | 100 WLE images | 83 | 83 | - | - |
| Hong et al[17], 2017 | South Korea | Retrospective | CNN | NPL, IM, GM | 236 endomicroscopy images | 26 endomicroscopy images | - | - | 80.77 | - |
| de Groof et al[13], 2019 | Netherlands, Germany, Belgium | Prospective | SVM | NPL | - | 60 WLE images | 95 | 85 | 91.7 | 0.92 |
| Ebigbo et al[21], 2019 | Germany, Brazil | Retrospective | CNN | EAC | | Augsburg dataset: 148 WLE images and NBI; MICCAI dataset: 100 WLE images | 97; 94<sup>a</sup>; 92 | 88; 80<sup>a</sup>; 100 | - | - |
| Ghatwary et al[24], 2019 | England, Egypt | Retrospective | Multiple CNNs | EAC | Images from 21 patients | Images from 9 patients | 96 | 92 | - | - |
| de Groof et al[14], 2020 | Netherlands, France, Sweden, Germany, Belgium, Australia | Ambispective | CNN | NPL | Dataset 1: 494364 images; Dataset 2: 1247 images; Dataset 3: 297 images | Dataset 3: 297 images; Dataset 4: 80 images; Dataset 5: 80 images | 90<sup>b</sup> | 87.5<sup>b</sup> | 88.8<sup>b</sup> | - |
| de Groof et al[15], 2020 | Netherlands, Belgium | Prospective | CNN | NPL | 495611 images | 20 patients; 144 WLE images | 75.8 | 86.5 | 84 | - |
| Ebigbo et al[18], 2020 | Germany, Brazil | Prospective | CNN | EAC | 129 images | 62 images | 83.7 | 100 | 89.9 | - |
| Hashimoto et al[19], 2020 | United States | Retrospective | CNN | NPL | 1374 images | 458 images | 96.4 | 94.2 | 95.4 | - |
| Struyvenberg et al[12], 2020 | Netherlands | Prospective | ML2 methods | NPL | - | 3060 VLE frames | - | - | - | 0.91 |
| Iwagami et al[25], 2021 | Japan | Retrospective | CNN | EJC | 3443 images | 232 images | 94 | 42 | 66 | - |
| Struyvenberg et al[16], 2021 | Netherlands, Sweden, Belgium | Retrospective | CNN | NPL | 495611 images | 157 NBI zoom videos; 30021 frames | 85<sup>1</sup>; 75 | 83<sup>1</sup>; 90 | 83<sup>1</sup>; 85 | - |
| Hussein et al[20], 2022 | England, Spain, Belgium, Austria | Prospective | CNN | DPL | 148936 frames | 264 iscan-1 images | 91 | 79 | - | 0.93 |

In addition to neoplasia detection, some groups started to use AI to grade BE and predict submucosal invasion of lesions. Ali et al[22] recently published the results from a pilot study using a DL system to quantitatively assess BE area (BEA), circumference and maximal length (C&M). They tested their DL system on 3D printed phantom esophagus models with different BE patterns and 194 videos from 131 patients with BE. In the phantom esophagus models, the DL system achieved an accuracy of 98.4% for BEA and 97.2% for C&M[22]. In the patient videos, the DL system differed from expert endoscopists by 8% and 7% for C&M respectively[22]. Ebigbo et al[23], building upon their earlier success using a DL CADe to detect neoplasia, performed a pilot study using a 101-layer CNN to differentiate T1a (mucosal) and T1b (submucosal) BE related cancers. Using 230 WLE images obtained from three tertiary care centers in Germany, their CNN was capable of discerning T1a lesions from T1b lesions with sensitivity, specificity and accuracy of 77%, 64% and 71% respectively, comparable to the expert endoscopists enrolled in the study[23].

Despite BE’s potential progression to EAC if left unmanaged, few studies have explicitly looked at using AI to detect EAC. Ghatwary et al[24] tested several DL models on 100 WLE images (50 featuring EAC, 50 featuring normal mucosa) to determine which was best at identifying EAC. They found that the Single-Shot Multibox Detector (SSD) method achieved the best results, attaining a sensitivity of 96% and specificity of 92%[24]. In 2021, Iwagami et al[25] focused on developing an AI system to identify esophagogastric junctional adenocarcinomas. They used SSD for their CNN, achieving a sensitivity, specificity and accuracy of 94%, 42% and 66% for detecting esophagogastric junctional adenocarcinomas. Their CNN performed similarly to endoscopists enrolled in the study (sensitivity 88%, specificity 43%, accuracy 66%)[25].

Esophageal squamous cell carcinoma (ESCC) is the most common histologic type of esophageal cancer in the world[26]. While certain imaging modalities such as Lugol’s chromoendoscopy and confocal microendoscopy are effective at improving the accuracy, sensitivity and specificity of targeted biopsies, they are expensive and not universally available[27]. In recent years, efforts have focused on developing AI systems to support lower cost imaging modalities in order to improve their ability to detect ESCC.

Shin et al[27] and Quang et al[28] created ML algorithms that they tested on high-resolution microendoscope images, obtaining comparable sensitivities for the detection of ESCC (98% and 95%, respectively). Following these studies, several groups created DL systems to detect ESCC[29-38]. In Cai et al’s study, a deep neural network-based CADe was tested on 187 WLE images and obtained good sensitivity (97.8%), specificity (85.4%) and accuracy (91.4%) for identifying ESCC[29]. Similar findings were reported in three separate studies that used deep convolutional neural networks (DCNNs) to detect ESCC in WLE[30-32]. Using NBI, Guo et al[33] created a CADe that achieved high sensitivity (98.0%) and specificity (95.0%) and an AUC of 0.99 for detecting ESCC in still images. Similar results were obtained in Li et al’s study[35]. In NBI video clips, Fukuda et al[34] obtained different results: a sensitivity (91%) similar to that of Guo et al[33] but a substantially lower specificity (51%). Three studies compared a DL-CADe with WLE to a DL-CADe with NBI for the detection of ESCC[32,35,36]. Their results were discordant, so it cannot yet be determined whether a DL-CADe performs better with WLE or with NBI for detecting ESCC.

Several studies have also used DL algorithms to assess ESCC invasion depth[39,40]. Everson et al[39] and Zhao et al[40] created CNNs to detect intrapapillary capillary loops, a feature of ESCC that correlates with invasion depth, in images obtained from magnification endoscopy with NBI. Their results were similar, with Everson et al’s CNN achieving an accuracy of 93.7% and Zhao et al’s an accuracy of 89.2%[39,40]. Two groups created DCNNs to detect ESCC invasion depth directly[41-43]. One group, from the Osaka International Cancer Institute, conducted two studies using SSD to create their DCNNs[41,42]. The DCNNs were designed to classify images as EP-SM1 or EP-SM2-3, as this distinction in ESCC bears clinical significance. The two studies (Nakagawa et al[41] and Shimamoto et al[42]) attained similar accuracies and specificities but substantially different sensitivities (90.1% for Nakagawa et al vs 50% and 71% for Shimamoto et al)[41,42]. The third study, Tokai et al[43], also used SSD for its DCNN and likewise programmed it to classify images as EP-SM1 or EP-SM2-3. Its sensitivity, specificity and accuracy (84.1%, 73.3% and 80.9%, respectively) were lower than those reported by Nakagawa et al[41].

Gastric cancer is the third leading cause of cancer-related mortality in the world[44,45]. Early detection of precancerous lesions or early gastric cancer with endoscopy can prevent progression to advanced disease[46]. However, a substantial number of upper gastrointestinal cancers are missed, placing patients at risk of developing interval cancers[45]. To mitigate this risk, AI systems are being developed to assist with lesion detection.

In 2013, Miyaki et al[47] used a bag-of-features framework with densely sampled scale-invariant feature transform descriptors to classify still images obtained from magnifying endoscopy with flexible spectral imaging color enhancement as having or not having gastric cancer. Their system, a rudimentary version of ML, obtained good sensitivity (84.8%), specificity (87.0%) and accuracy (85.9%) for identifying gastric cancer[47]. Using SVM, Kanesaka et al[48] found higher sensitivity (96.7%), specificity (95%) and accuracy (96.3%).
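
A bag-of-features framework turns a variable number of local descriptors into one fixed-length vector that a classifier can use. Each descriptor is assigned to its nearest "visual word" in a codebook, and the image is represented by the histogram of those assignments. The Python sketch below shows only the quantization and histogram step. As simplifying assumptions, the descriptors are hypothetical 2-D vectors (real dense SIFT descriptors are 128-dimensional) and the codebook is fixed by hand rather than learned, for example by k-means clustering. The resulting histogram would then be passed to a classifier; Table 2 lists an SVM for Miyaki et al[47].

```python
import math
from collections import Counter


def bag_of_features(descriptors, codebook):
    """Quantize each local descriptor to its nearest visual word and
    return a normalized histogram over the codebook."""
    counts = Counter(
        min(range(len(codebook)), key=lambda k: math.dist(d, codebook[k]))
        for d in descriptors
    )
    n = len(descriptors)
    return [counts[k] / n for k in range(len(codebook))]


# Hypothetical 2-D descriptors and codebook (visual words).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
patch_descriptors = [(0.1, 0.0), (0.9, 0.1), (1.1, -0.1), (0.0, 0.8)]
hist = bag_of_features(patch_descriptors, codebook)
print(hist)  # [0.25, 0.5, 0.25]
```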

Following these successes, several groups began using CNNs for the identification of gastric cancer[44-46,49-59]. In 2018, Hirasawa et al[44] published one of the first papers to use a CNN (SSD) to detect gastric cancer. In a test set of 2296 images, the CNN had a sensitivity of 92.2% for identifying gastric cancer lesions[44]. In a larger study, Tang et al[49] created a DCNN to detect gastric cancer in a test set of 9417 images and 26 endoscopy videos. On this test set, the DCNN performed well, achieving a sensitivity of 95.5% (95%CI 94.8%–96.1%), specificity of 81.7% (95%CI 80.7%–82.8%), accuracy of 87.8% (95%CI 87.1%–88.5%) and AUC of 0.94[49]. The DCNN continued to perform well in external validation sets, achieving a sensitivity of 85.9%-92.1%, specificity of 84.4%-90.3%, accuracy of 85.1%-91.2% and AUC of 0.89-0.93[49]. Compared with expert endoscopists, the DCNN attained higher sensitivity, specificity and accuracy. In the video set, the DCNN achieved a sensitivity of 88.5% (95%CI 71.0%-96.0%)[49]. Several other studies using DCNNs to detect gastric cancer in endoscopy images obtained sensitivities, specificities and accuracies similar to those of Tang et al[49]. One study reported a sensitivity of only 58.4% for detecting gastric cancer, but the sensitivity of the study’s 67 endoscopists was lower still, at 31.9%[55].
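
The width of a confidence interval reflects how many cases a proportion is based on. This explains why the video-set interval above (71.0%-96.0%) is much wider than the image-set intervals. The Python sketch below uses the Wilson score interval under two assumptions not stated in this review: that the 88.5% video sensitivity corresponds to 23 of 26 videos (23/26 ≈ 88.5%), and that a Wilson interval was the method used. Under those assumptions, the computed interval matches the reported one.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score confidence interval for a binomial proportion (z=1.96 for 95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - margin, center + margin


# Assumed: 23 of 26 videos detected.
lo, hi = wilson_interval(23, 26)
print(f"{23 / 26:.1%} (95%CI {lo:.1%}-{hi:.1%})")  # 88.5% (95%CI 71.0%-96.0%)
```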

Recently, several groups from China and Japan have published studies using CNNs with magnified endoscopy with NBI (ME-NBI) in an effort to improve early gastric cancer detection[56-59]. Using a 22-layer CNN, Horiuchi et al[56] achieved a sensitivity, specificity and accuracy of 95.4%, 71.0% and 85.3%, respectively, for identifying early gastric cancer in a set of 258 ME-NBI images (151 gastric cancer, 107 gastritis). The same group published a similar study the following year, this time using ME-NBI videos instead of still images[57]. They obtained similar results: a sensitivity of 87.4% (95%CI 78.8%-92.8%), specificity of 82.8% (95%CI 73.5%-89.3%) and accuracy of 85.1% (95%CI 79.0%-89.6%)[57]. Hu et al[58] and Ueyama et al[59], in their studies using CNNs to identify gastric cancer in ME-NBI, achieved sensitivities, specificities and accuracies similar to those of Horiuchi et al[56]. An overview of these studies is provided in Table 2.

| Ref. | Country | Study design | AI classifier | Lesions | Training dataset | Test dataset | Sensitivity (%) | Specificity (%) | Accuracy (%) | AUROC |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Miyaki et al[47], 2013 | Japan | Prospective<sup>a</sup> | SVM | Gastric cancer | 493 FICE-derived magnifying endoscopic images | 92 FICE-derived magnifying endoscopic images | 84.8 | 87 | 85.9 | - |
| Kanesaka et al[48], 2018 | Japan, Taiwan | Retrospective | SVM | EGC | 126 M-NBI images | 81 M-NBI images | 96.7 | 95 | 96.3 | - |
| Wu et al[50], 2019 | China | Retrospective | CNN | EGC | 9151 images | 200 images | 94 | 91 | 92.5 | - |
| Cho et al[51], 2019 | South Korea | Ambispective | CNN | Advanced gastric cancer, EGC, high grade dysplasia, low grade dysplasia, non-neoplasm | 4205 WLE images | 812 WLE images; 200 WLE images | - | - | 86.6<sup>b</sup>; 76.4 | 0.877<sup>b</sup> |
| Tang et al[49], 2020 | China | Retrospective | CNN | EGC | 35823 WLE images | Internal: 9417 WLE images; External: 1514 WLE images<sup>1</sup> | 95.5<sup>1</sup>; 85.9-92.1 | 81.7<sup>1</sup>; 84.4-90.3 | 87.8<sup>1</sup>; 85.1-91.2 | 0.94<sup>1</sup>; 0.887-0.925 |
| Namikawa et al[52], 2020 | Japan | Retrospective | CNN | Gastric cancer | 18410 images | 1459 images | 99 | 93.3 | 99 | - |
| Horiuchi et al[56], 2020 | Japan | Retrospective | CNN | EGC | 2570 M-NBI images | 258 M-NBI images | 95.4 | 71 | 85.3 | 0.852 |
| Horiuchi et al[57], 2020 | Japan | Retrospective | CNN | EGC | 2570 M-NBI images | 174 videos | 87.4 | 82.8 | 85.1 | 0.8684 |
| Guo et al[54], 2021 | China | Retrospective | CNN | Gastric cancer, erosions/ulcers, polyps, varices | 293162 WLE images | 33959 WLE images | 67.5<sup>2</sup>; 85.1 | 70.9<sup>2</sup>; 90.3 | - | - |
| Ikenoyama et al[55], 2021 | Japan | Retrospective | CNN | EGC | 13584 WLE and NBI images | 2940 WLE and NBI images | 58.4 | 87.3 | - | - |
| Hu et al[58], 2021 | China | Retrospective | CNN | EGC | M-NBI images from 170 patients | Internal: M-NBI images from 73 patients; External: M-NBI images from 52 patients | 79.2<sup>3</sup>; 78.2 | 74.5<sup>3</sup>; 74.1 | 77<sup>3</sup>; 76.3 | 0.808<sup>3</sup>; 0.813 |
| Ueyama et al[59], 2021 | Japan | Retrospective | CNN | EGC | 5574 M-NBI images | 2300 M-NBI images | 98 | 100 | 98.7 | - |
| Yuan et al[53], 2022 | China | Retrospective | CNN | EGC, advanced gastric cancer, submucosal tumor, polyp, peptic ulcer, erosion, and lesion-free gastric mucosa | 29809 WLE images | 1579 WLE images | 59.2<sup>4</sup>; 100 | 99.3<sup>4</sup>; 98.1 | 93.5<sup>4</sup>; 98.4 | - |

Of increasing interest to researchers in this field is using AI to predict the invasion depth of gastric cancer. Few studies have used CNNs to predict invasion depth[60-63]. Yoon et al[60] created a CNN to predict gastric cancer lesion depth from standard endoscopy images. The CNN achieved good sensitivity (79.2%) and specificity (77.8%) for differentiating T1a (mucosal) from T1b (submucosal) gastric cancers (AUC 0.851)[60]. Also using standard endoscopy images, Zhu et al[61] attained similar results. They trained their CNN to distinguish P0 lesions (restricted to the mucosa or invading < 0.5 mm beyond the muscularis mucosae) from P1 lesions (invading ≥ 0.5 mm beyond the muscularis mucosae). The CNN achieved a sensitivity of 76.6%, specificity of 95.6%, accuracy of 89.2% and AUROC of 0.94 (95%CI 0.90-0.97)[61]. Cho et al[62], using DenseNet-161, and Nagao et al[63], using ResNet50, obtained results comparable to those of Zhu et al[61] for predicting gastric cancer invasion depth from endoscopy images.

In recent years, numerous studies have been published on the use of AI to assist with the detection and classification of gastric lesions. Although few of these studies were designed specifically to detect gastric and duodenal ulcers, several report data on ulcer detection.

Using YOLOv5, a deep learning object detection model, Ku et al[64] created a CADe system capable of detecting multiple gastric lesions with good precision (98%) and sensitivity (89%). Also using YOLO for their DCNN, Yuan et al[53] achieved an overall system accuracy of 85.7% for gastric lesion identification. For peptic ulcer detection, their system achieved an accuracy of 95.4% (93.5%-97.2%), sensitivity of 86.2% (77.5%-94.8%) and specificity of 96.8% (95.1%-98.4%)[53]. Guo et al[54] used ResNet50 to construct a CADe designed to detect gastric lesions. Their CADe achieved lower sensitivity (71.4%; 95%CI 69.5%-73.2%) and specificity (70.9%; 95%CI 70.3%-71.4%) than Yuan et al’s DCNN[53]. However, Guo et al[54] combined erosions and ulcers into one category for analysis. With the primary outcome of classifying gastric cancers and ulcers, Namikawa et al[52] developed a CNN capable of identifying gastric ulcers with high sensitivity (93.3%; 95%CI 87.3%-97.1%) and specificity (99.0%; 95%CI 94.6%-100%).
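
Object detectors such as YOLO output bounding boxes rather than one label per image. For detectors, true negatives are not well defined, which is why precision (the fraction of predicted boxes that are correct) is usually reported alongside sensitivity (recall). The Python sketch below assumes a common convention: a predicted box counts as correct when its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5. The matching rule used by Ku et al[64] is not stated in this review, and the boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predicted boxes to ground-truth boxes.

    A prediction is a true positive if its best remaining match has
    IoU >= iou_threshold; confidence scores are ignored for simplicity.
    """
    unmatched = list(ground_truth)
    tp = 0
    for box in predictions:
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            tp += 1
            unmatched.remove(best)
    precision = tp / len(predictions) if predictions else 0.0
    recall = tp / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Hypothetical boxes: two annotated lesions, three boxes predicted by a detector.
truth = [(10, 10, 50, 50), (100, 100, 140, 140)]
preds = [(12, 12, 52, 52), (102, 98, 142, 138), (200, 200, 220, 220)]
print(precision_recall(preds, truth))
```

Both lesions are found, so sensitivity (recall) is 1.0. The third box matches nothing, so precision is 2/3. Full evaluation protocols usually also rank predictions by confidence score, which this sketch omits.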

Because Helicobacter pylori (H. pylori) infection is a risk factor for the future development of gastric cancer, early detection and eradication in infected individuals is important. Endoscopic evaluation for H. pylori is highly operator dependent[65]. Pairing artificial intelligence with endoscopy for the detection of H. pylori could reduce false-positive and false-negative results.

Shichijo et al[66] used GoogLeNet, a 22-layer DCNN, to evaluate 11481 images obtained from 397 patients (72 H. pylori positive, 325 negative) for the presence or absence of H. pylori infection. GoogLeNet attained a sensitivity of 81.9% (95%CI 71.1%-90.0%), specificity of 83.4% (95%CI 78.9%-87.3%) and accuracy of 83.1% (95%CI 79.1%-86.7%), with an AUROC of 0.89, for detecting H. pylori infection[66]. Its sensitivity, specificity and accuracy were comparable to those of the endoscopists enrolled in the study[66]. The same group published a second study in 2019, again using GoogLeNet for their DCNN but with a different optimization technique to train it[67]. The DCNN was tasked with classifying images as H. pylori positive, negative or eradicated. In a set of 23699 images, the DCNN attained an accuracy of 80% for H. pylori negative, 84% for H. pylori eradicated, and 48% for H. pylori positive images[67]. Also using GoogLeNet, Itoh et al[68] obtained a sensitivity (86.7%) and specificity (86.7%) similar to those in Shichijo et al’s 2017 study[66]. Using ResNet-50 as the backbone of their DCNN, Zheng et al[69] successfully classified images as H. pylori positive or negative, achieving a sensitivity, specificity, accuracy and AUC of 81.4% (95%CI 79.8%-82.9%), 90.1% (95%CI 88.4%-91.7%), 84.5% (95%CI 83.3%-85.7%) and 0.93 (95%CI 0.92-0.94), respectively.

Taking a different approach, Yasuda et al[70] used linked color imaging (LCI) with SVM to identify H. pylori infection. The LCI images were divided into high-hue and low-hue images based on redness and then classified by SVM as H. pylori positive or negative. This method attained a sensitivity, specificity and accuracy of 90.4%, 85.7% and 87.6%, respectively[70]. Combining LCI with a deep learning CADe system, Nakashima et al[71] achieved a sensitivity, specificity and accuracy of 92.5%, 80.0% and 84.2% for identifying H. pylori negative images; 62.5%, 92.5% and 82.5% for H. pylori positive images; and 65%, 86.2% and 79.2% for H. pylori post-eradication images, respectively.

While immunological tests can support the diagnosis of celiac disease, definitive diagnosis requires histological assessment of duodenal biopsies[72]. Being able to identify changes in the duodenal mucosa consistent with celiac disease is therefore important. However, these changes can be subtle and difficult to appreciate. Few studies have been published using a CADe system to detect or diagnose celiac disease.

In 2016, Gadermayr et al[73] created a system that combined expert knowledge acquisition with feature extraction to classify duodenal images obtained from 290 children as Marsh-0 (normal mucosa) or Marsh-3 (villous atrophy). Expert knowledge acquisition was achieved by having one of three study endoscopists assign a Marsh grade of 0 or 3 to each image. Feature extraction was accomplished using one of three methods: (1) multi-resolution local binary patterns; (2) multi-fractal spectrum; and (3) improved Fisher vectors. Using the expert labels and the extracted features, their classification algorithm identified images as Marsh-0 or Marsh-3. With optimal settings, the classification algorithm achieved an accuracy of 95.6%-99.6%[73]. Also in 2016, Wimmer et al[74] used CNNs with varying numbers of convolutional blocks to detect celiac disease in a set of 1661 images (986 images of normal mucosa, 675 images of celiac disease). Their CNN achieved the best overall classification rate (90.3%) with 4 convolutional blocks[74]. Taking this a step further, they combined the 4-block CNN with an SVM, which increased the overall classification rate by 6.7%[74]. While interesting, Gadermayr et al’s method requires human intervention, and the paper’s methodology is quite complicated[73], largely because of the extensive number of systems tested. Wimmer et al[74] provided a simpler method that attained a good overall classification rate.
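
Local binary patterns (LBP), one of the feature extraction methods named above, describe texture by comparing each pixel with its neighbors. Each neighbor that is at least as bright as the center contributes a 1 bit, and the resulting 8-bit codes are summarized as a histogram over the image. The Python sketch below shows the basic radius-1, 8-neighbor version on a grayscale image stored as a list of rows. The neighbor ordering is an arbitrary assumption. The multi-resolution variant used by Gadermayr et al[73] repeats the computation at several radii and combines the histograms, which is not reproduced here.

```python
def lbp_code(patch):
    """8-neighbour local binary pattern code for the centre of a 3x3 patch.

    Neighbours are read clockwise from the top-left; each neighbour >= centre
    contributes a 1 bit.
    """
    c = patch[1][1]
    neighbours = [patch[0][0], patch[0][1], patch[0][2], patch[1][2],
                  patch[2][2], patch[2][1], patch[2][0], patch[1][0]]
    code = 0
    for bit, value in enumerate(neighbours):
        if value >= c:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    rows, cols = len(image), len(image[0])
    for r in range(1, rows - 1):
        for col in range(1, cols - 1):
            patch = [row[col - 1:col + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    total = sum(hist)
    return [h / total for h in hist]


print(lbp_code([[5, 9, 1], [4, 6, 7], [2, 8, 3]]))  # 42
```

In the example, only the neighbors 9, 7 and 8 are at least as bright as the center value 6, setting bits 1, 3 and 5 (2 + 8 + 32 = 42). A classifier then compares images through their LBP histograms rather than raw pixels.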

Few studies have assessed the utility of AI in the detection of celiac disease using WCE. In 2017, Zhou et al[75] trained GoogLeNet, a DCNN, to identify celiac disease using clips obtained during WCE. Their DCNN achieved a sensitivity and specificity of 100% for identifying patients with celiac disease from 10 WCE videos (5 from patients with celiac disease, 5 from healthy controls)[75]. Similarly, Wang et al[76] used DL to diagnose celiac disease from WCE videos. However, their CNN used a block-wise channel squeeze-and-excitation attention module, a newer architectural unit thought to better mimic human visual perception[76]. Their system attained an accuracy of 95.9%, sensitivity of 97.2% and specificity of 95.6% for diagnosing celiac disease.
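
The idea behind a squeeze-and-excitation unit is to let the network reweight its own feature channels. It first "squeezes" each channel to one summary number, then "excites" those numbers through a small two-layer network that outputs a weight between 0 and 1 per channel, and finally rescales each channel by its weight. The Python sketch below is a minimal version of the generic squeeze-and-excitation block, written with plain lists. The weights, channel count and reduction size are hypothetical, biases are omitted, and the block-wise variant used by Wang et al[76] is not reproduced.

```python
import math


def squeeze_excite(feature_maps, w1, w2):
    """Generic squeeze-and-excitation over C feature maps (each a list of rows).

    squeeze:    global average pooling gives one summary number per channel;
    excitation: FC -> ReLU -> FC -> sigmoid turns them into channel weights in (0, 1);
    scale:      every value in a channel is multiplied by that channel's weight.
    Biases are omitted for brevity.
    """
    squeezed = [sum(map(sum, fm)) / (len(fm) * len(fm[0])) for fm in feature_maps]
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    scaled = [[[v * g for v in row] for row in fm]
              for fm, g in zip(feature_maps, gates)]
    return scaled, gates


# Hypothetical input: 2 channels of 2x2 activations, reduced to 1 hidden unit.
maps = [[[1.0, 1.0], [1.0, 1.0]],   # channel 0: strongly activated
        [[0.0, 0.0], [0.0, 0.0]]]   # channel 1: silent
w1 = [[1.0, 1.0]]        # C=2 -> 1 hidden unit
w2 = [[2.0], [-2.0]]     # 1 hidden unit -> C=2 gates
scaled, gates = squeeze_excite(maps, w1, w2)
print([round(g, 3) for g in gates])  # [0.881, 0.119]
```

With these weights the active channel is kept (weight ≈ 0.88) and the other is suppressed (≈ 0.12). In a trained network the weights in `w1` and `w2` are learned, so the emphasis on each channel adapts to the input image.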

WCE is often used in patients with inflammatory bowel disease (IBD) to detect small bowel ulcers and erosions. Although computed tomography enterography and MRI have been used to detect areas of disease activity and inflammation along the gastrointestinal tract in patients with IBD, these imaging modalities can miss early or small lesions. WCE can directly visualize lesions, but endoscopists reviewing the video may miss lesions or mistake imaging artifacts for lesions. AI systems could help reduce these errors. Several studies have used AI in WCE to detect intestinal changes consistent with Crohn’s disease[77-83].

To discriminate ulcers from normal mucosa in Crohn’s disease, Charisis et al[78] proposed pre-processing images with bidimensional ensemble empirical mode decomposition and differential lacunarity, followed by classification using several ML algorithms and a multilayer neural network. Using a dataset of 87 ulcer and 87 normal mucosa images, their CADe achieved an accuracy of 89.0%-95.4%, sensitivity of 88.2%-98.8% and specificity of 84.2%-96.6%[78]. Subsequently, in 2016, Charisis and Hadjileontiadis combined hybrid adaptive filtering and differential lacunarity (HAF-DLac) to process images, followed by SVM, to detect Crohn’s disease related lesions in WCE[79]. In a set of 800 WCE images, the HAF-DLac system achieved a sensitivity, specificity and accuracy of 95.2%, 92.4% and 93.8%, respectively, for detecting lesions[79]. Using a similar approach to Charisis et al[78], Kumar et al[80] extracted MPEG-7 edge, color and texture features from images and then used SVM to detect lesions in patients with Crohn’s disease and classify them. Their system, tested against 533 images (212 normal mucosa, 321 images with lesions), obtained an accuracy of 93.0%-93.8% for detecting lesions and an accuracy of 78.5% for classifying them based on severity.

Few groups have used deep learning algorithms in WCE to identify Crohn’s disease related lesions. Recently, Ferreira et al[82] used a DCNN to identify erosions and ulcers in patients with Crohn’s disease. Their DCNN achieved a sensitivity of 98.0%, specificity of 99.0%, accuracy of 98.8% and AUROC of 1.00. Interestingly, Klang et al[83] developed a DCNN to detect intestinal strictures. Overall, their DCNN achieved an accuracy of 93.5% ± 6.7% and AUC of 0.989 for detecting strictures.
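An AUROC of 1.00, as reported by Ferreira et al, means that every lesion image received a higher model score than every normal image. A minimal sketch of that pairwise interpretation follows (it is equivalent to the Mann-Whitney U statistic divided by the number of positive-negative pairs); the scores are hypothetical, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
from typing import Sequence


def auroc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """AUROC as the probability that a randomly chosen positive image scores
    higher than a randomly chosen negative image (ties count as one half)."""
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical model outputs, not data from any cited study.
    lesion = [0.97, 0.91, 0.88]
    normal = [0.12, 0.40, 0.35]
    print(auroc(lesion, normal))  # 1.0: perfect separation
```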

Three studies have used AI to detect hookworms using WCE. The first to publish on this topic was Wu et al[84] in 2016. Using SVM, they created a system that achieved a specificity of 99.0% and accuracy of 98.4% for detecting hookworms in WCE[84]. However, the system’s sensitivity was only 11.1%. He et al[85] created a DCNN using a novel deep hookworm detection framework that modeled the tubular appearance of hookworms. Their DCNN had an accuracy of 88.5% for identifying hookworms[85]. Gan et al[86] performed a similar study, finding an AUC of 0.97 (95%CI 0.967-0.978), sensitivity of 92.2%, specificity of 91.1% and accuracy of 91.2%. Together, these three studies suggest a possible utility of AI for diagnosing hookworm infections, although the 11.1% sensitivity of the earliest system shows that a high overall accuracy can coexist with poor detection of the target lesion.
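Accuracy is dominated by the abundant normal frames when target frames are rare, which is how a detector can report high accuracy with low sensitivity. The sketch below uses hypothetical counts (9 hookworm frames among 1000, not data from any cited study) to make the arithmetic explicit; the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # lesion frames flagged as lesion
    fn: int  # lesion frames missed
    tn: int  # normal frames correctly passed
    fp: int  # normal frames wrongly flagged

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)


if __name__ == "__main__":
    # Hypothetical: 9 hookworm frames among 1000 (not Wu et al's data).
    c = ConfusionCounts(tp=1, fn=8, tn=981, fp=10)
    print(f"sens={c.sensitivity():.3f} spec={c.specificity():.3f} acc={c.accuracy():.3f}")
    # sens=0.111 spec=0.990 acc=0.982
```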

One of the most common reasons to perform WCE is to evaluate for gastrointestinal bleeding after prior endoscopic attempts have failed to localize a source. Since the implementation of WCE in clinical practice, many methods, notably AI, have been employed to improve the detection of gastrointestinal sources of bleeding.

Several studies have used supervised learning to identify bleeding in WCE. In 2014, Sainju et al[87] used an ML algorithm to interpret color-quantized images and determine whether bleeding was present. One of their models achieved a sensitivity, specificity and accuracy of 96%, 90% and 93%, respectively[87]. Using SVM, Usman et al[88] achieved similar results, with a sensitivity, specificity and accuracy of 94%, 91% and 92%, respectively.

More recently, several groups have created DCNNs to identify bleeding and sources of bleeding in WCE. In 2021, Ghosh et al[89] used a system composed of two CNNs (CNN-1, CNN-2) to classify WCE images as bleeding or non-bleeding and then to identify sources of bleeding within the bleeding images. For classifying images as bleeding or non-bleeding, CNN-1 had a sensitivity, specificity, accuracy and AUC of 97.5%, 99.9%, 99.4% and 0.99, respectively[89]. For identifying sources of bleeding within the bleeding images, CNN-2 had an accuracy of 94.4% and intersection over union (IoU) of 90.7%[89].
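IoU measures how well a predicted region overlaps the reference annotation: the area of their intersection divided by the area of their union. The text does not state whether Ghosh et al computed IoU on bounding boxes or on pixel masks; this sketch assumes axis-aligned boxes given as hypothetical (x_min, y_min, x_max, y_max) tuples.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0 = no overlap, 1 = identical)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(0.0, inter_w) * max(0.0, inter_h)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (10, 10, 50, 50)  # hypothetical model box
    annotated = (20, 20, 60, 60)  # hypothetical expert box
    print(round(iou(predicted, annotated), 3))  # 0.391
```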

In 2020, Tsuboi et al[90] published the first study to use a DCNN to detect small bowel angioectasias from WCE images. In their test set, which included 488 images of small bowel angioectasias and 10000 images of normal small bowel mucosa, their DCNN achieved an AUC of 0.99 with sensitivity and specificity of 98.8% and 98.4%, respectively[90]. Similarly, in 2021 Ribeiro et al[91] developed a DCNN to identify vascular lesions, categorizing them by bleeding risk according to Saurin’s classification: P0 – no hemorrhagic potential, P1 – uncertain/intermediate hemorrhagic potential (red spots), and P2 – high hemorrhagic potential (angioectasias, varices). In their validation set, the DCNN had a sensitivity, specificity, accuracy and AUROC of 91.7%, 95.3%, 94.1% and 0.97, respectively, for identifying P1 lesions[91]. For P2 lesions, the network had a sensitivity, specificity, accuracy and AUROC of 94.1%, 95.1%, 94.8% and 0.98, respectively[91]. This group published a similar study in 2022, this time using their DCNN to detect and differentiate mucosal erosions and ulcers based on bleeding potential[92]. Saurin’s classification was again used, with P1 lesions further labeled as mucosal erosions or small ulcers and P2 lesions as large ulcers (> 2 cm)[92]. The DCNN achieved an overall sensitivity of 90.8% ± 4.7%, specificity of 97.1% ± 1.7%, and accuracy of 93.4% ± 3.3% in their test set of 1226 images[92]. For the detection of mucosal erosions (P1), their DCNN achieved a sensitivity of 87.2%, specificity of 95.0% and accuracy of 93.3% with AUROC of 0.98 (95%CI 0.97-0.99)[92]. For small ulcers (P1), their DCNN achieved a sensitivity of 86.4%, specificity of 96.9% and accuracy of 94.5% with AUROC of 0.99 (95%CI 0.97-1.00)[92]. Finally, for large ulcers (P2), their DCNN achieved a sensitivity of 95.3%, specificity of 99.2% and AUROC of 1.00 (95%CI 0.98-1.00)[92].
A third study by this group, which developed a DCNN to identify colonic lesions and luminal blood/hematic vestiges, had similar findings. In their training set of 1801 images, the DCNN achieved an overall sensitivity, specificity and accuracy of 96.3%, 98.2%, and 97.6%, respectively[93]. For detecting mucosal lesions, the DCNN achieved a sensitivity of 92.0%, specificity of 98.5% and AUROC of 0.99 (95%CI 0.98-1.00)[93]. For luminal blood/hematic vestiges, the DCNN achieved a sensitivity of 99.5%, specificity of 99.8% and AUROC of 1.00 (95%CI 0.99-1.00)[93].

Gastrointestinal tumors can be difficult to discern from normal mucosa and thus pose a greater diagnostic challenge than other lesions on traditional WCE[94]. As such, an AI system that aids in the detection of these easy-to-miss lesions could be beneficial.

Several groups have developed ML systems to aid with detection. Using SVM, Li et al[95] developed a system capable of detecting small bowel tumors with sensitivity, specificity and accuracy of 88.6%, 96.2% and 92.4%, respectively. Similarly, Liu et al[96] and Faghih Dinevari et al[97] used SVM to identify tumors in WCE; however, they used different image pre-processing algorithms. Liu et al[96] used discrete curvelet transform to pre-process images before classification by SVM. Their ML system achieved a sensitivity of 97.8% ± 0.5%, specificity of 96.7% ± 0.4% and accuracy of 97.3% ± 0.5% for identifying small bowel tumors[96]. Faghih Dinevari et al[97] relied on discrete wavelet transform and singular value decomposition for image pre-processing before classification by SVM. Their system achieved a sensitivity of 94.0%, specificity of 93.0% and accuracy of 93.5% for identifying small bowel tumors[97]. Sundaram and Santhiyakumari built upon these methodologies, using a region of interest-based color histogram to enhance WCE images before classification by two SVM algorithms, SVM1 and SVM2[98]. SVM1 classified each WCE image as normal or abnormal. Images classified as abnormal by SVM1 were further classified by SVM2 as benign, malignant or normal[98]. The system attained an overall sensitivity of 96.0%, specificity of 95.4% and accuracy of 95.7% for small bowel tumor detection and classification[98].
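Sundaram and Santhiyakumari’s two-stage design routes only images flagged as abnormal to a second classifier. The control flow can be sketched as follows, assuming hypothetical threshold rules in place of the trained SVMs and hypothetical two-element feature vectors in place of their color histogram features.

```python
from typing import Callable, Sequence

Features = Sequence[float]
Classifier = Callable[[Features], str]


def two_stage_classify(features: Features, stage1: Classifier, stage2: Classifier) -> str:
    """Stage 1 screens normal vs abnormal; only images it flags as abnormal
    reach stage 2, which labels them benign, malignant or normal."""
    if stage1(features) == "normal":
        return "normal"
    return stage2(features)


# Hypothetical threshold rules standing in for trained SVM1 and SVM2.
def screen(f: Features) -> str:
    return "abnormal" if f[0] > 0.5 else "normal"


def grade(f: Features) -> str:
    if f[1] > 0.7:
        return "malignant"
    return "benign" if f[1] > 0.3 else "normal"


if __name__ == "__main__":
    for feats in ([0.2, 0.9], [0.8, 0.9], [0.8, 0.5], [0.8, 0.1]):
        print(feats, two_stage_classify(feats, screen, grade))
```

Because stage 2 only sees images that passed the screen, the second classifier can specialize on the harder benign vs malignant distinction.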

With respect to DL methods, Blanes-Vidal et al[99] created a DCNN to autonomously detect and localize colorectal polyps. Their study included 255 patients who underwent WCE and standard colonoscopy for positive fecal immunochemical tests. Of the 255 patients, 131 had at least 1 polyp. The DCNN obtained a sensitivity of 97.1%, specificity of 93.3% and accuracy of 96.4% for detecting polyps in WCE[99]. Saraiva et al[100] and Mascarenhas et al[101] similarly used DCNNs to detect colonic polyps in WCE and obtained results similar to those of Blanes-Vidal et al[99-101]. Using an ANN, Constantinescu et al[102] created a DL system able to detect small bowel polyps with sensitivity of 93.6% and specificity of 91.4%. For gastric polyps and tumors, Xia et al[103] created a novel CNN, a region-based convolutional neural network (RCNN), to evaluate magnetically controlled capsule endoscopy (MCE) images. Tested on 201365 MCE images obtained from 100 patients, the RCNN detected gastric polyps with sensitivity of 96.5%, specificity of 94.8%, accuracy of 94.9% and AUC of 0.898 (95%CI 0.84-0.96)[103]. For submucosal tumors, the RCNN achieved a sensitivity of 87.2%, specificity of 95.3%, accuracy of 95.2% and AUC of 0.88 (95%CI 0.81-0.96)[103]. Taking a different approach, Yuan and Meng used a novel deep learning method, a stacked sparse autoencoder with image manifold constraint, to identify intestinal polyps on WCE, reporting an accuracy of 98.00% for polyp detection[104]. However, sensitivity, specificity and AUC were not reported.

Inadequate bowel preparation, present in 15% to 35% of colonoscopies, is associated with lower rates of cecal intubation, lower adenoma detection rate (ADR), and higher rates of procedure-related adverse events[105,106]. For patients with inadequate bowel preparation, the United States Multi-Society Task Force on Colorectal Cancer (MSTF), which represents the American College of Gastroenterology, the American Gastroenterological Association and the American Society for Gastrointestinal Endoscopy (ASGE), and the European Society of Gastrointestinal Endoscopy recommend repeating colonoscopy within 1 year[105,107-109]. In addition, the MSTF and ASGE recommend that endoscopists document bowel preparation quality at the time of colonoscopy[108,109].

Despite these recommendations and the variety of bowel preparation rating scales available, documentation of bowel preparation quality remains variable, with studies reporting appropriate documentation in 20% to 88% of colonoscopies[110-112]. Few studies have been published on the use of DCNNs to assist in the objective assessment of bowel preparation. The first group to do so, Zhou et al[113] in 2019, found that their DCNN (ENDOANGEL) was more accurate (93.3%) at grading the bowel preparation quality of still images than novice (< 1 year of experience performing colonoscopies; 75.91%), senior (1-3 years of experience performing colonoscopies; 74.36%) and expert (> 3 years of experience performing colonoscopies; 55.11%) endoscopists. When tested on colonoscopy videos, ENDOANGEL remained accurate at grading bowel preparation quality (89.04%)[113].

Building upon their experience with ENDOANGEL, Zhou et al[114] created a new system using two DCNNs: DCNN1 filtered out unqualified frames, while DCNN2 classified images by Boston Bowel Preparation Scale (BBPS) score. The BBPS is a validated rating scale for assessing bowel preparation quality[115]. Colonic segments are scored from 0 to 3: segments that cannot be evaluated because of solid stool that cannot be removed receive a score of 0, whereas segments that can be easily evaluated and contain minimal to no stool receive a score of 3[115]. Zhou et al’s DCNN2 classified images into two categories: well-prepared (BBPS score 2-3) and poorly prepared (BBPS score 0-1)[114]. There was no significant difference between the dual DCNN system and study endoscopists in the proportion of unqualified images (28.35% vs 29.58%, P = 0.285) or in e-BBPS scores (7.81% vs 8.74%, P = 0.088). In addition, a strong inverse relationship between e-BBPS and ADR (ρ = -0.976, P = 0.022) was found.
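The dual-DCNN workflow can be sketched as a frame filter followed by a per-frame BBPS classifier whose output is dichotomized as described above. In this hypothetical sketch, `None` stands in for a frame rejected by the quality filter and integers stand in for DCNN2’s BBPS predictions; the summary fractions are illustrative and are not claimed to match how Zhou et al defined e-BBPS.

```python
from typing import Dict, Iterable, Optional


def prep_category(segment_score: int) -> str:
    """Dichotomize a BBPS score: 2-3 well-prepared, 0-1 poorly prepared."""
    if segment_score not in (0, 1, 2, 3):
        raise ValueError("BBPS scores range from 0 to 3")
    return "well-prepared" if segment_score >= 2 else "poorly prepared"


def summarize_frames(frame_scores: Iterable[Optional[int]]) -> Dict[str, float]:
    """None marks a frame rejected by the quality filter (the DCNN1 step);
    integers are per-frame BBPS predictions (the DCNN2 step)."""
    scores = list(frame_scores)
    qualified = [s for s in scores if s is not None]
    poor = sum(1 for s in qualified if prep_category(s) == "poorly prepared")
    return {
        "unqualified_fraction": (len(scores) - len(qualified)) / len(scores) if scores else 0.0,
        "poorly_prepared_fraction": poor / len(qualified) if qualified else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical frame stream: 2 of 6 frames rejected, 2 of 4 kept frames poorly prepared.
    print(summarize_frames([None, 3, 1, 0, None, 2]))
```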

Two other groups developed dual DCNN systems similar to that of Zhou et al[114] to calculate BBPS and obtained concordant findings[116,117]. Lee et al[116] tested their system on colonoscopy videos and found that it had an accuracy of 85.3% and AUC of 0.918 for detecting adequate bowel preparation. Using still images, Low et al’s system determined bowel preparation adequacy with 98% accuracy and subclassified images by BBPS with 91% accuracy[117].

Taking a different approach, Wang et al[118] built a U-Net-based DCNN to automatically segment fecal matter in still images. Compared with images segmented by endoscopists, U-Net achieved an accuracy of 94.7%.
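The text does not specify how Wang et al computed accuracy against endoscopist segmentations. Assuming a pixel-wise comparison of binary masks, a sketch follows; it also computes the Dice coefficient, a common overlap measure that, unlike pixel accuracy, is not inflated by large areas of background. The masks and function names are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # 1 = fecal matter, 0 = background


def _check_shapes(pred: Mask, ref: Mask) -> None:
    if len(pred) != len(ref) or any(len(p) != len(r) for p, r in zip(pred, ref)):
        raise ValueError("masks must have the same shape")


def pixel_accuracy(pred: Mask, ref: Mask) -> float:
    """Fraction of pixels whose predicted label matches the reference label."""
    _check_shapes(pred, ref)
    total = sum(len(row) for row in ref)
    agree = sum(p == r for prow, rrow in zip(pred, ref) for p, r in zip(prow, rrow))
    return agree / total


def dice(pred: Mask, ref: Mask) -> float:
    """Dice overlap of the foreground: 2 * |P and R| / (|P| + |R|)."""
    _check_shapes(pred, ref)
    inter = sum(p & r for prow, rrow in zip(pred, ref) for p, r in zip(prow, rrow))
    size = sum(map(sum, pred)) + sum(map(sum, ref))
    return 2 * inter / size if size else 1.0  # both masks empty: treat as perfect overlap


if __name__ == "__main__":
    predicted = [[1, 0], [1, 1]]  # hypothetical model mask
    reference = [[1, 0], [0, 1]]  # hypothetical endoscopist mask
    print(pixel_accuracy(predicted, reference), dice(predicted, reference))  # 0.75 0.8
```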

Colonoscopy is essential for the assessment of IBD, as it allows real-time evaluation of colonic inflammation[119,120]. Although endoscopic scoring systems are available to quantify disease activity, assessment is operator-dependent, resulting in high interobserver variability[119-121]. Recent efforts have focused on using AI to objectively grade colonic inflammation[121,122].

Several studies have investigated using DCNNs to classify images obtained from patients with ulcerative colitis (UC) according to endoscopic inflammation scoring systems. The most commonly used endoscopic scoring system in these studies is the Mayo Endoscopic Score (MES), in which physicians assign a score from 0 to 3 based on the absence or presence of erythema, friability, erosions, ulceration and bleeding[123]. A score of 0 indicates normal or inactive mucosa, whereas a score of 3 indicates severe disease activity[123]. In 2018, Ozawa et al[121] published the first study to use a DCNN to classify still images obtained from patients with UC into MES 0 vs MES 1-3 and MES 0-1 vs MES 2-3. Their DCNN had an AUROC of 0.86 (95%CI 0.84-0.87) and 0.98 (95%CI 0.97-0.98) for differentiating MES 0 vs MES 1-3 and MES 0-1 vs MES 2-3, respectively[121]. Stidham et al[122] performed a similar study and found an AUROC of 0.966 (95%CI 0.967-0.972) for differentiating still images into MES 0-1 vs MES 2-3. Using a combined deep learning and machine learning system, Huang et al[124] achieved an AUC of 0.938 and accuracy of 94.5% for identifying MES 0-1 vs MES 2-3 from still images. While the binary classification used in these studies can differentiate remission/mucosal healing (MES 0-1) from active inflammation (MES 2-3), the exact MES also has clinical significance[125,126]. Bhambhvani and Zamora created a DCNN to assign individual MESs to still images. The model achieved an AUC of 0.89, 0.86 and 0.96 for classifying images into MES 1, MES 2 and MES 3, respectively, with an average specificity of 85.7%, average sensitivity of 72.4% and overall accuracy of 77.2%[127].
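The binary groupings above are thresholds on the ordinal MES. In the small sketch below (function names hypothetical), threshold 1 yields MES 0 vs 1-3, threshold 2 yields MES 0-1 vs 2-3, and the three thresholds together give cumulative binary targets whose sum recovers the exact score. This is a common way to frame ordinal labels, not necessarily how any cited group trained its model.

```python
from typing import List


def mes_binary(mes: int, threshold: int) -> int:
    """1 if MES >= threshold else 0.
    threshold=1: MES 0 vs 1-3; threshold=2: MES 0-1 (remission) vs 2-3 (active)."""
    if mes not in (0, 1, 2, 3):
        raise ValueError("MES ranges from 0 to 3")
    return int(mes >= threshold)


def cumulative_targets(mes: int) -> List[int]:
    """Three binary targets [MES>=1, MES>=2, MES>=3]; their sum equals the MES."""
    return [mes_binary(mes, k) for k in (1, 2, 3)]


if __name__ == "__main__":
    for score in range(4):
        targets = cumulative_targets(score)
        print(score, targets, sum(targets) == score)
```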

To simulate how MES is assigned in practice, several groups developed DL systems to predict MES from colonoscopy videos. Yao et al’s DCNN showed good agreement with MES scoring by gastroenterologists in their internal video test set (κ = 0.84; 95%CI 0.75-0.92), but it did not perform as well in the external video test set (κ = 0.59; 95%CI 0.46-0.71)[128]. Gottlieb et al[129] reported findings similar to those of Yao et al[128], with good agreement between their DCNN and MES scoring by gastroenterologists (quadratic weighted kappa of 0.844; 95%CI 0.787–0.901). Gutierrez Becker et al[130] created a DL system designed to perform multiple binary tasks: discriminating MES < 1 vs MES ≥ 1, MES < 2 vs MES ≥ 2, and MES < 3 vs MES ≥ 3. For these tasks, their DL system attained an AUROC of 0.84, 0.85 and 0.85, respectively.
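Quadratic weighted kappa, reported by Gottlieb et al, penalizes disagreements by the squared distance between scores, so a 0 vs 3 disagreement costs nine times as much as a 0 vs 1 disagreement. A minimal sketch of the standard formula follows, assuming integer MES ratings from two raters (for example, a model and a gastroenterologist); the ratings in the usage example are hypothetical.

```python
from typing import List, Sequence


def quadratic_weighted_kappa(rater_a: Sequence[int], rater_b: Sequence[int],
                             n_classes: int = 4) -> float:
    """Cohen's kappa with quadratic disagreement weights w_ij = (i - j)^2 / (K - 1)^2.
    Scores must be integers 0..n_classes-1 (MES: 0..3, so n_classes=4)."""
    if n_classes < 2:
        raise ValueError("need at least two classes")
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and paired")
    n = len(rater_a)
    observed: List[List[int]] = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        if not (0 <= a < n_classes and 0 <= b < n_classes):
            raise ValueError("score out of range")
        observed[a][b] += 1
    marg_a = [sum(observed[i]) for i in range(n_classes)]
    marg_b = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    weighted_obs = weighted_exp = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            w = (i - j) ** 2 / (n_classes - 1) ** 2
            weighted_obs += w * observed[i][j] / n              # observed proportion
            weighted_exp += w * marg_a[i] * marg_b[j] / n ** 2  # chance-expected proportion
    if weighted_exp == 0:
        return 1.0  # degenerate case: both raters used one identical score throughout
    return 1.0 - weighted_obs / weighted_exp


if __name__ == "__main__":
    model = [0, 1, 2, 3, 2, 1]   # hypothetical model MES predictions
    expert = [0, 1, 2, 3, 3, 1]  # hypothetical gastroenterologist MES
    print(round(quadratic_weighted_kappa(model, expert), 3))
```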

A group from Japan published several studies using AI on endoscopic images to predict histologic activity in patients with UC[131-134]. Their first study, in 2016, used machine learning to predict persistent histologic inflammation[131]. Their system attained a sensitivity of 74% (95%CI 65%-81%), specificity of 97% (95%CI 95%-99%) and accuracy of 91% (95%CI 83%-95%) for predicting persistent histologic inflammation in still images[131]. Their subsequent studies used a deep neural network named DNUC (deep neural network for evaluation of UC) to identify endoscopic remission and histologic remission[132,134]. In still images, DNUC had a sensitivity of 93.3% (95%CI 92.2%–94.3%), specificity of 87.8% (95%CI 87.0%–88.4%) and diagnostic accuracy of 90.1% (95%CI 89.2%–90.9%) for determining endoscopic remission[132]. With respect to histologic remission, DNUC had a sensitivity of 92.4% (95%CI 91.5%–93.2%), specificity of 93.5% (95%CI 92.6%–94.3%) and diagnostic accuracy of 92.9% (95%CI 92.1%–93.7%)[132]. In colonoscopy videos, DNUC showed a sensitivity of 81.5% (95%CI 78.5%–83.9%) and specificity of 94.7% (95%CI 92.5%–96.4%) for endoscopic remission[134]. For histologic remission, DNUC had a sensitivity of 97.9% (95%CI 97.0%–98.5%) and specificity of 94.6% (95%CI 91.1%–96.9%) in colonoscopy videos[134].

To date, only one study has used an AI system to distinguish normal from inflamed colonic mucosa in Crohn’s disease[135]. The group combined a DCNN with a long short-term memory (LSTM) network, a type of recurrent neural network that uses previous inputs to inform the interpretation of its current input, and applied the system to confocal laser endomicroscopy images. Their DCNN-LSTM system attained an accuracy of 95.3% and AUC of 0.98 for differentiating normal from inflamed mucosa[135].

Colorectal cancer is the third most common malignancy and second leading cause of cancer-related mortality in the world[136]. While colonoscopy is the gold standard for detection and treatment of premalignant and malignant lesions, a substantial number of adenomas are missed[137,138]. As such, efforts have focused on using AI to improve ADR and decrease adenoma miss rate (AMR).

At present, numerous pilot, validation and prospective studies[139-161], randomized controlled trials[162-174], and systematic reviews and meta-analyses[175-183] have been published on the use of AI for the detection of colonic polyps. Furthermore, commercially available AI systems exist for both polyp detection and interpretation. In the systematic reviews and meta-analyses published on this topic, AI-assisted colonoscopy has consistently been associated with higher ADR, polyp detection rate (PDR) and adenomas per colonoscopy (APC) than standard colonoscopy[175-183]. Several large randomized controlled trials supporting these findings have recently been published. Shaukat et al[162] reported a multicenter randomized controlled trial comparing CADe colonoscopy with standard colonoscopy in 1359 patients: 677 randomized to standard colonoscopy and 682 to CADe colonoscopy. The CADe colonoscopy group had a numerically higher ADR (47.8% vs 43.9%; P = 0.065) and a significantly higher APC (1.05 vs 0.83; P = 0.002). However, the CADe colonoscopy group also had fewer sessile serrated lesions per colonoscopy (0.20 vs 0.28; P = 0.042) and a lower sessile serrated lesion detection rate (12.6% vs 16.0%; P = 0.092)[162]. In the CADeT-CS trial, a multicenter, single-blind, randomized tandem colonoscopy study comparing CADe colonoscopy with high-definition white light colonoscopy, Glissen Brown et al[163] found a similarly higher ADR (50.44% vs 43.64%; P = 0.3091) and APC (1.19 vs 0.90; P = 0.0323) in patients who underwent CADe colonoscopy first[163]. Additionally, the polyp miss rate (PMR) (20.70% vs 33.71%; P = 0.0007), AMR (20.12% vs 31.25%; P = 0.0247), and sessile serrated lesion miss rate (7.14% vs 42.11%; P = 0.0482) were lower in the CADe colonoscopy first group.
Kamba et al[164] conducted a multicenter, randomized tandem colonoscopy study, similar in design to that of Glissen Brown et al[163], comparing CADe colonoscopy with standard colonoscopy. In patients who underwent CADe colonoscopy first, they found lower AMR (13.8% vs 26.7%; P < 0.0001), PMR (14.2% vs 40.6%; P < 0.0001), and sessile serrated lesion miss rate (13.0% vs 38.5%; P = 0.03), as well as higher ADR (64.5% vs 53.6%; P = 0.036) and PDR (69.8% vs 60.9%; P = 0.084)[164]. As in Shaukat et al[162], the sessile serrated lesion detection rate was lower in the CADe colonoscopy first group than in the standard colonoscopy first group (7.6% vs 8.1%; P = 0.866)[164]. Similar increases in ADR, APC and PDR were reported in other randomized controlled trials[166-172], including those by Xu et al[172], Liu et al[173], Repici et al[170], Gong et al[166], Wang et al[167], and Su et al[169].
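The outcome measures in these trials can be computed from per-procedure adenoma counts. The sketch below uses hypothetical counts and function names; it also illustrates one possible reason APC can rise significantly while the change in ADR does not reach significance, as in Shaukat et al: finding extra adenomas in patients who already have one raises APC but not ADR. The tandem miss rate follows the usual definition, lesions found only on the second pass divided by all lesions found across both passes.

```python
from typing import Sequence


def adr(adenomas_per_procedure: Sequence[int]) -> float:
    """Adenoma detection rate: share of colonoscopies with at least one adenoma."""
    return sum(1 for k in adenomas_per_procedure if k > 0) / len(adenomas_per_procedure)


def apc(adenomas_per_procedure: Sequence[int]) -> float:
    """Adenomas per colonoscopy: total adenomas divided by number of colonoscopies."""
    return sum(adenomas_per_procedure) / len(adenomas_per_procedure)


def tandem_miss_rate(found_first_pass: int, found_only_second_pass: int) -> float:
    """Lesions missed on the first pass but found on the second, over all lesions found."""
    total = found_first_pass + found_only_second_pass
    return found_only_second_pass / total if total else 0.0


if __name__ == "__main__":
    standard = [0, 1, 0, 2]  # hypothetical adenoma counts per colonoscopy
    cade = [0, 2, 0, 3]      # same patients, more adenomas found in already-positive cases
    print(adr(standard), adr(cade))  # 0.5 0.5  (ADR unchanged)
    print(apc(standard), apc(cade))  # 0.75 1.25 (APC rises)
    print(tandem_miss_rate(80, 20))  # 0.2
```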

The majority of AI-assisted colonoscopy studies focus on adenoma detection. Although these studies report sessile serrated lesion rates, these are often secondary outcomes, even though sessile serrated lesions are the precursors of 15%-30% of all colorectal cancers[184]. Few studies have created AI systems optimized for detecting sessile serrated lesions. Recently, Yoon et al[184] used a generative adversarial network (GAN) to generate synthetic endoscopic images of sessile serrated lesions, which were used to augment the training data of their DCNN with the aim of improving sessile serrated lesion detection. In the validation set, which comprised 1141 images of polyps and 1000 normal images, their best performing GAN-DCNN model, GAN-aug2, achieved a sensitivity of 95.44% (95%CI 93.71%-97.17%), specificity of 90.10% (95%CI 88.38%-91.77%), accuracy of 92.95% (95%CI 91.86%-94.04%) and AUROC of 0.96 (95%CI 0.9547-0.9709)[184]. In a type-separated polyp validation dataset, GAN-aug2 achieved a sensitivity of 95.24%, 19.1% higher than the DCNN without augmentation[184]. Given the small number of sessile serrated lesions in the initial set, Yoon et al[184] collected an additional 130 images depicting 133 sessile serrated lesions to create a further validation set, the SSL temporal validation dataset[184]. GAN-aug2 continued to outperform the DCNN without augmentation (sensitivity 93.98% vs 84.21%). Nemoto et al[185] created a DCNN to differentiate (1) tubular adenomas from serrated lesions; and (2) serrated lesions from hyperplastic polyps. In their 215-image training set, the DCNN differentiated tubular adenomas from sessile serrated lesions with a sensitivity of 72% (95%CI 62%-81%), specificity of 89% (95%CI 82%-94%), accuracy of 82% (95%CI 77%-87%) and AUC of 0.86 (95%CI 0.80-0.91).
For differentiating sessile serrated lesions from hyperplastic polyps, the DCNN achieved a sensitivity of 17% (95%CI 7%-32%), specificity 85% (95%CI 76%-92%), accuracy 63% (95%CI 54%-72%) and AUC 0.55 (95%CI 0.44-0.66)[185]. An overview of studies investigating the detection accuracy of CADe is provided in Table 3. An overview of studies investigating ADR and PDR using CADe is provided in Table 4.

Table 3 Overview of studies investigating the detection accuracy of computer-aided detection

| Ref. | Country | Study design | Lesions | Training dataset | Test dataset | Sensitivity (%) | Specificity (%) | Accuracy (%) | AUROC |
|---|---|---|---|---|---|---|---|---|---|
| Komeda et al[139], 2017 | Japan | Retrospective | Adenomas | 1200 images | 10 images | 80 | 60 | 70 | - |
| Misawa et al[140], 2018 | Japan | Retrospective | Polyps | 411 video clips | 135 video clips | 90 | 63.3 | 76.5 | 0.87 |
| Wang et al[149], 2018 | China, United States | Retrospective | Polyps | 4495 images | Dataset A: 27113 images; Dataset C: 138 video clips; Dataset D: 54 full-length videos | Dataset A: 94.38; Dataset C: 91.64 | Dataset A: 95.92; Dataset D: 95.4 | - | Dataset A: 0.984 |
| Horiuchi et al[154], 2019 | Japan | Prospective | Diminutive polyps | - | ᵃ | 80 | 95.3 | 91.5 | - |
| Hassan et al[141], 2020 | Italy, United States | Retrospective | Polyps | - | 338 video clips | 99.7 | - | - | - |
| Guo et al[142], 2021 | Japan | Retrospective | Polyps | 1991 images | 100 video clips; 15 full videos | 87ᵇ | 98.3ᵇ | - | - |
| Neumann et al[143], 2021 | Germany | Retrospective¹ | Polyps | > 500 videos | 240 polyps within full-length videos | 100 | 0 | - | - |
| Li et al[144], 2021 | Singapore | Retrospective | Polyps | 6038 images | 2571 images | 74.1 | 85.1 | - | - |
| Livovsky et al[151], 2021 | Israel | Ambispective | Polyps | 3611 h of videos | 1393 h of videos | 97.1 | 0 | - | - |
| Pfeifer et al[158], 2021 | Germany, Italy, Netherlands | Retrospective | Polyps | 10467 images | 45 videos | 90 | 80 | - | 0.92 |
| Ahmad et al[145], 2022² | England | Prospective | Polyps | Dataset A: 58849 frames; Dataset B: 10993 videos and still images | Dataset C: 110985 frames; Dataset D: 8950 frames; Dataset E: 542484 frames | Dataset C: 100, 84.1; Dataset D&E: 98.9, 85.2 | Dataset C: 79.6; Dataset D&E: 79.3 | - | - |
| Hori et al[146], 2022 | Japan | Prospective | Polyps | 1456 images | 600 images | 97 | 97.7 | 97.3 | - |
| Pacal et al[152], 2022 | Turkey | Retrospective | Polyps | Used images from 3 publicly available datasets (SUN, PICCOLO, Etis-Larib) to create training and test datasets | | 91.04 | - | - | - |
| Yoon et al[184], 2022 | South Korea | Retrospective | SSL | 4397 images | Validation Set 2106; SSL Temporal Validation set 133 | 95.44; 93.89 | 90.1 | 92.95 | 0.96 |
| Nemoto et al[185], 2022 | Japan | Retrospective | TA, SSL | 1849 images | 400 images | 72 | 89 | 82 | 0.86 |
| Lux et al[148], 2022 | Germany | Retrospective | Polyps | 506338 images | 41 full-length videos | - | - | 95.3 | - |

Table 4 Overview of studies investigating adenoma detection rate and polyp detection rate using computer-aided detection

| Ref. | Country | Study design | Patients, CADe (n) | Patients, SC (n) | PDR, CADe (%) | PDR, SC (%) | PDR, P value | ADR, CADe (%) | ADR, SC (%) | ADR, P value |
|---|---|---|---|---|---|---|---|---|---|---|
| Wang et al[168], 2019 | China, United States | Randomized | 522 | 536 | 45.02 | 29.1 | < 0.001 | 29.12 | 20.34 | < 0.001 |
| Becq et al[155], 2020 | United States, Turkey, Costa Rica | Prospective | 50ᵇ | | 82 | 62 | Not reported | - | - | - |
| Gong et al[166], 2020 | China | Randomized | 355 | 349 | 47 | 34 | 0.0016 | 16 | 8 | 0.001 |
| Liu et al[171], 2020 | China, United States | Randomized | 393 | 397 | 47.07 | 33.25 | < 0.001 | 29.01 | 20.91 | 0.009 |
| Liu et al[173], 2020 | China | Prospective | 508 | 518 | 43.65 | 27.81 | < 0.001 | 39.1 | 23.89 | < 0.001 |
| Repici et al[170], 2020 | Italy, Kuwait, United States, Germany | Randomized | 341 | 344 | - | - | - | 54.8 | 40.4 | < 0.001 |
| Su et al[169], 2020 | China | Randomized | 308 | 315 | 38.3 | 25.4 | 0.001 | 28.9 | 16.5 | < 0.001 |
| Wang et al[156], 2020 | China, United States | Prospective, Tandem¹ | 184 | 185 | 65.59 | 55.14 | 0.099 | 42.39 | 35.68 | 0.186 |
| Wang et al[167], 2020 | China, United States | Randomized | 484 | 478 | 52 | 37 | < 0.0001 | 34 | 28 | 0.03 |
| Kamba et al[164], 2021 | Japan | Randomized, Tandem² | 172 | 174 | 69.8 | 60.9 | 0.084 | 64.5 | 53.6 | 0.036 |
| Luo et al[174], 2021 | China | Randomized, Tandem¹ | 72 | 78 | 38.7 | 34 | < 0.001 | - | - | - |
| Pfeifer et al[158], 2021 | Germany, Italy, Netherlands | Prospective, Tandem¹ | 42ᵇ | | 50 | 38 | 0.023 | 36 | 26 | 0.044 |
| Shaukat et al[157], 2021 | United States, England | Prospective | 83 | 283 | - | - | - | 54.2 | 40.6 | 0.028 |
| Shen et al[150], 2021 | China | Ambispective | 64 | 64 | 78.1 | 56.3 | 0.008 | 53.1 | 29.7 | 0.007 |
| Xu et al[172], 2021 | China | Randomized | 1177 | 1175 | 38.8 | 36.2 | 0.183 | - | - | - |
| Glissen Brown et al[163], 2022 | China, United States | Randomized, Tandem² | 113 | 110 | 70.8 | 65.45 | 0.3923 | 50.44 | 43.64 | 0.3091 |
| Ishiyama et al[159], 2022 | Japan, Norway | Prospective | 918 | 918 | 59 | 52.1 | 0.003 | 26.4 | 19.9 | 0.001 |
| Lux et al[148], 2022 | Germany | Retrospective | 41 | - | - | - | - | - | 41.5 | - |
| Quan et al[153], 2022 | United States | Prospective | 300 | 300 | - | - | - | 43.7ᵃ; 66.7 | 37.8ᵃ; 59.72 | 0.37ᵃ; 0.35 |
| Repici et al[165], 2022 | Italy, Switzerland, United States, Germany | Randomized | 330 | 330 | - | - | - | 53.3 | 44.5 | 0.017 |
| Shaukat et al[162], 2022 | United States | Randomized | 682 | 677 | 64.4 | 61.2 | 0.242 | 47.8 | 43.9 | 0.065 |
| Zippelius et al[160], 2022 | Germany, United States | Prospective | 150ᵇ | | - | - | - | 50.7 | 52 | 0.5 |

Artificial intelligence is in its early stages in medicine, especially in gastroenterology and endoscopy. AI is likely to help in two areas: “augmentation”, as is already happening with polyp detection and interpretation, and “automation”, such as eliminating electronic paperwork by using natural language processing for procedure documentation. AI systems have repeatedly been shown to identify gastrointestinal lesions with high sensitivity, specificity and accuracy. While lesion detection is important, it is only the beginning of AI’s utility in esophagogastroduodenoscopy, WCE and colonoscopy.

After refining their AI systems for lesion detection, several groups discussed in this narrative review added further functions to them. In BE, ESCC and gastric cancer, several AI systems could predict tumor invasion depth. Within IBD, AI systems generated endoscopic disease severity scores. One group trained their CADe to recommend neoplasia biopsy sites in BE[14]. Future efforts should be dedicated to developing these functions, testing them in real time and having AI systems provide management recommendations when clinically appropriate.

Other areas in need of future research include using AI systems to make histologic predictions, to assist with positioning of the endoscopic ultrasound (EUS) transducer and interpretation of EUS images, to detect biliary diseases and make therapeutic recommendations in endoscopic retrograde cholangiopancreatography (ERCP), and, in combination with endoscopic mechanical attachments, to improve colorectal cancer screening and surveillance. While endoscopists may perform optical biopsies of gastrointestinal lesions to predict histology and make real-time management decisions, these predictions are highly operator-dependent and often require expensive equipment that is not readily available. Thus, an AI system capable of performing objective optical biopsies, especially in WLE, could preserve the quality of histologic predictions, be cost effective, and avoid the risks associated with endoscopic biopsy and resection.

Similarly, EUS is highly operator-dependent, requiring endoscopists to place the transducer in specific positions to obtain adequate views of the hepatopancreatobiliary system. Research should focus on using AI systems to assist with appropriate transducer positioning and perform real-time EUS image analysis[186-194].

Presently, several clinical studies are actively recruiting patients to evaluate the utility of AI systems in ERCP. Of particular interest is the diagnosis and management of biliary diseases. Some groups are planning to use AI to classify bile duct lesions and provide biopsy site recommendations[195]. One group is planning to use an AI system in patients requiring biliary stents to assist with biliary stent choice and stent placement[196]. It will be interesting to see how AI performs in these tasks as successes could pave the way for future studies investigating the utility of AI systems to make real-time management recommendations.

Although this narrative review focused on AI in colonoscopy, there is growing interest in using endoscopic mechanical attachments during colonoscopy to improve polyp detection in colorectal cancer screening and surveillance. AI systems and endoscopic mechanical attachments have each been shown to increase ADR and PDR, but few studies have investigated how combining the two affects these metrics. Future research should examine the impact of combining these modalities on ADR and PDR.

Although substantial advances have been made in AI, the technology is not without limitations. In many of the studies discussed in this narrative review, the authors trained their AI systems on internally obtained images labeled by a single endoscopist, so the AI is subject to the same operator biases and human errors as that endoscopist[1,197]. In addition, training sets built from internally obtained data may carry institutional or geographic biases, resulting in AI systems that are biased and not generalizable[197]. As AI continues to progress, large datasets composed of high-quality images should be created and used to train AI systems and reduce these biases[1].

With the implementation of AI in clinical practice, accountability for medical errors must also be addressed. Although many of the AI systems discussed in this narrative review report high detection accuracies, none is perfect, and errors in detection and diagnosis will inevitably arise when these technologies are used. Regulatory bodies are needed to continually monitor these AI systems and to address problems as they arise[198].

In this narrative review, we provide an objective overview of AI-related research in esophagogastroduodenoscopy, WCE and colonoscopy. We aimed to be comprehensive by searching several electronic databases, including Embase, Ovid Medicine and PubMed; nevertheless, some publications pertinent to this review may have been missed.

Undoubtedly, AI in esophagogastroduodenoscopy, WCE and colonoscopy is rapidly evolving: within 10 years, research has moved from supervised learning algorithms tested retrospectively to large, multicenter clinical trials of fully autonomous systems. The systems developed by these researchers show promise for detecting lesions, diagnosing conditions and monitoring disease. Indeed, two of the computer-aided detection systems for colorectal polyps discussed in this narrative review were approved by the United States Food and Drug Administration in 2021[171,199]. The question is therefore no longer whether, but when, AI will be integrated into clinical practice, and medical providers at all levels of training should prepare to incorporate AI systems into routine practice.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): C

Grade D (Fair): D

Grade E (Poor): 0

P-Reviewer: Liu XQ, China; Qi XS, China; S-Editor: Liu JH; L-Editor: A; P-Editor: Liu JH

| 1. | Kröner PT, Engels MM, Glicksberg BS, Johnson KW, Mzaik O, van Hooft JE, Wallace MB, El-Serag HB, Krittanawong C. Artificial intelligence in gastroenterology: A state-of-the-art review. World J Gastroenterol. 2021;27:6794-6824. |

| 2. | Kaul V, Enslin S, Gross SA. History of artificial intelligence in medicine. Gastrointest Endosc. 2020;92:807-812. |

| 3. | Deo RC. Machine Learning in Medicine. Circulation. 2015;132:1920-1930. |

| 4. | Le Berre C, Sandborn WJ, Aridhi S, Devignes MD, Fournier L, Smaïl-Tabbone M, Danese S, Peyrin-Biroulet L. Application of Artificial Intelligence to Gastroenterology and Hepatology. Gastroenterology. 2020;158:76-94.e2. |

| 5. | Christou CD, Tsoulfas G. Challenges and opportunities in the application of artificial intelligence in gastroenterology and hepatology. World J Gastroenterol. 2021;27:6191-6223. |

| 6. | Pannala R, Krishnan K, Melson J, Parsi MA, Schulman AR, Sullivan S, Trikudanathan G, Trindade AJ, Watson RR, Maple JT, Lichtenstein DR. Artificial intelligence in gastrointestinal endoscopy. VideoGIE. 2020;5:598-613. |

| 7. | Hussein M, González-Bueno Puyal J, Mountney P, Lovat LB, Haidry R. Role of artificial intelligence in the diagnosis of oesophageal neoplasia: 2020 an endoscopic odyssey. World J Gastroenterol. 2020;26:5784-5796. |

| 8. | Sharma P. Barrett Esophagus: A Review. JAMA. 2022;328:663-671. |

| 10. | van der Sommen F, Zinger S, Curvers WL, Bisschops R, Pech O, Weusten BL, Bergman JJ, de With PH, Schoon EJ. Computer-aided detection of early neoplastic lesions in Barrett's esophagus. Endoscopy. 2016;48:617-624. |

| 11. | Swager AF, van der Sommen F, Klomp SR, Zinger S, Meijer SL, Schoon EJ, Bergman JJGHM, de With PH, Curvers WL. Computer-aided detection of early Barrett's neoplasia using volumetric laser endomicroscopy. Gastrointest Endosc. 2017;86:839-846. |

| 12. | Struyvenberg MR, van der Sommen F, Swager AF, de Groof AJ, Rikos A, Schoon EJ, Bergman JJ, de With PHN, Curvers WL. Improved Barrett's neoplasia detection using computer-assisted multiframe analysis of volumetric laser endomicroscopy. Dis Esophagus. 2020;33. |

| 13. | de Groof J, van der Sommen F, van der Putten J, Struyvenberg MR, Zinger S, Curvers WL, Pech O, Meining A, Neuhaus H, Bisschops R, Schoon EJ, de With PH, Bergman JJ. The Argos project: The development of a computer-aided detection system to improve detection of Barrett's neoplasia on white light endoscopy. United European Gastroenterol J. 2019;7:538-547. |

| 14. | de Groof AJ, Struyvenberg MR, van der Putten J, van der Sommen F, Fockens KN, Curvers WL, Zinger S, Pouw RE, Coron E, Baldaque-Silva F, Pech O, Weusten B, Meining A, Neuhaus H, Bisschops R, Dent J, Schoon EJ, de With PH, Bergman JJ. Deep-Learning System Detects Neoplasia in Patients With Barrett's Esophagus With Higher Accuracy Than Endoscopists in a Multistep Training and Validation Study With Benchmarking. Gastroenterology. 2020;158:915-929.e4. |

| 15. | de Groof AJ, Struyvenberg MR, Fockens KN, van der Putten J, van der Sommen F, Boers TG, Zinger S, Bisschops R, de With PH, Pouw RE, Curvers WL, Schoon EJ, Bergman JJGHM. Deep learning algorithm detection of Barrett's neoplasia with high accuracy during live endoscopic procedures: a pilot study (with video). Gastrointest Endosc. 2020;91:1242-1250. |

| 16. | Struyvenberg MR, de Groof AJ, van der Putten J, van der Sommen F, Baldaque-Silva F, Omae M, Pouw R, Bisschops R, Vieth M, Schoon EJ, Curvers WL, de With PH, Bergman JJ. A computer-assisted algorithm for narrow-band imaging-based tissue characterization in Barrett's esophagus. Gastrointest Endosc. 2021;93:89-98. |

| 17. | Hong J, Park BY, Park H. Convolutional neural network classifier for distinguishing Barrett's esophagus and neoplasia endomicroscopy images. Annu Int Conf IEEE Eng Med Biol Soc. 2017;2017:2892-2895. |

| 18. | Ebigbo A, Mendel R, Probst A, Manzeneder J, Prinz F, de Souza LA Jr, Papa J, Palm C, Messmann H. Real-time use of artificial intelligence in the evaluation of cancer in Barrett's oesophagus. Gut. 2020;69:615-616. |

| 19. | Hashimoto R, Requa J, Dao T, Ninh A, Tran E, Mai D, Lugo M, El-Hage Chehade N, Chang KJ, Karnes WE, Samarasena JB. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett's esophagus (with video). Gastrointest Endosc. 2020;91:1264-1271.e1. |

| 20. | Hussein M, González-Bueno Puyal J, Lines D, Sehgal V, Toth D, Ahmad OF, Kader R, Everson M, Lipman G, Fernandez-Sordo JO, Ragunath K, Esteban JM, Bisschops R, Banks M, Haefner M, Mountney P, Stoyanov D, Lovat LB, Haidry R. A new artificial intelligence system successfully detects and localises early neoplasia in Barrett's esophagus by using convolutional neural networks. United European Gastroenterol J. 2022;10:528-537. |

| 21. | Ebigbo A, Mendel R, Probst A, Manzeneder J, Souza LA Jr, Papa JP, Palm C, Messmann H. Computer-aided diagnosis using deep learning in the evaluation of early oesophageal adenocarcinoma. Gut. 2019;68:1143-1145. |

| 22. | Ali S, Bailey A, Ash S, Haghighat M; TGU Investigators, Leedham SJ, Lu X, East JE, Rittscher J, Braden B. A Pilot Study on Automatic Three-Dimensional Quantification of Barrett's Esophagus for Risk Stratification and Therapy Monitoring. Gastroenterology. 2021;161:865-878.e8. |

| 23. | Ebigbo A, Mendel R, Rückert T, Schuster L, Probst A, Manzeneder J, Prinz F, Mende M, Steinbrück I, Faiss S, Rauber D, de Souza LA Jr, Papa JP, Deprez PH, Oyama T, Takahashi A, Seewald S, Sharma P, Byrne MF, Palm C, Messmann H. Endoscopic prediction of submucosal invasion in Barrett's cancer with the use of artificial intelligence: a pilot study. Endoscopy. 2021;53:878-883. |

| 24. | Ghatwary N, Zolgharni M, Ye X. Early esophageal adenocarcinoma detection using deep learning methods. Int J Comput Assist Radiol Surg. 2019;14:611-621. |

| 25. | Iwagami H, Ishihara R, Aoyama K, Fukuda H, Shimamoto Y, Kono M, Nakahira H, Matsuura N, Shichijo S, Kanesaka T, Kanzaki H, Ishii T, Nakatani Y, Tada T. Artificial intelligence for the detection of esophageal and esophagogastric junctional adenocarcinoma. J Gastroenterol Hepatol. 2021;36:131-136. |

| 26. | Zhang Y. Epidemiology of esophageal cancer. World J Gastroenterol. 2013;19:5598-5606. |

| 27. | Shin D, Protano MA, Polydorides AD, Dawsey SM, Pierce MC, Kim MK, Schwarz RA, Quang T, Parikh N, Bhutani MS, Zhang F, Wang G, Xue L, Wang X, Xu H, Anandasabapathy S, Richards-Kortum RR. Quantitative analysis of high-resolution microendoscopic images for diagnosis of esophageal squamous cell carcinoma. Clin Gastroenterol Hepatol. 2015;13:272-279.e2. |

| 28. | Quang T, Schwarz RA, Dawsey SM, Tan MC, Patel K, Yu X, Wang G, Zhang F, Xu H, Anandasabapathy S, Richards-Kortum R. A tablet-interfaced high-resolution microendoscope with automated image interpretation for real-time evaluation of esophageal squamous cell neoplasia. Gastrointest Endosc. 2016;84:834-841. |

| 29. | Cai SL, Li B, Tan WM, Niu XJ, Yu HH, Yao LQ, Zhou PH, Yan B, Zhong YS. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc. 2019;90:745-753.e2. |