Published online Mar 20, 2022. doi: 10.5493/wjem.v12.i2.16

Peer-review started: October 11, 2021

First decision: December 9, 2021

Revised: December 14, 2021

Accepted: March 6, 2022

Article in press: March 6, 2022

Published online: March 20, 2022

Processing time: 155 Days and 14.9 Hours

Automated left ventricular ejection fraction calculation typically requires complex algorithms and depends on optimal visualization and tracing of endocardial borders. This significantly limits its usability in bedside clinical applications, where ultrasound automation is needed most.

To create a simple deep learning (DL) regression-type algorithm to visually estimate left ventricular (LV) ejection fraction (EF) from a public database of actual patient echo examinations and compare results to echocardiography laboratory EF calculations.

A simple DL architecture previously shown to perform well in ultrasound image analysis, VGG16, was used as the base architecture running within a long short-term memory (LSTM) algorithm for sequential image (video) analysis. After obtaining permission to use the Stanford EchoNet-Dynamic database, researchers randomly removed approximately 15% of its approximately 10036 apical 4-chamber echo videos for later performance testing. All database echo examinations were read as part of comprehensive echocardiography study performance and were coupled with EF, end systolic and end diastolic volumes, key frames and coordinates for LV endocardial tracing in a CSV file. To better reflect point-of-care ultrasound (POCUS) clinical settings and time pressure, the algorithm was trained on echo videos correlated with calculated ejection fraction, without incorporating the additional volume, measurement and coordinate data. Seventy percent of the original data was used for algorithm training and 15% for validation during training. The previously randomly separated 15% (1263 echo videos) was used for algorithm performance testing after training was complete. Given the inherent variability of echo EF measurement and field standards for evaluating algorithm accuracy, mean absolute error (MAE) and root mean square error (RMSE) were calculated for algorithm EF results compared with Echo Lab calculated EF. Bland-Altman analysis was also performed. MAE for skilled echocardiographers has been established to range from 4% to 5%.

The DL algorithm's visually estimated EF had an MAE of 8.08% (95%CI 7.60 to 8.55), suggesting good performance compared with highly skilled humans. The RMSE was 11.98 and the correlation coefficient was 0.348.

This experimental simplified DL algorithm showed promise and proved reasonably accurate at visually estimating LV EF from short real-time echo video clips. Being less burdensome than the complex DL approaches used for EF calculation, such an approach may be better suited to POCUS settings once improved upon by future research and development.

Core Tip: The manuscript describes a novel study of machine learning algorithm creation for point of care ultrasound left ventricular ejection fraction estimation without the measurements or modified Simpson’s rule calculations typically seen in artificial intelligence applications designed to calculate the left ventricular ejection fraction. I believe the manuscript will be of interest to your readers and will significantly add to the body of literature related to bedside clinical ultrasound artificial intelligence applications.

- Citation: Blaivas M, Blaivas L. Machine learning algorithm using publicly available echo database for simplified “visual estimation” of left ventricular ejection fraction. World J Exp Med 2022; 12(2): 16-25

- URL: https://www.wjgnet.com/2220-315x/full/v12/i2/16.htm

- DOI: https://dx.doi.org/10.5493/wjem.v12.i2.16

Left ventricular (LV) ejection fraction (EF) calculation is the most common method for quantifying left ventricular systolic function[1,2]. Not only is EF the most widely used measure of cardiac function in clinical care, but it is especially important in severely ill and unstable patients. In critically ill patients, rapidly obtaining the EF helps narrow treatment options and can identify possible causes of unstable vital signs. EF can be assessed using a variety of imaging modalities and methods. Magnetic resonance imaging (MRI), while highly accurate, is logistically difficult to perform in most urgent or emergent situations[3,4]. The resulting effective criterion standard is EF calculation by comprehensive 2-D echocardiography, typically using the modified Simpson’s rule[5]. However, despite ultrasound’s lower cost and greater accessibility than MRI, and the potential for bedside imaging by an echocardiography technologist, results are typically delayed by hours to days after the examination is performed. This “results time lag” is impractical in any clinical scenario requiring rapid patient assessment and decision making[6].

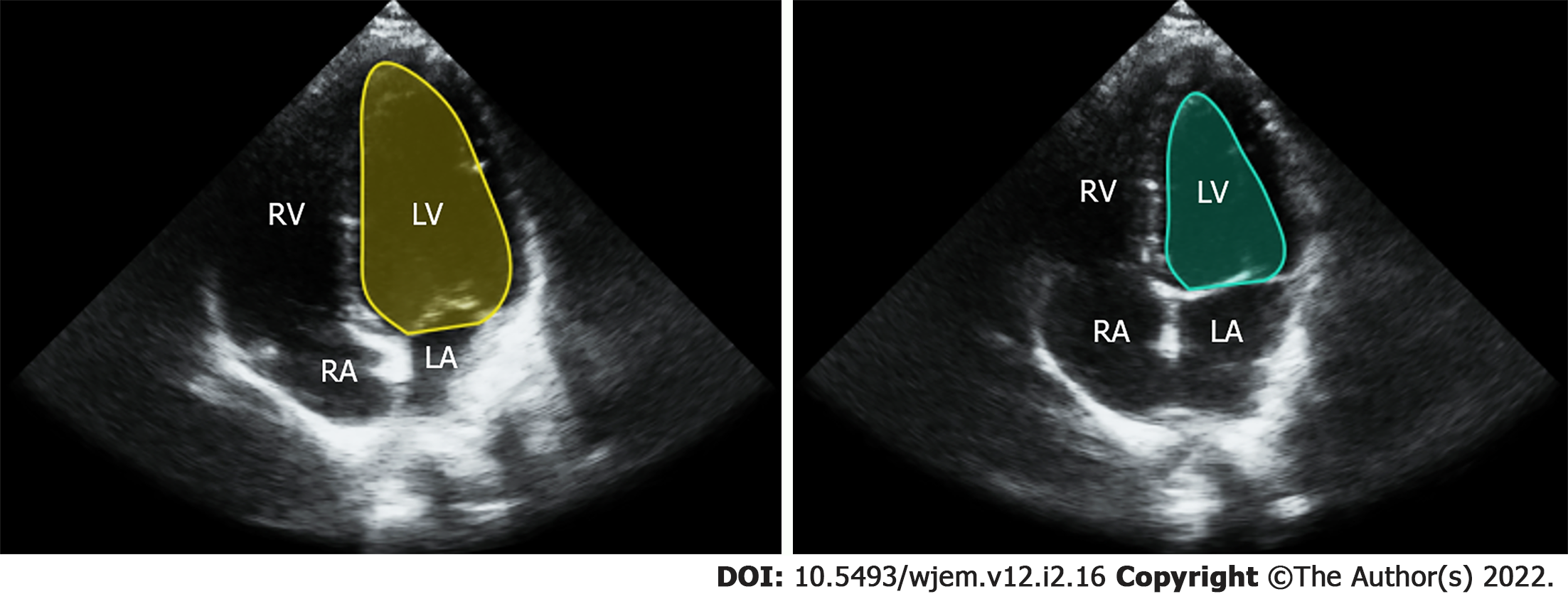

The modified Simpson’s rule estimates LV volumes mathematically from LV images in two orthogonal planes[7]. The operator carefully outlines the endocardial borders on end systolic and end diastolic frames in both planes (Figure 1). Using a single plane, typically the apical 4-chamber view, is possible but less accurate than a two-plane approach[8]. Manually calculating EF with ultrasound is time consuming, requires considerable training and expertise, and is rarely performed in POCUS settings, even by highly experienced providers, because of hardware and time limitations[9]. An alternative method is visual estimation by the operator. Experienced echocardiography technologists and cardiologists specializing in echocardiography can visually estimate EF with reasonable accuracy[10]. However, rank and file POCUS users are only able to grade EF visually into broad categories such as normal, moderately depressed and severely depressed. This level of gradation equates to just three 20% EF ranges, whereas most echocardiography laboratories report EF in much more granular 5% ranges between 10% and 70%[11].
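The method of discs that underlies the modified Simpson’s rule can be sketched as below. This is a minimal illustration, assuming the operator’s tracing has already been reduced to paired disc diameters (in cm) from two orthogonal apical views plus a long-axis length; the example uses the common convention of 20 discs, and the function names are hypothetical rather than part of any vendor software.

```python
import math

def disc_volume_biplane(a_diams_cm, b_diams_cm, length_cm):
    """Biplane method of discs: V = (pi / 4) * sum(a_i * b_i) * (L / N), in mL (cm^3)."""
    if len(a_diams_cm) != len(b_diams_cm) or not a_diams_cm:
        raise ValueError("both views need the same, non-zero number of discs")
    disc_height = length_cm / len(a_diams_cm)  # each disc is L / N thick
    return math.pi / 4 * sum(a * b for a, b in zip(a_diams_cm, b_diams_cm)) * disc_height

def disc_volume_single_plane(diams_cm, length_cm):
    """Single-plane variant (e.g. apical 4-chamber only): assumes circular discs, a_i == b_i."""
    return disc_volume_biplane(diams_cm, diams_cm, length_cm)

# A hypothetical cylinder-shaped LV: 20 discs of 4 cm diameter over an 8 cm long axis.
print(round(disc_volume_single_plane([4.0] * 20, 8.0), 1))  # 100.5 (= 32 * pi)
```

Applied to the end diastolic and end systolic tracings, this yields EDV and ESV, from which EF follows as (EDV − ESV)/EDV. The single-plane variant makes visible why one view must assume a circular cross-section for every disc.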

Although visual EF estimation is indeed faster and theoretically better suited to many acute care scenarios, it must be accurate and precise enough to detect clinically relevant changes, and it must be repeatable. Because human visual estimation is highly subjective, reproducibility can be especially difficult in a high-pressure clinical setting, such as with a critically ill patient undergoing interventions and resuscitation[12]. The challenge grows if the operator obtaining the visual EF estimate changes, for example at shift change or transition of care. The decompensating patient, now treated by a new provider, may have no objective and reproducible EF assessment for comparison. In such cases especially, a more objective, precise and reproducible, yet rapid, measure is highly desirable.

Considerable work has been done on artificial intelligence (AI) for automatic EF estimation, both in academic research and in commercial ventures, resulting in several hardware/software products available for purchase and use by clinicians[13-16]. Liu et al[16] developed a DPS-Net based algorithm using a biplane Simpson’s rule for EF determination. The investigators achieved high agreement with gold standard testing, based on receiver operating characteristic curves with values approaching 0.974. However, the method requires accurate segmentation of the LV in the apical 4- and 2-chamber planes at both end systole and end diastole. This approach, while accurate, is computationally intensive and would require POCUS users to obtain an imaging plane they are rarely trained to acquire (the apical 2-chamber view). Filipiak-Strzecka et al[13] studied automated EF measurement specifically on a POCUS device, which inherently implies use by clinicians with little training in echo. The researchers used an algorithm capable of EF determination from a single imaging plane, the apical 4-chamber view. However, they described several failures of the algorithm to detect and trace the endocardial border, a critical step in their EF calculation method. Unfortunately, POCUS settings often produce images with limited endocardial border detail, which can cause such algorithms to fail regularly. To date, most commercial products use some form of the modified Simpson’s rule and depend heavily on clear images with well-delineated endocardial borders[17,18]. In fact, the challenge of determining EF in the POCUS setting with POCUS equipment has already led to one class 2 FDA recall and to another vendor’s EF application being removed from the market with a requirement for full FDA review[19].

In order to explore improved visual EF estimation, researchers sought to create a simple deep learning (DL) algorithm to rapidly “visually” estimate EF from a public database of actual patient echo examinations and compare results to echocardiography laboratory EF calculations.

Researchers used a simple DL architecture previously found to perform well in ultrasound image analysis. The VGG16 architecture served as the base, running inside a long short-term memory (LSTM) algorithm that analyzes video as a sequence of frames. To better reflect POCUS clinical settings and time pressure, the algorithm was trained on echo videos from a large public echo database, correlated with calculated ejection fraction, without incorporating the additional available measurement data such as end systolic and diastolic volumes, key frames or endocardial border coordinates. Seventy percent of the data was used for algorithm training and 15% for validation during training. A previously separated 15% was reserved for algorithm performance testing. Algorithm training was optimized by adjusting batch size, number of epochs (an epoch is one pass of DL algorithm training through all of the data), learning rate and the number of frames the LSTM analyzes at once. A total of 1263 randomly selected echo videos were used to test algorithm performance. For final DL testing, researchers created a script to generate a CSV file containing, for each video, the difference between the algorithm-estimated EF and the criterion standard EF calculation, along with a cumulative average. The study did not use any patient data or medical center facilities or resources and was exempted from Institutional Review Board (IRB) review.
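The 70/15/15 partition described above can be sketched as a single seeded shuffle over video file names. This is an illustration under stated assumptions: the seed, the function name and the file names are hypothetical, and the study’s exact split procedure is not published in this detail.

```python
import random

def split_videos(file_names, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle once with a fixed seed, then cut into train / validation / test lists.

    Whatever remains after the train and validation cuts (about 15% here) is the held-out test set.
    """
    names = sorted(file_names)          # sort first so the result does not depend on input order
    random.Random(seed).shuffle(names)  # hypothetical seed, for reproducibility
    n_train = int(len(names) * train_frac)
    n_val = int(len(names) * val_frac)
    return names[:n_train], names[n_train:n_train + n_val], names[n_train + n_val:]

# Hypothetical file names standing in for the database's video list.
videos = [f"video_{i:05d}.avi" for i in range(10036)]
train, val, test = split_videos(videos)
print(len(train), len(val), len(test))  # 7025 1505 1506
```

Splitting once, before any training, keeps the test videos unseen throughout hyperparameter tuning, which is the property the final performance test relies on.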

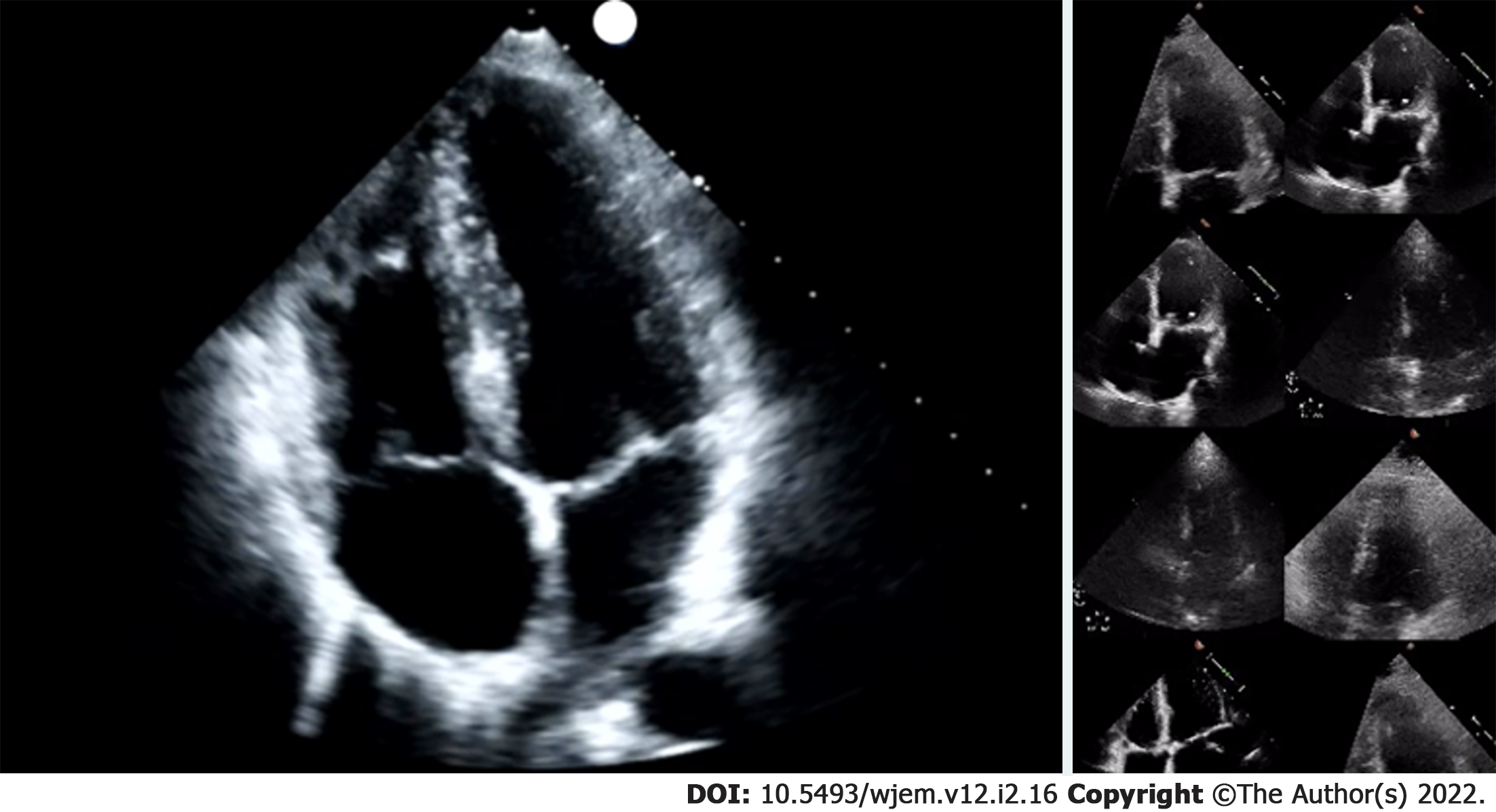

Researchers were granted access to the Stanford EchoNet-Dynamic database, a repository of approximately 10036 apical 4-chamber (A4C) echo videos, after submitting an application to its data curators[20]. After downloading the video data and the corresponding spreadsheet, researchers randomly removed approximately 15% (1263) of the A4C videos for final performance testing. Stanford researchers created the EchoNet-Dynamic database “to provide images to study cardiac motion and chamber volumes using echocardiography, or cardiac ultrasound, videos obtained in real clinical practice for diagnosis and medical decision making[20].” The data contained in the database are summarized in Table 1. All extracted Stanford de-identified echo examination data contained EF, end systolic and diastolic volumes, key frames and coordinates for LV endocardial tracing, and were read as part of comprehensive echocardiography study performance. The A4C videos were 112 × 112 pixels, whereas typical exported examination videos can be 1024 × 560 pixels or larger (Figure 2). Many of these videos had noisy images that impaired LV endocardial delineation.

| Category | Content in category |
| --- | --- |
| Video file name | File name linked to annotations, labels and videos |
| Subject age | Scanning subject’s age, in years |
| Subject gender | Scanning subject’s gender |
| Ejection fraction | EF calculated from ESV and EDV as (EDV − ESV)/EDV |
| End systolic volume (ESV) | ESV calculated using the method of discs during the echocardiogram |
| End diastolic volume (EDV) | EDV calculated using the method of discs during the echocardiogram |
| Height of video frame | Individual frame height of the echo videos |
| Width of video frame | Individual frame width of the echo videos |
| Frames per second | FPS rate of the echo video |
| Number of frames | Number of frames in the entire echo video |
| Split from benchmark | Split of videos into train/validate and test datasets from the original work |
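The ejection fraction field in Table 1 follows directly from the two volume fields. A minimal sketch, assuming volumes in mL; the function name is hypothetical.

```python
def ejection_fraction(edv_ml, esv_ml):
    """EF in percent: the fraction of the end diastolic volume ejected by end systole."""
    if edv_ml <= 0:
        raise ValueError("EDV must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("ESV must lie between 0 and EDV")
    return (edv_ml - esv_ml) / edv_ml * 100.0

print(round(ejection_fraction(120.0, 50.0), 1))  # 58.3
```

Because EF is a ratio, errors in tracing end diastolic and end systolic borders feed straight into it, which is part of why lab-calculated EF itself varies between readers.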

The publicly available Keras-based (Keras is a Python deep learning library) VGG-16 bidirectional LSTM DL algorithm, which had produced superior performance in prior studies, was chosen for this project[21]. Researchers coded the DL algorithm in Python version 3.7.2. The VGG-16 convolutional neural network (CNN) architecture is available from public sources, including the online repository github.com. VGG-16 is a relatively simple CNN with only 16 weight layers, compared with many modern CNNs, which can have hundreds of layers. Previous work suggests that simpler CNNs like VGG-16 may outperform larger, more complex ones in classifying some grayscale ultrasound images[21].

The VGG-16 CNN was used inside a long short-term memory algorithm. An LSTM network is one of several approaches suited to video analysis: the VGG-16 CNN analyzes each frame in sequence, and on top of that the LSTM tracks temporal changes from one frame to the next. For studies with large dynamic components, such as lung ultrasound and echocardiography, such approaches are especially critical. Standard LSTM networks track temporal changes in one direction only, so researchers chose a bidirectional LSTM architecture for better performance. A bidirectional LSTM lets temporal information flow in both directions, forward and reverse, resulting in higher sensitivity and specificity for detecting change from one frame to another, because each motion or change is interpreted in the context of both the preceding and the following frames. Researchers used standard VGG-16-specific initial weights for the VGG-16 bidirectional LSTM. Weights in a CNN are best viewed as learnable mathematical parameters; the CNN uses them to analyze image features, and through them the entire image, leading to image classification or object detection.
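The forward-plus-reverse idea behind a bidirectional LSTM can be illustrated with a toy, single-unit LSTM in plain Python. This is not the study’s Keras model: each frame is reduced to one hypothetical scalar feature (standing in for the per-frame output a CNN such as VGG-16 would produce), and the gate weights are arbitrary, untrained illustrative values.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_pass(xs, w):
    """Run a single-unit LSTM over scalar inputs and return the final hidden state.

    w maps each gate name to (input weight, recurrent weight, bias).
    """
    h, c = 0.0, 0.0
    for x in xs:
        i = sigmoid(w["i"][0] * x + w["i"][1] * h + w["i"][2])    # input gate
        f = sigmoid(w["f"][0] * x + w["f"][1] * h + w["f"][2])    # forget gate
        o = sigmoid(w["o"][0] * x + w["o"][1] * h + w["o"][2])    # output gate
        g = math.tanh(w["g"][0] * x + w["g"][1] * h + w["g"][2])  # candidate cell value
        c = f * c + i * g      # cell state carries information across frames
        h = o * math.tanh(c)   # hidden state seen by the next step
    return h

def bidirectional_summary(frame_features, w_fwd, w_bwd):
    """Concatenate the final state of a forward pass with that of a pass over the reversed frames."""
    return [lstm_pass(frame_features, w_fwd), lstm_pass(list(reversed(frame_features)), w_bwd)]

# Hypothetical per-frame features and arbitrary (untrained) gate weights.
frames = [0.2, 0.6, 0.9, 0.5, 0.1]
w_fwd = {"i": (1.0, 0.5, 0.0), "f": (0.5, 0.5, 1.0), "o": (1.0, 0.0, 0.0), "g": (1.0, 0.5, 0.0)}
w_bwd = {"i": (0.8, 0.3, 0.1), "f": (0.4, 0.2, 1.0), "o": (0.9, 0.1, 0.0), "g": (1.2, 0.4, 0.0)}
print(bidirectional_summary(frames, w_fwd, w_bwd))
```

In a full model each direction carries a vector state rather than a scalar, and a dense regression layer would map the concatenated states to a single EF value.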

The bidirectional LSTM was trained on 70% of the original downloaded data. Stepwise adjustments were made to optimizers, batch size and learning rates in response to training results. The total number of epochs was also adjusted during training to achieve the highest accuracy.

The LSTM architecture and code included scripts for automatic cross validation at each epoch. Additionally, researchers added code to calculate a running MAE from epoch to epoch to provide additional clues about training performance. After results were optimized and no further adjustments improved performance, the algorithm was tested on the 1263 apical 4-chamber echocardiography videos that had been randomly selected and set aside when the data were originally downloaded from EchoNet. The EFs of these randomly selected videos ranged from 7% to 91%. During this final testing phase, researchers again coded the algorithm to produce an MAE, along with a running CSV file listing each CNN-predicted EF and the actual EF calculated at Stanford using the modified Simpson’s rule.
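A per-video log with a cumulative MAE, like the running CSV file described above, might look as follows. This is a sketch, assuming a hypothetical column layout and hypothetical video names; the authors’ actual script is not published here.

```python
import csv
import io

def write_prediction_log(rows, stream):
    """Write one CSV line per test video with its absolute error and the running (cumulative) MAE.

    rows: iterable of (video_name, predicted_ef, reference_ef) tuples.
    Returns the final MAE, or NaN if there were no rows.
    """
    writer = csv.writer(stream)
    writer.writerow(["video", "predicted_ef", "reference_ef", "abs_error", "running_mae"])
    total_error, count = 0.0, 0
    for name, predicted, reference in rows:
        error = abs(predicted - reference)
        total_error += error
        count += 1
        writer.writerow([name, f"{predicted:.2f}", f"{reference:.2f}",
                         f"{error:.2f}", f"{total_error / count:.2f}"])
    return total_error / count if count else float("nan")

buffer = io.StringIO()  # a file opened with open(path, "w", newline="") works the same way
final_mae = write_prediction_log([("video_a", 60.0, 55.0), ("video_b", 40.0, 50.0)], buffer)
print(final_mae)  # 7.5
print(buffer.getvalue())
```

Writing the running MAE on every line makes it easy to see, while a long test run is still in progress, whether the error is settling.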

Echocardiographic EF measurements inherently vary in the same subject because of both patient and operator factors[21]. Exact agreement between the calculated EF and the LSTM prediction is therefore unlikely. Thus, in keeping with field standards, mean absolute error (MAE) and root mean square error (RMSE) were calculated for the algorithm EF results to evaluate accuracy relative to Echo Lab calculated EF[21]. MAE for highly skilled echocardiographers has been established to range from 4% to 5%[22]. A highly complex DL algorithm built by the database creators, using additional data points, achieved an MAE of 5.44% on the same videos[20]. Researchers also performed Bland-Altman analysis between the comprehensive echocardiography laboratory biplane modified Simpson’s rule EF results and the visual estimates by the DL algorithm. Statistical analyses were performed using Python scripts.
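The agreement statistics named above can be computed with the Python standard library alone. A minimal sketch, assuming a normal-approximation 95% CI for the MAE (mean ± 1.96 × SD / √n of the absolute errors); the function name and the three-video example are hypothetical. As a consistency check under that assumption, if the reported standard deviation of 8.58% describes the absolute errors, 8.08 ± 1.96 × 8.58/√1263 gives roughly 7.61 to 8.55, close to the published interval.

```python
import math
import statistics

def agreement_metrics(predicted, reference):
    """MAE, RMSE, Pearson correlation and a normal-approximation 95% CI for the MAE."""
    if len(predicted) != len(reference) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    n = len(predicted)
    errors = [p - r for p, r in zip(predicted, reference)]
    abs_errors = [abs(e) for e in errors]
    mae = sum(abs_errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)  # squares penalize large misses more than MAE
    # 95% CI for the mean absolute error: mean +/- 1.96 * SD / sqrt(n)
    half_width = 1.96 * statistics.stdev(abs_errors) / math.sqrt(n)
    # Pearson correlation between predicted and reference EF
    mp, mr = statistics.fmean(predicted), statistics.fmean(reference)
    cov = sum((p - mp) * (r - mr) for p, r in zip(predicted, reference))
    spread = math.sqrt(sum((p - mp) ** 2 for p in predicted) * sum((r - mr) ** 2 for r in reference))
    return {"mae": mae, "rmse": rmse, "r": cov / spread,
            "mae_ci95": (mae - half_width, mae + half_width)}

m = agreement_metrics([50, 60, 70], [55, 55, 75])
print(m["mae"], m["rmse"], round(m["r"], 3))  # 5.0 5.0 0.866
```

Because RMSE squares each error before averaging, it always equals or exceeds the MAE, and the gap widens when a few videos are badly misestimated.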

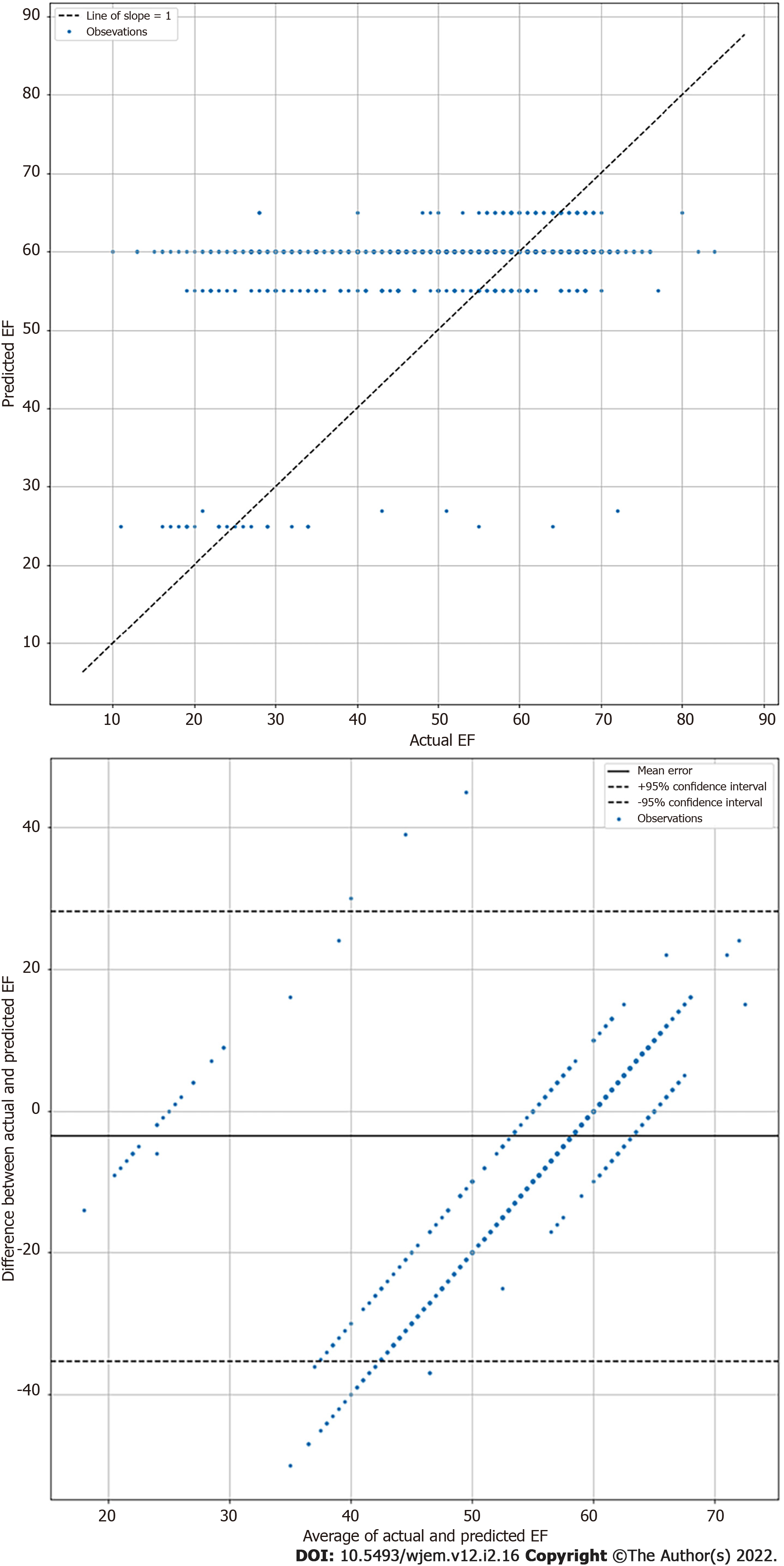

The LSTM DL algorithm using original grayscale video for visual EF estimation achieved an MAE of 8.08% (95%CI 7.60 to 8.55) when tested on 1263 apical 4-chamber videos previously unseen by the algorithm. This suggests good performance compared with highly skilled human operators such as echo technologists or echo-trained cardiologists, who typically have an MAE of 4% to 5%[22]. The RMSE was 11.98 and the correlation coefficient was 0.348. The standard deviation was 8.58%. The Bland-Altman plots are shown in Figure 3. For reference, the EchoNet-Dynamic creators tested 9 different DL models, obtaining a best MAE of 5.44 and a worst of 51.8; RMSE ranged correspondingly from 6.16 to 35.2[20]. Human experts tested by the EchoNet-Dynamic creators achieved an MAE of 3.12 and an RMSE of 4.57[20].
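The quantities plotted in a Bland-Altman analysis, such as the one in Figure 3, can be computed as below. A minimal sketch, assuming the conventional limits of agreement of bias ± 1.96 × SD of the differences; the function name and example values are hypothetical, and plotting (means on the x-axis, differences on the y-axis) is left out.

```python
import statistics

def bland_altman(method_a, method_b):
    """Per-case means and differences (a - b), the mean difference (bias) and 95% limits of agreement."""
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    means = [(a + b) / 2 for a, b in zip(method_a, method_b)]
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    return means, diffs, bias, (bias - 1.96 * sd, bias + 1.96 * sd)

# Hypothetical algorithm EF vs lab EF for four videos.
means, diffs, bias, limits = bland_altman([50, 60, 70, 40], [48, 62, 66, 40])
print(bias, limits)
```

A bias near zero with wide limits indicates no systematic over- or underestimation but substantial case-to-case scatter.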

Best results were obtained with an LSTM frame analysis length of 50 frames, 40 epochs, a batch size of 40, an Adam optimizer and a batch size of 10 videos. The DL algorithm analyzed and interpreted each of the 1263 test videos with no failures. Training failed on three videos, which were found to be corrupted (not previously identified) and contained no usable data.
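Feeding the LSTM a fixed number of frames (50 in the best configuration) requires choosing which frame indices form each clip. The sketch below is one possible policy, not the authors’ documented one: longer videos contribute a contiguous window, and shorter videos are looped, an assumption that relies on the roughly periodic cardiac cycle (zero-padding would be an alternative). The function name is hypothetical.

```python
def clip_indices(num_frames, clip_len=50, start=0):
    """Indices of one fixed-length clip of frames for the LSTM.

    Longer videos: a contiguous window, shifted if needed so it stays inside the video.
    Shorter videos: frames are looped (an assumption; padding is another option).
    """
    if num_frames <= 0:
        raise ValueError("video has no frames")
    if num_frames >= clip_len:
        start = min(max(start, 0), num_frames - clip_len)  # keep the window inside the video
        return list(range(start, start + clip_len))
    return [k % num_frames for k in range(clip_len)]       # wrap around short videos

print(clip_indices(200, start=180)[:3])  # [150, 151, 152]
print(clip_indices(30)[28:32])           # [28, 29, 0, 1]
```

The clip length is the same knob that was tuned during training: longer clips cover more of the cardiac cycle at the cost of more computation per prediction.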

This simple DL algorithm proved fairly accurate in delivering visual EF predictions when tested on 1263 actual patient A4C echocardiogram videos and compared with comprehensive cardiac laboratory echocardiography EF calculations. Its MAE was roughly three to four percentage points higher than that expected from expert echocardiographers and approximately 2.6 percentage points higher than that of the best-performing complex algorithm, which the EchoNet-Dynamic database creators designed using additional available data points. The creation of “visual” EF estimation DL algorithms has so far been overlooked by POCUS machine vendors, but considerable potential exists for its implementation.

Emergent situations such as unstable vital signs require rapid patient assessment. Simple measurements like blood pressure, heart rate and oxygen saturation are useful initial parameters, yet clinicians may need more information than vital signs provide. Perhaps the most important general information in many emergent medical situations is the assessment of systolic cardiac function. Uncovering abnormal cardiac function is immensely informative to the clinician, especially when it was previously unknown. An unstable patient with normal cardiac function can tolerate interventions that are contraindicated in those with decreased EF, such as immediate administration of fluid boluses. Alternatively, severely depressed systolic cardiac function may lead the clinician directly to pharmacological intervention with centrally administered vasopressors to increase blood pressure and systemic perfusion. Unfortunately, without imaging the heart in real time at the bedside, clinicians have few options for reliably determining current systolic function. POCUS cardiac imaging is the most accessible imaging solution worldwide and may hold the answer for emergent assessment, even in the hands of novice users[23].

The POCUS literature on cardiac function assessment dates back nearly 30 years and has ranged from simply identifying cardiac activity in arresting patients to identifying tamponade and even visually assessing EF[24,25,11]. One early study showed that POCUS users who received focused training in visual EF estimation could successfully categorize EF as normal, moderately depressed or severely depressed[11]. Given a typical EF range of 10% on the low side to 70% on the high side, this equates to categories approximately 20% wide. In contrast, a report from an echocardiography laboratory presents EF as a 5% range. While knowing whether the EF is normal, moderately or severely depressed can be helpful in some clinical situations, others require a more granular and reproducible measure, for instance a patient whose EF has dropped from 50% to 40% or from 35% to 25%. Both may represent critically important changes: one is a 10-point decrease from a near-normal EF, and the other a deterioration from a poor EF to a significantly worse one. Additionally, stressful situations such as emergencies may reduce confidence in measurement repeatability, and a change in the provider visually estimating the EF can add inter-observer problems in identifying EF changes[26]. A precise and repeatable EF measurement tool would ideally be available at every patient’s bedside, but in reality most clinicians still do not use ultrasound at all, and among those who do, the vast majority cannot perform modified Simpson’s rule calculations from the apical 4- and 2-chamber views[27]. Similarly, most clinicians still lack the experience to visually estimate EF as reliably as a highly seasoned cardiologist or echo technologist.
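The difference in granularity can be made concrete by binning EF values the way each reporting style would. A minimal sketch, assuming bins anchored at 10% over the 10% to 70% range described above; the function name is hypothetical, and the 48% to 38% drop is a hypothetical example of a 10-point change that stays within one 20-point category.

```python
def ef_bin(ef, width, low=10, high=70):
    """Return the (lower, upper) EF bin of the given width that contains ef, clamped to [low, high]."""
    ef = min(max(ef, low), high - 1e-9)  # values at or above `high` fall in the top bin
    lower = low + int((ef - low) // width) * width
    return (lower, lower + width)

# A hypothetical 10-point drop, 48% -> 38%:
print(ef_bin(48, 20), ef_bin(38, 20))  # (30, 50) (30, 50): same broad category, change missed
print(ef_bin(48, 5), ef_bin(38, 5))    # (45, 50) (35, 40): two 5% bins lower, change visible
```

Three 20-point bins can hide a clinically important decline that twelve 5-point bins would reveal, which is the gap a reproducible automated estimate aims to close.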

EF calculation via echo with AI has been well explored by large research groups with good results, but often with complex algorithms, some requiring multiple steps[28,29]. The creators of the Stanford EchoNet-Dynamic database succeeded in creating several algorithms, the best of which performed on par with echo technologists in a comprehensive echocardiography laboratory[19]. Not surprisingly, commercial vendors of AI technology have finally turned their attention to the POCUS market and its needs. One of the first applications targeted by a number of hardware/software and software-only vendors has been EF calculation. Most use a modified Simpson’s rule approach requiring good imaging planes and, in some cases, acquisition of an apical 2-chamber view. Typically, the LV endocardial tracings made by the software are displayed and the clinician is asked to adjust them as needed, something beyond the skill level of most POCUS users.

This is the first POCUS research effort identifiable in the literature that was conducted without the involvement of a commercial entity and without a classical modified Simpson’s rule approach. It suggests that rapid visual EF estimation may be feasible as a clinical DL tool for emergent clinical settings. The MAE of 8%, while not as good as that attained by expert echocardiographers, still shows significant potential for such deep learning algorithms. The original EchoNet-Dynamic creators obtained a range of MAEs for multiple DL algorithm approaches that used additional data beyond simple video analysis. Their worst MAE was over 50% and their best 5.44%, further supporting this initial effort as a worthwhile development pathway for future DL solutions. Future developers using higher resolution videos could likely improve greatly on these results, especially before incorporating them into commercially available software. The visual estimation DL algorithm described here, using an LSTM, can run in real time on an ultrasound device while a novice POCUS user is imaging the heart. The ability to estimate EF in real time, without pausing while the ultrasound machine runs a DL algorithm that traces endocardial borders and compares end systolic and end diastolic volumes, should improve clinicians’ ability to make rapid medical decisions.
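Continuous estimation while the user scans is commonly organized as a rolling frame buffer that is re-scored at intervals. This sketch assumes a hypothetical `model` callable that maps a list of frames to an EF value; the default window of 50 frames mirrors the frame count reported in the results, while the stride (how often the estimate refreshes) is an arbitrary illustrative choice.

```python
from collections import deque

class RollingEFEstimator:
    """Buffer the most recent frames and re-estimate EF every `stride` frames once the buffer is full.

    `model` is any callable that takes a list of frames and returns an EF in percent
    (a hypothetical stand-in for a trained network).
    """

    def __init__(self, model, window=50, stride=10):
        self.model = model
        self.frames = deque(maxlen=window)  # oldest frames drop out automatically
        self.stride = stride
        self._since_last = 0

    def push(self, frame):
        """Add one frame; return a fresh EF estimate when one is due, otherwise None."""
        self.frames.append(frame)
        self._since_last += 1
        if len(self.frames) == self.frames.maxlen and self._since_last >= self.stride:
            self._since_last = 0
            return self.model(list(self.frames))
        return None

# Dummy "model" (mean of scalar frames) just to show the call pattern.
estimator = RollingEFEstimator(lambda fr: sum(fr) / len(fr), window=5, stride=2)
estimates = [e for e in (estimator.push(i) for i in range(10)) if e is not None]
print(estimates)  # [2.0, 4.0, 6.0]
```

A larger stride lowers the computational load on the device at the cost of less frequent updates on screen.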

Our study had multiple limitations. The database contained a large number of videos with EF calculated by a comprehensive echocardiography laboratory, but the videos to which access was provided were very small, at 112 × 112 pixels, potentially limiting algorithm performance. While DL algorithms often resize video during training to decrease the computational burden, researchers have seen improved results when training on ultrasound video at larger image sizes, double or triple the provided frame dimensions. Although the DL algorithm was tested on a large number of echo videos covering the broad range of EFs from very low to high, this is not the same as implementing the algorithm on a POCUS device and testing its performance in a clinical setting. The steps necessary to achieve that were outside the scope of our study but are technically, if not logistically, simple. Additionally, the source videos typically came from one of a handful of ultrasound machines, which likely makes the algorithm less robust: recent work shows the potential for significant DL algorithm performance degradation even with videos of superior image quality, and near-total performance failure with videos of significantly inferior image quality[30]. Another source of disagreement between the comprehensive echo lab EF calculation and our DL algorithm lies in our use of only apical 4-chamber videos (the only ones available for download). The optimal approach to EF calculation uses the left ventricular ESV and EDV from both the apical 4-chamber and apical 2-chamber views. This yields a more accurate EF calculation and should explain some of the differences found[7].
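Resizing a 112 × 112 frame to double or triple its dimensions can be sketched with nearest-neighbour repetition in plain Python. This only illustrates the change in dimensions; nearest-neighbour repetition adds no new image detail. Practical pipelines would more likely use an interpolating resize (for example OpenCV’s `cv2.resize`), and the function below is a hypothetical stand-in.

```python
def upscale_nearest(frame, factor):
    """Enlarge a 2-D grayscale frame (a list of rows) by an integer factor via nearest-neighbour repetition."""
    if not isinstance(factor, int) or factor < 1:
        raise ValueError("factor must be a positive integer")
    out = []
    for row in frame:
        wide = [px for px in row for _ in range(factor)]  # repeat each pixel horizontally
        out.extend(list(wide) for _ in range(factor))     # repeat each row vertically
    return out

small = [[0] * 112 for _ in range(112)]  # placeholder 112 x 112 frame
big = upscale_nearest(small, 3)
print(len(big), len(big[0]))  # 336 336
```

Tripling each dimension multiplies the pixel count per frame by nine, which is the computational cost that motivates downsizing in the first place.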

This simplified DL algorithm proved fairly accurate at visually estimating LV EF from short real-time echo video clips. It opens an exploratory avenue that differs from most current commercial applications for automated EF calculation. Being less burdensome than the complex DL approaches used for EF calculation, such an approach may be better suited to POCUS settings. Future research should explore actual edge (on-device) implementation and testing in different clinical environments. Additionally, a more diverse database representing multiple ultrasound machines, as well as higher quality videos, should be used to further explore potential accuracy improvements in visual EF estimation.

Deep learning has been explored in medical ultrasound image analysis for several years, and some applications have focused on evaluating cardiac function. To date, most academic research and commercial deep learning ventures to automate left ventricular ejection fraction calculation have produced highly complex, image quality dependent algorithms that require multiple views from the apical window. Research into alternative approaches has been limited.

To explore a deep learning approach modeling visual ejection fraction estimation, thereby mimicking the approach taken by highly skilled echocardiographers with decades of experience. If successful, such an approach could work with less than ideal images and be less computationally burdensome, both ideal for point of care ultrasound applications, where experts are unlikely to be present.

To develop a deep learning algorithm capable of visual estimation of left ventricular ejection fraction.

A bidirectional long short-term memory structure using a VGG16 convolutional neural network was employed for video analysis of cardiac function. The algorithm was trained on a publicly available echo database with ejection fraction calculations made at a comprehensive echocardiography laboratory. After training, the algorithm was tested on a data subset specifically set aside prior to training.

The algorithm performed well in comparison to baseline data for correlation between echocardiographers calculating ejection fraction and gold standards. It outperformed some previously published algorithms for agreement.

Deep learning based visual ejection fraction estimation is feasible and could be improved with further refinement and higher quality databases.

Further research is needed to explore the impact of training on higher quality video from a more diverse set of ultrasound machines.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Medicine, research and experimental

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Cabezuelo AS, United States; Muneer A, United States S-Editor: Wang LL L-Editor: A P-Editor: Li X

| 1. | Halliday BP, Senior R, Pennell DJ. Assessing left ventricular systolic function: from ejection fraction to strain analysis. Eur Heart J. 2021;42:789-797. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 27] [Cited by in RCA: 64] [Article Influence: 16.0] [Reference Citation Analysis (0)] |

| 2. | Kusunose K, Zheng R, Yamada H, Sata M. How to standardize the measurement of left ventricular ejection fraction. J Med Ultrason (2001). 2022;49:35-43. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 2] [Cited by in RCA: 12] [Article Influence: 3.0] [Reference Citation Analysis (0)] |

| 3. | O'Quinn R, Ferrari VA, Daly R, Hundley G, Baldassarre LA, Han Y, Barac A, Arnold A. Cardiac Magnetic Resonance in Cardio-Oncology: Advantages, Importance of Expediency, and Considerations to Navigate Pre-Authorization. JACC CardioOncol. 2021;3:191-200. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 4] [Cited by in RCA: 6] [Article Influence: 1.5] [Reference Citation Analysis (0)] |

| 4. | Vega-Adauy J, Tok OO, Celik A, Barutcu A, Vannan MA. Comprehensive Assessment of Heart Failure with Preserved Ejection Fraction Using Cardiac MRI. Heart Fail Clin. 2021;17:447-462. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 3] [Cited by in RCA: 3] [Article Influence: 0.8] [Reference Citation Analysis (0)] |

| 5. | Sanna GD, Canonico ME, Santoro C, Esposito R, Masia SL, Galderisi M, Parodi G, Nihoyannopoulos P. Echocardiographic Longitudinal Strain Analysis in Heart Failure: Real Usefulness for Clinical Management Beyond Diagnostic Value and Prognostic Correlations? Curr Heart Fail Rep. 2021;18:290-303. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 6] [Cited by in RCA: 14] [Article Influence: 3.5] [Reference Citation Analysis (0)] |

| 6. | Vieillard-Baron A, Millington SJ, Sanfilippo F, Chew M, Diaz-Gomez J, McLean A, Pinsky MR, Pulido J, Mayo P, Fletcher N. A decade of progress in critical care echocardiography: a narrative review. Intensive Care Med. 2019;45:770-788. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 174] [Cited by in RCA: 149] [Article Influence: 24.8] [Reference Citation Analysis (0)] |

| 7. | Nosir YF, Vletter WB, Boersma E, Frowijn R, Ten Cate FJ, Fioretti PM, Roelandt JR. The apical long-axis rather than the two-chamber view should be used in combination with the four-chamber view for accurate assessment of left ventricular volumes and function. Eur Heart J. 1997;18:1175-1185. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 38] [Cited by in RCA: 38] [Article Influence: 1.4] [Reference Citation Analysis (0)] |

| 8. | Kuroda T, Seward JB, Rumberger JA, Yanagi H, Tajik AJ. Left ventricular volume and mass: Comparative study of two-dimensional echocardiography and ultrafast computed tomography. Echocardiography. 1994;11:1-9. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 28] [Cited by in RCA: 29] [Article Influence: 0.9] [Reference Citation Analysis (0)] |

| 9. | Jafari MH, Girgis H, Van Woudenberg N, Liao Z, Rohling R, Gin K, Abolmaesumi P, Tsang T. Automatic biplane left ventricular ejection fraction estimation with mobile point-of-care ultrasound using multi-task learning and adversarial training. Int J Comput Assist Radiol Surg. 2019;14:1027-1037. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 23] [Cited by in RCA: 26] [Article Influence: 4.3] [Reference Citation Analysis (0)] |

| 10. | Gudmundsson P, Rydberg E, Winter R, Willenheimer R. Visually estimated left ventricular ejection fraction by echocardiography is closely correlated with formal quantitative methods. Int J Cardiol. 2005;101:209-212. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 131] [Cited by in RCA: 138] [Article Influence: 6.9] [Reference Citation Analysis (0)] |

| 11. | Moore CL, Rose GA, Tayal VS, Sullivan DM, Arrowood JA, Kline JA. Determination of left ventricular function by emergency physician echocardiography of hypotensive patients. Acad Emerg Med. 2002;9:186-193. |

| 12. | Etherington N, Larrigan S, Liu H, Wu M, Sullivan KJ, Jung J, Boet S. Measuring the teamwork performance of operating room teams: a systematic review of assessment tools and their measurement properties. J Interprof Care. 2021;35:37-45. |

| 13. | Filipiak-Strzecka D, Kasprzak JD, Wejner-Mik P, Szymczyk E, Wdowiak-Okrojek K, Lipiec P. Artificial Intelligence-Powered Measurement of Left Ventricular Ejection Fraction Using a Handheld Ultrasound Device. Ultrasound Med Biol. 2021;47:1120-1125. |

| 14. | Aldaas OM, Igata S, Raisinghani A, Kraushaar M, DeMaria AN. Accuracy of left ventricular ejection fraction determined by automated analysis of handheld echocardiograms: A comparison of experienced and novice examiners. Echocardiography. 2019;36:2145-2151. |

| 15. | Narang A, Bae R, Hong H, Thomas Y, Surette S, Cadieu C, Chaudhry A, Martin RP, McCarthy PM, Rubenson DS, Goldstein S, Little SH, Lang RM, Weissman NJ, Thomas JD. Utility of a Deep-Learning Algorithm to Guide Novices to Acquire Echocardiograms for Limited Diagnostic Use. JAMA Cardiol. 2021;6:624-632. |

| 16. | Liu X, Fan Y, Li S, Chen M, Li M, Hau WK, Zhang H, Xu L, Lee AP. Deep learning-based automated left ventricular ejection fraction assessment using 2-D echocardiography. Am J Physiol Heart Circ Physiol. 2021;321:H390-H399. |

| 17. | Yoon YE, Kim S, Chang HJ. Artificial Intelligence and Echocardiography. J Cardiovasc Imaging. 2021;29:193-204. |

| 18. | Guppy-Coles KB, Prasad SB, Smith KC, Lo A, Beard P, Ng A, Atherton JJ. Accuracy of Cardiac Nurse Acquired and Measured Three-Dimensional Echocardiographic Left Ventricular Ejection Fraction: Comparison to Echosonographer. Heart Lung Circ. 2020;29:703-709. |

| 19. | U.S. Food and Drug Administration. [cited 20 July 2021]. Available from: https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfRes/res.cfm?ID=173162. |

| 20. | Ouyang D, He B, Ghorbani A, Lungren M, Ashley E, Liang D, Zou J. EchoNet-Dynamic: a Large New Cardiac Motion Video Data Resource for Medical Machine Learning. 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), 2019: 1-11. |

| 21. | Blaivas M, Blaivas L. Are All Deep Learning Architectures Alike for Point-of-Care Ultrasound? J Ultrasound Med. 2020;39:1187-1194. |

| 22. | Farsalinos KE, Daraban AM, Ünlü S, Thomas JD, Badano LP, Voigt JU. Head-to-Head Comparison of Global Longitudinal Strain Measurements among Nine Different Vendors: The EACVI/ASE Inter-Vendor Comparison Study. J Am Soc Echocardiogr. 2015;28:1171-1181, e2. |

| 23. | Maw AM, Galvin B, Henri R, Yao M, Exame B, Fleshner M, Fort MP, Morris MA. Stakeholder Perceptions of Point-of-Care Ultrasound Implementation in Resource-Limited Settings. Diagnostics (Basel). 2019;9. |

| 24. | Blaivas M, Fox JC. Outcome in cardiac arrest patients found to have cardiac standstill on the bedside emergency department echocardiogram. Acad Emerg Med. 2001;8:616-621. |

| 25. | Plummer D, Brunette D, Asinger R, Ruiz E. Emergency department echocardiography improves outcome in penetrating cardiac injury. Ann Emerg Med. 1992;21:709-712. |

| 26. | Al-Ghareeb A, McKenna L, Cooper S. The influence of anxiety on student nurse performance in a simulated clinical setting: A mixed methods design. Int J Nurs Stud. 2019;98:57-66. |

| 27. | Pouryahya P, McR Meyer AD, Koo MPM. Prevalence and utility of point-of-care ultrasound in the emergency department: A prospective observational study. Australas J Ultrasound Med. 2019;22:273-278. |

| 28. | Kim T, Hedayat M, Vaitkus VV, Belohlavek M, Krishnamurthy V, Borazjani I. Automatic segmentation of the left ventricle in echocardiographic images using convolutional neural networks. Quant Imaging Med Surg. 2021;11:1763-1781. |

| 29. | Asch FM, Mor-Avi V, Rubenson D, Goldstein S, Saric M, Mikati I, Surette S, Chaudhry A, Poilvert N, Hong H, Horowitz R, Park D, Diaz-Gomez JL, Boesch B, Nikravan S, Liu RB, Philips C, Thomas JD, Martin RP, Lang RM. Deep Learning-Based Automated Echocardiographic Quantification of Left Ventricular Ejection Fraction: A Point-of-Care Solution. Circ Cardiovasc Imaging. 2021;14:e012293. |

| 30. | Blaivas M, Blaivas LN, Tsung JW. Deep Learning Pitfall: Impact of Novel Ultrasound Equipment Introduction on Algorithm Performance and the Realities of Domain Adaptation. J Ultrasound Med. 2021. |