Published online Nov 27, 2014. doi: 10.5313/wja.v3.i3.203

Revised: April 14, 2014

Accepted: July 17, 2014

Processing time: 292 Days and 5.6 Hours

This paper discusses some of the key aspects of human factors in anaesthesia for the improvement of patient safety. Medical errors have emerged as a serious issue in healthcare delivery, and there has been new interest in human factors as a means of reducing these errors. Human factors are important contributors to critical incidents and crises in anaesthesia. It has been shown that human factors can contribute to as many as 83% of anaesthetic incidents. The cognitive thinking process and the biases involved are important in understanding human factors. Errors of cognition linked with human factors lead to anaesthetic errors and crises. Multiple errors in the cognitive thinking process, known as “cognitive dispositions to respond”, have been identified as leading to errors. These errors, classified as latent or active, can be easily identified in the clinical vignettes of serious medical errors. Application of knowledge of human factors and the use of cognitive de-biasing strategies can help avoid human errors. These strategies could involve the use of checklists, strategies to cope with stress and fatigue, and the use of standard operating procedures. A safety culture and a health care model designed to promote patient safety can complement this further. Incorporating these strategies strengthens the layers of defence in the “Swiss cheese” models that exist in the health care industry.

Core tip: Human factors contribute to human errors and anaesthetic crisis situations. These human factors can be identified and studied in detail. The progression of non-routine events coupled with human factors, when left unchecked, leads to serious errors in health care. When knowledge of human factors is incorporated into the practice of anaesthesia, patient safety is promoted.

- Citation: Chandran R, DeSousa KA. Human factors in anaesthetic crisis. World J Anesthesiol 2014; 3(3): 203-212

- URL: https://www.wjgnet.com/2218-6182/full/v3/i3/203.htm

- DOI: https://dx.doi.org/10.5313/wja.v3.i3.203

Patient safety has taken centre stage in all aspects of anaesthesia. In the last few years, human factors have appeared time and again as an important contributor to many aspects of patient safety. Lessons from aviation safety have also made their way into training in anaesthesia. Many of these lessons involve human factors, which are seldom discussed and are generally either not included in routine anaesthesia training or not studied in great depth.

In the past decade, many critical incidents in anaesthesia have been compared to aviation disasters, leading to closer examination of the human factors that affect the performance of the anaesthetist. Understanding the nature of the interaction between these various factors can be complex. However, it is important that every anaesthetist has a basic understanding of the human factors that can affect his or her performance in a crisis situation. Knowledge of this subject can be used in organizing training programs, creating simulation sessions, debriefing critical incidents and, most importantly, examining one’s own practice to refine non-technical skills and make anaesthetic practice much safer.

Retrospective analysis of critical incidents and disasters in anaesthesia often flags human factors as important contributors. Therefore, gaining an insight into human factors can in itself mitigate the risks associated with them in times of anaesthetic crisis. Research in this field has shown that important contributions can be made to patient safety[1].

Mishaps, errors, critical incidents and near misses all speak the same language, geared towards promoting patient safety. It is now well recognized that human factors play an important role in preventable anaesthetic mishaps. A review of critical incidents by Cooper et al[2] revealed that human factors were contributory in 82% of the 359 incidents reported. These incidents ranged from simple equipment malfunction in some cases to death in others, indicating the seriousness and importance of the problem. These errors due to human factors are not isolated events picked up by researchers and studies. In fact, it has been reported that 44000 to 98000 deaths occur annually in the United States secondary to medical errors[3].

Human error has been blamed for over 60% of nuclear power plant accidents and 70% of all commercial aviation accidents. In an analysis of 2000 incident reports from Australia, human error was an important contributory factor in 83% of anaesthetic incidents[4].

A safety system is of paramount importance in any organization. Such a system provides the medium in which a systematic approach to safety can be pursued. The analysis and use of human factors can be embedded into this system[5]. Anaesthetists often have a unique way of practising, or rather a safety system unique to their practice. This safety system is refined, acquired or adopted through the lessons learnt in their working lives. A similar system exists in aviation, where it is known as the Safety Management System[5].

It is under the tenets of this safety system, often referred to as clinical governance in the health care setting, that the understanding of human factors gains a strong hold in enhancing patient safety. In the 1970s, rising concerns over the safety of anaesthesia gas machines triggered interest in the application of human factors. In the late 1990s, medical error emerged as a serious issue in the delivery of healthcare, spawning new interest in human factors as a means of reducing error.

In simple terms, “human factors include all the factors that can influence people and their behaviour”[6]. The Institute of Ergonomics and Human Factors (United Kingdom) defines human factors as a “scientific discipline dealing with the interactions among humans and other factors in the system and deals with the theory, principles, data and methods to design and optimise human well being and overall system performance”[6]. Human factors can also be defined in terms of the performance of a person working within a complex mechanical system. Performance is dependent on the individual’s capabilities, limitations and attitudes. Performance is also directly related to the quality of the instructions and training provided.

Human error is defined as performance that deviates from the ideal[7]. This may take the form of cognitive or procedural error. Cognitive errors are usually thought-process errors in decision-making, and may be attributed to the individual or to the anaesthetic team. They usually cause morbidity or mortality. Procedural errors occur when the wrong drugs are administered[8] or if mistakes are made with nerve blocks or other similar procedures.

The most important human error is the error of cognition. Studies have shown cognitive errors to be important contributors to medical errors. Groopman[9] reported that most medical errors are mistakes in thinking and that technical errors constitute only a small proportion. This aspect of the psychology of decision-making has attracted interest as an important human-factor contributor to errors. Despite ample evidence that cognitive bias in decision-making leads to errors, a deeper focus on this area is long overdue[10]. The impact of cognitive errors in medicine needs to be acknowledged, and it is time that anaesthetists and all other healthcare personnel pay heed to the lessons on cognitive errors and enter a new era of patient safety.

Whilst a detailed discussion of cognition is beyond the scope of this article, it is important to understand some fundamental principles of cognition. Cognition is generally defined as a process involving conscious intellectual activity[11]. This intellectual activity consists of many different aspects such as attention, memory, logic and reasoning.

Croskerry[10] defined multiple aspects of the cognitive thinking process leading to diagnostic errors and called them cognitive dispositions to respond (CDRs). These errors occur due to failures in perception, failed heuristics and biases. Some of the CDRs mentioned by Croskerry[10,12] and Stiegler et al[13] are listed below.

Anchoring, also known as fixation, is focusing on some of the features of the patient’s initial presentation and not responding to other aspects of patient care in the light of new information being presented.

Much discussed in the literature is the case of Elaine Bromiley, wherein the focus was on intubation rather than on recognizing a “cannot intubate, cannot ventilate” (CICV) situation and proceeding to emergency cricothyroidotomy. More common examples include focusing on the alarms of the infusion pump while ignoring surgical bleeding and hypotension[13].

These errors of fixation can be classified into three main categories[14]: (1) This and only this: e.g., in a case of airway obstruction, persistently believing the cause to be bronchospasm and not considering a kinked endotracheal tube; (2) Everything but this: e.g., not looking for wrong drug administration when the drug does not solve the problem. A hypertensive patient who does not respond to an antihypertensive infusion should arouse suspicion of wrong drug administration; and (3) Everything is OK: e.g., a clinician assumes that a low pulse oximeter value is due to equipment malfunction or peripheral vasoconstriction when, in fact, there is severe hypoxemia.

In ascertainment bias, the thought process is governed by prior expectation, e.g., expecting intubation to be easy when the airway assessment points towards a difficult intubation. Similarly, apnoea after administration of opioids is a prior expectation, but when the apnoea is in fact due to wrong administration of a muscle relaxant, attributing it to the opioid is an error due to ascertainment bias.

Aggregate fallacy is the belief that clinical guidelines do not apply to the individual’s patients. This often leads to errors of commission, e.g., choosing to perform a central neuraxial blockade in a patient with a coagulation disorder, clearly against the guidelines and standards, or administering a non-steroidal anti-inflammatory drug to a severely asthmatic patient.

Availability bias can be defined as a tendency to judge things based on what comes to mind readily. It is also influenced by previous bad experience, e.g., diagnosing a simple case of bronchospasm as anaphylaxis because of a previous bad experience[13], or treating ST depression purely as an effect of bleeding and hypotension without considering myocardial infarction.

Commission bias is, in simple words, the tendency to act rather than be inactive in response to information. It is more likely to be seen in physicians who believe that harm to the patient can only be prevented by active intervention. Unnecessary interventions and investigations fall under this category, e.g., trying to place a central venous catheter in a simple case of bronchospasm and producing a pneumothorax.

Confirmation bias is the tendency to look for confirming evidence to support a diagnosis rather than disconfirming evidence. It is also known as “cherry-picking”, or trying to force data to fit a desired or suspected diagnosis, e.g., repeatedly checking the blood pressure and changing the blood pressure cuff in order to get a better reading while failing to acknowledge the low reading[13], or changing the pulse oximeter probe when the low reading is due to actual hypoxemia.

Diagnosis momentum: a typical example is a differential diagnosis that, when carried over on multiple records by multiple health care workers, tends to become a more definitive diagnosis. This bias often ends in the exclusion of all other possibilities.

Omission bias is the exact opposite of commission bias. Here the physician has a tendency towards inaction, e.g., delays in the use of cardioversion in fast arrhythmias when the clinical situation demands it.

Overconfidence bias is the tendency to believe that one knows much more than one actually does. Both anchoring and availability augment this bias. Fuelled by commission bias, it may lead to catastrophic results. It is often linked to unconscious incompetence, e.g., a junior anaesthetist with little or no skill in fibre-optic intubation attempting an “awake fibre-optic” technique on a patient with a difficult airway.

The tendency to stop the decision making process prematurely and accept a diagnosis before it has fully been verified is known as premature closure, e.g., assessing and diagnosing the cause of low oxygen saturations (as displayed on the monitor) to be secondary to the low perfusion of the cold fingers on which the pulse oximetry probe has been placed, rather than looking for other reasons.

Unpacking principle: this is the failure to elicit all information in making a diagnosis, e.g., failure to elicit information regarding ischaemic heart disease or angina in the pre-anaesthetic assessment.

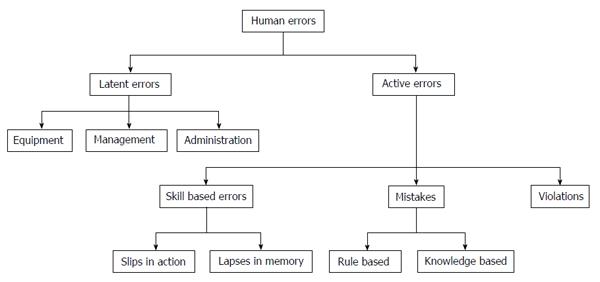

Linked to these errors of cognition are human failures causing errors in health care. Human failures can be broadly classified into latent and active errors. Latent errors involve the equipment, management, administration and processes. Active errors are those occurring at the site of action[15]. Active errors can be further classified as shown in Figure 1.

Skill-based errors commonly occur when analytical thinking is not employed. These occur with normal routine tasks. Knowledge-based errors occur when expert judgement is used contrary to the prevailing standards[15] or when there is a deficiency of knowledge. These need to be differentiated from violations, which are deliberate deviations from the prevailing standards.

Rule-based errors occur when the standard rules of practice are not used or are used inappropriately[16].

The list of human factors influencing anaesthetic practice is endless. Some of the important ones have been well elucidated by Marcus[15]. In his study of human factors, in which 668 incidents were reported, a total of 284 anaesthetic human factors were identified. These human factors accounted for 42.5% of the total incidents. Needless to say, the involvement of human factors in critical incidents ranked high. These factors are presented in Table 1.

| Human factors | Cognitive mechanism |
| --- | --- |
| Error of judgement | Rule or knowledge |
| Failure to check | Violation |
| Technical failures of skill | Skill |
| Inexperience | Knowledge |
| Inattention/distraction | Skill |
| Communication | Latent |
| Poor preoperative assessment | Rule or knowledge |
| Lack of care | Skill |
| Drug dosage slip | Skill |
| Teaching | Skill |
| Pressure to do the case | Latent |

A combination of non-routine events (NREs) and human factors provides a medium in which medical errors thrive. When unchecked by the safety measures incorporated in the hospital safety net or, even worse, by the anaesthetist’s individual safety system, these often lead to anaesthetic crises with catastrophic results.

Merriam Webster defines crisis as “a situation that has reached a critical phase”, “an unstable or crucial time or state of affairs in which a decisive change is impending; especially with the distinct possibility of a highly undesirable outcome”[11].

In simple words, it is a disaster waiting to happen. The difference between an anaesthetic emergency and a crisis is often difficult to elucidate. Not all emergencies are crises bound by human factors. The following sections look into the human factors that contribute in some way to anaesthetic crisis and its management.

The clinical vignettes below illustrate some of the many anaesthesia crises in which human factors have had an important role to play.

Epidural/spinal blunders: (1) Theatre nurse Myra had received an epidural for labour analgesia. Post delivery, the epidural anaesthetic was mistakenly connected into her arm intravenous cannula. Myra died[17]; (2) Grace Wang’s spinal canal was mistakenly injected with chlorhexidine instead of the local anaesthetic solution. Grace Wang is now virtually quadriplegic[18,19]; and (3) Wayne Jowett aged 18 was recovering from leukaemia. Vincristine, which was to be given intravenously, was administered intrathecally. Wayne Jowett died a month later[20].

Air embolism: Baby Aaron was being operated on for pyloric stenosis. Towards the end of the operation, the anaesthetist was asked to inject air into the nasogastric tube to distend the stomach. The air was mistakenly injected into the veins. Baby Aaron died[21].

Airway disasters: (1) A 9-year-old boy was scheduled for a minor operation. The cap of the intravenous fluid administration set blocked the angle piece of the anaesthetic tubing. Treatment for presumed bronchospasm was unsuccessful. The 9-year-old died from hypoxemia[22]; and (2) Elaine Bromiley was due to have surgery on her nose. After induction of anaesthesia, a CICV situation was encountered. In the prolonged period of hypoxia that ensued, repeated attempts at intubation were unsuccessful. Elaine died of severe hypoxic brain injury a few days later[23].

Wrong side errors: A medical student warned the surgeon that the wrong kidney was about to be removed. The surgeon dismissed the medical student’s concerns and proceeded with the operation. The patient was left with no kidney function and died a few weeks later[24].

The above clinical vignettes are just a few of the many medical errors that have occurred with catastrophic consequences. Impossible as such errors may seem, they become distinct realities when the levels of defence are weak, latent conditions supportive of errors are rampant and human factors are contributory.

Reflection on one’s own practice of anaesthesia would identify multiple instances of similar “Swiss cheese models”, often ending in near misses or critical incidents. The multiple layers of defence described by Reason[25,26] in his Swiss cheese model consist of engineered defence systems, procedures and administrative controls and, finally, the individuals and their skills. For errors to occur, they need to originate from a chain of failures percolating through all the defence layers. The last layer of defence is the human. And humans sometimes fail. Human factors play a major role in these failures. The following section looks into the various human factors that contribute to human error and lead to anaesthetic crisis.

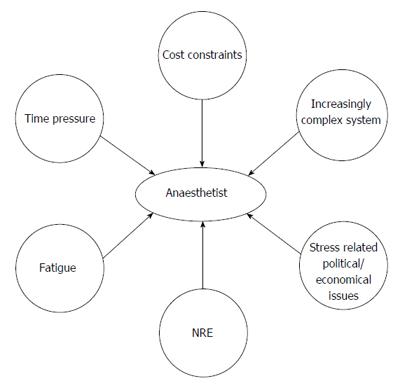

Time pressure, cost constraints, a complex environment, fatigue, stress related to political and economic issues, non-routine events and many other factors can affect an anaesthetist’s performance (Figure 2).

Frequently, NREs are precursors of anaesthetic disasters. Weinger et al[1] defined an NRE as an unusual, out of the ordinary or atypical event contributing to a dangerous, dysfunctional clinical system. Not all NREs lead to disasters or patient harm. It is, however, important to note that, on retrospective analysis, NREs often represent a disruption to the otherwise smooth processes involved in health care[1]. Most often it is the progression of non-routine events coupled with human factors that leads to serious errors in health care.

Among the important human factors are the following.

(1) Lack of vigilance. Clearly, recognizing NREs requires the anaesthetist to be vigilant. Vigilance is essentially situational awareness and depends on a number of factors, including alertness, attention span and diagnostic skills[1]. It has been shown that increased workload is associated with an increased incidence of critical incidents[27]. Cognitive resources are diminished when the anaesthetist is exposed to a heavy workload, leading to decreased attention span and vigilance. This predisposes the anaesthetist to errors[1]. Cooper et al[2] presented evidence of the important role of vigilance in preventing anaesthetic mishaps as early as 1978. They reported that the common anaesthetic errors could all have been avoided if appropriate attention had been directed to the patient. Some researchers have reported inattention and distraction as a cause in 5.6% of the incidents studied[15]. It is easy to say that wrong administration of drugs, wrong site surgery and injection of air into the venous system could all have been avoided if the anaesthetist concerned had been more vigilant. However, “asking staff to be more vigilant is a weak improvement approach to providing safer care”[28]. Performing a task analysis and workload assessment provides valuable clues about the performance of individuals under increased workload[1]. Organizations promoting patient safety and keen to deal with human factors should look into this aspect as well.

(2) Stress. Stress is defined as “divergence between the demands and capabilities of a person in a situation, giving rise to impaired memory, reduced concentration and difficulties in decision making”[29]. Researchers[30-32] have shown that indecision and delays in decision-making are frequently seen in resuscitation practice courses. These are secondary to the stress reaction to the stressful situations faced. By extrapolation, anaesthetic crises and emergencies can be extremely stressful and can lead to situations in which delays in decision-making are witnessed. The stress during anaesthetic crises or emergencies should be differentiated from the stress arising from life’s stressful events.

Increased awareness is needed in understanding the effects of stress. It is illusory to believe that anyone can be immune to stress. In fact, stress is normal and seen in many. It is, however, transitory, and most people cope well. Placing a person who is already stressed (from life’s many stressors) in a stressful situation such as an anaesthetic crisis will generally be accepted as a bad idea. A similar situation in aviation is known as “aviator at risk”[16]. Stressed anaesthetists are, likewise, aviators at risk and need help. In many countries, systems are in place to help these “anaesthetists at risk”. This can take the form of local arrangements with colleagues to ease the workload, allocation of mentors, arranging time off or taking stress-related sick leave. It is important that a request for help is not looked upon as a failure to function. All these measures are geared towards one thing: making the entire system safe and improving the overall quality of care in terms of patient safety. Not all anaesthetists will admit that they are stressed and need help. Some experts[16] opine that colleagues should also be vigilant in identifying “anaesthetists at risk”.

(3) Error of judgement. Marcus[15] showed that error of judgement was as high as 43% in the 668 paediatric anaesthetic incidents reported in his study. The flawed clinical decisions made can be categorized into rule-based and knowledge-based mistakes. It is often difficult to fit knowledge-based mistakes into the spectrum of human factors; however, an argument can still be made for the case. As discussed above, judgement and decision-making can be flawed under stressful conditions or when the anaesthetist is stressed.

(4) Fixation errors. Lessons from the Elaine Bromiley case speak in great depth about fixation errors. Fixation errors occur when the anaesthetist’s attention and focus are fixated on one aspect of care. The other aspects of care are often overlooked or ignored.

(5) Physical demands. Physical tiredness in itself affects the decision-making process and can make the anaesthetist prone to errors. It would be wrong to say that vigilance can and should be maintained at all times when we fail to address the other parts of the equation. The analytical thinking involved in decision-making and vigilance is linked to physical tiredness. Anaesthetists, like other humans, are prone to physical and mental fatigue, especially after a long, busy night shift. Effective and safe patient care involves thorough patient assessment, planning and executing strategies, complemented by intense monitoring. The ability to perform at various levels of physical and mental fatigue is variable, differing amongst individuals. This process requires high amounts of energy. It is not surprising that the level of vigilance falls when the physical stress of fatigue sets in[33]. Continuing to work under fatigue invariably leads to exhaustion. Decisions can be erroneous, attention to detail can be lacking and vigilance generally poor. Sinha et al[33] reported some of the frequently observed problems due to fatigue. These include lapses in attention, inability to stay focussed, reduced motivation, compromised problem solving, confusion, irritability, impaired communication, faulty information processing and diminished reaction. Needless to say, anaesthetists showing the symptoms of fatigue mentioned above are prone to making errors. These errors can translate into patient harm and, in some cases, even death.

Merely understanding the list of human factors discussed in the above paragraphs is not enough to secure definite momentum towards patient safety, and may lead only to illusory benefits. To obtain real benefit, this understanding needs to be coupled with the practice of multiple cognitive de-biasing strategies and in-depth reflection on the important lessons learnt from human errors.

As opined by Croskerry[10] and Yates et al[34], “one of the first steps is to overcome the bias of overcoming bias”.

Table 2 illustrates the cognitive de-biasing strategies that can be used to reduce diagnostic errors[10].

| Plan | Action | Example |
| --- | --- | --- |
| Develop insight or awareness | Illustrating the errors caused by biases in the cognitive thinking process with the help of clinical examples leads to better understanding and awareness | The case of intraoperative low oxygen saturations presumed to be due to cold fingers, when the actual cause was endobronchial intubation |
| Consider alternatives | Forming a habit wherein alternative possibilities are always looked into | Continuing with the above example, establishing a habit of looking for other (true) causes of low oxygen saturation, rather than simply blaming the cold fingers, could direct the anaesthetist to look for other causes, including a possible endobronchial intubation |
| Metacognition (strategic knowledge) | Emphasis on a reflective approach to problem solving | Knowing when and how to verify data is a good example of strategic knowledge |
| Decreased reliance on memory | Use of cognitive aids, mnemonics, guidelines and protocols protects against errors of memory and recall | Use of guidelines and protocols for the use of intralipid to treat local anaesthetic toxicity |
| Specific training | Identifying specific flaws and biases and providing appropriate training to overcome them | Early recognition of a “cannot intubate, cannot ventilate” scenario to guard against fixation errors |
| Simulation exercises | Focus on common clinical scenarios prone to errors, with emphasis on preventing errors secondary to human factors | Use of simulation training for difficult airway management |
| Cognitive forcing strategies | A coping strategy to avoid biases in particular clinical situations is often reflected in the practice of experienced clinicians | Checking for the availability of blood products as a routine ritual prior to the start of major surgery every single time can be considered as strategy to avoid |
| Minimize time pressures | Allowing adequate time for decision making rather than rushing through | Allowing time to check the patient’s airway prior to induction can help avoid surprises in airway management |
| Accountability | Establish clear accountability and follow-up for decisions made | A decision to use frusemide intraoperatively is followed up by checking the serum potassium levels |
| Feedback | Giving reliable feedback to the decision maker, so that errors are immediately appreciated and corrected | A junior anaesthetist reminding the senior of the allergy to a certain antibiotic when the antibiotic is about to be administered |

Other strategies (Table 3) include application of knowledge of human factors in practice to prevent errors. These include: (1) Checklists. Some of the common examples in healthcare, which can be drawn as a parallel to that in the aviation industry, include checklists. It is common knowledge that wrong side surgeries have been prevented by the use of these checklists. So is the case with anaesthetic machine checks, checking the emergency intubation trolley, emergency drugs trolley and so on. The list of checklists is non-ending. But is important to realise that the checklists in itself act as a deterrent in preventing some very basic errors, which might be caused, but with catastrophic results. The importance of these checklists should never be underestimated. Evidence to the seriousness it commands can be derived from the 9000 critical incidents reported between 1990 and 2010 from the United States[35]. These incidents resulted in a mortality of 6.6%, permanent injury in 32% and resulted in total malpractice payments of $1.3 billion in payouts. The World Health Organization checklist, which addresses some of the issues leading to these 9000 critical incidents, has largely reduced the mortality and morbidity in major surgeries. These were mainly in the way of avoiding wrong site, wrong patient, and wrong procedure surgery. It is however interesting to note that the implementation and usage of checklists designed to counter some of the human factors leading to critical incidents in itself can largely be influenced by human factors. O’Connor et al[35] showed that the sociocultural issues, workload and support from senior personnel often affected the desired performance of these checklist. Munigangaiah et al[36] opined that human factors in the form of decision making, lack of communication, leadership and team work were largely responsible for the 152017 incidents reported by the National Reporting and Learning System database in England in 2008. 
The aim of the checklists is to have a structured communication to avoid these basic errors[36]. Enough evidence has been drawn to support the routine use of checklists prior to surgeries. Learning from aviation, checklists can be used under normal and emergency situations. It is in the emergency situations like an anaesthetic crisis, when things are missed easily, a checklist is most mandated. The AAGBI checklist for the anaesthetic equipment 2012, checklists for sedation, handover checklist, all look into the same basic human factors, which make our practice of anaesthesia prone to effect of human factors. It is extremely important for the junior doctors and the anaesthetists in training to understand that irrespective of the growing number of checklists available for use, the most important one is the checklist one can prepare for himself/herself to guard the patient from their own inadequacies and that of others; (2) Resuscitation training. The increasing focus on human factors and anaesthesia has found its way into training as well. Norris et al[30] reported the emphasis of human factors in resuscitation training. Multiple problems have been identified in resuscitation practice. These problems identified can be used as a possible guide to the difficulties faced in a real resuscitation scenario. These included team dynamics, influence of stress, debriefing and conflict within teams. Team dynamics and team leadership play an important role in resuscitation. Emphasis has also been given to the importance of debriefing in resuscitation training; (3) Coping with Stress. A number of methods for dealing with stress have been proposed. Meichenbaum[37] proposed the stress inoculation training in which use of conceptualization, coping strategies and application phase have all been proposed as a way of dealing with stress. Multiple other techniques, which have been proposed, include the STOP technique described by Norris et al[30] and Flin et al[38]. 
This technique aids cognitive thinking by allowing the person to assess the situation rather than acting immediately in a “knee jerk reaction”. “S-stop, do not act immediately, and assess the situation. T-take a breath in and out a couple of times. O-observe. What am I thinking about? What am I focusing on? P-prepare oneself. P-practice what works? What is the best thing to do? Do what works.” Other techniques include “Mindfulness” as described by Norris et al[30] and Hayes et al[39]. Mindfulness involves removing panicky thoughts and replacing them with ordered thinking; (4) Dealing with fatigue. Organizations should ensure that anaesthetists are properly rested between shifts. This can be carried out at a departmental level or at an organizational level. The Joint Commission[33] recommends a number of steps to be carried out by organizations to ensure that anaesthetists are adequately rested. These include awareness amongst senior authorities of the organization, evaluation of risks, analysis of inputs from staff and generation of a fatigue management plan. This fatigue management plan can incorporate sleep breaks, maximum hours of continuous work, mandatory rest periods, etc.; (5) Standard operating procedures. These are formal procedures in aviation, comprising a number of steps that are carried out as a ritual every time. The use of standard operating procedures with the intent of reducing errors has now also been adopted in the operating theatre environment and in anaesthesia[5]. Anaesthetic machine checks and resuscitation trolley checks can be compared to some of the standard operating procedures in aviation. The list of parallels between anaesthesia and aviation is long. It is important to note that these standard operating procedures are not limited to protocols, guidelines and checklists. The unique practising style of an anaesthetist is in itself a standard operating procedure. 
Through years of training and experience, individuals build in these safety mechanisms to guard themselves against the pitfalls posed by human factors, e.g., a particular way of ensuring that a throat pack is removed at the end of a surgical procedure: a sticky label on the forehead, tying one end of the throat pack to the endotracheal tube, a reminder on the swab count board, a reminder on the anaesthetic chart, or using laryngoscopy for suctioning prior to extubation. These are nothing but standard operating procedures in the simplest sense, designed to be a safety net guarding the patient against the various human factors and errors. Every anaesthetist should examine their own standard operating procedures and adapt them to the environment, making it a safer system in which errors cannot easily percolate through the various defence layers. The importance of a safety system should be realized at both an individual level and an organizational level; and (6) Teamwork. The concept of teamwork in human factors and patient safety is not new. Teamwork represents a form of synergy in which teams work more effectively, efficiently, reliably and safely. The safety benefits of teamwork are much greater than those of an individual or a group of individuals working separately[40].

| Practical strategies to prevent human errors |
| --- |
| Checklists |
| Resuscitation training or simulations |
| Managing stress |
| Dealing with fatigue |
| Standard operating procedures or protocols or guidelines |
| Teamwork with good communication |

Hunt et al[40] identified the characteristics of high performing teams. The first is situational awareness. Team performance improves when team members are constantly aware of the environment and update each other in a process known as shared cognition. Shared cognition supports a shared mental model, in which decisions are based on the current state of affairs. The second is leadership skill. An effective leader allows information to flow in both directions, thereby improving patient safety. Closed loop communication is also important, because it confirms that information has been received and will be acted upon. Other aspects of teamwork that have been examined include assertive communication, adaptive behaviours and workload management.

Lessons on human factors in healthcare can never be complete without acknowledging the added patient safety certain health care models can offer.

It is known that systems addressing human factors emphasize interactions between people and their environment, and this in turn contributes to patient safety. Carayon et al[41,42] described the Systems Engineering Initiative for Patient Safety (SEIPS) model as a human factors systems approach. The key characteristics of the SEIPS model include the relationship between the work system and its interacting elements, identification of important contributing factors, integration of patient outcomes with organization/employee outcomes, and feedback loops between all of these characteristics. The SEIPS model is employed in many ways in different organizations to promote safety. Root cause analysis of critical incidents in anaesthesia is in itself an application of the SEIPS model.

Analysis of the anaesthetist's job description and the interaction between its various components using the SEIPS model (Table 4) should be performed at individual, departmental and organizational levels. This would identify some of the factors influencing human errors, so that remedial actions can be taken.

| Components | Elements | |
| --- | --- | --- |
| Work system | Person | Skills, knowledge, motivation, physical and psychological characteristics |
| | Organization | Organizational culture and patient safety culture, work schedules, social relationships |
| | Technology and tools | Human factors characteristics of technologies and tools |
| | Tasks | Job demands, job control and participation |
| | Environment | Layout, noise and lighting |
| Process | Care process | Information flow, purchasing, maintenance and cleaning |
| Outcomes | Employee and organizational outcomes | Job satisfaction, stress and burnout, employee safety and health, turnover |
| | Patient outcomes | Patient safety, quality of care |

The SEIPS model has many uses. It can be used for system design, proactive hazard analysis, accident investigation and patient safety research. Use of the SEIPS model brings balance to the work system and steadies its various interactions, making the system less prone to errors secondary to human factors. Individuals can also use the SEIPS model to examine their own adaptability to its different components. This will steer performance towards better patient safety.

Irrespective of the health care model used, all models aim at identifying factors contributing to errors. Performance shaping factors are attributes that affect human performance[43]. Examples of performance shaping factors include factors affecting an individual, teams, technology, work environment and the work process involved. Identification of these factors can be used for risk mitigation, simulation training and future research.

Central to avoiding human errors and using models for safety is the promotion of a positive safety culture[28]. This safety culture can only be established when there is strong commitment from senior management. Multiple aspects of safety culture need to be adopted by organizations wanting to promote patient safety. These include: (1) Open culture: Patient safety incidents are openly discussed with colleagues and senior managers; (2) Just culture: Staff and carers are treated fairly after a patient safety incident; (3) Reporting culture: A robust incident reporting system exists, in which staff are encouraged to report and are not blamed for doing so; (4) Learning culture: The organization is committed to learning safety lessons; and (5) Informed culture: These safety lessons are used to identify and mitigate future incidents.

Human errors continue to be an important cause of industrial accidents, aviation disasters and anaesthesia related deaths. The study of human factors should be encouraged in order to identify the interactions within health care systems that make the practice of anaesthesia prone to human errors, which in turn translate into anaesthesia mishaps and incidents. These ultimately affect the overall standard of care given to patients. Care, in terms of both the technical and non-technical skills that are influenced by human factors, should be delivered in a manner that promotes and maintains patient safety.

When we accept and acknowledge that human factors play an important role in anaesthetic crises, it becomes imperative that strategies to avoid, cope with and deal with these human factors are practiced.

Much more work in terms of research, training and familiarity is needed to understand human factors in greater depth and to further strengthen the existing strategies for avoiding human errors in the practice of anaesthesia.

P- Reviewer: Kvolik S, Tong C, Velisek J S- Editor: Wen LL L- Editor: A E- Editor: Liu SQ

| 1. | Weinger MB, Slagle J. Human Factors Research in Anesthesia Patient Safety: Techniques to Elucidate Factors Affecting Clinical Task Performance and Decision Making. J Am Med Inform Assoc. 2002;9:S58-S63. |

| 2. | Cooper JB, Newbower RS, Long CD, McPeek B. Preventable anesthesia mishaps: a study of human factors. 1978. Qual Saf Health Care. 2002;11:277-282. |

| 3. | Kohn LT, Corrigan JM, Donaldson MS. To Err Is Human: Building a Safer Health System. Washington, DC: Institute of Medicine National Academy Press Report 1999. |

| 4. | Williamson JA, Webb RK, Sellen A, Runciman WB, Van der Walt JH. The Australian Incident Monitoring Study. Human failure: an analysis of 2000 incident reports. Anaesth Intensive Care. 1993;21:678-683. |

| 5. | Toff NJ. Human factors in anaesthesia: lessons from aviation. Br J Anaesth. 2010;105:21-25. |

| 6. | Clinical Human Factors Group. What is human factors? Available from: http://chfg.org/what-is-human-factors. |

| 7. | Allnutt MF. Human factors in accidents. Br J Anaesth. 1987;59:856-864. |

| 8. | Llewellyn RL, Gordon PC, Wheatcroft D, Lines D, Reed A, Butt AD, Lundgren AC, James MF. Drug administration errors: a prospective survey from three South African teaching hospitals. Anaesth Intensive Care. 2009;37:93-98. |

| 9. | Groopman J. Boston MA: Houghton Mifflin How Doctors Think. J Hosp Librarianship. 2008;8:480-482. |

| 10. | Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775-780. |

| 11. | Available from: http://www.merriam-webster.com/dictionary/cognitive. |

| 12. | Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med. 2002;9:1184-1204. |

| 13. | Stiegler MP, Neelankavil JP, Canales C, Dhillon A. Cognitive errors detected in anaesthesiology: a literature review and pilot study. Br J Anaesth. 2012;108:229-235. |

| 14. | Ortega R. Fixation errors: Boston University School of Medicine. Available from: http://www.bu.edu/av/courses/med/05sprgmedanesthesiology/MASTER/data/downloads/chapter text - fixation.pdf. |

| 15. | Marcus R. Human factors in pediatric anesthesia incidents. Paediatr Anaesth. 2006;16:242-250. |

| 16. | Arnstein F. Catalogue of human error. Br J Anaesth. 1997;79:645-656. |

| 17. | Epidural error during birth killed mother. The Guardian. Available from: http://www.theguardian.com/uk/2008/jan/08/health.society. |

| 18. | The Sydney Morning Herald. How could this Happen? Hospital blunder turns a family joy into heartbreak. Available from: http://www.smh.com.au/nsw/how-could-this-happen-hospital-blunder-turns-a-familys-joy-into-heartbreak-20100820-138xw.html. |

| 19. | The Australian Women’s Weekly. Epidural victim Grace Wang: I’m terrified my husband will leave me. Available from: http://www.aww.com.au/news-features/in-the-mag/2012/12/epidural-victim-grace-wang-im-terrified-my-husband-will-leave-me/. |

| 20. | Dyer C. Doctor sentenced for manslaughter of leukaemia patient. BMJ. 2003;327:697. |

| 21. | Wales Online. Medic cleared of Manslaughter. Available from: http://www.walesonline.co.uk/news/wales-news/medic-cleared-of-manslaughter-2437464. |

| 22. | Stanley B, Roe P. An unseen obstruction. Anaesthesia. 2010;65:216-217. |

| 23. | Fioratou E, Flin R, Glavin R. No simple fix for fixation errors: cognitive processes and their clinical applications. Anaesthesia. 2010;65:61-69. |

| 24. | Daily Mail. You are taking wrong kidney surgeon. Available from: http://www.dailymail.co.uk/health/article-123005/Youre-taking-wrong-kidney-surgeon-told.html. |

| 25. | van Beuzekom M, Boer F, Akerboom S, Hudson P. Patient safety: latent risk factors. Br J Anaesth. 2010;105:52-59. |

| 26. | Reason J. Managing the risks of organizational accidents. Chapter: 1. Aldershot, Hants, England; Brookfield, Vt., USA: Ashgate. |

| 27. | Cohen MM, O’Brien-Pallas LL, Copplestone C, Wall R, Porter J, Rose DK. Nursing workload associated with adverse events in the postanesthesia care unit. Anesthesiology. 1999;91:1882-1890. |

| 28. | Implementing human factors in health care. Available from: http://www.patientsafetyfirst.nhs.uk. |

| 29. | Müller MP, Hänsel M, Fichtner A, Hardt F, Weber S, Kirschbaum C, Rüder S, Walcher F, Koch T, Eich C. Excellence in performance and stress reduction during two different full scale simulator training courses: a pilot study. Resuscitation. 2009;80:919-924. |

| 30. | Norris EM, Lockey AS. Human factors in resuscitation teaching. Resuscitation. 2012;83:423-427. |

| 31. | Quilici AP, Pogetti RS, Fontes B, Zantut LF, Chaves ET, Birolini D. Is the Advanced Trauma Life Support simulation exam more stressful for the surgeon than emergency department trauma care? Clinics (Sao Paulo). 2005;60:287-292. |

| 32. | Sandroni C, Fenici P, Cavallaro F, Bocci MG, Scapigliati A, Antonelli M. Haemodynamic effects of mental stress during cardiac arrest simulation testing on advanced life support courses. Resuscitation. 2005;66:39-44. |

| 33. | Sinha A, Singh A, Tewari A. The fatigued anesthesiologist: A threat to patient safety? J Anaesthesiol Clin Pharmacol. 2013;29:151-159. |

| 34. | Yates JF, Veinott ES, Patalano AL. Hard decisions, bad decisions: on decision quality and decision aiding. (eds.). Emerging Perspectives in Judgment and Decision Making. New York: Cambridge University Press 2003; 32. |

| 35. | O’Connor P, Reddin C, O’Sullivan M, O’Duffy F, Keogh I. Surgical checklists: the human factor. Patient Saf Surg. 2013;7:14. |

| 36. | Munigangaiah S, Sayana MK, Lenehan B. Relevance of World Health Organization surgical safety checklist to trauma and orthopaedic surgery. Acta Orthop Belg. 2012;78:574-581. |

| 37. | Meichenbaum D. Stress inoculation training: Chapter: 5. Oxford, England: Pergamon Press 1985; 143-145. |

| 38. | Flin R, O’Connor P, Crichton M. Safety at the sharp end-a guide to non technical skills: Chapter: 7. UK: Ashgate 2007; 157-190. |

| 39. | Hayes SC, Smith S. Get out of your mind and into your life: Chapter: 8. Oakland, CA: New Harbinger Publications 2005; 105-120. |

| 40. | Hunt EA, Shilkofski NA, Stavroudis TA, Nelson KL. Simulation: translation to improved team performance. Anesthesiol Clin. 2007;25:301-319. |

| 41. | Carayon P, Schoofs Hundt A, Karsh BT, Gurses AP, Alvarado CJ, Smith M, Flatley Brennan P. Work system design for patient safety: the SEIPS model. Qual Saf Health Care. 2006;15 Suppl 1:i50-i58. |

| 42. | Carayon P, Wetterneck TB, Rivera-Rodriguez AJ, Hundt AS, Hoonakker P, Holden R, Gurses AP. Human factors systems approach to healthcare quality and patient safety. Appl Ergon. 2014;45:14-25. |

| 43. | LeBlanc VR, Manser T, Weinger MB, Musson D, Kutzin J, Howard SK. The study of factors affecting human and systems performance in healthcare using simulation. Simul Healthc. 2011;6 Suppl:S24-S29. |