Published online May 21, 2025. doi: 10.3748/wjg.v31.i19.104897

Revised: March 12, 2025

Accepted: April 27, 2025

Published online: May 21, 2025

Processing time: 135 Days and 22.6 Hours

Esophageal cancer is the sixth most common cancer worldwide, with a high mortality rate. Early diagnosis of esophageal abnormalities can improve patient survival. The progression of esophageal cancer follows a sequence from esophagitis to non-dysplastic Barrett’s esophagus, dysplastic Barrett’s esophagus, and eventually esophageal adenocarcinoma (EAC). This study explored the application of deep learning technology in the precise diagnosis of pathological classification and staging of EAC to enhance diagnostic accuracy and efficiency.

To explore the application of deep learning models, particularly Wave-Vision Transformer (Wave-ViT), in the pathological classification and staging of esophageal cancer.

We applied several deep learning models, including multi-layer perceptron, residual network, transformer, and Wave-ViT, to a dataset of clinically validated esophageal pathology images. The models were trained to identify pathological features and to assist in the classification and staging of esophageal cancer across its different stages.

The Wave-ViT model demonstrated the highest accuracy at 88.97%, surpassing the transformer (87.65%), residual network (85.44%), and multi-layer perceptron (81.17%). Wave-ViT also had low computational complexity (1.04 G multiply-accumulate operations) and a much smaller parameter size than the residual network (1.29 MB vs 45.20 MB), making it potentially well suited to real-time clinical applications.

Deep learning technology, particularly the Frequency-Domain Transformer model, shows promise in improving the precision of pathological classification and staging of EAC. The application of the Frequency-Domain Transformer model enhances the automation of the diagnostic process and may support early detection and treatment of EAC. Future research may further explore the potential of this model in broader medical image analysis applications, particularly in the field of precision medicine.

Core Tip: This study demonstrates the application of deep learning models, particularly Wave-Vision Transformer, for the pathological classification and staging of esophageal cancer. Wave-Vision Transformer outperformed other models such as transformer, residual network, and multi-layer perceptron, achieving the highest accuracy of 88.97% with low computational complexity. This innovative approach shows promise for improving early detection and personalized treatment strategies for esophageal cancer, potentially enhancing clinical outcomes in real-time applications.

- Citation: Wei W, Zhang XL, Wang HZ, Wang LL, Wen JL, Han X, Liu Q. Application of deep learning models in the pathological classification and staging of esophageal cancer: A focus on Wave-Vision Transformer. World J Gastroenterol 2025; 31(19): 104897

- URL: https://www.wjgnet.com/1007-9327/full/v31/i19/104897.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i19.104897

Esophageal cancer is the sixth most common cancer globally and is associated with a high mortality rate. Its progression typically begins with esophagitis, advancing to Barrett’s esophagus and eventually developing into esophageal adenocarcinoma (EAC)[1,2]. Although current medical techniques, such as endoscopy and pathological examination, enable the diagnosis of esophageal cancer to some extent, the complexity of staging and the subtle differences in pathological features present significant limitations in the accuracy and efficiency of traditional diagnostic methods[3,4]. Therefore, developing a precision diagnostic method based on modern technology, particularly for the rapid and accurate pathological classification and staging of early-stage esophageal cancer, is essential for improving patient survival and treatment outcomes[5,6].

In recent years, with the rapid development of artificial intelligence (AI), particularly deep learning technology, the application of image analysis in medical imaging and pathological analysis has grown significantly[7,8]. Deep learning algorithms, through automated feature learning and extraction, surpass traditional manual feature extraction methods, providing a more comprehensive and in-depth capacity for pathological information mining[9]. This technology demonstrates considerable potential in cancer pathology analysis, markedly enhancing diagnostic accuracy and efficiency[10,11]. The progression of esophageal cancer involves several distinct pathological stages, including esophagitis, Barrett’s esophagus, and EAC. Early diagnosis of these stages is crucial for effective prevention and treatment[12,13]. However, existing pathological analysis methods struggle to differentiate these pathological types at early stages, resulting in missed or delayed diagnoses for many patients[14]. Thus, leveraging modern technology - especially deep learning - to improve the precision of pathological classification and staging diagnosis in esophageal cancer has become an urgent scientific challenge.

In the field of medical imaging analysis, deep learning models have become essential tools for disease diagnosis due to their powerful feature extraction capabilities and high-precision classification performance[15,16]. Esophageal cancer, a highly heterogeneous malignant tumor, requires early diagnosis to improve patient survival rates. However, traditional imaging analysis methods rely on manual feature extraction and shallow machine learning models, which struggle to capture the subtle differences in lesion areas. This challenge is particularly evident in complex medical images where early-stage lesions may exhibit hidden features, increasing the risk of misdiagnosis or missed diagnosis. In recent years, deep learning techniques - especially convolutional neural networks (CNNs)[17,18] and Vision Transformers (ViTs)[19] - have significantly advanced medical image analysis. These models can automatically learn multi-level features within images, enabling more accurate identification of lesion areas. However, traditional CNN models face limitations in capturing long-range dependencies, while ViT models, despite overcoming this issue through self-attention mechanisms, suffer from high computational complexity and limited ability to capture fine-grained local details.

Against this background, the Wave-ViT model[20] emerges as an innovative approach that integrates wavelet transform with transformer architecture, offering distinct advantages. Wavelet transform effectively captures multi-scale image features, which is particularly beneficial in medical imaging where lesions can exhibit different morphological and textural characteristics at varying scales. By incorporating wavelet transform into the ViT framework, Wave-ViT retains the transformer’s strengths in global feature extraction while enhancing sensitivity to local details, enabling a more comprehensive analysis of complex medical images. Additionally, Wave-ViT reduces computational complexity, improving efficiency and making it more suitable for large-scale medical imaging data analysis.

Wave-ViT’s advantages are especially evident in esophageal cancer diagnosis. Early-stage esophageal lesions, such as Barrett’s esophagus or mild dysplasia, often present as subtle mucosal changes in medical images. The multi-scale feature extraction capability of Wave-ViT allows for the precise identification of these early signals[21]. Moreover, the high computational efficiency of Wave-ViT enables rapid processing of endoscopic or computed tomography images, providing real-time diagnostic support for clinicians. Consequently, using Wave-ViT for esophageal cancer diagnosis not only enhances diagnostic accuracy and robustness but also offers an efficient and reliable tool for clinical applications, potentially advancing early screening and precision treatment of esophageal cancer.

In this context, this project introduces a deep learning-based approach that applies advanced algorithms, including multi-layer perceptron (MLP), residual network (ResNet), transformer, and Wave-ViT, to automate the analysis and identification of pathological features in esophageal cancer images. Among these models, the transformer and Wave-ViT use self-attention mechanisms, and Wave-ViT additionally extracts frequency-domain features, which helps capture complex structures and lesion areas within pathological images. In particular, Wave-ViT employs wavelet transform to extract high- and low-frequency information from images, enhancing the model’s sensitivity and diagnostic accuracy for esophageal cancer lesions. The inclusion of frequency domain information not only improves the model’s ability to capture both local and global image features but also enables differentiation of subtle variations across pathological stages, thereby increasing diagnostic precision and stability.

Additionally, this study conducts experimental validation using open datasets such as Hyper Kvasir, which includes a large volume of high-quality endoscopic images of esophageal pathological findings labeled by professional endoscopists, covering various pathological stages such as esophagitis and Barrett’s esophagus. Through the training and optimization of deep learning models, the study exploits the key pathological features within these images to construct an accurate diagnostic model for the classification and staging of esophageal cancer. This model not only aids clinicians in achieving faster and more accurate diagnoses but also provides novel technological support for the early detection and personalized treatment of esophageal cancer. In summary, this project addresses the clinical needs for esophageal cancer diagnosis and leverages advances in deep learning technology. By incorporating deep learning and frequency domain analysis, it aims to enhance the accuracy and efficiency of pathological classification and staging for esophageal cancer. This research holds significant scientific value and has the potential to contribute to improved early diagnostic capabilities and treatment outcomes for esophageal cancer in clinical applications[22].

This study aims to collect clinical imaging data from various pathological stages of esophageal cancer to enable a detailed performance comparison of four representative deep learning models in the precise diagnosis of esophageal cancer. This data serves as a strong foundation for supporting early detection and treatment of the disease. The primary source of data is the Hyper Kvasir dataset[23], which was collected using endoscopy equipment from the Vestre Viken Health Trust (VV) in Norway. VV comprises four hospitals that provide healthcare services to approximately 470000 people. One of these hospitals, Bærum Hospital, houses a large gastroenterology department that has contributed training data and will continue to expand the dataset in the future. The dataset used in this study was obtained through the following steps: (1) Endoscopic examination: A research team conducted routine endoscopic examinations in hospitals, covering various parts of the gastrointestinal tract, including the esophagus, stomach, and colon. High-resolution endoscopy was used to capture images and videos, ensuring high-quality data collection; (2) Image and video recording: During the examinations, endoscopic operators recorded videos and captured multiple static images, documenting both normal and diseased tissues. Clinicians also took additional images of regions of interest based on real-time observations, providing a foundation for subsequent data annotation; and (3) Data storage: The collected images and videos were stored in the Picsara image documentation database, an extension of the hospital’s electronic medical records system used for managing medical images. Additionally, these images have been meticulously annotated by one or more medical experts from VV and the Cancer Registry of Norway (CRN). 
The CRN conducts research that generates new insights into cancer and operates as an independent entity under the Oslo University Hospital Trust, within the South-Eastern Norway Regional Health Authority. The CRN manages the national cancer screening program, with the objective of detecting cancer or precancerous lesions early to prevent cancer-related mortality.

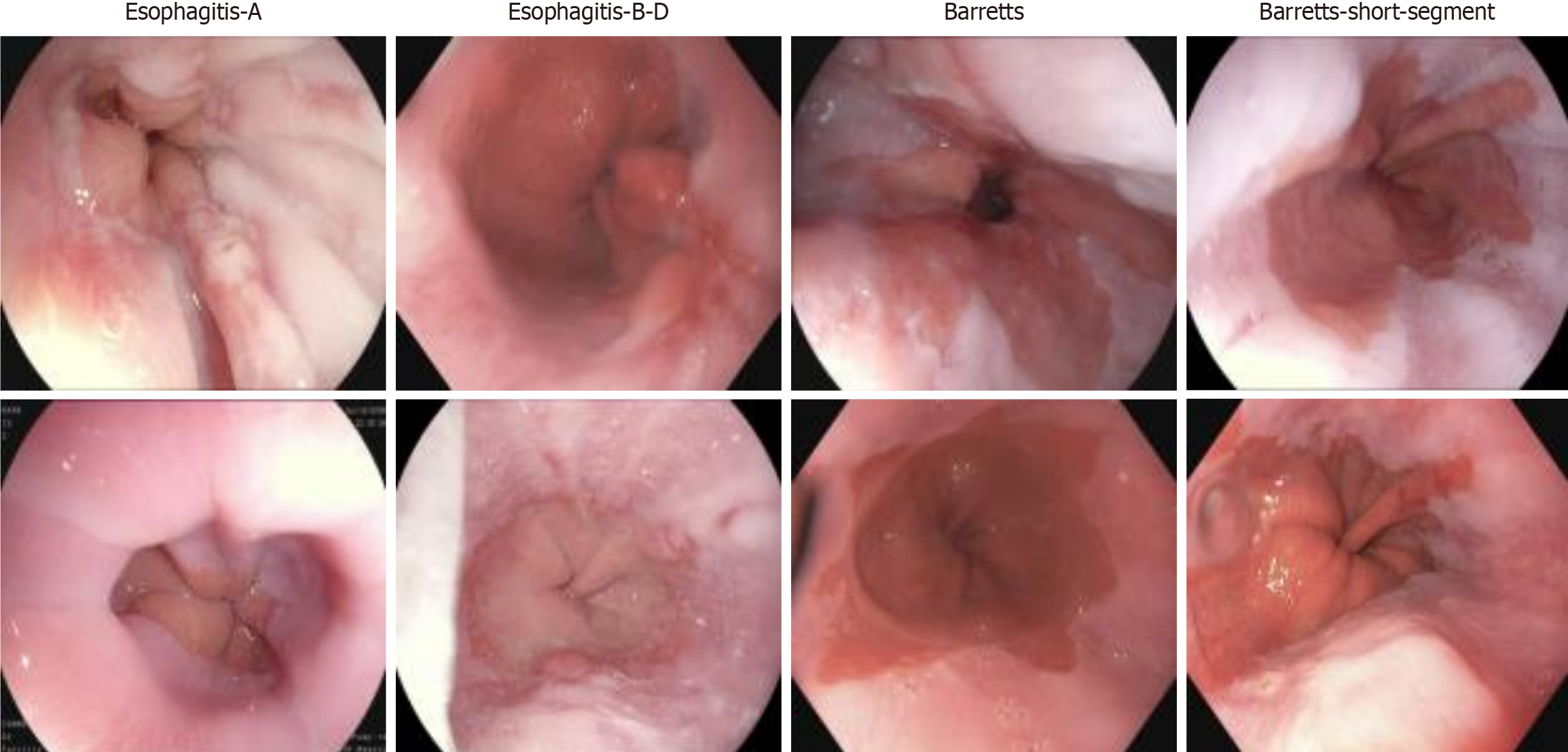

Additionally, the Hyper Kvasir dataset consists of images annotated and validated by experienced endoscopists, covering various categories, including anatomical landmarks, pathological findings, and endoscopic procedures within the gastrointestinal tract. Many categories contain hundreds of images. For this study, we used a subset of this dataset comprising images labeled as esophagitis grade A, esophagitis grades B-D (Los Angeles classification), short-segment Barrett’s esophagus, and Barrett’s esophagus; representative images are shown in Figure 1. As part of preprocessing, the dataset’s creators performed data cleaning before publication, removing low-quality or blurry images to ensure high dataset quality. These images have varying resolutions, ranging from 720 × 576 to 1920 × 1072 pixels, and are organized into separate folders named according to their contents. This study focuses on the reflux esophagitis and Barrett’s esophagus subsets of the dataset.

The original dataset contains images of varying resolutions, which reduces the efficiency of model training. Images of different sizes require different amounts of computational resources and processing time, leading to inconsistent processing speeds, and they cannot be stacked into a single tensor for mini-batch training without first being resized or padded. Resolution variation can also reduce the model’s generalization ability: the model must learn features from images at different scales, which increases training complexity and may cause performance to vary across images. Furthermore, high-resolution images consume substantial memory, especially in large datasets, which can lead to memory overflow and destabilize the training process.

To address these issues, this study standardizes the resolution of all imaging data to 256 × 256 pixels during the preprocessing stage. This approach balances the retention of essential image information against computational efficiency. The choice of 256 × 256 pixels rests on the following considerations: First, this resolution preserves sufficient image detail to meet the model’s feature extraction requirements, whereas lower resolutions could lose critical details and reduce model accuracy. Second, this resolution keeps computation efficient, avoiding the excessive processing costs of high-resolution images. While higher resolutions may capture additional information, the associated increase in computational cost and training time would likely outweigh any performance gains. Thus, a resolution of 256 × 256 pixels offers a practical balance between resource consumption and information retention, enhancing training efficiency and, ultimately, the model’s predictive accuracy and stability[24].
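The resizing step can be sketched as follows. This is a minimal NumPy implementation of bilinear interpolation written for illustration only; the function name `resize_bilinear` is hypothetical, and the study does not report which library or interpolation method it used (in practice an image library such as Pillow or OpenCV would usually do this).

```python
import numpy as np

# Sketch only: the study's actual resizing method is not reported.
def resize_bilinear(img: np.ndarray, out_h: int = 256, out_w: int = 256) -> np.ndarray:
    """Resize an H x W x C image to out_h x out_w x C with bilinear interpolation."""
    in_h, in_w = img.shape[:2]
    # Map each output pixel centre back to a fractional input coordinate.
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None, None]  # vertical interpolation weights
    wx = (xs - x0)[None, :, None]  # horizontal interpolation weights
    img = img.astype(np.float64)
    # Interpolate horizontally on the two neighbouring rows, then vertically.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


# Usage: a 1920 x 1072 frame stored as a (1072, 1920, 3) array (random stand-in data).
frame = np.random.default_rng(0).random((1072, 1920, 3))
small = resize_bilinear(frame)  # shape (256, 256, 3)
```

Resizing straight to a square changes the aspect ratio of non-square frames; cropping or padding first would preserve it, at the cost of discarding or adding border pixels.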

This study aims to develop an early-stage classification model for esophageal cancer and to evaluate the adaptability of various models. To this end, we selected four deep learning models that perform well in image recognition and sequential data processing: The MLP, ResNet, transformer, and Wave-ViT. Each model has unique strengths in ar

MLP: The MLP is a basic feedforward neural network consisting of multiple fully connected layers. It learns data features through simple linear transformations and nonlinear activation functions. The MLP is advantageous for its simple structure, making it easy to understand and implement. However, its capacity to learn high-dimensional data and complex features is limited, especially in image processing, where extensive feature engineering is required to achieve satisfactory results. In this study, MLP is selected as a baseline model to facilitate comparison with the performance improvements achieved by more complex models.

ResNet: ResNet is a CNN that addresses the vanishing gradient problem in deep network training by introducing residual blocks, enabling the training of deeper networks with improved performance. ResNet uses skip connections, which add the input directly to the output of subsequent layers, facilitating easier gradient backpropagation. ResNet has achieved remarkable success in image recognition due to its strong feature extraction capabilities, making it a key candidate for this study. ResNet was chosen for its advantages in handling image data, particularly in early-stage esophageal cancer diagnosis, where extracting complex image features may be necessary[25,26].

Transformer model: Originally developed for natural language processing (NLP), the transformer model is built around a core self-attention mechanism. This self-attention mechanism captures long-range dependencies within the data, enabling the transformer model to effectively process sequential information. In recent years, the transformer has also been applied successfully to image recognition. In this study, the transformer model is selected to explore its potential in handling early-stage esophageal cancer imaging data and other sequential feature data, such as time-series clinical indicators. Its powerful capacity for modeling long-range dependencies may be beneficial for capturing patterns related to disease progression[27,28].

Wave-ViT: Wave-ViT is a variant of the ViT that incorporates wavelet transforms. Within its attention blocks, feature maps are decomposed by a wavelet transform into frequency sub-bands, and the transformer learns from these frequency-domain features. This approach effectively captures frequency information, which may be more advantageous than spatial information alone in certain applications. Wave-ViT was selected to explore its potential benefits in processing early-stage esophageal cancer imaging data. If early esophageal cancer lesions exhibit more pronounced differences in frequency domain features, Wave-ViT may be more effective than traditional spatial domain transformer models[29,30].

In summary, the selection of these four models aims to comprehensively evaluate the performance of different architectures in early-stage esophageal cancer diagnosis. Through comparative analysis, the goal is to select or integrate the optimal model to construct an early-stage esophageal cancer classification model with high accuracy and strong adaptability. The model’s adaptability will be assessed based on its generalization ability across different datasets and robustness to variations in data distribution. Additionally, since ResNet, the transformer model, and Wave-ViT all share a U-shaped network framework (Supplementary Figure 1), the following analysis will focus on the key differences among these three models.

The MLP is a feedforward artificial neural network consisting of multiple layers of fully connected neurons, each employing a nonlinear activation function[31]. MLP is capable of distinguishing non-linearly separable data, making it widely applicable across various domains such as image recognition and NLP. The strength of MLP lies in its ability to learn and represent complex input-output relationships, making it a powerful machine learning model. MLP is typically trained using the backpropagation algorithm, enabling it to learn and optimize based on input data and corresponding output labels. Its structure includes an input layer, hidden layers, and an output layer. The input layer receives data, the hidden layers perform feature extraction and transformation, and the output layer generates predictions. Connections between neurons carry weights and biases, which are adjusted during training to minimize the loss function. In sum, MLP is a robust machine learning model capable of learning and representing complex relationships, making it a widely used approach in various fields.
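The input-hidden-output structure described above can be illustrated with a minimal NumPy forward pass. This is a sketch rather than the study's MLP: the layer sizes, ReLU activation, He-style weight initialization, and four-class softmax output (matching the four image categories used in this study) are assumptions, and training by backpropagation is omitted.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_mlp(sizes, seed=0):
    """Return (weight, bias) pairs for consecutive layer sizes, e.g. [inputs, hidden, outputs]."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def mlp_forward(x, params):
    """Hidden layers: affine map + ReLU; output layer: affine map + softmax."""
    for w, b in params[:-1]:
        x = relu(x @ w + b)
    w, b = params[-1]
    return softmax(x @ w + b)


# Usage (hypothetical sizes): a flattened 8x8 RGB input, 64 hidden units, 4 classes.
params = init_mlp([8 * 8 * 3, 64, 4])
x = np.random.default_rng(1).random((2, 8 * 8 * 3))
probs = mlp_forward(x, params)  # shape (2, 4); each row sums to 1
```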

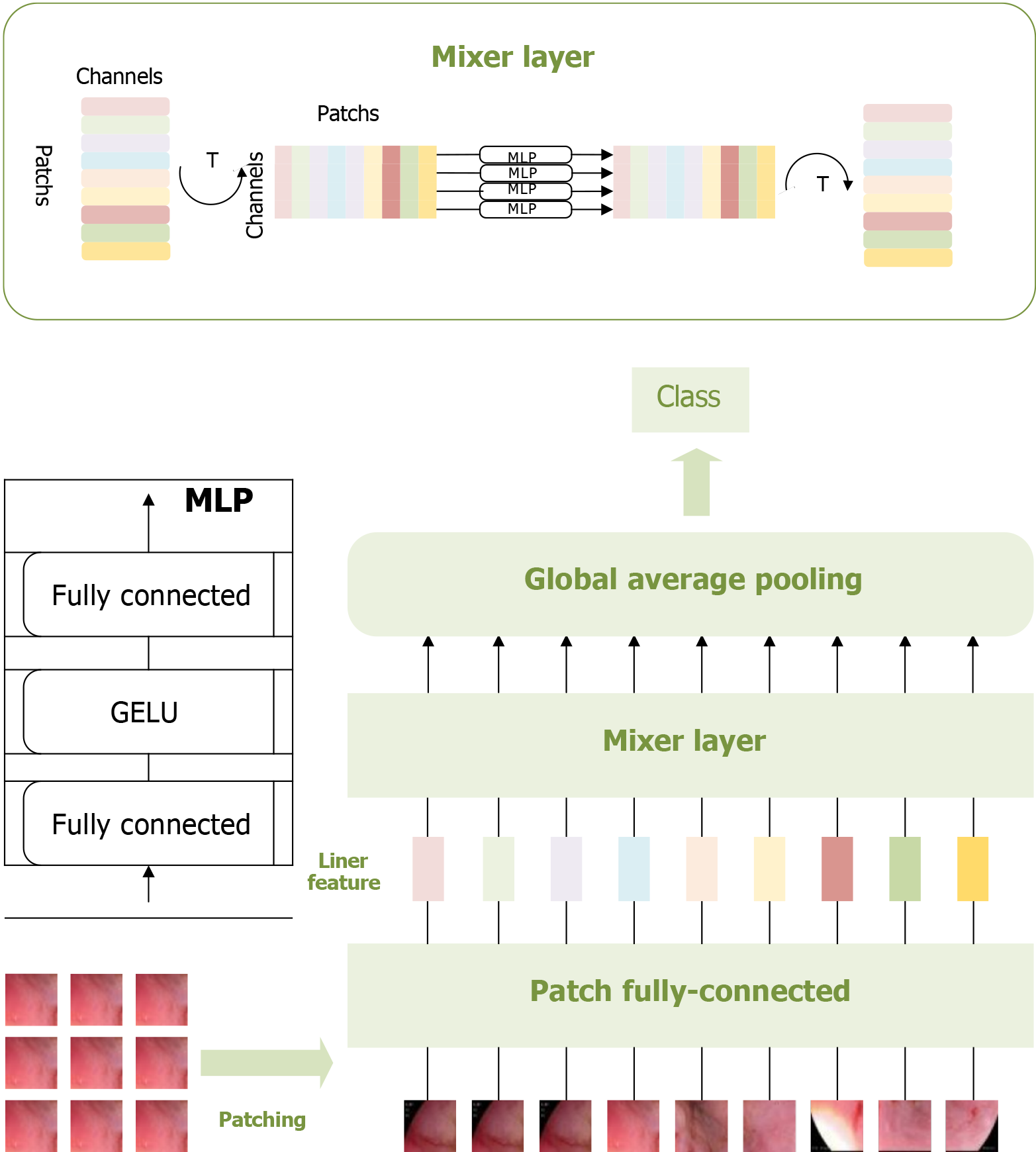

To fully leverage the potential of MLP, Tolstikhin et al[32] developed the MLP-mixer, a purely MLP-based architecture. The MLP-mixer comprises two types of layers: channel-mixing MLPs, which operate independently on each image patch and mix features at each location, and token-mixing MLPs, which are applied across patches and mix spatial information. The application of the MLP-mixer model to the dataset in this study is illustrated in Figure 2.
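The two kinds of mixing can be sketched as a single Mixer layer in NumPy, following the structure described by Tolstikhin et al: a token-mixing MLP applied along the patch axis and a channel-mixing MLP applied along the feature axis, each preceded by layer normalization and wrapped in a skip connection. For brevity this sketch omits the learnable scale and shift of layer normalization, uses the tanh approximation of GELU, and uses random weights with hypothetical sizes rather than the configuration trained in this study.

```python
import numpy as np

def to_patches(img, p):
    """Split an (H, W, C) image into non-overlapping p x p patches, each flattened."""
    h, w, c = img.shape
    return img.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4).reshape(-1, p * p * c)

def layer_norm(x, eps=1e-6):
    # Normalizes the last axis; learnable scale/shift omitted in this sketch.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x ** 3)))

def mlp(x, w1, w2):
    return gelu(x @ w1) @ w2

def mixer_layer(x, tok_w1, tok_w2, ch_w1, ch_w2):
    """x: (num_patches, channels). Returns an array of the same shape."""
    # Token mixing: transpose so the MLP runs along the patch axis (shared across channels).
    y = x + mlp(layer_norm(x).T, tok_w1, tok_w2).T
    # Channel mixing: the MLP runs along the channel axis (shared across patches).
    return y + mlp(layer_norm(y), ch_w1, ch_w2)


# Usage with hypothetical sizes: a 32x32 RGB image, 8x8 patches, 32 channels.
rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))
patches = to_patches(img, 8)                      # (16 patches, 192 values)
x = patches @ rng.normal(0, 0.05, (192, 32))      # per-patch linear embedding -> (16, 32)
out = mixer_layer(x,
                  rng.normal(0, 0.1, (16, 64)), rng.normal(0, 0.1, (64, 16)),    # token MLP
                  rng.normal(0, 0.1, (32, 128)), rng.normal(0, 0.1, (128, 32)))  # channel MLP
```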

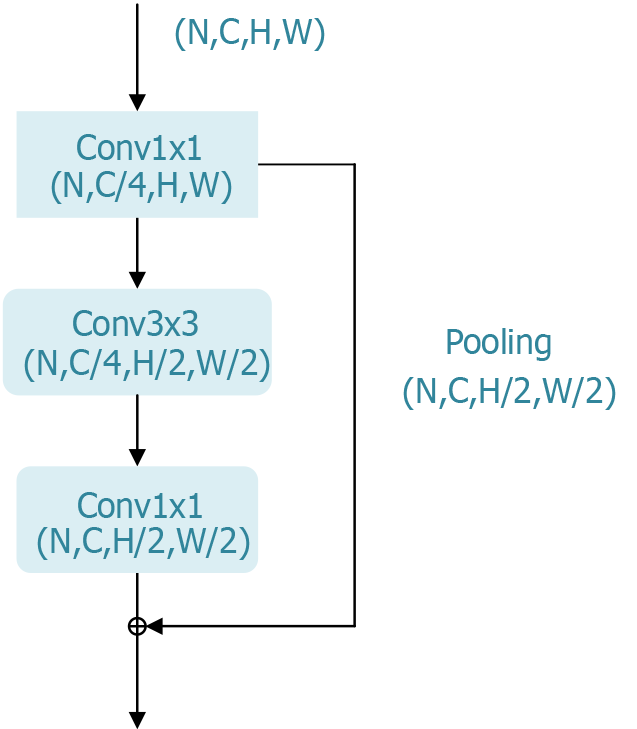

ResNet is a deep CNN designed to address the issues of vanishing gradients and network degradation in deep neural network training by introducing “residual blocks”. This innovation allows for the training of deeper networks with improved performance[25].

The core concept of ResNet is to have the stacked layers in each block learn a residual mapping rather than the full mapping: if H(x) is the desired output for input x, the layers learn F(x) = H(x) - x (output minus input), and the block outputs F(x) + x. This is achieved by adding a “skip connection” to each residual block, which adds the block’s input directly to its output. With this design, gradients can propagate more easily back to earlier layers even as network depth increases, mitigating the vanishing gradient problem. The significance of ResNet lies in its ability to overcome training limitations in deep neural networks, enabling the training of deeper networks. Such deeper networks can learn more complex features, enhancing the model’s representational capacity and accuracy[33].

ResNet has achieved significant success in computer vision tasks such as image classification, object detection, and image segmentation and has become the foundational architecture for many subsequent deep learning models. Its outstanding performance in the ILSVRC 2015 competition further validated its effectiveness, making it a primary choice for evaluating the most suitable model for the early-stage classification of esophageal cancer in this study. To adapt ResNet for this specific application, we modified the network’s decoder structure from its original design for image classification to better suit esophageal cancer classification needs. The bottleneck block, a key component of ResNet’s residual module, is shown in Figure 3.
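A bottleneck block of the kind shown in Figure 3 can be sketched in NumPy: a 1 × 1 convolution reduces the channel count, a 3 × 3 convolution processes the reduced features, a second 1 × 1 convolution restores the channel count, and the skip connection adds the block input before the final ReLU. This is a simplified illustration: batch normalization, strides, and the projection shortcut used when input and output shapes differ are omitted, and the channel counts are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def conv1x1(x, w):
    """x: (C_in, H, W); w: (C_out, C_in). A 1x1 convolution mixes channels per pixel."""
    return np.einsum("oc,chw->ohw", w, x)

def conv3x3(x, w):
    """x: (C_in, H, W); w: (C_out, C_in, 3, 3). Stride 1, zero padding 1 keeps H and W."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Add the contribution of kernel tap (i, j) from the shifted input window.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def bottleneck(x, w_reduce, w_mid, w_expand):
    """Residual bottleneck block: ReLU(F(x) + x), where F is 1x1 -> 3x3 -> 1x1."""
    out = relu(conv1x1(x, w_reduce))   # reduce channels
    out = relu(conv3x3(out, w_mid))    # spatial processing at reduced width
    out = conv1x1(out, w_expand)       # restore the original channel count
    return relu(out + x)               # skip connection adds the input back


# Usage with hypothetical sizes: 64 channels on a 16x16 feature map, bottleneck width 16.
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 16, 16))
y = bottleneck(x,
               rng.normal(0, 0.1, (16, 64)),         # 1x1: 64 -> 16 channels
               rng.normal(0, 0.1, (16, 16, 3, 3)),   # 3x3: 16 -> 16 channels
               rng.normal(0, 0.1, (64, 16)))         # 1x1: 16 -> 64 channels
```

If the residual branch learns nothing (all expansion weights zero), the block reduces to ReLU(x), which is why very deep stacks of such blocks remain easy to optimize.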

The transformer model is a deep learning architecture based on attention mechanisms, revolutionizing NLP and other sequential data processing fields. Unlike traditional sequential models that rely on recurrent neural networks or CNNs, the transformer eliminates the need for recurrence. Instead, it processes all elements of the input sequence in parallel through a self-attention mechanism, significantly enhancing computational efficiency and its ability to handle long sequences.

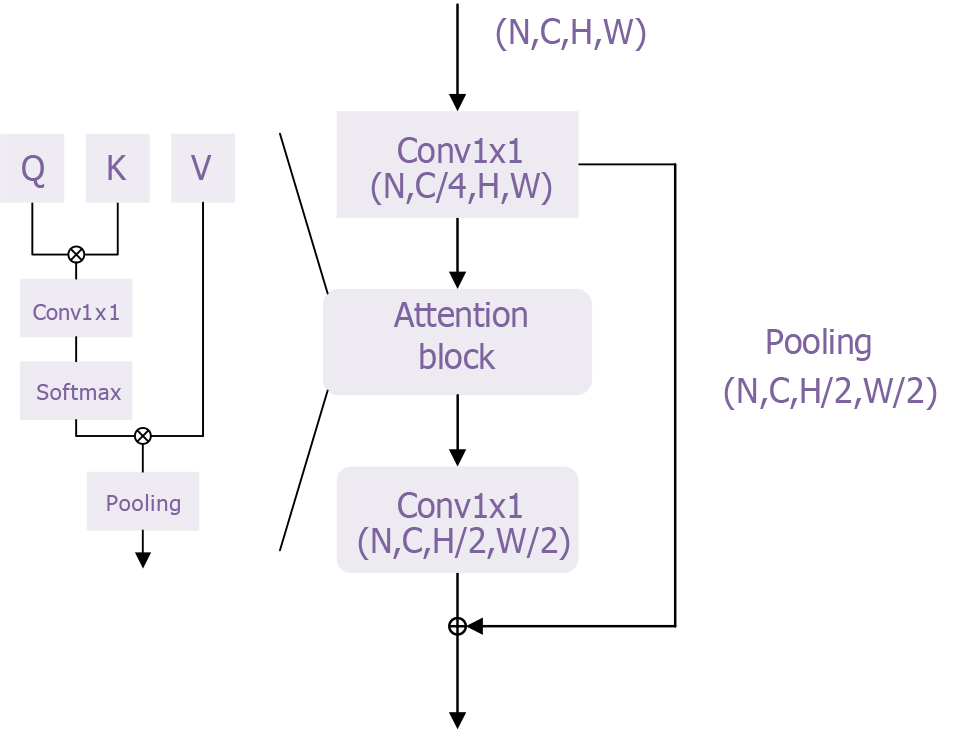

In this study, we adapted the basic transformer model proposed by Chen et al[34] to develop a classification model for esophageal cancer. The overall network architecture is shown in Supplementary Figure 1, with the transformer module illustrated in Figure 4. This transformer module overcomes the limitations of traditional sequential models by addressing the inefficiencies of recurrent neural networks in processing long sequences and mitigating the vanishing gradient problem, enabling the model to handle longer sequences effectively. Additionally, its self-attention mechanism enhances the model’s representational power by better capturing contextual information and long-range dependencies, thereby improving both expressiveness and accuracy.
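The self-attention computation described above can be sketched in a few lines of NumPy. This is a single-head illustration of scaled dot-product attention, softmax(QK^T/√d)V; multi-head projection, positional encoding, the feed-forward sublayer, and residual connections are omitted, and the token count and widths are hypothetical rather than those of the module adapted from Chen et al[34].

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    x: (n_tokens, d_model); wq, wk, wv: (d_model, d_head).
    Every token attends to every other token in one matrix product,
    so the whole sequence is processed in parallel (no recurrence).
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (n_tokens, n_tokens) pairwise similarities
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


# Usage with hypothetical sizes: 16 patch embeddings of width 32, head width 8.
rng = np.random.default_rng(0)
tokens = rng.normal(size=(16, 32))
wq, wk, wv = (rng.normal(0.0, 0.2, size=(32, 8)) for _ in range(3))
out, attn = self_attention(tokens, wq, wk, wv)  # out: (16, 8); attn: (16, 16)
```

The (n_tokens, n_tokens) score matrix is also why standard self-attention becomes costly as the number of tokens grows.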

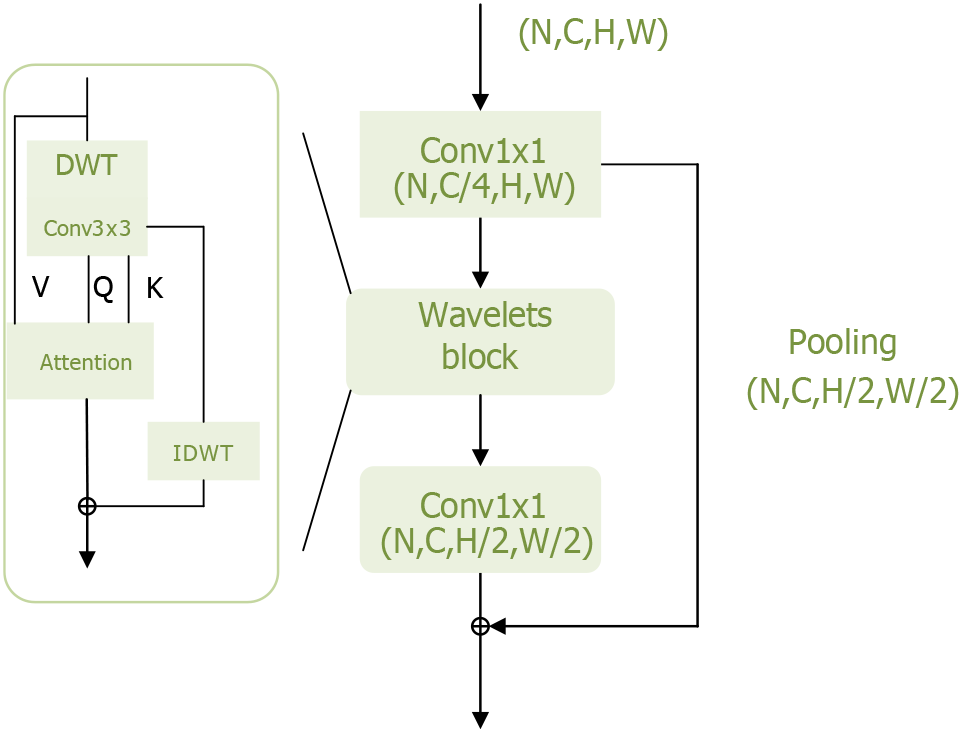

To further improve model performance, it is crucial to minimize information loss, particularly regarding high-frequency components such as texture details within the target. Yao et al[20] addressed this by modifying the transformer structure based on wavelet transforms, enabling the model to extract more frequency domain information and thereby enhance its performance. The wavelets module of the Wave-ViT model is shown in Figure 5.

The wavelets module performs reversible downsampling through wavelet transform, aiming to retain original image details for self-attention learning while reducing computational costs. Wavelet transform is a fundamental time-frequency analysis method that decomposes the input signal into different frequency sub-bands to address aliasing issues. Specifically, the discrete wavelet transform (DWT) achieves reversible downsampling by transforming 2D data into four discrete wavelet sub-bands. The low-frequency sub-band reflects the coarse-grained structure of the primary object, while the high-frequency sub-bands retain fine-grained texture details[35]. In this way, different levels of image detail are preserved in lower-resolution sub-bands without information loss. Additionally, the inverse DWT can be used to reconstruct the original image in wavelet ViTs. This information-preserving transform enables the design of an efficient transformer block with lossless and reversible downsampling, facilitating self-attention learning on multi-scale feature maps.
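The reversible downsampling described above can be illustrated with the simplest wavelet, the Haar wavelet. The sketch performs a one-level 2D Haar DWT and its inverse on a single-channel array; using Haar here is an assumption for illustration, and in a Wave-ViT-style block the transform would be applied to every channel of a feature map. Sign and naming conventions for the three detail sub-bands vary between libraries.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2D Haar DWT of an (H, W) array with even H and W.

    Returns four (H/2, W/2) sub-bands: LL (coarse structure) and three
    detail bands LH, HL, HH (fine texture along different orientations).
    """
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right
    ll = (a + b + c + d) / 2
    lh = (a + b - c - d) / 2
    hl = (a - b + c - d) / 2
    hh = (a - b - c + d) / 2
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuilds the full-resolution array exactly."""
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=ll.dtype)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[0::2, 1::2] = (ll + lh - hl - hh) / 2
    x[1::2, 0::2] = (ll - lh + hl - hh) / 2
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x


# Usage: 64 values in, four 4x4 sub-bands (64 values) out, then exact reconstruction.
rng = np.random.default_rng(0)
x = rng.random((8, 8))
bands = haar_dwt2(x)
x_rec = haar_idwt2(*bands)
```

Because the four half-resolution sub-bands together hold exactly as many values as the input and the inverse recovers it exactly, attention can operate on the smaller sub-band maps without discarding detail; halving height and width reduces the number of spatial positions by a factor of 4.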

In deep learning research and application, evaluating model performance is crucial. This study selects three commonly used evaluation metrics: Accuracy, computational complexity, and efficiency. (1) Accuracy measures the proportion of correct predictions among all predictions. It reflects the overall classification performance of the model, with values closer to 1 indicating stronger predictive capability. However, when classes are imbalanced, accuracy alone can be misleading, so additional metrics such as precision, recall, and F1-score are needed for a comprehensive assessment of the model’s performance. These metrics are defined as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where true positives (TP) is the number of actual positive cases correctly classified as positive, true negatives (TN) is the number of actual negative cases correctly classified as negative, false positives (FP) is the number of actual negative cases incorrectly classified as positive, and false negatives (FN) is the number of actual positive cases incorrectly classified as negative; (2) Computational complexity evaluates the computational resources required for training or inference. This metric is critical for understanding how computational demand scales with increasing input data size. Lower computational complexity implies better scalability and faster processing, making a model more suitable for practical applications. It is expressed as a function of N, the input data size, and d, the depth or dimensionality of the model; and (3) Efficiency measures the number of correct predictions per unit of time. In real-world applications, optimizing computation time and resource usage is essential. A highly efficient model can make more predictions in a shorter time, improving responsiveness, especially in real-time decision-making scenarios. Maintaining high efficiency enhances user experience and overall system performance.
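The standard definitions of accuracy, precision, recall, and F1-score can be checked with a small helper. This pure-Python sketch takes binary confusion counts; for the four-category task in this study, each class would be treated one-vs-rest and the per-class values averaged (macro-averaging is a common choice, but the study does not specify its averaging scheme). Returning 0.0 when a denominator is zero is an assumed convention, and the counts in the example are hypothetical.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 from binary confusion counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    # Assumed convention: report 0.0 instead of dividing by zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Usage with hypothetical counts:
m = classification_metrics(tp=40, tn=30, fp=10, fn=20)
# accuracy 0.7, precision 0.8, recall ~0.667, F1 ~0.727 (exactly 8/11)
```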

To summarize, accuracy, computational complexity, and efficiency are three key evaluation metrics for deep learning models. Accuracy provides a direct understanding of the model’s predictive performance, while computational complexity and efficiency assess the model’s resource utilization and scalability. A comprehensive evaluation using these metrics guides model improvement and optimization, ensuring a balance between performance and practical usability in real-world applications.

In this study, various software tools and statistical methods were used to construct and evaluate the classification models. Programming and data processing were primarily conducted in Jupyter Notebook using Python. Data processing and visualization were performed with the Python libraries pandas and Matplotlib, while schematic diagrams were created with Visio. Statistical methods included data normalization; calculation of model accuracy, sensitivity, and specificity; and assessment of model performance through loss function curves. In the data processing phase, imaging data were first normalized to eliminate differences in scale between features, and all images were resized to a consistent resolution to facilitate subsequent model training.
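The paper does not specify its normalization scheme. One common choice, sketched below as an assumption, is to scale 8-bit pixel values to [0, 1] and then standardize each color channel to zero mean and unit variance using statistics computed on the training set; `normalize_images` is a hypothetical helper name.

```python
import numpy as np

def normalize_images(batch: np.ndarray, mean=None, std=None):
    """Scale uint8 pixels to [0, 1], then standardize each color channel.

    batch: (N, H, W, C) array. If mean/std are not given they are computed
    from this batch; they should come from the training set only and be
    reused unchanged for the validation and test sets.
    """
    x = batch.astype(np.float32) / 255.0
    if mean is None:
        mean = x.mean(axis=(0, 1, 2))         # per-channel mean
    if std is None:
        std = x.std(axis=(0, 1, 2)) + 1e-8    # per-channel std (guarded against zero)
    return (x - mean) / std, mean, std


# Usage with random stand-in images (hypothetical data):
rng = np.random.default_rng(0)
train_batch = rng.integers(0, 256, size=(4, 16, 16, 3)).astype(np.uint8)
train_norm, mean, std = normalize_images(train_batch)
test_batch = rng.integers(0, 256, size=(2, 16, 16, 3)).astype(np.uint8)
test_norm, _, _ = normalize_images(test_batch, mean, std)  # reuse training statistics
```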

During the model evaluation phase, we calculated accuracy, sensitivity, and specificity to assess the model’s performance in practical applications. Accuracy reflects the model’s overall predictive capability, sensitivity measures its ability to identify positive cases, and specificity evaluates its ability to recognize negative samples. Calculating these metrics provides a comprehensive understanding of the model’s performance, ensuring its effectiveness in clinical applications.
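Because this study distinguishes four image categories, sensitivity and specificity have to be computed per class in a one-vs-rest fashion. The sketch below shows one common way to do this from a multi-class confusion matrix; the study does not state how it aggregated per-class values, and the confusion matrix in the example is invented purely to illustrate the arithmetic.

```python
import numpy as np

def per_class_sensitivity_specificity(cm):
    """cm[i, j] = number of images of true class i predicted as class j.

    Each class is treated one-vs-rest. A class with no true (or no negative)
    samples would produce a division by zero and a nan entry.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # class-i images predicted as another class
    fp = cm.sum(axis=0) - tp  # other images predicted as class i
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Usage with a hypothetical 3-class confusion matrix:
cm = np.array([[8, 1, 1],
               [2, 6, 2],
               [0, 1, 9]])
sens, spec = per_class_sensitivity_specificity(cm)
# sens -> [0.8, 0.6, 0.9], spec -> [0.9, 0.9, 0.85]
```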

During model training, we used the cross-entropy loss function as the training objective. Cross-entropy is well suited to classification tasks because it quantifies the discrepancy between the model’s predicted probability distribution and the true labels; minimizing it pushes the predicted probability of the correct class toward 1, thereby improving classification accuracy. To optimize the learning process, we selected the Adam optimizer, which combines momentum with per-parameter adaptive learning rates. This optimization method typically converges quickly and handles sparse gradients effectively.
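The loss and optimizer named above can be sketched in NumPy. `cross_entropy` computes the mean negative log-probability of the true class using a numerically stable log-softmax, and `Adam` implements the published update rule with bias-corrected first and second moment estimates. The default hyperparameters shown (lr = 1e-3, β1 = 0.9, β2 = 0.999) are the commonly used defaults, not values reported by this study; the toy objective in the example is hypothetical. In a framework one would normally use built-in versions such as `torch.nn.CrossEntropyLoss` and `torch.optim.Adam` in PyTorch.

```python
import numpy as np

def cross_entropy(logits: np.ndarray, labels: np.ndarray) -> float:
    """Mean cross-entropy between softmax(logits) and integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # stabilize exp()
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


class Adam:
    """Adam update rule with bias-corrected first and second moment estimates."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # running mean of gradients (momentum)
        self.v = None  # running mean of squared gradients (adaptive scale)
        self.t = 0     # step counter used for bias correction

    def step(self, param: np.ndarray, grad: np.ndarray) -> np.ndarray:
        if self.m is None:
            self.m = np.zeros_like(param)
            self.v = np.zeros_like(param)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return param - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Usage: uniform logits over 4 classes give a loss of ln(4), about 1.386.
loss = cross_entropy(np.zeros((5, 4)), np.array([0, 1, 2, 3, 0]))

# Usage: Adam minimizing the toy objective (w - 3)^2, whose gradient is 2(w - 3).
opt = Adam(lr=0.05)
w = np.array([0.0])
for _ in range(2000):
    w = opt.step(w, 2 * (w - 3.0))
```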

Batch size was treated as a key parameter for efficient data processing: a well-chosen batch size improves training efficiency while keeping memory usage manageable. The learning rate is another key hyperparameter; it controls the step size of weight updates and directly influences both convergence speed and final model performance. Through experimentation, we selected an initial learning rate that ensured stable training.

The entire training process was conducted in a high-performance computing environment using an NVIDIA RTX 3090 GPU, whose parallel computing capabilities and efficient memory management substantially accelerated deep learning model training. These hardware settings and parameter configurations together improved training efficiency and model performance and ensured the smooth execution of the deep learning tasks.

Additionally, we used the loss function value curve to further evaluate model performance. The loss function curve is a vital tool for assessing training effectiveness, diagnosing model issues, and guiding improvements. By analyzing the shape, trend, and differences in loss values between the training and validation sets, we gain insights into the model’s learning process and can identify the optimal-performing model. For data visualization, we used the matplotlib library to display arrays, illustrating spatial features of samples and model performance. This visualization approach aids in understanding data distribution and clearly reflects the model’s performance under varying conditions, providing valuable insights for future research and clinical applications. Through the integration of these statistical methods and visualization techniques, we comprehensively validate the model’s effectiveness, ensuring its reliability in practical use.
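A common way to use the loss curves for model selection is to keep the checkpoint from the epoch with the lowest validation loss and to treat a growing gap between validation and training loss after that point as a sign of overfitting. The sketch below assumes that criterion (the study does not state its exact rule); `gap_tolerance` and the loss values in the example are hypothetical. The same two lists could be passed to Matplotlib's `plt.plot` to draw the curves.

```python
def select_best_epoch(train_loss, val_loss, gap_tolerance=0.1):
    """Return the epoch with the lowest validation loss and later overfitting epochs.

    An epoch after the best one is flagged when validation loss exceeds
    training loss by more than gap_tolerance (a hypothetical threshold).
    """
    best = min(range(len(val_loss)), key=lambda e: val_loss[e])
    gaps = [v - t for t, v in zip(train_loss, val_loss)]
    overfit_epochs = [e for e, g in enumerate(gaps) if e > best and g > gap_tolerance]
    return best, overfit_epochs


# Usage with hypothetical loss curves:
train = [1.20, 0.90, 0.70, 0.50, 0.40, 0.30]
val = [1.25, 0.95, 0.80, 0.78, 0.85, 0.95]
best, overfit = select_best_epoch(train, val)
# best -> 3, overfit -> [4, 5]
```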

In this study, the collected esophageal disease imaging data were first normalized. The data were then divided into training, test, and validation sets separately within each category, in proportion to the number of images in that category. Details of the data split are provided in Table 1. To further analyze the performance of each deep learning model, we conducted a statistical evaluation using quantitative metrics. Table 2 presents a comparison of the performance metrics for the four deep learning models - MLP, ResNet, transformer, and Wave-ViT - on the image recognition task. By examining these metrics, we gain a deeper understanding of each model’s efficiency, complexity, and performance, allowing us to draw important conclusions regarding their relative strengths and suitability for this task.

| Split | Esophagitis A | Esophagitis B-D | Barrett’s short-segment | Barrett’s |
| --- | --- | --- | --- | --- |
| Training set | 320 | 208 | 42 | 32 |
| Test set | 42 | 26 | 6 | 5 |
| Validation set | 41 | 26 | 5 | 4 |
| Total | 403 | 260 | 53 | 41 |
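A per-category (stratified) split of the kind summarized in Table 1 can be sketched as below. The 80/10/10 fractions, the random seed, and per-channel standardization with training-set statistics are assumptions made for illustration; the exact normalization used in the study is not specified, and the rounding here gives slightly different counts from Table 1 (for example 322/40/41 rather than 320/42/41 for Esophagitis A).

```python
import numpy as np


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split sample indices into train/test/validation sets class by class.

    Each class is shuffled and cut separately, so every set keeps roughly
    the same class proportions as the full dataset.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, test, val = [], [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = int(round(fractions[0] * len(idx)))
        n_test = int(round(fractions[1] * len(idx)))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:n_train + n_test])
        val.extend(idx[n_train + n_test:])  # the remainder goes to validation
    return np.array(train), np.array(test), np.array(val)


def standardize(images, train_idx):
    """Per-channel standardization of (N, H, W, C) images using training-set statistics only."""
    mean = images[train_idx].mean(axis=(0, 1, 2))
    std = images[train_idx].std(axis=(0, 1, 2)) + 1e-8  # avoid division by zero
    return (images - mean) / std


# Class totals taken from Table 1.
classes = ["Esophagitis A", "Esophagitis B-D", "Barrett's short-segment", "Barrett's"]
labels = np.repeat(classes, [403, 260, 53, 41])
train_idx, test_idx, val_idx = stratified_split(labels)
for cls in classes:
    print(cls, [int((labels[s] == cls).sum()) for s in (train_idx, test_idx, val_idx)])
```

Computing the normalization statistics on the training images only keeps information from the test and validation sets out of preprocessing.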

| Model name | Accuracy (%) | Input size (MB) | Params (MB) | Madd (G) | Flops (G) |
| --- | --- | --- | --- | --- | --- |
| MLP | 81.17 | 3.15 | 1.10 | 1.57 | 0.95 |
| ResNet | 85.44 | 3.15 | 45.20 | 71.21 | 35.63 |
| Transformer | 87.65 | 3.15 | 1.45 | 2.17 | 1.57 |
| Wave-ViT | 88.97 | 3.15 | 1.29 | 1.04 | 1.12 |

The significance of each metric in the table is as follows: (1) Accuracy (%): The model’s accuracy on the image recognition task, expressed as a percentage. Higher accuracy indicates better model performance; (2) Input size (MB): The size of the input image in megabytes (MB), which reflects the memory required for the model to process the image data; (3) Params (MB): The memory occupied by the model parameters, in megabytes (MB). It scales with the parameter count and therefore indicates model complexity; generally, more parameters imply a more complex model with higher computational demand; (4) Madd (G): The number of multiply-accumulate (Madd) operations, in billions (G). Madd is a common computational operation in deep learning models, and its count indicates the model’s computational complexity; and (5) Flops (G): The number of floating-point operations (flops), in billions (G). Floating-point operations include addition, subtraction, multiplication, and division, and the flops count is an essential indicator of the model’s computational complexity.
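The memory and operation counts in Table 2 follow from simple arithmetic. The sketch below assumes 32-bit floats (4 bytes per value) and decimal megabytes (10^6 bytes); under those assumptions a 3 × 512 × 512 input works out to about 3.15 MB, matching the Input size column, although the input resolution is not stated in this section and is a hypothesis. The 7 × 7 convolution is a hypothetical example layer, and counting one multiply-accumulate as two flops is only one common convention; profiling tools differ in how they count.

```python
import math


def tensor_megabytes(shape, bytes_per_value=4):
    """Memory of a float32 tensor in decimal megabytes (1 MB = 10**6 bytes)."""
    return math.prod(shape) * bytes_per_value / 1e6


def param_megabytes(n_params, bytes_per_value=4):
    """Storage needed for n_params float32 weights, in decimal megabytes."""
    return n_params * bytes_per_value / 1e6


def conv2d_macs(c_in, c_out, kernel, h_out, w_out):
    """Multiply-accumulates of a dense 2-D convolution with a square kernel (bias ignored)."""
    return c_in * c_out * kernel * kernel * h_out * w_out


def linear_macs(n_in, n_out):
    """Multiply-accumulates of a fully connected layer (bias ignored)."""
    return n_in * n_out


# A hypothetical 3 x 512 x 512 float32 input occupies about 3.15 MB.
print(round(tensor_megabytes((3, 512, 512)), 2))  # 3.15

# A hypothetical 7x7 convolution with 64 filters producing a 256 x 256 output map.
macs = conv2d_macs(3, 64, 7, 256, 256)
print(macs / 1e9, "G MACs, or", 2 * macs / 1e9, "G flops if 1 MAC = 2 flops")
print(param_megabytes(3 * 64 * 7 * 7), "MB of weights")
```

By the same arithmetic, ResNet’s 45.20 MB of parameters corresponds to roughly 11.3 million 32-bit weights (45.20 × 10^6 / 4).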

First, the input size is 3.15 MB for all four models, indicating that they process image data of the same scale, which allows a direct comparison of model performance. In terms of accuracy, Wave-ViT performs best (88.97%), followed by the transformer (87.65%), ResNet (85.44%), and MLP (81.17%). The transformer and Wave-ViT models therefore achieve higher accuracy on this image recognition task than the traditional MLP and ResNet models. This improvement may stem from their architectural advantages: the self-attention mechanism of the transformer captures long-range dependencies between image features, while Wave-ViT combines wavelet-based multi-scale decomposition with self-attention, effectively extracting both local and global features. In this study, the Wave-ViT model demonstrated superior diagnostic accuracy, achieving an accuracy of 0.8897, surpassing the transformer model (0.8765). This im

However, high accuracy does not necessarily equate to high efficiency, so we next compare model complexity and computational load. The ResNet model has a far larger parameter size than the other three models (45.20 MB vs 1.10 MB, 1.45 MB, and 1.29 MB), making it the most complex model. Correspondingly, ResNet’s Madd (71.21 G) and flops (35.63 G) are also substantially higher than those of the other models. Thus, while ResNet achieves relatively high accuracy, it does so at a considerable computational cost.

In contrast, the transformer and Wave-ViT models have low parameter counts and computational loads, comparable to those of the MLP model and, in the case of Wave-ViT’s Madd, even lower. Wave-ViT has the lowest Madd of the four models (1.04 G) and flops (1.12 G) second only to MLP, so it combines near-minimal computational cost with the highest accuracy. The transformer model also has modest computational demands (1.45 MB of parameters, 1.57 G flops); its parallel processing shortens training and inference time but does not by itself reduce the operation count. Although the MLP model has the fewest parameters and the lowest flops, it also has the lowest accuracy, suggesting that while simple models are computationally efficient, their limited representational capacity makes it difficult to capture complex features within images.

In summary, the data in Table 2 indicates that transformer-based models, particularly Wave-ViT, demonstrate a favorable balance of performance and efficiency in image recognition tasks. These models achieve a better trade-off between accuracy and computational efficiency, suggesting a promising direction for the development of deep learning models. Future research could explore further improvements to these models to achieve an optimal balance among accuracy, efficiency, and resource consumption.
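The accuracy/efficiency trade-off can be made concrete with a Pareto analysis: a model is dominated if another model is at least as accurate and at least as cheap, and strictly better on one of the two. The sketch below uses only the accuracy and flops columns of Table 2, a simplification that ignores parameter size and Madd.

```python
# Accuracy (%) and flops (G) copied from Table 2.
TABLE2 = {
    "MLP": (81.17, 0.95),
    "ResNet": (85.44, 35.63),
    "Transformer": (87.65, 1.57),
    "Wave-ViT": (88.97, 1.12),
}


def dominates(a, b):
    """True if model a is at least as accurate and as cheap as b, and strictly better on one."""
    (acc_a, cost_a), (acc_b, cost_b) = a, b
    return acc_a >= acc_b and cost_a <= cost_b and (acc_a > acc_b or cost_a < cost_b)


def pareto_front(table):
    """Names of models not dominated by any other model on (accuracy, flops)."""
    return [
        name
        for name, metrics in table.items()
        if not any(dominates(other, metrics) for key, other in table.items() if key != name)
    ]


print(pareto_front(TABLE2))  # ['MLP', 'Wave-ViT']
print(round(TABLE2["ResNet"][1] / TABLE2["Wave-ViT"][1], 1))  # 31.8
```

On these two metrics, ResNet and the transformer are both dominated by Wave-ViT, while MLP stays on the front only because it is the cheapest model; ResNet needs roughly 32 times the flops of Wave-ViT for lower accuracy.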

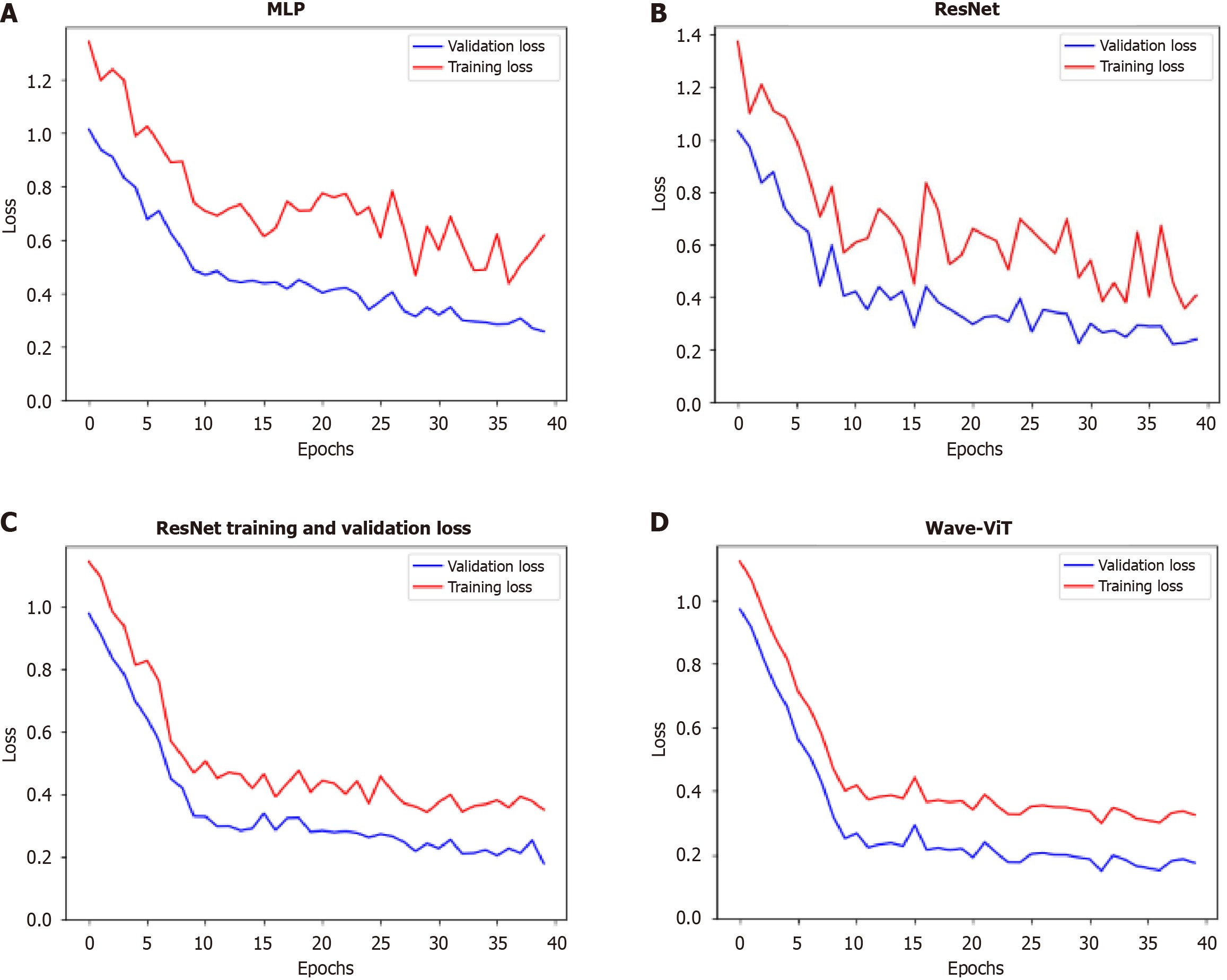

To further identify the optimal model, this study analyzes how the loss function values change during training. The four models (MLP, ResNet, transformer, and Wave-ViT) differ markedly in training behavior, as reflected in the stability and final values of their loss functions: as shown in Figure 6, stability improves and the final loss decreases progressively from MLP to ResNet, the transformer, and Wave-ViT. These differences stem from their distinct network architectures and training strategies. The following analysis, based on Figure 6, details the distinctions among these models in terms of loss function stability and final loss values.

MLP is a basic deep learning model composed of multiple fully connected layers. During training, the MLP loss function often fluctuates substantially, especially in the early stages. This likely reflects the absence of shortcut connections: gradients must pass through every layer in turn, so they are prone to vanishing or exploding, which makes parameter updates unstable and causes pronounced oscillations in the loss. Furthermore, the MLP’s limited representational capacity makes it difficult to learn complex data features, leading to slower convergence and a relatively high final loss value. Its loss curve typically has a jagged shape, converges slowly, and is prone to getting trapped in local minima.
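The vanishing/exploding-gradient argument can be made explicit with the chain rule. Assume, for illustration only, a plain MLP with layers $h_l = \sigma(W_l h_{l-1})$ and logistic-sigmoid activations (the activation function used in the study is not stated here). Backpropagating from the last layer gives

$$
\frac{\partial \mathcal{L}}{\partial h_0}
= W_1^{\top} D_1 \, W_2^{\top} D_2 \cdots W_L^{\top} D_L \, \frac{\partial \mathcal{L}}{\partial h_L},
\qquad D_l = \operatorname{diag}\big(\sigma'(W_l h_{l-1})\big),
$$

$$
\left\lVert \frac{\partial \mathcal{L}}{\partial h_0} \right\rVert_2
\le \prod_{l=1}^{L} \lVert W_l \rVert_2 \, \lVert D_l \rVert_2 \,
\left\lVert \frac{\partial \mathcal{L}}{\partial h_L} \right\rVert_2,
\qquad \lVert D_l \rVert_2 \le \tfrac{1}{4}.
$$

If every $\lVert W_l \rVert_2 \le 1$, the gradient reaching the first layer shrinks by at least a factor of $(1/4)^L$, about $9.5 \times 10^{-7}$ for $L = 10$; when the per-layer factors $\lVert W_l \rVert_2 \lVert D_l \rVert_2$ exceed 1, the bound no longer rules out geometric growth, which is the exploding case.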

ResNet mitigates the gradient vanishing problem in deep neural networks by introducing skip connections, allowing gradients to bypass certain layers during backpropagation. This design makes the model easier to train and enables the training of deeper networks. During training, ResNet’s loss function shows greater stability than MLP, with significantly reduced fluctuations. This is due to the skip connections, which improve gradient propagation and stabilize parameter updates. Additionally, ResNet’s convolutional layers effectively capture local features in image data and, through stacking multiple convolutional layers, learn higher-level feature representations, enhancing the model’s expressive power. As a result, ResNet achieves lower final loss values and faster convergence than MLP. Its loss function curve is relatively smooth, converges more quickly, and is more likely to approach the global minimum.
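The effect of skip connections can be illustrated with a toy numpy experiment. The depth, width, tanh activation, and small weight scale below are arbitrary choices, not the ResNet configuration used in this study. A plain stack multiplies the signal by a factor below 1 at every layer, while the identity shortcut in $h_l = h_{l-1} + F(h_{l-1})$ keeps it intact; the same identity term, $I + \partial F / \partial h$, appears in each layer's Jacobian during backpropagation.

```python
import numpy as np


def plain_stack(x, weights):
    """Plain deep network: h_l = tanh(W_l h_{l-1})."""
    for w in weights:
        x = np.tanh(w @ x)
    return x


def residual_stack(x, weights):
    """Same layers with identity shortcuts: h_l = h_{l-1} + tanh(W_l h_{l-1})."""
    for w in weights:
        x = x + np.tanh(w @ x)
    return x


rng = np.random.default_rng(0)
dim, depth = 64, 20
# Small random weights (spectral norm around 0.2), so each plain layer shrinks its input.
weights = [rng.normal(scale=0.1 / np.sqrt(dim), size=(dim, dim)) for _ in range(depth)]
x0 = rng.normal(size=dim)

print(np.linalg.norm(x0))
print(np.linalg.norm(plain_stack(x0, weights)))     # collapses towards 0
print(np.linalg.norm(residual_stack(x0, weights)))  # stays on the order of |x0|
```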

The core of the transformer model is the self-attention mechanism, which captures relationships between any two elements in the input sequence and thus learns long-range dependencies effectively. Within each layer, attention relates all elements of the input sequence to one another through a single set of matrix operations, which parallelizes well on GPUs. During training, the transformer model’s loss function is even more stable than that of ResNet, with smaller fluctuations. This stability arises from the self-attention mechanism’s capacity to capture contextual information, leading to more stable parameter updates and better learning of global data features. Additionally, the transformer model’s strong representational ability enables it to learn more complex feature representations, resulting in lower loss values and faster convergence than ResNet. Its loss curve is exceptionally smooth, converges very quickly, and typically reaches very low loss values.
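The self-attention step can be sketched as single-head scaled dot-product attention in numpy. The token count, embedding sizes, and random projection matrices are illustrative assumptions; the transformer configuration used in the study (heads, depth, patch size) is not given here.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (n_tokens, d_model), e.g. flattened image-patch embeddings.
    Every token attends to every other token through one matrix product.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (n_tokens, n_tokens) pairwise affinities
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 16, 32, 8  # e.g. a 4 x 4 grid of patch embeddings
x = rng.normal(size=(n_tokens, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) / np.sqrt(d_model) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (16, 8) (16, 16)
```

The n_tokens × n_tokens score matrix is what gives attention its global view, and it is also why its cost grows quadratically with the number of tokens.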

Wave-ViT is a model that applies the transformer architecture in the frequency domain. It first transforms input data into the frequency domain and then utilizes the transformer model for feature extraction in this domain. This approach effectively captures frequency information in the data and leverages the transformer model’s parallel processing capability to enhance computational efficiency. During training, the loss function of Wave-ViT is even more stable than that of the transformer model, with minimal fluctuations. This is because frequency domain representation can effectively reduce noise and highlight essential features in the data, resulting in more stable parameter updates. Additionally, Wave-ViT excels in capturing global data features and effectively learns frequency-based information, leading to lower loss values and faster convergence than the transformer model. Its loss function curve is exceptionally smooth, with rapid convergence to very low loss values, and is less prone to getting trapped in local minima.
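One concrete way a transformer can operate on wavelet (frequency-domain) features, and the general idea behind the original Wave-ViT architecture, is to compute keys and values from the wavelet sub-bands of a feature map while queries keep full resolution. The sketch below is a heavily simplified, hypothetical illustration of that idea (single head, one-level Haar transform, random weights, no convolution or inverse-transform branch); it is not the implementation used in this study.

```python
import numpy as np


def haar_dwt2(x):
    """One-level orthonormal 2-D Haar DWT of an (H, W, C) map with even H and W.

    Returns the four sub-bands stacked on the channel axis: (H/2, W/2, 4C).
    """
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2    # low frequency: local average
    col = (a - b + c - d) / 2   # left-right differences (vertical edges)
    row = (a + b - c - d) / 2   # top-bottom differences (horizontal edges)
    diag = (a - b - c + d) / 2  # diagonal detail
    return np.concatenate([ll, col, row, diag], axis=-1)


def wavelet_attention(x, w_q, w_k, w_v):
    """Attention whose keys/values come from the wavelet sub-bands of x.

    Queries use all H*W positions; keys/values use the (H/2)*(W/2) sub-band
    tokens, so the score matrix has 4x fewer entries, while the Haar step
    itself is invertible and discards no information.
    """
    h, w, c = x.shape
    q = x.reshape(h * w, c) @ w_q
    sub = haar_dwt2(x).reshape((h // 2) * (w // 2), 4 * c)
    k, v = sub @ w_k, sub @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)      # softmax over keys
    return attn @ v, attn


rng = np.random.default_rng(0)
H, W, C, D = 8, 8, 16, 16
x = rng.normal(size=(H, W, C))
w_q = rng.normal(size=(C, D)) / np.sqrt(C)
w_k, w_v = (rng.normal(size=(4 * C, D)) / np.sqrt(4 * C) for _ in range(2))
out, attn = wavelet_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (64, 16) (64, 16)
```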

In summary, from the perspective of loss function variation, these four models exhibit a clear progression in stability and final loss values: MLP shows the most volatile loss function, with large fluctuations and a high final loss value; ResNet is relatively stable, with smaller fluctuations and a lower final loss value than MLP; the transformer model is even more stable, with minimal fluctuations and a lower final loss value than ResNet; and Wave-ViT demonstrates the highest stability, with minimal fluctuations and the lowest final loss value. These differences stem primarily from the architecture and training strategy of each model. MLP lacks an effective mechanism to counter the vanishing gradient problem; ResNet alleviates this issue through skip connections; the transformer captures long-range dependencies through its self-attention mechanism; and Wave-ViT combines the benefits of frequency domain representation and the transformer model, further enhancing model stability and representational capacity. This greater stability is consistent with better generalization and robustness, with Wave-ViT showing the best performance on the test set. Naturally, actual outcomes may be influenced by factors such as datasets and hyperparameters, but the overall trend follows this pattern.
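The two properties compared above, stability and final loss, can be quantified rather than judged by eye. The definitions below are assumptions for illustration (the study does not state a numerical stability measure): the final level is the mean loss over the last few epochs, and volatility is the standard deviation of the epoch-to-epoch changes. Both curves are hypothetical.

```python
import numpy as np


def curve_summary(loss, tail=5):
    """Summarize a loss curve as (final level, volatility).

    final: mean loss over the last `tail` epochs, less sensitive to one noisy epoch.
    volatility: standard deviation of epoch-to-epoch changes; larger means more jagged.
    """
    loss = np.asarray(loss, dtype=float)
    final = float(loss[-tail:].mean())
    volatility = float(np.diff(loss).std())
    return final, volatility


epochs = np.arange(30)
smooth = 0.2 + np.exp(-epochs / 5)              # hypothetical steadily converging curve
jagged = smooth + 0.2 + 0.1 * (-1.0) ** epochs  # hypothetical oscillating curve, higher floor
print(curve_summary(smooth))
print(curve_summary(jagged))
```

Because the epoch-to-epoch changes also include the overall downward trend, volatility values are best compared between curves recorded over the same number of epochs.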

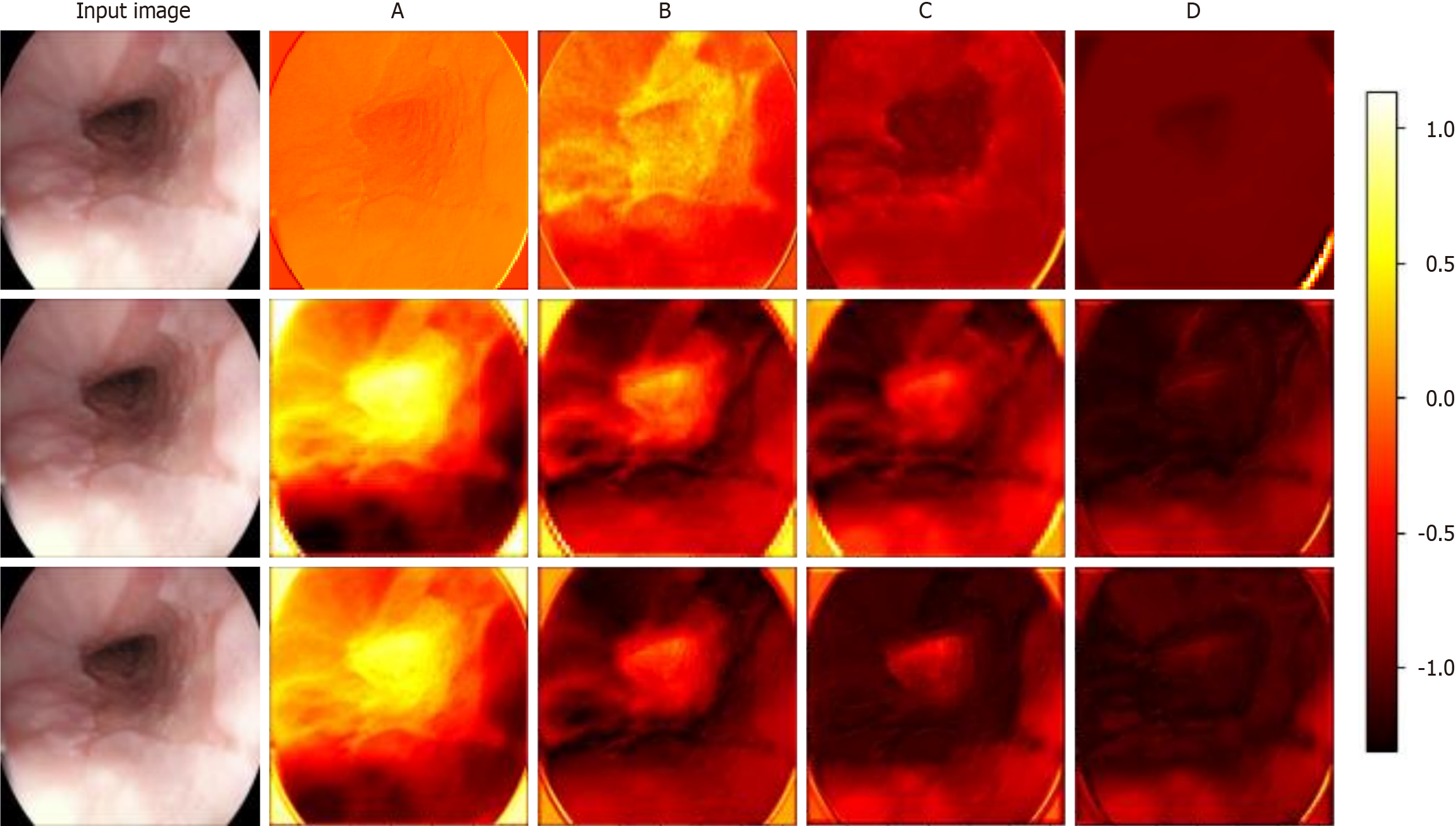

Visualizing the feature maps of each module in each model allows us to understand the internal mechanisms by which the models identify esophageal diseases. This is especially useful for examining the differences in feature extraction and semantic understanding across models. Figure 7 shows the results of visualizing different models for the identification of a particular case of esophagitis. This paper analyzes the differences in feature maps extracted by modules across four levels in ResNet, the transformer model, and Wave-ViT, with a focus on how Wave-ViT leverages frequency domain information to enhance its focus on disease regions in the esophagus.

By observing the intermediate feature maps extracted by each model, this study conducted a detailed analysis of the correlation between the model’s focus areas and pathological features[38]. ResNet primarily captures local details, such as the texture of the esophageal wall and the distribution of blood vessels. As the network depth increases, the model gradually learns higher-level features, such as the integrity of the esophageal mucosa and the shape and size of inflamed areas. However, ResNet mainly focuses on local spatial information within the image and has limited sensitivity to global information and different frequency components. The transformer model, on the other hand, captures relationships between different regions of the esophagus, such as the connection between inflamed areas and surrounding tissue. Due to its global perspective enabled by the self-attention mechanism, the transformer model is more effective than ResNet in identifying disease regions. Wave-ViT has the advantage of simultaneously capturing both local details and global contextual information in diseased regions. The wavelet transform highlights high-frequency details in diseased areas, such as irregular edges and abnormal textures. Additionally, the wavelet block extracts information at different scales from feature maps of various frequency components: High-frequency components highlight details within diseased areas, while low-frequency components capture global contextual information. As a result, the Wave-ViT model demonstrates a higher degree of focus on disease regions and achieves more precise localization of these areas.

In summary, the ResNet visualization results may indicate attention to the entire esophageal region but show a lower degree of focus on disease areas compared to the other two models. All three models’ shallow feature maps display local texture information, while deeper feature maps reveal more abstract regional features. However, both ResNet and transformer models lack emphasis on specific frequency domain information. Wave-ViT, in contrast, captures more frequency domain information, allowing it to extract richer semantic information from the esophagus, which explains why Wave-ViT demonstrates a higher focus on esophageal disease regions.

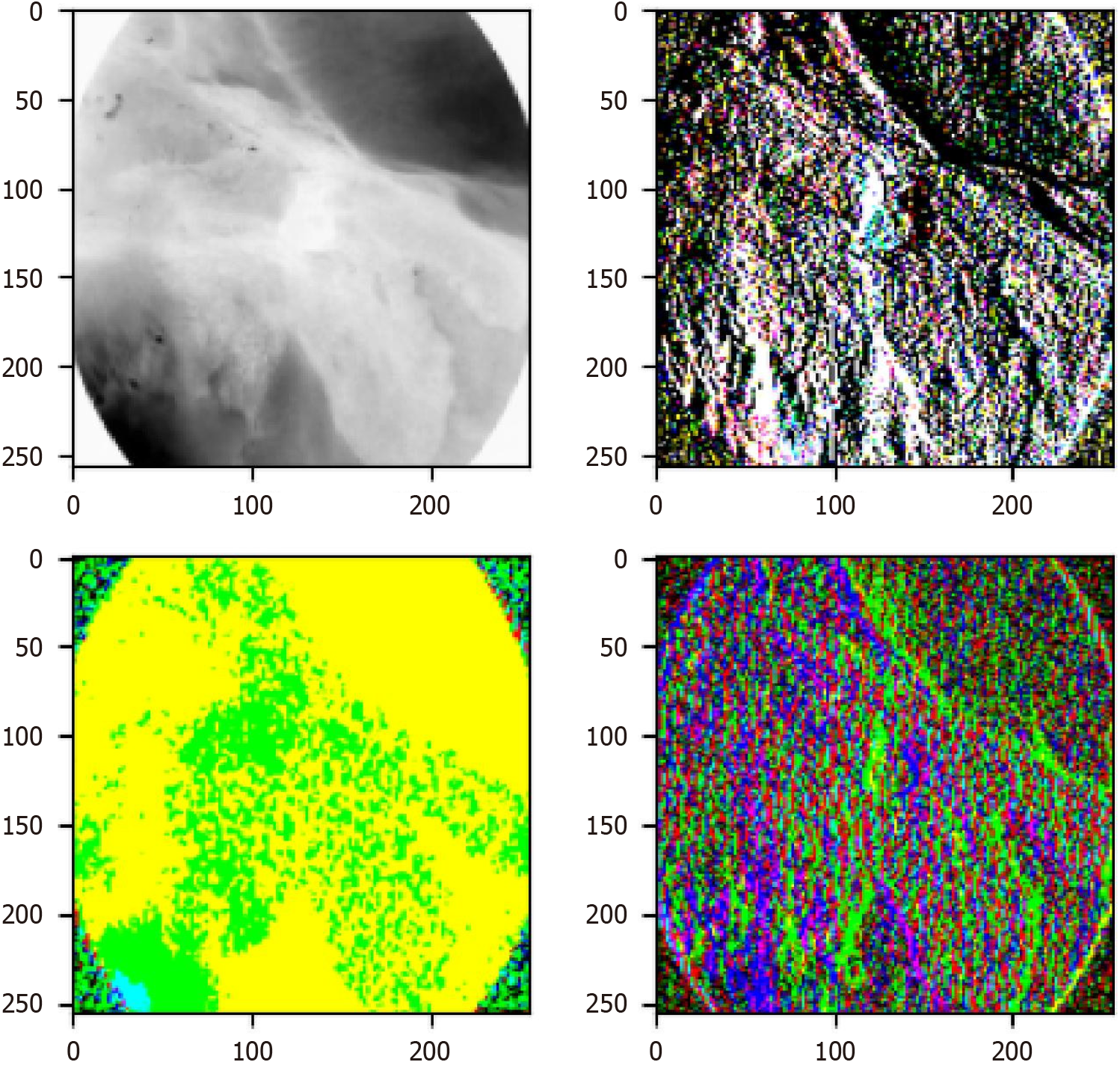

To further analyze how the wavelet block in Wave-ViT extracts frequency domain features, this study visualizes the first wavelet block’s feature extraction process, as shown in Figure 8. The wavelet block employs a fundamental time-frequency analysis method, decomposing the input signal into different frequency sub-bands - namely, low-frequency components (top-left in Figure 8) and high-frequency components (top-right, bottom-left, and bottom-right in Figure 8) - to address aliasing issues. Specifically, the low-frequency component reflects the coarse-grained structure of basic objects, while the high-frequency components retain fine-grained texture details. In this way, various levels of image detail are captured in the extracted feature maps without information loss.
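The decomposition in Figure 8 can be reproduced in miniature with the single-level 2-D Haar transform, the simplest discrete wavelet transform. Which wavelet the study’s block uses is not stated here, so Haar is an assumption, and because sub-band naming conventions (LH/HL) vary, the code names the detail bands by the direction of change they measure. The example shows that the transform is exactly invertible and that a vertical edge appears only in the low-frequency band and one detail band.

```python
import numpy as np


def haar_dwt2(img):
    """One-level orthonormal 2-D Haar DWT of an (H, W) array with even H and W."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2    # low frequency: local average (coarse structure)
    col = (a - b + c - d) / 2   # left-right differences: responds to vertical edges
    row = (a + b - c - d) / 2   # top-bottom differences: responds to horizontal edges
    diag = (a - b - c + d) / 2  # diagonal detail / fine texture
    return ll, col, row, diag


def haar_idwt2(ll, col, row, diag):
    """Exact inverse of haar_dwt2."""
    h, w = ll.shape
    img = np.empty((2 * h, 2 * w))
    img[0::2, 0::2] = (ll + col + row + diag) / 2
    img[0::2, 1::2] = (ll - col + row - diag) / 2
    img[1::2, 0::2] = (ll + col - row - diag) / 2
    img[1::2, 1::2] = (ll - col - row + diag) / 2
    return img


img = np.zeros((8, 8))
img[:, 5:] = 1.0  # vertical edge between columns 4 and 5
bands = haar_dwt2(img)
print([float(np.abs(band).sum()) for band in bands])  # [12.0, 4.0, 0.0, 0.0]
print(np.allclose(haar_idwt2(*bands), img))           # True: no information lost
```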

The Wave-ViT model incorporates wavelet transform to leverage frequency domain decomposition, significantly improving the detection of EAC lesions. Wavelet transform allows the model to conduct multi-scale analysis in the frequency domain, enabling effective feature extraction across different frequency bands[39]. Specifically, the model first applies wavelet transform to decompose EAC images (e.g., biopsy tissue slides or medical scans) into low-frequency and high-frequency components: Low-frequency components retain global structural information. High-frequency com

Overall, Wave-ViT integrates frequency domain decomposition with deep learning, advancing early diagnosis and precise recognition of EAC, and supporting clinical decision-making[40]. This frequency domain mechanism enables Wave-ViT to simultaneously analyze local details and global context, capturing EAC’s pathological characteristics more comprehensively. High-frequency information helps identify subtle anomalies in early-stage lesions, while low-frequency information assists in assessing lesion extent and severity. Experimental results further confirm that Wave-ViT precisely localizes lesion areas and achieves higher classification accuracy on EAC datasets. This advancement provides critical support for early detection and treatment planning.

The quantitative and visualization results show that none of the four models achieved perfect accuracy and that each made different errors. When MLP, CNNs, transformers, and Wave-ViT are applied to EAC classification, several sources of error may substantially affect model performance and clinical interpretability.

MLP: Limited feature engineering, overfitting, and nonlinear constraints may prevent the model from effectively capturing the complexity of clinical data, reducing diagnostic accuracy and generalizability.

CNN: CNN models may be affected by data bias, image quality variations, and overfitting, leading to missed or misdiagnosed high-risk patients, which increases psychological burdens on patients.

Transformer: While transformers have strong feature extraction capabilities, their reliance on large datasets makes them vulnerable to performance degradation due to insufficient training samples. Additionally, high computational complexity in inference may limit real-time decision-making.

Wave-ViT: Although Wave-ViT excels in multi-scale feature extraction, improper utilization of multi-scale information may lead to performance degradation or overfitting. These potential sources of error could lead to the omission of critical pathological features, undermining model interpretability and credibility, thereby affecting clinical decision-making and treatment strategies. In clinical practice, physicians must carefully assess these models’ limitations to make comprehensive patient evaluations and deliver more effective treatments. To further reduce these errors, future research will focus on optimizing multiple aspects of esophageal cancer prediction models.

Expanding the dataset: Enhancing generalization and performance by collecting diverse, multi-center data covering various patient demographics (age, gender, ethnicity) and pathological subtypes of esophageal cancer. This diversity will improve rare lesion detection and ensure better adaptability across different clinical scenarios.

Optimizing models for different esophageal cancer types: Specialized testing and optimization of models for adenocarcinoma and squamous cell carcinoma are crucial due to their distinct pathogenesis, pathological characteristics, and clinical manifestations. Optimizing models for specific subtypes can improve classification accuracy, while exploring subtype-specific feature selection and preprocessing techniques can further enhance performance.

Integrating AI models into clinical workflows: Embedding deep learning models into clinical decision support systems can facilitate real-time diagnostic suggestions following patient examinations. Future studies should also prioritize model interpretability and transparency to help physicians understand model decisions, thereby increasing trust and adoption in clinical settings. By expanding datasets, optimizing models for specific cancer subtypes, and integrating AI technology into clinical workflows, this research aims to improve the accuracy and precision of esophageal cancer detection and treatment.

To assess the generalization ability of the Wave-ViT model, we conducted 5-fold cross-validation. The dataset was split evenly into five subsets; in each iteration, one subset was used for validation and the remaining four for training. This process was repeated five times, and the final accuracy was calculated as the average over all five runs. The Wave-ViT model performed consistently across all subsets, achieving an average accuracy of 89% and confirming its robustness across different data partitions. Moreover, a comparison of the training and validation loss curves showed no significant overfitting, further supporting its generalization ability. These results indicate that the model’s performance does not depend on a particular data split, demonstrating strong potential for real-world applications in esophageal cancer diagnosis. Future research will continue to expand the dataset by incorporating more EAC samples to further enhance model generalizability.
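The 5-fold procedure can be sketched as follows. `fit_and_score` is a hypothetical placeholder for training a fresh model on the training folds and returning its validation accuracy; here it is filled with a majority-class baseline on the Table 1 class totals, which is useful context for reading the reported accuracies on an imbalanced dataset. Unlike the stratified split sketched for Table 1, these folds are not stratified, which is a simplifying assumption.

```python
import numpy as np


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)  # fold sizes differ by at most one
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


def cross_validate(n_samples, fit_and_score, k=5, seed=0):
    """Return the per-fold scores and their mean."""
    scores = [fit_and_score(tr, va) for tr, va in kfold_indices(n_samples, k, seed)]
    return scores, float(np.mean(scores))


labels = np.repeat(np.arange(4), [403, 260, 53, 41])  # class totals from Table 1


def majority_baseline(train_idx, val_idx):
    """Hypothetical scorer: always predict the most frequent training class."""
    majority = np.bincount(labels[train_idx]).argmax()
    return float(np.mean(labels[val_idx] == majority))


scores, mean_acc = cross_validate(len(labels), majority_baseline)
print([round(s, 3) for s in scores], round(mean_acc, 3))
```

Because Esophagitis A makes up 403 of the 757 images, always predicting it already scores about 53%, a floor against which the reported accuracies can be read.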

This study demonstrates significant research value and clinical potential by applying deep learning technology to the pathological classification and staging of esophageal cancer. As the sixth most common cancer worldwide, esophageal cancer is characterized by a high mortality rate and complex pathological features, making early diagnosis and staging crucial for improving patient survival rates[41,42]. However, traditional diagnostic methods often rely on the manual judgment of pathology experts, which is not only time-consuming and labor-intensive but also prone to human error, leading to misdiagnoses or missed diagnoses[43]. Therefore, enhancing diagnostic accuracy and efficiency for esophageal cancer, particularly through automated diagnostic tools in early pathological classification and staging, has become a pressing issue in the medical field.

In this context, deep learning serves as an automated and intelligent image analysis tool, providing a new solution for processing pathological images. The use of ResNet, MLP, transformer model, and Wave-ViT in this project significantly enhances the diagnostic accuracy of esophageal cancer pathological images. Among these, the Wave-ViT represents an innovative aspect of this study, combining the advantages of self-attention mechanisms and frequency domain information extraction. This model not only captures complex features in pathological images but also precisely identifies high-frequency and low-frequency characteristics through wavelet transform. As a result, the model demonstrates outstanding performance in classifying pathological stages from esophagitis to esophageal cancer, exhibiting higher accuracy and efficiency compared to traditional methods, particularly in the staging diagnosis of complex pathological structures.

In the application of deep learning models, the authenticity and validity of the dataset directly influence the model’s generalization ability and reliability. This study utilized clinical real-world data from two types of esophagitis and two types of Barrett’s esophagus, encompassing a diverse range of pathological classification and staging scenarios, which enhances the practical applicability of the model. In contrast, some existing studies often rely on publicly available datasets or relatively homogeneous pathological types, making it difficult to cover the variable pathological features encountered in clinical practice. Moreover, the quality of the data and the consistency of annotations largely determine the model’s diagnostic accuracy and generalizability. We strictly controlled data balance during model training and validation to ensure that the model could accurately identify different types of lesions. In comparison, other studies may struggle to achieve the same level of recognition performance due to limitations in their datasets. The dataset construction strategy employed in this research not only improves the model’s adaptability but also provides a solid foundation for clinical implementation.

Through a detailed evaluation of each model’s performance, this study found that Wave-ViT excels in both accuracy and efficiency. In the pathological classification and staging of esophageal cancer, Wave-ViT achieved an accuracy of 88.97%, higher than that of the transformer (87.65%), ResNet (85.44%), and MLP (81.17%). Furthermore, Wave-ViT has low computational complexity (1.29 MB of parameters, 1.04 G Madd, and 1.12 G flops), indicating its suitability for real-time analysis in practical applications. In contrast, existing studies often report deep learning models with high computational and parameter requirements, making them less feasible in resource-limited clinical settings. This research demonstrates the advantage of Wave-ViT in maintaining high accuracy while reducing computational complexity, laying the groundwork for its widespread application in pathological diagnosis. A key point is that the Wave-ViT model, by integrating the wavelet transform with the transformer architecture, has demonstrated strong performance in medical image analysis. Its multi-scale feature extraction capability enables more accurate detection of subtle early-stage esophageal cancer lesions, such as Barrett’s esophagus or mild dysplasia, potentially enhancing early detection sensitivity. Additionally, Wave-ViT effectively differentiates between esophageal cancer subtypes, including esophageal squamous cell carcinoma and EAC, providing clinicians with more precise pathological classification information to support personalized treatment planning. For example, based on the model’s high-precision diagnostic outputs, phy

In this study, we propose a step-by-step clinical workflow to integrate Wave-ViT as an auxiliary screening tool into the endoscopic examination process to improve early diagnosis efficiency and accuracy for EAC. Specifically, this model is designed to automatically analyze images from real-time endoscopic video streams and utilize frequency-domain decomposition techniques (e.g., wavelet transform) to extract high-frequency details (such as irregular edges and abnormal textures) and low-frequency global information (such as lesion size and its relationship with surrounding tissues). When a suspicious lesion is detected, the model automatically highlights the region in real time, prompting endoscopists to focus on potentially malignant areas, thereby reducing the risk of missed diagnoses and misdiagnoses. This real-time assistance not only enhances endoscopic examination efficiency but also provides clinicians with a more comprehensive understanding of lesions, supporting more accurate clinical decision-making. Additionally, we explored the potential complementary role of Wave-ViT in pathological biopsy analysis. Traditional biopsy-based histopathology relies heavily on subjective assessment by pathologists, whereas Wave-ViT can provide objective, quantitative lesion characterization, including lesion size, shape, texture features, and contrast with surrounding tissues. For instance, the model can generate probability heatmaps of malignancy likelihood and combine frequency-domain feature analysis to offer multi-scale lesion descriptions. These quantitative insights, when combined with microscopic pathological observations, can significantly enhance diagnostic accuracy and consistency. This is particularly valuable for early-stage EAC diagnosis, where the model’s heightened sensitivity to microscopic lesions can compensate for sampling bias and the limited field of view in biopsies. Notably, Wave-ViT exhibits significant advantages for clinical applications.

Its frequency-domain decomposition enables superior lesion detection in complex backgrounds, effectively distinguishing early-stage esophageal cancer from normal tissues.

The model’s efficiency and automation make it well-suited for high-throughput screening scenarios, significantly reducing the workload of endoscopists.

By combining Wave-ViT analysis with histopathology, the model enables more accurate and personalized EAC diagnoses. Future research should focus on optimizing the model’s generalization ability and conducting multi-center clinical trials to validate its clinical applicability.
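The real-time highlighting step in the proposed workflow ultimately turns a per-pixel malignancy probability heatmap into a region drawn on the endoscopic frame. A minimal sketch, assuming the model outputs a heatmap with values in [0, 1]; the function name, the bounding-box output, and the fixed 0.5 threshold are hypothetical, and a real threshold would need clinical calibration.

```python
import numpy as np


def highlight_region(prob_map, threshold=0.5):
    """Bounding box (top, left, bottom, right), inclusive, of pixels whose malignancy
    probability exceeds `threshold`, plus the fraction of the frame flagged.

    Returns (None, 0.0) when no pixel exceeds the threshold.
    """
    mask = prob_map > threshold
    if not mask.any():
        return None, 0.0
    rows = np.flatnonzero(mask.any(axis=1))  # rows containing flagged pixels
    cols = np.flatnonzero(mask.any(axis=0))  # columns containing flagged pixels
    box = (int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1]))
    return box, float(mask.mean())


# Hypothetical 6 x 8 heatmap with one suspicious blob.
heat = np.zeros((6, 8))
heat[2:4, 3:6] = 0.9
print(highlight_region(heat))  # ((2, 3, 3, 5), 0.125)
```

This version draws a single box around all flagged pixels; separating multiple lesions would need a connected-component step (for example `scipy.ndimage.label`) before computing one box per component.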

This study focuses on the classification and staging of different pathological types of esophageal cancer, particularly the precise identification and analysis of the progression from esophagitis to Barrett’s esophagus and EAC. Traditional pathological classification methods often face challenges in accurately identifying precancerous lesions, especially in distinguishing atypical hyperplasia from typical hyperplasia in Barrett’s esophagus[44,45]. However, deep learning models can automatically extract deep features from images, significantly enhancing the accuracy and consistency of classification. The Wave-ViT model employed in this study effectively extracts frequency domain information, enabling it to accurately differentiate between various pathological types, thus providing a more reliable tool for early cancer screening. Compared to models in other studies, the model presented here demonstrates not only greater efficiency in recognizing pathological features but also excellent adaptability in maintaining stability during staging.

We also performed a subgroup analysis of the two primary histological subtypes of esophageal cancer.

Esophageal squamous cell carcinoma: Predominantly associated with smoking, alcohol consumption, and malnutrition, and more prevalent in Asian and African populations. Pathologically, it is characterized by dysplastic changes in squamous epithelial cells that progress to invasive carcinoma. Surgery and chemoradiotherapy remain the mainstay of treatment. Molecularly, it frequently involves TP53 and CDKN2A alterations and changes in the Wnt/β-catenin pathway[46].

EAC: More common in Western populations and strongly linked to gastroesophageal reflux disease, obesity, and Barrett’s esophagus. Pathologically, it originates from columnar epithelium with glandular dysplasia. In addition to surgery and chemoradiotherapy, targeted therapies (e.g., anti-human epidermal growth factor receptor 2 drugs) show promising results. Molecularly, it frequently involves TP53 mutations, ERBB2 alterations, and changes in the epidermal growth factor receptor/human epidermal growth factor receptor 2 signaling pathway[47,48].

These findings emphasize the distinct etiological, pathological, molecular, and therapeutic differences between esophageal squamous cell carcinoma and EAC, underscoring the importance of subtype-specific treatment strategies in clinical practice.

The most significant highlight of this study is the introduction of frequency domain information, an innovative approach that enables deep learning models to capture and recognize subtle changes in pathological images that may otherwise be difficult to detect. While traditional deep learning models demonstrate considerable advantages in processing large-scale image data, they often exhibit limitations in recognizing minor changes, particularly the gradual transitions between different stages of esophageal cancer progression. The frequency domain features obtained through wavelet transform allow the model to extract more detailed image information from various frequency dimensions, significantly enhancing its sensitivity to pathological features. This is especially crucial in the diagnosis of early lesions, making early detection of esophageal cancer possible.

Another significant value of this study lies in the selection and application of the dataset. This project uses the HyperKvasir dataset and other publicly available datasets, which contain a large number of high-quality esophageal pathology images annotated by professional endoscopists, covering multiple pathological stages from esophagitis and Barrett’s esophagus to EAC. Using these data helps ensure the quality of the training data, and validation on real clinical images supports the model’s generalization ability and practicality. The model’s strong performance in both training and validation demonstrates the broad applicability of deep learning in complex medical image processing and lays a solid foundation for future larger-scale clinical applications.

From a clinical perspective, this study provides a novel tool for the precise diagnosis and personalized treatment of esophageal cancer. The introduction of deep learning models not only enhances the accuracy of pathological classification and staging but also reduces the workload for physicians through automated diagnostics, shortening diagnostic times and improving clinical efficiency. Additionally, Wave-ViT’s analytical approach enables physicians to make precise assessments at earlier stages of lesions, thereby providing a more scientific basis for personalized treatment plans. This advancement not only helps to lower the mortality rate associated with esophageal cancer but also significantly improves patient treatment outcomes and quality of life.

In summary, this project demonstrates that the application of deep learning technology in the pathological classification and staging of esophageal cancer not only achieves a dual enhancement of diagnostic accuracy and efficiency but also establishes a new technological pathway for early detection, precision medicine, and personalized treatment of esophageal cancer. This research holds significant implications and broad clinical application prospects.

1. Khan R, Saha S, Gimpaya N, Bansal R, Scaffidi MA, Razak F, Verma AA, Grover SC. Outcomes for upper gastrointestinal bleeding during the first wave of the COVID-19 pandemic in the Toronto area. J Gastroenterol Hepatol. 2022;37:878-882.

2. Aburasain RY. Esophageal Cancer Classification in Initial Stages Using Deep and Transfer Learning. Proceedings of the 2024 IEEE International Conference on Advanced Systems and Emergent Technologies (IC_ASET); 2024 Apr 27-29; Hammamet, Tunisia. United States: Institute of Electrical and Electronics Engineers, 2024.

3. Tong T. Complete Tumor Response of Esophageal Cancer: How Much Imaging Can Do? Ann Surg Oncol. 2024;31:4161-4162.

4. Fang S, Xu P, Wu S, Chen Z, Yang J, Xiao H, Ding F, Li S, Sun J, He Z, Ye J, Lin LL. Raman fiber-optic probe for rapid diagnosis of gastric and esophageal tumors with machine learning analysis or similarity assessments: a comparative study. Anal Bioanal Chem. 2024;416:6759-6772.

5. Cao K, Zhu J, Lu M, Zhang J, Yang Y, Ling X, Zhang L, Qi C, Wei S, Zhang Y, Ma J. Analysis of multiple programmed cell death-related prognostic genes and functional validations of necroptosis-associated genes in oesophageal squamous cell carcinoma. EBioMedicine. 2024;99:104920.

6. Zhang H, Wen H, Zhu Q, Zhang Y, Xu F, Ma T, Guo Y, Lu C, Zhao X, Ji Y, Wang Z, Chu Y, Ge D, Gu J, Liu R. Genomic profiling and associated B cell lineages delineate the efficacy of neoadjuvant anti-PD-1-based therapy in oesophageal squamous cell carcinoma. EBioMedicine. 2024;100:104971.

7. Jiang P, Li Y, Wang C, Zhang W, Lu N. A deep learning based assisted analysis approach for Sjogren's syndrome pathology images. Sci Rep. 2024;14:24693.

8. Hachache R, Yahyaouy A, Riffi J, Tairi H, Abibou S, Adoui ME, Benjelloun M. Advancing personalized oncology: a systematic review on the integration of artificial intelligence in monitoring neoadjuvant treatment for breast cancer patients. BMC Cancer. 2024;24:1300.

9. Pusterla O, Heule R, Santini F, Weikert T, Willers C, Andermatt S, Sandkühler R, Nyilas S, Latzin P, Bieri O, Bauman G. MRI lung lobe segmentation in pediatric cystic fibrosis patients using a recurrent neural network trained with publicly accessible CT datasets. Magn Reson Med. 2022;88:391-405.

10. Tsai MC, Yen HH, Tsai HY, Huang YK, Luo YS, Kornelius E, Sung WW, Lin CC, Tseng MH, Wang CC. Artificial intelligence system for the detection of Barrett's esophagus. World J Gastroenterol. 2023;29:6198-6207.

11. Ohmori M, Ishihara R, Aoyama K, Nakagawa K, Iwagami H, Matsuura N, Shichijo S, Yamamoto K, Nagaike K, Nakahara M, Inoue T, Aoi K, Okada H, Tada T. Endoscopic detection and differentiation of esophageal lesions using a deep neural network. Gastrointest Endosc. 2020;91:301-309.e1.

12. Iwagami H, Ishihara R, Aoyama K, Fukuda H, Shimamoto Y, Kono M, Nakahira H, Matsuura N, Shichijo S, Kanesaka T, Kanzaki H, Ishii T, Nakatani Y, Tada T. Artificial intelligence for the detection of esophageal and esophagogastric junctional adenocarcinoma. J Gastroenterol Hepatol. 2021;36:131-136.

13. Cui R, Wang L, Lin L, Li J, Lu R, Liu S, Liu B, Gu Y, Zhang H, Shang Q, Chen L, Tian D. Deep Learning in Barrett's Esophagus Diagnosis: Current Status and Future Directions. Bioengineering (Basel). 2023;10:1239.

14. Jain S, Dhingra S. Pathology of esophageal cancer and Barrett's esophagus. Ann Cardiothorac Surg. 2017;6:99-109.

15. Botros M, de Boer OJ, Cardenas B, Bekkers EJ, Jansen M, van der Wel MJ, Sánchez CI, Meijer SL. Deep Learning for Histopathological Assessment of Esophageal Adenocarcinoma Precursor Lesions. Mod Pathol. 2024;37:100531.

16. Bouzid K, Sharma H, Killcoyne S, Castro DC, Schwaighofer A, Ilse M, Salvatelli V, Oktay O, Murthy S, Bordeaux L, Moore L, O'Donovan M, Thieme A, Nori A, Gehrung M, Alvarez-Valle J. Enabling large-scale screening of Barrett's esophagus using weakly supervised deep learning in histopathology. Nat Commun. 2024;15:2026.

17. Jian M, Tao C, Wu R, Zhang H, Li X, Wang R, Wang Y, Peng L, Zhu J. HRU-Net: A high-resolution convolutional neural network for esophageal cancer radiotherapy target segmentation. Comput Methods Programs Biomed. 2024;250:108177.

18. Souza LA Jr, Passos LA, Santana MCS, Mendel R, Rauber D, Ebigbo A, Probst A, Messmann H, Papa JP, Palm C. Layer-selective deep representation to improve esophageal cancer classification. Med Biol Eng Comput. 2024;62:3355-3372.

19. Iyer PG, Sachdeva K, Leggett CL, Codipilly DC, Abbas H, Anderson K, Kisiel JB, Asfahan S, Awasthi S, Anand P, Kumar M P, Singh SP, Shukla S, Bade S, Mahto C, Singh N, Yadav S, Padhye C. Development of Electronic Health Record-Based Machine Learning Models to Predict Barrett's Esophagus and Esophageal Adenocarcinoma Risk. Clin Transl Gastroenterol. 2023;14:e00637.

20. Yao T, Pan Y, Li Y, Ngo CW, Mei T. Wave-ViT: Unifying Wavelet and Transformers for Visual Representation Learning. 2022 Preprint. Available from: arXiv:2207.04978.

21. Zhu Z, Soricut R. Wavelet-Based Image Tokenizer for Vision Transformers. 2024 Preprint. Available from: arXiv:2405.18616.

22. Shoji Y, Koyanagi K, Kanamori K, Tajima K, Ogimi M, Ninomiya Y, Yamamoto M, Kazuno A, Nabeshima K, Nishi T, Mori M. Immunotherapy for esophageal cancer: Where are we now and where can we go. World J Gastroenterol. 2024;30:2496-2501.

23. Borgli H, Thambawita V, Smedsrud PH, Hicks S, Jha D, Eskeland SL, Randel KR, Pogorelov K, Lux M, Nguyen DTD, Johansen D, Griwodz C, Stensland HK, Garcia-Ceja E, Schmidt PT, Hammer HL, Riegler MA, Halvorsen P, de Lange T. HyperKvasir, a comprehensive multi-class image and video dataset for gastrointestinal endoscopy. Sci Data. 2020;7:283.

24. Rukundo O. Effects of Image Size on Deep Learning. Electronics. 2023;12:985.

25. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016 Jun 27-30; Las Vegas, NV, United States. United States: Institute of Electrical and Electronics Engineers, 2016.

26. Liu S, Tian G, Xu Y. A novel scene classification model combining ResNet based transfer learning and data augmentation with a filter. Neurocomputing. 2019;338:191-206.

27. Liu Y, Zhang Y, Wang Y, Hou F, Yuan J, Tian J, Zhang Y, Shi Z, Fan J, He Z. A Survey of Visual Transformers. IEEE Trans Neural Netw Learn Syst. 2024;35:7478-7498.

28. Xu Y, Wei H, Lin M, Deng Y, Sheng K, Zhang M, Tang F, Dong W, Huang F, Xu C. Transformers in computational visual media: A survey. Comp Visual Med. 2022;8:33-62.

29. He X, Chen Y, Lin Z. Spatial-Spectral Transformer for Hyperspectral Image Classification. Remote Sens. 2021;13:498.

30. Yang X, Cao W, Lu Y, Zhou Y. Hyperspectral Image Transformer Classification Networks. IEEE Trans Geosci Remote Sens. 2022;60:1-15.

31. Zhang W, Shen X, Zhang H, Yin Z, Sun J, Zhang X, Zou L. Feature importance measure of a multilayer perceptron based on the presingle-connection layer. Knowl Inf Syst. 2024;66:511-533.

32. Tolstikhin I, Houlsby N, Kolesnikov A, Beyer L, Zhai X, Unterthiner T, Yung J, Steiner A, Keysers D, Uszkoreit J, Lucic M, Dosovitskiy A. MLP-Mixer: An all-MLP Architecture for Vision. 2021 Preprint. Available from: arXiv:2105.01601.

33. Liang J. Image classification based on RESNET. J Phys Conf Ser. 2020;1634:012110.

34. Chen J, Lu Y, Yu Q, Luo X, Adeli E, Wang Y, Lu L, Yuille AL, Zhou Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. 2021 Preprint. Available from: arXiv:2102.04306.

35. Mallat SG. A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Machine Intell. 1989;11:674-693.

36. Lewis JR, Pathan S, Kumar P, Dias CC. AI in Endoscopic Gastrointestinal Diagnosis: A Systematic Review of Deep Learning and Machine Learning Techniques. IEEE Access. 2024;12:163764-163786.

37. Min JK, Kwak MS, Cha JM. Overview of Deep Learning in Gastrointestinal Endoscopy. Gut Liver. 2019;13:388-393.

38. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int J Comput Vis. 2020;128:336-359.

39. Hong Z, Xiong J, Yang H, Mo YK. Lightweight Low-Rank Adaptation Vision Transformer Framework for Cervical Cancer Detection and Cervix Type Classification. Bioengineering (Basel). 2024;11:468.

40. Umamaheswari T, Babu YMM. ViT-MAENB7: An innovative breast cancer diagnosis model from 3D mammograms using advanced segmentation and classification process. Comput Methods Programs Biomed. 2024;257:108373.

41. Sidana V, Lnu R, Davenport A, Lekharaju V. Primary Oesophageal Lymphoma: A Diagnostic Dilemma. Cureus. 2024;16:e73751.

42. Kamtam DN, Lin N, Liou DZ, Lui NS, Backhus LM, Shrager JB, Berry MF. Radiation therapy does not improve survival in patients who are upstaged after esophagectomy for clinical early-stage esophageal adenocarcinoma. JTCVS Open. 2025;23:290-308.

43. Zhao YX, Zhao HP, Zhao MY, Yu Y, Qi X, Wang JH, Lv J. Latest insights into the global epidemiological features, screening, early diagnosis and prognosis prediction of esophageal squamous cell carcinoma. World J Gastroenterol. 2024;30:2638-2656.

44. Espino A, Cirocco M, Dacosta R, Marcon N. Advanced imaging technologies for the detection of dysplasia and early cancer in barrett esophagus. Clin Endosc. 2014;47:47-54.

45. Geboes K, Van Eyken P. The diagnosis of dysplasia and malignancy in Barrett's oesophagus. Histopathology. 2000;37:99-107.

46. Gao W, Liu S, Wu Y, Wei W, Yang Q, Li W, Chen H, Luo A, Wang Y, Liu Z. Enhancer demethylation-regulated gene score identified molecular subtypes, inspiring immunotherapy or CDK4/6 inhibitor therapy in oesophageal squamous cell carcinoma. EBioMedicine. 2024;105:105177.

47. Zhao N, Zhang Z, Wang Q, Li L, Wei Z, Chen H, Zhou M, Liu Z, Su J. DNA damage repair profiling of esophageal squamous cell carcinoma uncovers clinically relevant molecular subtypes with distinct prognoses and therapeutic vulnerabilities. EBioMedicine. 2023;96:104801.

48. Zhang G, Yuan J, Pan C, Xu Q, Cui X, Zhang J, Liu M, Song Z, Wu L, Wu D, Luo H, Hu Y, Jiao S, Yang B. Multi-omics analysis uncovers tumor ecosystem dynamics during neoadjuvant toripalimab plus nab-paclitaxel and S-1 for esophageal squamous cell carcinoma: a single-center, open-label, single-arm phase 2 trial. EBioMedicine. 2023;90:104515.