Published online Jun 14, 2023. doi: 10.3748/wjg.v29.i22.3561

Peer-review started: March 4, 2023

First decision: March 28, 2023

Revised: April 3, 2023

Accepted: May 4, 2023

Article in press: May 4, 2023

Published online: June 14, 2023

Processing time: 95 Days and 4.5 Hours

Recently, artificial intelligence (AI) has been widely used in gastrointestinal endoscopy examinations.

To comprehensively evaluate the application of AI-assisted endoscopy in detecting different digestive diseases using bibliometric analysis.

Relevant publications from the Web of Science published from 1990 to 2022 were extracted using a combination of the search terms “AI” and “endoscopy”. The following information was recorded from the included publications: Title, author, institution, country, endoscopy type, disease type, performance of AI, publication, citation, journal and H-index.

A total of 446 studies were included. The number of articles peaked in 2021, and annual citation numbers increased after 2006. China, the United States and Japan were the dominant countries in this field, accounting for 28.7%, 16.8%, and 15.7% of publications, respectively. The Tada Tomohiro Institute of Gastroenterology and Proctology was the most influential institution. “Cancer” and “polyps” were the hotspots in this field. Colorectal polyps were the most studied disease, followed by gastric cancer and gastrointestinal bleeding. Conventional endoscopy was the most common type of examination. The accuracy of AI in detecting Barrett’s esophagus, colorectal polyps and gastric cancer from 2018 to 2022 was 87.6%, 93.7% and 88.3%, respectively. The detection rates of adenoma and gastrointestinal bleeding from 2018 to 2022 were 31.3% and 96.2%, respectively.

AI could improve the detection rate of digestive tract diseases, and convolutional neural network-based diagnosis programs for endoscopic images show promising results.

Core Tip: Gastrointestinal tumors diagnosed at an early stage have a better prognosis than those diagnosed at a late stage, and endoscopy is the most effective approach for detecting them. In recent years, artificial intelligence (AI) has been widely used in gastrointestinal endoscopy examinations, and it represents a fundamental breakthrough in diagnostic endoscopy by assisting endoscopists with the detection of gastrointestinal tumors. This study aimed to provide a comprehensive analysis of AI applied in diagnostic endoscopy. A basic literature analysis was performed, as well as a subgroup analysis of AI-assisted digestive endoscopy in diagnosing various diseases.

- Citation: Du RC, Ouyang YB, Hu Y. Research trends on artificial intelligence and endoscopy in digestive diseases: A bibliometric analysis from 1990 to 2022. World J Gastroenterol 2023; 29(22): 3561-3573

- URL: https://www.wjgnet.com/1007-9327/full/v29/i22/3561.htm

- DOI: https://dx.doi.org/10.3748/wjg.v29.i22.3561

Gastrointestinal cancers (esophageal cancer, gastric cancer, colorectal cancer, etc.) account for 26% of global cancer incidence and 35% of all cancer-related deaths and seriously threaten global health[1]. Gastrointestinal tumors diagnosed at an early stage have a better prognosis than those diagnosed at a late stage. For example, the 5-year survival rates of early and advanced gastric cancer are > 90% and < 30%, respectively. Endoscopy is the most effective approach for detecting gastrointestinal tumors. More than 100 million subjects receive gastrointestinal endoscopy examinations each year.

Artificial intelligence (AI), the intelligence exhibited by machines as opposed to that of humans and other animals, has emerged as a powerful and novel technology influencing many aspects of health care. In recent years, AI-based image recognition has improved dramatically and has been widely used in diagnostic imaging[3-6]. AI represents a fundamental breakthrough in diagnostic endoscopy by assisting endoscopists with the detection of gastrointestinal tumors[7]. Deep learning networks, represented by convolutional neural network (CNN) systems, are a hot research topic at present and have been widely applied in pathology, radiology and endoscopy[8]. The first study focusing on gastrointestinal endoscopy and computer simulation was reported in 1990; it used computer simulation to train endoscopists by constructing a model of the three-dimensional structure of the relevant parts of the gastrointestinal tract[9]. With its development, AI has been extensively used in gastrointestinal endoscopy examinations, such as upper gastrointestinal endoscopy, colonoscopy, wireless capsule endoscopy and ultrasonic endoscopy.

Bibliometric analysis is a statistical approach for comprehensively analyzing the hotspots, trends, and cooperation among institutions and scholars in the published literature of a field[10,11]. In this study, we aimed to quantitatively analyze the research hotspots and trends in the field of AI and endoscopy from 1990 to 2022. We also explored the application of AI-assisted endoscopy in detecting different digestive diseases to support the research and clinical application of AI and endoscopy.

All relevant and qualified data were collected from the Web of Science database. The search strategy was a combination of the following keywords and terms: (“artificial intelligence” OR “AI” OR “deep learning” OR “machine learning” OR “machine intelligence” OR “computer” OR “computational intelligence” OR “neural network” OR “knowledge acquisition” OR “automatic” OR “automated” OR “feature extraction” OR “image segmentation” OR “neural learning” OR “artificial neural network” OR “data mining” OR “data clustering” OR “big data”) AND (“endoscopy” OR “endoscope”). Moreover, only publications written in English between 01 January 1990 and 01 November 2022 were considered.
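The Boolean search string described above can be assembled programmatically, which makes the term lists easier to audit and reuse. The following is a minimal sketch only: the helper names `or_group` and `build_query` are hypothetical, no database field tag is added because none is stated in the text, and the language and date restrictions are assumed to be applied separately as database filters.

```python
# Term lists copied from the search strategy described in the text.
AI_TERMS = [
    "artificial intelligence", "AI", "deep learning", "machine learning",
    "machine intelligence", "computer", "computational intelligence",
    "neural network", "knowledge acquisition", "automatic", "automated",
    "feature extraction", "image segmentation", "neural learning",
    "artificial neural network", "data mining", "data clustering", "big data",
]
ENDOSCOPY_TERMS = ["endoscopy", "endoscope"]


def or_group(terms):
    """Quote each term, join the terms with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(ai_terms=AI_TERMS, endoscopy_terms=ENDOSCOPY_TERMS):
    """Combine the two OR-groups with AND (hypothetical helper, not the authors' tool)."""
    return f"{or_group(ai_terms)} AND {or_group(endoscopy_terms)}"


if __name__ == "__main__":
    print(build_query())
```

Keeping the terms in lists rather than in one long string makes it straightforward to rerun the search with an added or removed synonym.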

Two authors (Ren-Chun Du and Yao-Bin Ouyang) independently evaluated all relevant articles in two stages, and any differences (the inclusion of literature, classification of disease and endoscopy, weight of different studies, etc.) were resolved through full discussion with the third author (Yi Hu). In the first stage, basic research, reviews, case reports and meta-analyses were excluded. In the second stage, the contents of the remaining articles were carefully assessed according to the patient, intervention, comparison, outcome and study design (PICOS) criteria. “P” (patient): Patients with digestive diseases or health care; “I” (intervention): AI-assisted endoscopic examination; “C” (comparison): Routine endoscopic examination; “O” (outcome): The accuracy of AI and routine endoscopic examination in detecting digestive diseases; and “S” (study design): Retrospective studies, prospective randomized controlled trials (RCTs) and non-RCTs.

The included articles were assessed and downloaded into various file formats for analysis by the two investigators. The following data were extracted: Author, institution, country, endoscopy type, disease type, performance of AI (including sensitivity, specificity, and accuracy), citations, year of publication, and H-index. Each study was weighted according to its number of test samples, and the weighted average of the AI performance data for each disease was calculated. Agreement between the two investigators was assessed using the kappa value.
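The two calculations mentioned above, a sample-size-weighted average and a kappa value for inter-rater agreement, can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the function names are hypothetical, the kappa shown is Cohen's kappa for two raters (the text does not specify the variant), and the numbers in the demo are invented.

```python
def weighted_mean(values, weights):
    """Average of `values` where each value counts in proportion to its weight
    (here: the number of test samples in the study that reported it)."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(v * w for v, w in zip(values, weights)) / total


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items (assumed variant)."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty item list")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    labels = set(rater_a) | set(rater_b)
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n) for label in labels
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Invented example: two studies reporting accuracy on 100 and 300 test samples.
    print(weighted_mean([0.90, 0.80], [100, 300]))
    # Invented example: inclusion decisions by two reviewers on four articles.
    a = ["include", "include", "exclude", "exclude"]
    b = ["include", "exclude", "exclude", "exclude"]
    print(cohen_kappa(a, b))
```

Weighting by test-set size means that a small study with an extreme result moves the pooled figure less than a large study does.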

The data were imported into VOSviewer software (version 1.6.18), GraphPad Prism (version 8) and CiteSpace (version 6.1.R3) to generate the visual graphs. CiteSpace and VOSviewer were used to perform the quantitative and qualitative analyses. The cluster network was generated by VOSviewer to assess the most productive and cooperative authors, countries and institutions. Each dot in the visual graphs represents an author, institution or country, and the dots were clustered into groups based on their cooperation. The size of a dot corresponds to the number of articles, while the strength of a connection is based on the frequency of cooperation. The options used in CiteSpace included a time slice set to “1990-2022,” with 1 year per slice and the selection criteria set to “g-index.” The scale factor k was set to “25”, and “pathfinder” was selected to preserve the optimal structure while reducing the total links[12]. Clusters were labeled with keywords extracted by a latent semantic indexing algorithm.
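To make the “g-index” selection criterion with scale factor k more concrete, the sketch below computes a scaled g-index for one time slice. It assumes that the rule is the largest g such that g² ≤ k × (sum of the citation counts of the g most-cited items). That formula is not stated in the text and should be checked against the CiteSpace documentation. The function name and the sample counts are hypothetical.

```python
def scaled_g_index(citations, k=1):
    """Largest rank g with g*g <= k * (sum of the top-g citation counts).

    With k=1 this is the classic g-index; a larger k admits more items.
    Assumed to mirror CiteSpace's selection criterion, not taken from its code.
    """
    ranked = sorted(citations, reverse=True)
    running_total = 0
    g = 0
    for rank, cites in enumerate(ranked, start=1):
        running_total += cites
        if rank * rank <= k * running_total:
            g = rank
    return g


if __name__ == "__main__":
    slice_citations = [10, 5, 3, 1, 0]  # invented counts for one yearly slice
    print(scaled_g_index(slice_citations, k=1))
    print(scaled_g_index(slice_citations, k=25))
```

Under this assumption, raising k from 1 to 25 loosens the threshold, so more cited items per yearly slice enter the network before pathfinder pruning is applied.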

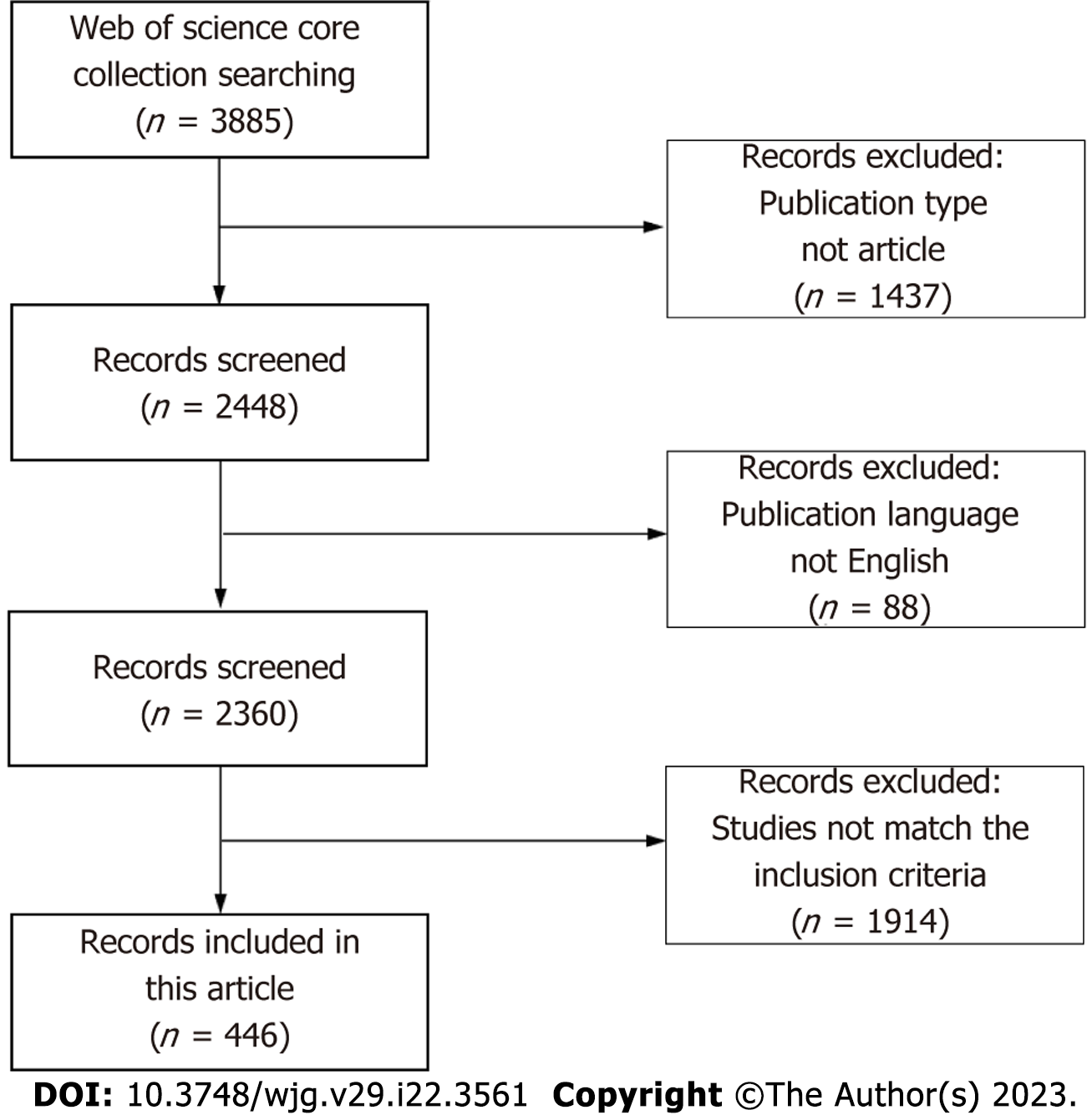

As shown in Figure 1, 3885 papers were retrieved from the Web of Science through our search strategy. In the first stage of selection, 1437 publications were excluded because of publication type, and 88 publications were excluded because of language. The contents of the remaining 2360 articles were carefully assessed. Ultimately, 446 articles meeting the inclusion criteria were included.
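The screening counts above can be checked with a few lines of arithmetic. The variable names are illustrative, and the number excluded after full assessment is derived here rather than reported in the text.

```python
# Counts reported for the selection flow (Figure 1).
retrieved = 3885
excluded_publication_type = 1437
excluded_language = 88
included = 446

# Articles left for full assessment after the first-stage exclusions.
assessed = retrieved - excluded_publication_type - excluded_language

# Derived, not reported: articles excluded during the second-stage assessment.
excluded_after_assessment = assessed - included

print(assessed)                   # 2360, matching the text
print(excluded_after_assessment)  # 1914
```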

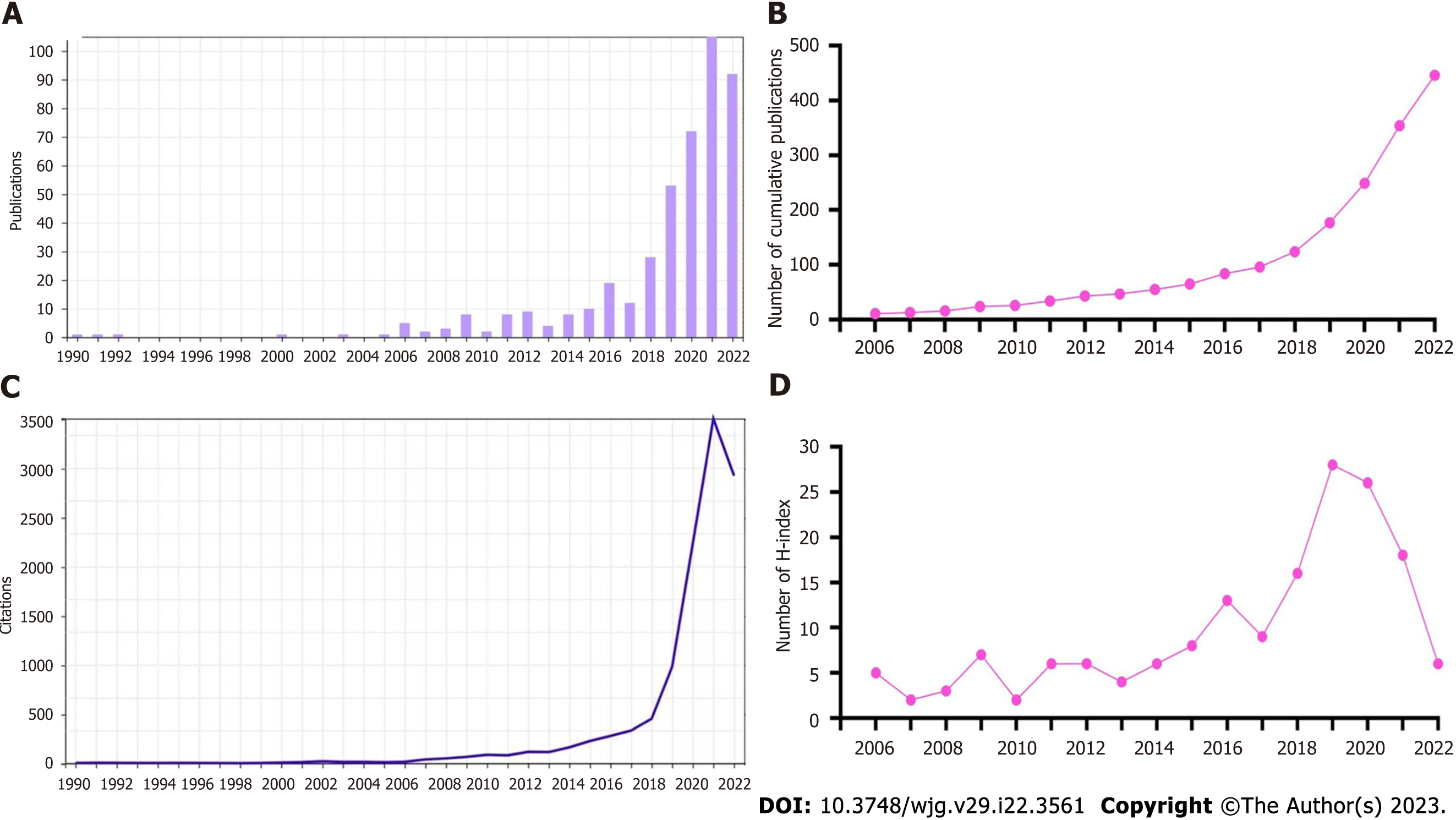

Next, we summarized the characteristics of the included articles. The chronological distribution of annual publication numbers from 1990 to 2022 is shown in Figure 2A. The first study we obtained was published in 1990[9]. Fewer than 10 publications per year were reported in this field before 2015. The number of publications increased rapidly after 2015 and peaked in 2021, which accounted for 23.5% of all publications. Figure 2B displays the cumulative number of publications. The number of annual publications gradually increased after 2013 except for a decrease in 2017. The number of annual citations (Figure 2C) was relatively low from 1990 to 2010 (below 100 citations per year). It increased slowly after 2006 and quickly after 2018, remaining high after 2020 (over 2000 citations). The annual H-index (Figure 2D) was relatively low (< 10) before 2016, but then increased rapidly and peaked at 28 in 2019, followed by a slight decrease from 2020 to 2022.

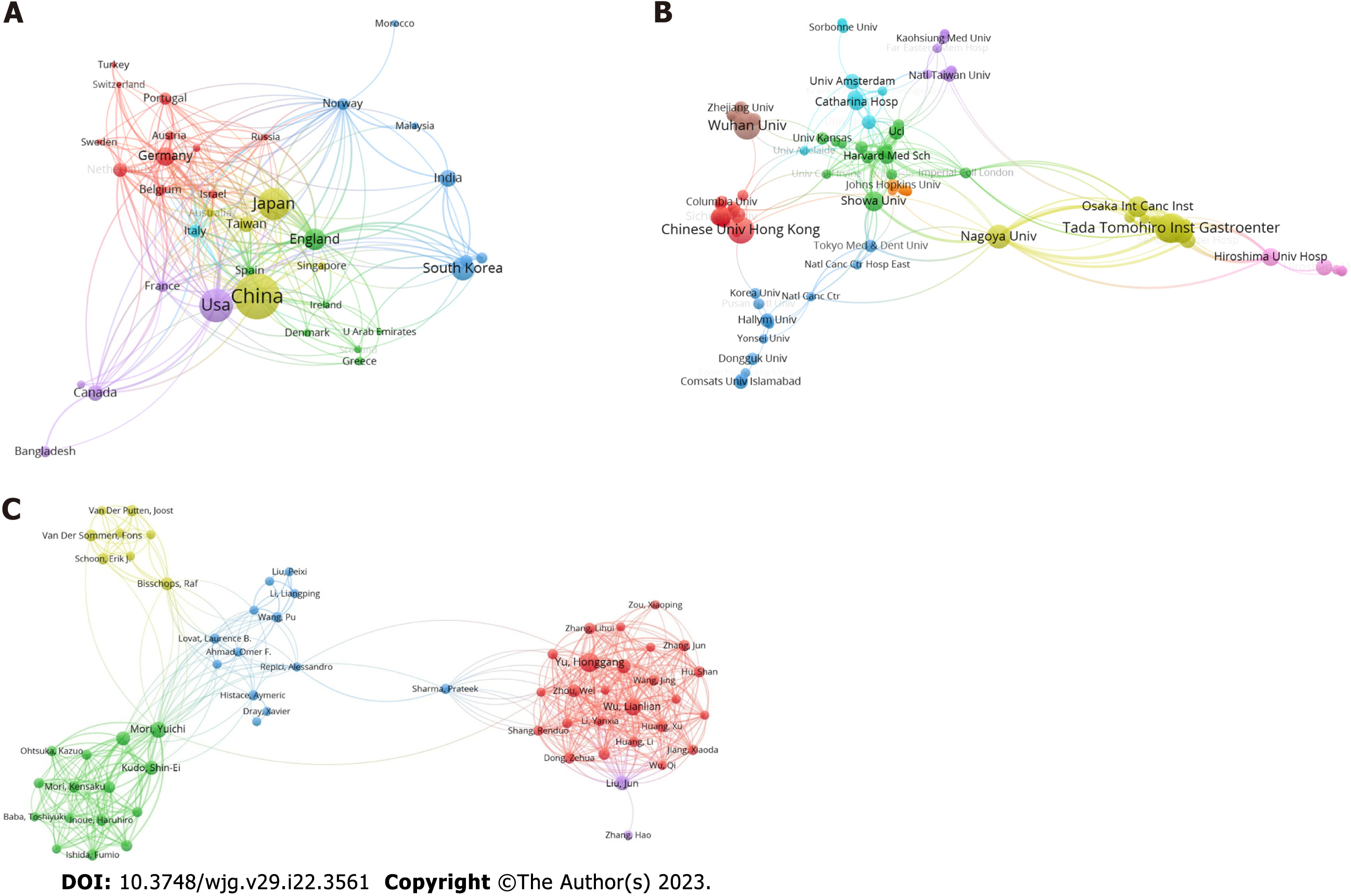

The cooperation network of publication countries is displayed in Figure 3A, including a total of 50 countries and 524 cooperations. Next, the H-index, total citations and publications of the top 10 most productive countries were analyzed and are presented in Supplementary Table 1. China emerged as the most productive country with 128 publications, 4089 citations and an H-index of 36. Figure 3B shows the cooperation cluster network of institutions, with a total of 106 institutions and 708 cooperations displayed. These institutions were clustered into different groups according to their cooperations. The top 10 most productive institutions were further analyzed for their publications, citations and H-index, and the results are presented in Supplementary Table 2. The Tada Tomohiro Institute of Gastroenterology and Proctology had the highest number of publications (22), citations (1454), and H-index (17). Next, the publications, citations, H-index and cooperations of the 10 most productive authors were also analyzed. A total of 63 authors and 996 cooperations are shown in Figure 3C. As shown in Supplementary Table 3, Tada Tomohiro was the most productive author with 23 publications, an H-index of 17 and 1456 citations, followed by Yu HG, Wu LL, and Ishihara S.
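Because the H-index is used above to rank countries, institutions and authors, a short sketch of its standard definition may help: it is the largest h such that h publications each have at least h citations. The function name and sample citation counts are illustrative and are not data from this study.

```python
def h_index(citations):
    """Largest h such that at least h publications have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts are sorted, so no later rank can qualify
    return h


if __name__ == "__main__":
    sample = [10, 8, 5, 4, 3]  # invented citation counts for five papers
    print(h_index(sample))
```

The index can never exceed the number of publications, which is why it rewards sustained output rather than a single highly cited paper.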

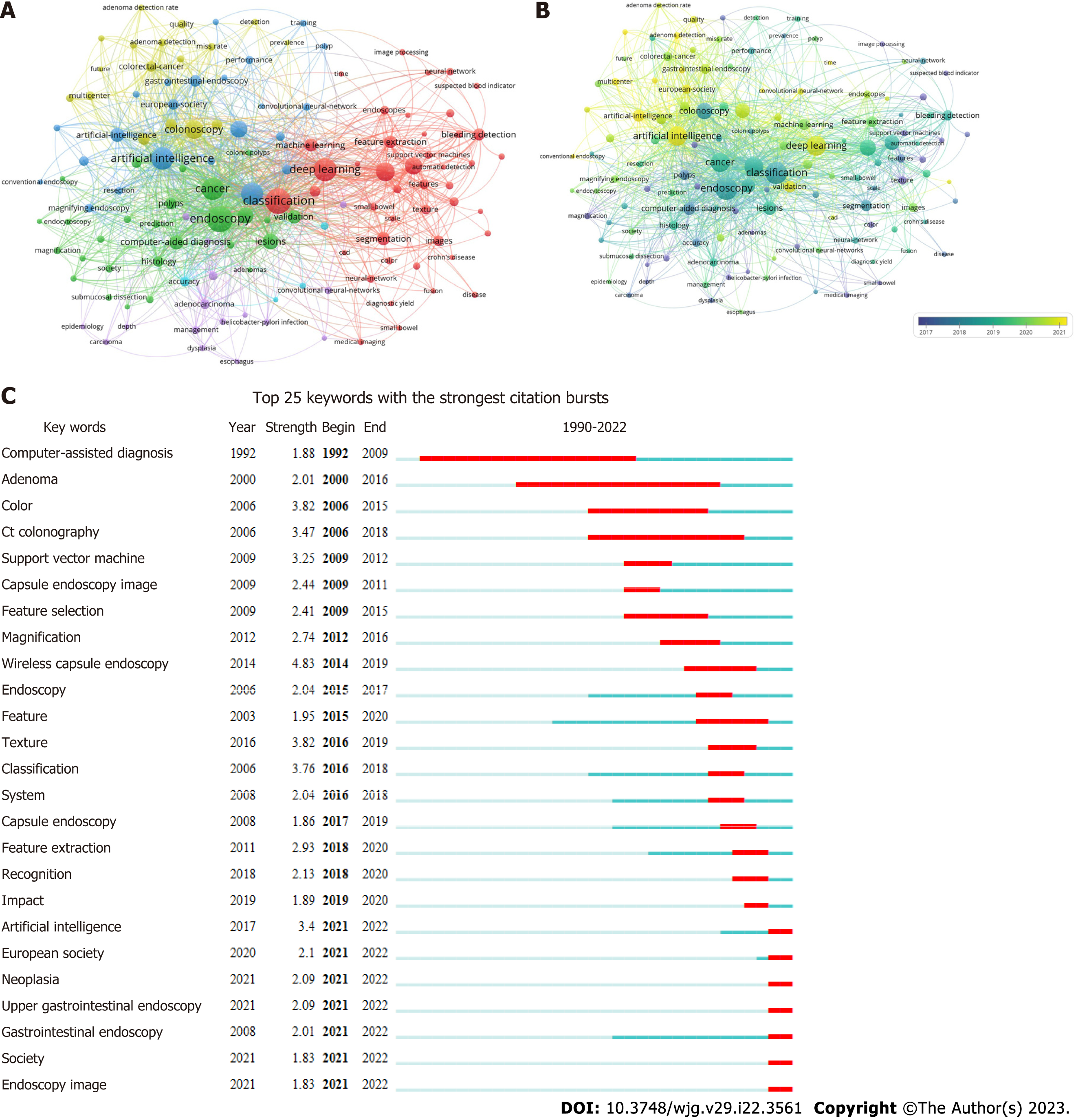

To understand the popular topics in the realm of AI and endoscopy, we performed a keyword co-occurrence analysis of the keywords associated with each paper, based on titles and abstracts. A total of 131 keywords appearing at least 5 times were identified and displayed as a bubble graph. VOSviewer was used to generate the keyword co-occurrence visualization map, in which the keywords were grouped into distinct clusters, each marked with a unique color. Three categories were identified: AI, deep learning and endoscopy (Figure 4A). The co-occurrence visualization map based on the average year of publication is shown in Figure 4B. “Magnification” and “adenomas” mainly appeared before 2017. The keywords “classification”, “endoscopy”, and “cancer” became more common after 2017. Keywords colored in yellow are the latest, such as “AI”, “deep learning”, and “CNN”.
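The counting step behind a keyword co-occurrence map can be sketched as below: keywords are counted per paper, those below a minimum occurrence threshold are dropped, and each pair of surviving keywords in the same paper adds one co-occurrence. This is a simplified illustration with a hypothetical function name and invented input. It does not reproduce VOSviewer's normalization, layout or clustering.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(papers, min_occurrences=5):
    """Return (keyword counts, pair counts) for keywords meeting the threshold.

    `papers` is an iterable of keyword lists, one list per paper.
    """
    # Lower-case and de-duplicate keywords within each paper.
    keyword_sets = [{kw.lower() for kw in keywords} for keywords in papers]
    occurrences = Counter(kw for kws in keyword_sets for kw in kws)
    kept = {kw for kw, n in occurrences.items() if n >= min_occurrences}

    pairs = Counter()
    for kws in keyword_sets:
        # Sorting gives each unordered pair one canonical (a, b) key.
        for a, b in combinations(sorted(kws & kept), 2):
            pairs[(a, b)] += 1
    return occurrences, pairs


if __name__ == "__main__":
    demo = [["AI", "polyps"], ["AI", "polyps", "CNN"], ["AI", "cancer"]]
    counts, links = keyword_cooccurrence(demo, min_occurrences=2)
    print(counts.most_common(2))
    print(dict(links))
```

In a map of this kind, the keyword counts would set the bubble sizes and the pair counts the link strengths.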

Burst detection identifies a sudden surge in the occurrence of a concept during a specific period. As shown in Figure 4C, wireless capsule endoscopy ranked first in burst strength (4.83) over the past three decades, followed by color (3.82) and texture (3.82). Computer-assisted diagnosis became the focus of research after 1993. It is worth noting that AI, neoplasia, upper gastrointestinal endoscopy, society, and endoscopic imaging have shown the strongest bursts since 2022.

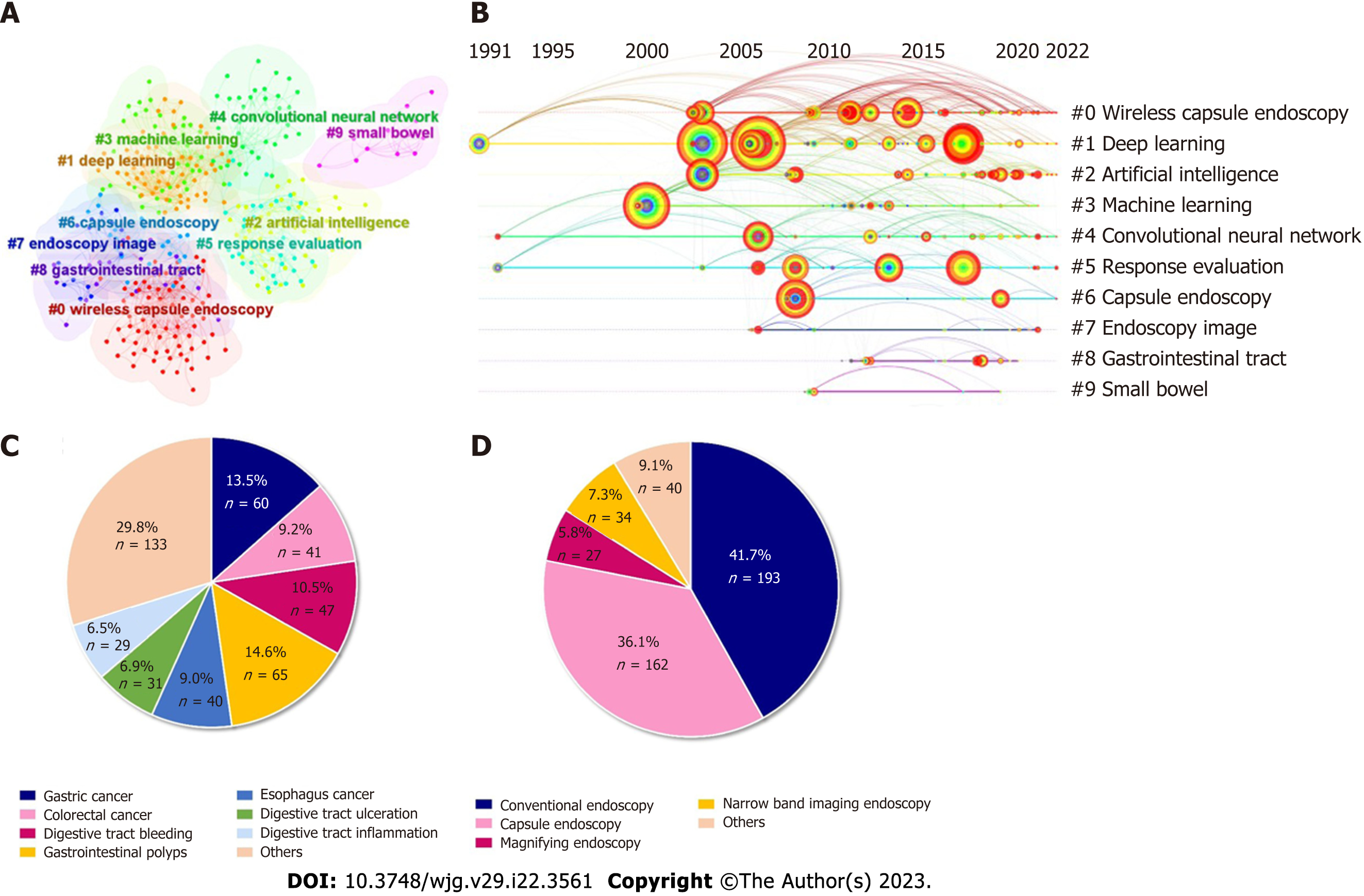

A network of references that are cocited by publications is referred to as a cocitation network. When a group of references is repeatedly cited together, a cluster network diagram (Figure 5A) and a literature cocitation diagram (Figure 5B) can be generated. The “Pathfinder” and “Pruning Networks” options were used to construct the optimal network. Each node in the network represents a cited article, and its size reflects the total citation frequency of that article. The cocited literature was categorized into 10 major labels: Wireless capsule endoscopy, deep learning, AI, machine learning, CNN, response evaluation, capsule endoscopy, endoscopic imaging, gastrointestinal tract, and small bowel (Figure 5A). Figure 5B shows a timeline view of distinct cocitations. Deep learning, CNN and response evaluation have always been popular research topics. Wireless capsule endoscopy, AI, and machine learning have been popular research topics since 2000.

Table 1 lists the 10 most cited studies from 1990 to 2022. Each study was cited between 145 and 323 times. Three of these studies were published in Gastroenterology, and the remaining 7 were published in Gastric Cancer, GUT, Annals of Internal Medicine, Gastrointestinal Endoscopy (2 studies), IEEE Journal of Biomedical and Health Informatics, and Lancet Gastroenterology and Hepatology. The most cited article was the application of AI using a CNN for detecting gastric cancer in endoscopic images. Five articles focused on AI applied in the detection of polyps. Another 5 articles focused on AI applied in cancer detection.

| Rank | Journal | Title | Citations (Web of Science) | Affiliations | Ref. |
| --- | --- | --- | --- | --- | --- |
| 1 | Gastric Cancer 2018; 21: 653-660 | Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images | 323 | Tada Tomohiro Inst Gastroenterol and Proctol, Saitama, Japan | Hirasawa et al[26], 2018 |
| 2 | GUT 2019; 68: 1813-1819 | Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: A prospective randomised controlled study | 307 | Sichuan Provincial People’s Hospital, China | Wang et al[23], 2019 |
| 3 | Annals of Internal Medicine 2018; 169: 357-366 | Real-Time Use of Artificial Intelligence in Identification of Diminutive Polyps During Colonoscopy: A Prospective Study | 227 | Showa University, Japan | Mori et al[39], 2018 |
| 4 | Gastroenterology 2018; 154: 568-575 | Accurate Classification of Diminutive Colorectal Polyps Using Computer-Aided Analysis | 208 | Triservice General Hospital, National Defense Medical Center, Taiwan | Chen et al[40], 2018 |
| 5 | Gastrointestinal Endoscopy 2019; 89: 25-32 | Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks | 194 | Japanese Foundation for Cancer Research, Japan | Horie et al[28], 2019 |
| 6 | IEEE Journal of Biomedical and Health Informatics 2017; 21: 41-47 | Automatic Detection and Classification of Colorectal Polyps by Transferring Low-Level CNN Features From Nonmedical Domain | 191 | Chinese University of Hong Kong, China | Zhang et al[35], 2017 |
| 7 | Gastroenterology 2013; 144: 81-91 | Real-Time Optical Biopsy of Colon Polyps With Narrow Band Imaging in Community Practice Does Not Yet Meet Key Thresholds for Clinical Decisions | 169 | Stanford University, United States | Ladabaum et al[41], 2013 |
| 8 | Gastroenterology 2020; 159: 512-520 | Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial | 155 | Humanitas University, IRCCS Humanitas Research Hospital, Italy | Repici et al[42], 2020 |
| 9 | Gastrointestinal Endoscopy 2019; 89: 806-815 | Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy | 150 | Fudan University, China | Zhu et al[43], 2019 |
| 10 | Lancet Gastroenterology and Hepatology 2020; 5: 343-351 | Effect of a deep-learning computer-aided detection system on adenoma detection during colonoscopy (CADe-DB trial): a double-blind randomised study | 145 | Sichuan Provincial People’s Hospital, China | Wang et al[44], 2020 |

We then calculated the proportion of studies addressing each disease in the field of AI and endoscopy. As shown in Figure 5C, gastrointestinal polyps accounted for the largest proportion (14.6%), followed by gastric cancer (13.5%). The proportion of each endoscopy type was also calculated. As shown in Figure 5D, conventional endoscopy accounted for the largest proportion (42.3%), followed by capsule endoscopy (35.5%).

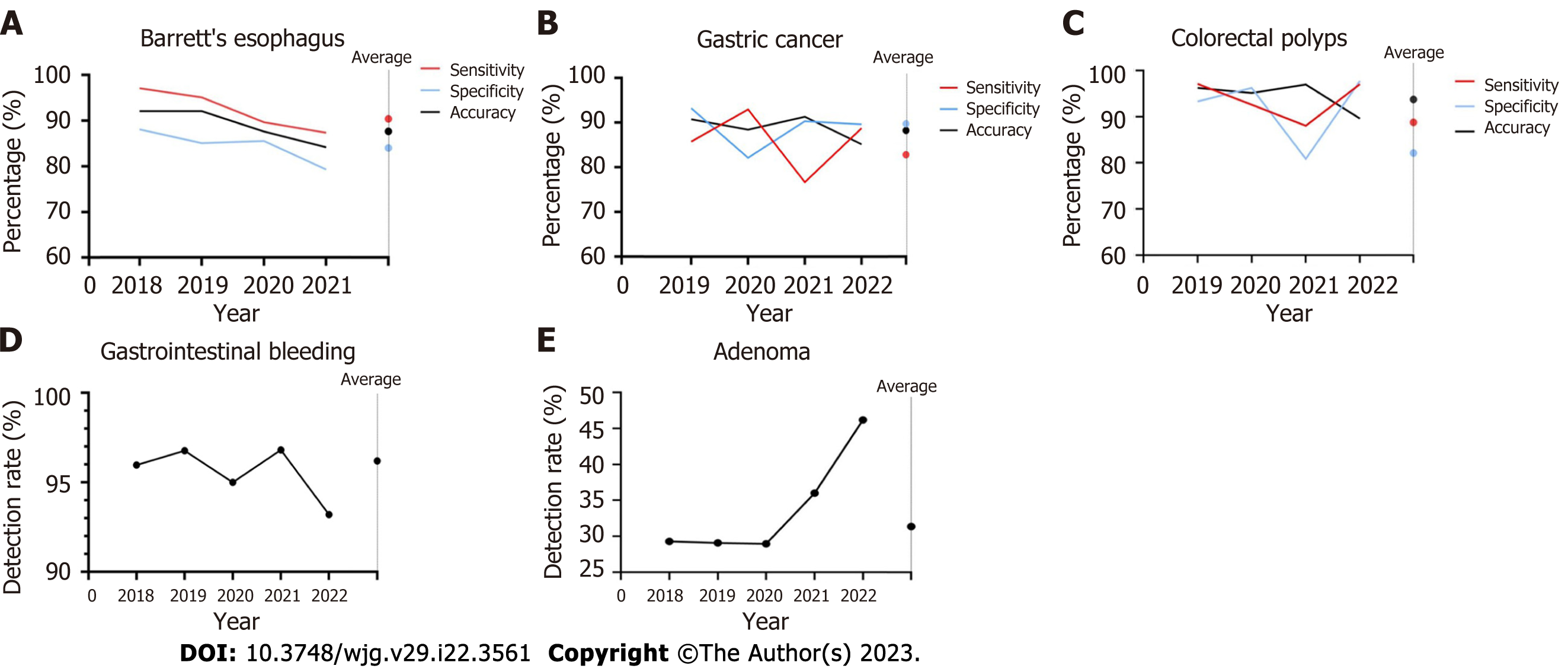

We further analyzed the temporal variation in the diagnostic performance of AI-assisted endoscopy for digestive diseases. The sensitivity, specificity and accuracy of AI-assisted endoscopy in Barrett’s esophagus, gastric cancer and polyps are shown in Figure 6A-C. For Barrett’s esophagus, the studies published in 2018 showed the highest sensitivity (97.0%), specificity (88.0%) and accuracy (92.0%). The weighted average sensitivity, specificity and accuracy of AI in detecting Barrett’s esophagus from 2018 to 2021 were 90.4%, 84.0%, and 87.6%, respectively. In terms of gastric cancer, the studies in 2019 showed the highest accuracy (90.8%) and specificity (93.3%), and the studies in 2020 showed the highest sensitivity (92.3%). The weighted average sensitivity, specificity and accuracy of AI in detecting gastric cancer from 2019 to 2022 were 82.8%, 89.8%, and 88.3%, respectively. For detecting colorectal polyps, the studies in 2019 showed the highest sensitivity (97.1%), the studies in 2022 showed the highest specificity (97.7%), and the studies in 2021 showed the highest accuracy (96.9%). The weighted average sensitivity, specificity and accuracy of AI in detecting polyps from 2019 to 2022 were 89.6%, 82.1% and 93.7%, respectively. As shown in Figure 6D-E, the detection rate of gastrointestinal bleeding remained stable above 95% from 2018 to 2021 (weighted average: 96.2%). The adenoma detection rate remained stable at approximately 29% from 2018 to 2020. A higher detection rate (46.2%) was achieved in 2022 (weighted average: 31.3%).
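For reference, the three performance measures reported above follow from the four cells of a per-study confusion matrix. The sketch below uses the standard definitions. The function name and the counts in the demo are invented and do not come from any included study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity and accuracy from confusion-matrix counts.

    tp/fn: diseased cases flagged / missed by the AI system;
    tn/fp: non-diseased cases correctly cleared / wrongly flagged.
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one non-diseased case")
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


if __name__ == "__main__":
    # Invented test set: 100 diseased and 200 non-diseased images.
    for name, value in diagnostic_metrics(tp=90, fp=40, tn=160, fn=10).items():
        print(f"{name}: {value:.3f}")
```

Within a single test set, accuracy is a prevalence-weighted mix of sensitivity and specificity, so it lies between them. Weighted averages pooled over different sets of studies need not show that ordering.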

To the best of our knowledge, this is the first bibliometric analysis to comprehensively illustrate the application of AI-assisted digestive endoscopy in digestive diseases. A basic literature analysis was performed, as well as a subgroup analysis of AI-assisted digestive endoscopy in diagnosing various diseases.

The term “AI” was first proposed in 1956[13]. Cortes et al[14] suggested the first algorithm for pattern recognition 60 years ago. In 2006, a fast-learning algorithm for deep belief networks was proposed[15]. Since then, the number of publications and citations in this field has risen steadily. Machine learning is one of the fastest growing areas of technology[16]. Deep learning is an important branch of machine learning and is further divided into deep neural networks, recurrent neural networks and CNNs[17]. In the field of image analysis, the best results have been achieved by CNNs[18]. In this study, we analyzed the most influential authors, institutions and countries regarding AI and endoscopy. Among these countries, China had the most publications and citations and the highest H-index. Moreover, China, the United States, and Japan are at the center of the co-occurrence map. Wireless capsule endoscopy is one of the most effective endoscopic techniques for examining gastrointestinal diseases such as polyps and ulcers[19] and has been a research hotspot since 2014.

AI-assisted disease detection has been studied for almost all types of digestive endoscopy. Colorectal cancer is the world’s fourth most deadly cancer, with almost 900000 deaths annually[20]. AI-assisted colonoscopy has broad prospects in the screening and diagnosis of colorectal cancer. In our study, colorectal cancer accounted for 9.19% of the diseases studied. Urban et al[21] reported excellent performance of AI-assisted colonoscopy using a deep learning-based CNN, which identified polyps with an accuracy of 96.4%. Similarly, Shin et al[22] compared a hand-crafted feature method with a deep learning-based CNN, and the results demonstrated that the CNN-based framework performed better in detecting polyps, achieving over 90% accuracy, sensitivity, and specificity. The adenoma detection rate reflects the quality of colonoscopy. A prospective randomized controlled study of an automatic polyp detection system based on deep learning showed that the detection rates of polyps and adenomas could be improved from 29% to 45% and from 20% to 29%, respectively[23]. Wang et al[24] also developed and validated a deep-learning algorithm for the detection of polyps during colonoscopy, which showed that AI could efficiently assist endoscopists in discovering colorectal adenomas and polyps with high accuracy (per-image sensitivity: 94.4%, per-image specificity: 95.5%). Moreover, Pfeifer et al[25] evaluated a newly developed deep CNN with a large sample size (15534 test images), and its sensitivity and specificity for detecting polyps were 90% and 80%, respectively.

Gastric cancer, Barrett’s esophagus and Helicobacter pylori (H. pylori) infection are common upper gastrointestinal diseases. In 2018, Hirasawa et al[26] first reported that a CNN applied to conventional endoscopic images could achieve a diagnostic sensitivity of 92% for gastric cancer. Notably, the CNN system required only 47 s to analyze 2296 images, which is faster than analysis by endoscopists. Ikenoyama et al[27] constructed a CNN to detect early gastric cancer with 2940 test samples, and the sensitivity was 58.4%. The prognosis of esophageal cancer is relatively poor. The first report of AI-assisted endoscopy for the diagnosis of esophageal cancer was by Horie et al[28]: A CNN was trained to diagnose esophageal cancer using 8284 conventional endoscopic images and achieved high sensitivity and accuracy. The weighted average specificity of AI in detecting Barrett’s esophagus from 2018 to 2021 was 84.0%. In 2020, a study with 20 samples achieved a specificity of 85.5%[29]. Another study in the same year with a sample size of 100 achieved a specificity of 78%[30]. H. pylori is the main pathogenic factor of gastric diseases. Shichijo et al[31] reported 89% sensitivity and 87% specificity for the diagnosis of H. pylori infection using a CNN, and the diagnostic accuracy was significantly higher than that of endoscopists. Another study reported consistent results[32].

Many studies have shown that cooperation between AI and endoscopists could improve the diagnosis of gastrointestinal diseases. Ikenoyama et al[27] compared the performance of endoscopists with that of a CNN-based AI classifier in detecting early gastric cancer using 2940 test images, and AI had a significantly higher sensitivity than endoscopists. Niikura et al[33] obtained similar results in another study, in which the per-image diagnostic rate of gastric cancer in the AI group was higher than that in the expert group. Yen et al[34] showed that AI was more accurate than experts in detecting peptic ulcer bleeding. Zhang et al[35] compared a CNN-based image recognition system with visual inspection by endoscopists in identifying polyps, and the results showed a significant improvement in accuracy with the application of AI.

Certain limitations existed regarding the use of AI. First, algorithm performance could be different when using endoscopy equipment manufactured by different companies[23,24]. Second, as AI algo

A bibliometric analysis of AI applied in digestive endoscopy was conducted previously[38]. That study performed a basic analysis of bibliometric indicators. Our study recorded the data of each article in detail. We also analyzed the effectiveness of AI-assisted endoscopy in disease detection, with specific analyses by endoscopy type and gastrointestinal disease, and evaluated the accuracy of AI.

Our study aimed to provide a comprehensive analysis of AI applied in diagnostic endoscopy, and the main findings were as follows: (1) The emergence of deep belief networks contributed to an increase in research in this field; (2) the intelligent detection and recognition of colorectal polyps is the most researched area at present; (3) capsule endoscopy is one of the most influential keywords; (4) the accuracy of AI in detecting Barrett’s esophagus, colorectal polyps and gastric cancer from 2018 to 2022 was 88.08%, 93.71%, and 88.29%, respectively, and the detection rates of adenoma and gastrointestinal bleeding from 2018 to 2022 were 31.38% and 96.20%, respectively; and (5) AI-assisted digestive endoscopy could improve the diagnosis rate of endoscopists in disease detection, and CNN-based diagnosis programs for endoscopic images showed promising results. However, this study has some limitations. First, the search for publications was limited to the Web of Science core collection database, which may have resulted in an incomplete literature search. Second, studies not published in English might have been missed. Third, several biases, such as publication bias, may have affected the results.

In conclusion, AI could improve the detection rate of digestive tract diseases and has been applied widely in the field of endoscopy. Multicenter prospective studies with larger samples should be conducted in the future to further explore the accuracy of AI based on different methods.

More than 100 million subjects receive gastrointestinal endoscopy examinations each year. It is of great importance to improve the quality of endoscopy examinations to discover the lesions. With the development of AI, it has been extensively used in gastrointestinal endoscopy examinations.

To provide a comprehensive analysis of AI applied in diagnostic endoscopy.

AI-assisted digestive endoscopy could improve the diagnosis rate of endoscopists in disease detection, and convolutional neural network-based diagnosis programs for endoscopic images showed promising results.

Gastrointestinal cancers seriously threaten the health of global populations. Gastrointestinal tumors diagnosed at an early stage had a better prognosis than those diagnosed at a late stage. AI represents a fundamental breakthrough in the field of diagnostic endoscopy by assisting endoscopists with the detection of gastrointestinal tumors and it has been extensively used in gastrointestinal endoscopy examinations.

Provenance and peer review: Unsolicited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Oncology

Country/Territory of origin: China

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B, B

Grade C (Good): 0

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Tsoulfas G, Greece; Xie C, China; S-Editor: Chen YL; L-Editor: Filipodia; P-Editor: Zhang YL

| 1. | Arnold M, Abnet CC, Neale RE, Vignat J, Giovannucci EL, McGlynn KA, Bray F. Global Burden of 5 Major Types of Gastrointestinal Cancer. Gastroenterology. 2020;159:335-349.e15. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 857] [Cited by in RCA: 1233] [Article Influence: 246.6] [Reference Citation Analysis (0)] |

| 2. | Hassan C, Spadaccini M, Iannone A, Maselli R, Jovani M, Chandrasekar VT, Antonelli G, Yu H, Areia M, Dinis-Ribeiro M, Bhandari P, Sharma P, Rex DK, Rösch T, Wallace M, Repici A. Performance of artificial intelligence in colonoscopy for adenoma and polyp detection: a systematic review and meta-analysis. Gastrointest Endosc. 2021;93:77-85.e6. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 361] [Cited by in RCA: 308] [Article Influence: 77.0] [Reference Citation Analysis (1)] |

| 3. | Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115-118. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 5683] [Cited by in RCA: 5362] [Article Influence: 670.3] [Reference Citation Analysis (0)] |

| 4. | Bibault JE, Giraud P, Burgun A. Big Data and machine learning in radiation oncology: State of the art and future prospects. Cancer Lett. 2016;382:110-117. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 179] [Cited by in RCA: 182] [Article Influence: 20.2] [Reference Citation Analysis (0)] |

| 5. | Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, Kim R, Raman R, Nelson PC, Mega JL, Webster DR. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA. 2016;316:2402-2410. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 3669] [Cited by in RCA: 3363] [Article Influence: 373.7] [Reference Citation Analysis (0)] |

| 6. | Misawa M, Kudo SE, Mori Y, Takeda K, Maeda Y, Kataoka S, Nakamura H, Kudo T, Wakamura K, Hayashi T, Katagiri A, Baba T, Ishida F, Inoue H, Nimura Y, Oda M, Mori K. Accuracy of computer-aided diagnosis based on narrow-band imaging endocytoscopy for diagnosing colorectal lesions: comparison with experts. Int J Comput Assist Radiol Surg. 2017;12:757-766. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 47] [Cited by in RCA: 49] [Article Influence: 6.1] [Reference Citation Analysis (0)] |

| 7. | Messmann H, Bisschops R, Antonelli G, Libânio D, Sinonquel P, Abdelrahim M, Ahmad OF, Areia M, Bergman JJGHM, Bhandari P, Boskoski I, Dekker E, Domagk D, Ebigbo A, Eelbode T, Eliakim R, Häfner M, Haidry RJ, Jover R, Kaminski MF, Kuvaev R, Mori Y, Palazzo M, Repici A, Rondonotti E, Rutter MD, Saito Y, Sharma P, Spada C, Spadaccini M, Veitch A, Gralnek IM, Hassan C, Dinis-Ribeiro M. Expected value of artificial intelligence in gastrointestinal endoscopy: European Society of Gastrointestinal Endoscopy (ESGE) Position Statement. Endoscopy. 2022;54:1211-1231. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 83] [Cited by in RCA: 72] [Article Influence: 24.0] [Reference Citation Analysis (0)] |

| 8. | Min JK, Kwak MS, Cha JM. Overview of Deep Learning in Gastrointestinal Endoscopy. Gut Liver. 2019;13:388-393. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 74] [Cited by in RCA: 116] [Article Influence: 23.2] [Reference Citation Analysis (0)] |

| 9. | Williams CB, Baillie J, Gillies DF, Borislow D, Cotton PB. Teaching gastrointestinal endoscopy by computer simulation: a prototype for colonoscopy and ERCP. Gastrointest Endosc. 1990;36:49-54. |

| 10. | Ouyang Y, Zhu Z, Huang L, Zeng C, Zhang L, Wu WK, Lu N, Xie C. Research Trends on Clinical Helicobacter pylori Eradication: A Bibliometric Analysis from 1983 to 2020. Helicobacter. 2021;26:e12835. |

| 11. | Palacios-Marqués AM, Carratala-Munuera C, Martínez-Escoriza JC, Gil-Guillen VF, Lopez-Pineda A, Quesada JA, Orozco-Beltrán D. Worldwide scientific production in obstetrics: a bibliometric analysis. Ir J Med Sci. 2019;188:913-919. |

| 12. | Chen C, Dubin R, Kim MC. Emerging trends and new developments in regenerative medicine: a scientometric update (2000-2014). Expert Opin Biol Ther. 2014;14:1295-1317. |

| 13. | McCarthy J, Minsky ML, Shannon CE. A proposal for the Dartmouth summer research project on artificial intelligence, August 31, 1955. AI Magazine. 2006;27:12-14. |

| 14. | Cortes C, Vapnik V. Support-vector networks. Mach Learn. 1995;20:273-297. |

| 15. | Hinton GE, Osindero S, Teh YW. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18:1527-1554. |

| 16. | Jordan MI, Mitchell TM. Machine learning: Trends, perspectives, and prospects. Science. 2015;349:255-260. |

| 17. | Yang YJ, Bang CS. Application of artificial intelligence in gastroenterology. World J Gastroenterol. 2019;25:1666-1683. |

| 18. | Ahmad OF, Soares AS, Mazomenos E, Brandao P, Vega R, Seward E, Stoyanov D, Chand M, Lovat LB. Artificial intelligence and computer-aided diagnosis in colonoscopy: current evidence and future directions. Lancet Gastroenterol Hepatol. 2019;4:71-80. |

| 19. | Iddan G, Meron G, Glukhovsky A, Swain P. Wireless capsule endoscopy. Nature. 2000;405:417. |

| 20. | Dekker E, Tanis PJ, Vleugels JLA, Kasi PM, Wallace MB. Colorectal cancer. Lancet. 2019;394:1467-1480. |

| 21. | Urban G, Tripathi P, Alkayali T, Mittal M, Jalali F, Karnes W, Baldi P. Deep Learning Localizes and Identifies Polyps in Real Time With 96% Accuracy in Screening Colonoscopy. Gastroenterology. 2018;155:1069-1078.e8. |

| 22. | Shin Y, Balasingham I. Comparison of Hand-craft Feature based SVM and CNN based Deep Learning Framework for Automatic Polyp Classification. In: 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). South Korea, 2017: 3277-3280. |

| 23. | Wang P, Berzin TM, Glissen Brown JR, Bharadwaj S, Becq A, Xiao X, Liu P, Li L, Song Y, Zhang D, Li Y, Xu G, Tu M, Liu X. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study. Gut. 2019;68:1813-1819. |

| 24. | Wang P, Xiao X, Glissen Brown JR, Berzin TM, Tu M, Xiong F, Hu X, Liu P, Song Y, Zhang D, Yang X, Li L, He J, Yi X, Liu J, Liu X. Development and validation of a deep-learning algorithm for the detection of polyps during colonoscopy. Nat Biomed Eng. 2018;2:741-748. |

| 25. | Pfeifer L, Neufert C, Leppkes M, Waldner MJ, Häfner M, Beyer A, Hoffman A, Siersema PD, Neurath MF, Rath T. Computer-aided detection of colorectal polyps using a newly generated deep convolutional neural network: from development to first clinical experience. Eur J Gastroenterol Hepatol. 2021;33:e662-e669. |

| 26. | Hirasawa T, Aoyama K, Tanimoto T, Ishihara S, Shichijo S, Ozawa T, Ohnishi T, Fujishiro M, Matsuo K, Fujisaki J, Tada T. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer. 2018;21:653-660. |

| 27. | Ikenoyama Y, Hirasawa T, Ishioka M, Namikawa K, Yoshimizu S, Horiuchi Y, Ishiyama A, Yoshio T, Tsuchida T, Takeuchi Y, Shichijo S, Katayama N, Fujisaki J, Tada T. Detecting early gastric cancer: Comparison between the diagnostic ability of convolutional neural networks and endoscopists. Dig Endosc. 2021;33:141-150. |

| 28. | Horie Y, Yoshio T, Aoyama K, Yoshimizu S, Horiuchi Y, Ishiyama A, Hirasawa T, Tsuchida T, Ozawa T, Ishihara S, Kumagai Y, Fujishiro M, Maetani I, Fujisaki J, Tada T. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest Endosc. 2019;89:25-32. |

| 29. | Waterhouse DJ, Januszewicz W, Ali S, Fitzgerald RC, di Pietro M, Bohndiek SE. Spectral Endoscopy Enhances Contrast for Neoplasia in Surveillance of Barrett's Esophagus. Cancer Res. 2021;81:3415-3425. |

| 30. | Struyvenberg MR, de Groof AJ, van der Putten J, van der Sommen F, Baldaque-Silva F, Omae M, Pouw R, Bisschops R, Vieth M, Schoon EJ, Curvers WL, de With PH, Bergman JJ. A computer-assisted algorithm for narrow-band imaging-based tissue characterization in Barrett's esophagus. Gastrointest Endosc. 2021;93:89-98. |

| 31. | Shichijo S, Nomura S, Aoyama K, Nishikawa Y, Miura M, Shinagawa T, Takiyama H, Tanimoto T, Ishihara S, Matsuo K, Tada T. Application of Convolutional Neural Networks in the Diagnosis of Helicobacter pylori Infection Based on Endoscopic Images. EBioMedicine. 2017;25:106-111. |

| 32. | Itoh T, Kawahira H, Nakashima H, Yata N. Deep learning analyzes Helicobacter pylori infection by upper gastrointestinal endoscopy images. Endosc Int Open. 2018;6:E139-E144. |

| 33. | Niikura R, Aoki T, Shichijo S, Yamada A, Kawahara T, Kato Y, Hirata Y, Hayakawa Y, Suzuki N, Ochi M, Hirasawa T, Tada T, Kawai T, Koike K. Artificial intelligence versus expert endoscopists for diagnosis of gastric cancer in patients who have undergone upper gastrointestinal endoscopy. Endoscopy. 2022;54:780-784. |

| 34. | Yen HH, Wu PY, Su PY, Yang CW, Chen YY, Chen MF, Lin WC, Tsai CL, Lin KP. Performance Comparison of the Deep Learning and the Human Endoscopist for Bleeding Peptic Ulcer Disease. J Med Biol Eng. 2021;41:504-513. |

| 35. | Zhang R, Zheng Y, Mak TW, Yu R, Wong SH, Lau JY, Poon CC. Automatic Detection and Classification of Colorectal Polyps by Transferring Low-Level CNN Features From Nonmedical Domain. IEEE J Biomed Health Inform. 2017;21:41-47. |

| 36. | Hickman SE, Baxter GC, Gilbert FJ. Adoption of artificial intelligence in breast imaging: evaluation, ethical constraints and limitations. Br J Cancer. 2021;125:15-22. |

| 37. | Ngiam KY, Khor IW. Big data and machine learning algorithms for health-care delivery. Lancet Oncol. 2019;20:e262-e273. |

| 38. | Gan PL, Huang S, Pan X, Xia HF, Lü MH, Zhou X, Tang XW. The scientific progress and prospects of artificial intelligence in digestive endoscopy: A comprehensive bibliometric analysis. Medicine (Baltimore). 2022;101:e31931. |

| 39. | Mori Y, Kudo SE, Misawa M, Saito Y, Ikematsu H, Hotta K, Ohtsuka K, Urushibara F, Kataoka S, Ogawa Y, Maeda Y, Takeda K, Nakamura H, Ichimasa K, Kudo T, Hayashi T, Wakamura K, Ishida F, Inoue H, Itoh H, Oda M, Mori K. Real-Time Use of Artificial Intelligence in Identification of Diminutive Polyps During Colonoscopy: A Prospective Study. Ann Intern Med. 2018;169:357-366. |

| 40. | Chen PJ, Lin MC, Lai MJ, Lin JC, Lu HH, Tseng VS. Accurate Classification of Diminutive Colorectal Polyps Using Computer-Aided Analysis. Gastroenterology. 2018;154:568-575. |

| 41. | Ladabaum U, Fioritto A, Mitani A, Desai M, Kim JP, Rex DK, Imperiale T, Gunaratnam N. Real-time optical biopsy of colon polyps with narrow band imaging in community practice does not yet meet key thresholds for clinical decisions. Gastroenterology. 2013;144:81-91. |

| 42. | Repici A, Badalamenti M, Maselli R, Correale L, Radaelli F, Rondonotti E, Ferrara E, Spadaccini M, Alkandari A, Fugazza A, Anderloni A, Galtieri PA, Pellegatta G, Carrara S, Di Leo M, Craviotto V, Lamonaca L, Lorenzetti R, Andrealli A, Antonelli G, Wallace M, Sharma P, Rosch T, Hassan C. Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial. Gastroenterology. 2020;159:512-520.e7. |

| 43. | Zhu Y, Wang QC, Xu MD, Zhang Z, Cheng J, Zhong YS, Zhang YQ, Chen WF, Yao LQ, Zhou PH, Li QL. Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy. Gastrointest Endosc. 2019;89:806-815.e1. |

| 44. | Wang P, Liu X, Berzin TM, Glissen Brown JR, Liu P, Zhou C, Lei L, Li L, Guo Z, Lei S, Xiong F, Wang H, Song Y, Pan Y, Zhou G. Effect of a deep-learning computer-aided detection system on adenoma detection during colonoscopy (CADe-DB trial): a double-blind randomised study. Lancet Gastroenterol Hepatol. 2020;5:343-351. |