Published online Nov 14, 2021. doi: 10.3748/wjg.v27.i42.7240

Peer-review started: April 30, 2021

First decision: June 13, 2021

Revised: June 27, 2021

Accepted: October 20, 2021

Article in press: October 20, 2021

Published online: November 14, 2021

Processing time: 193 Days and 11.3 Hours

Advances in machine learning, computer vision and artificial intelligence methods, combined with advances in processing and cloud computing capability, portend the advent of true real-time decision support during interventions and perhaps, soon, of automated surgical steps. Such capability, deployed intraoperatively alongside existing technology, is termed digital surgery and can be delivered without the need for high-end capital investment in robotics. An area close to clinical usefulness right now harnesses advances in near infrared endolaparoscopy and fluorescence guidance for tissue characterisation through the use of biophysics-inspired algorithms. This represents a potentially synergistic methodology for the deep learning methods currently advancing in ophthalmology, radiology and, recently, gastroenterology via colonoscopy. As databanks of more general surgical videos are created, greater analytic insights can be derived across the operative spectrum of gastroenterological disease and operations (including instrumentation and operative step sequencing and recognition, followed over time by surgeon and instrument performance assessment) and linked to value-based outcomes. However, issues of legality, ethics and even morality need consideration, as do the limiting effects of monopolies, cartels and isolated data silos. Furthermore, the role of the surgeon, surgical societies and healthcare institutions in this evolving field needs active deliberation, as the default risks relegating them to bystanders or passive recipients. This editorial provides insight into this accelerating field by illuminating the near-future and next-decade evolutionary steps towards widespread clinical integration for patient and societal benefit.

Core Tip: Here, we introduce the concept of digital surgery and explain why it is important for everyone involved in gastroenterological disease management. The current state of the art in this area and its near future are discussed, including the use of artificial intelligence methods to provide real-time intraoperative augmented decision making. Moral, ethical and legal challenges pertinent to digital surgery are explored, including concerns relating to big data in health care and the transitioning role of industry in surgical development, as well as the implications that such profound and imminent changes may have for the role of the clinician.

- Citation: Hardy NP, Cahill RA. Digital surgery for gastroenterological diseases. World J Gastroenterol 2021; 27(42): 7240-7246

- URL: https://www.wjgnet.com/1007-9327/full/v27/i42/7240.htm

- DOI: https://dx.doi.org/10.3748/wjg.v27.i42.7240

Since the mid-20th century, our analog electromechanical world has increasingly transitioned to a digital and even automated one, leading to our current epoch being named the “Digital Age” or “Information Age”. While many sectors have been rapid converts to this new order (notably retail and finance), adoption in the healthcare domain has been slower. In surgery in particular, the focus has been on incremental iterations of existing technologies (such as upgraded displays and tweaked surgical instruments) rather than on radical reformatting of existing methods. The reasons behind this are multifactorial, including a natural and understandable professional conservatism regarding clinical care; privacy concerns (of patients and practitioners); ethical, moral and liability uncertainties; and a potential reluctance on the part of system developers to involve themselves in intraprocedural decision making (preferring instead to leave this responsibility firmly in the hands of the human operator, for now at least). The consequence, however, is that the evolution of surgical practice has plateaued.

Even the most sophisticated new machinery in general surgery, such as present-day robotic platforms, depends fully on the practitioner for its value and has yielded only marginal benefit over the past 20 years. This is partly because of inequity in access but mostly because the fundamental distinguishing characteristic of the best operative care is intraoperative decision-making rather than the dexterity of the practitioner. The new great hope is that the digitalisation of surgery, including the integration of artificial intelligence (AI) into the surgical workflow, will lead to better outcomes broadly, although exactly how this will happen remains the challenge. Specifically, “digital surgery” exploits real-time analytics with technology during operations, and this editorial offers perspectives on its impact, now and in the near future, for all those looking after patients with digestive diseases.

This month’s landmark publication of the European Commission’s proposal “Laying Down Harmonised Rules on Artificial Intelligence” attempts to shape the future of AI’s incorporation across society[1]. The document stresses the enormous socioeconomic potential of AI and emphasizes that the same elements that drive its benefits will bring new risks of potentially great consequence. Also this month, the first commercial AI system for endoscopy, “GI Genius” by Medtronic (Dublin, Ireland), has been approved for clinical use by the United States Food and Drug Administration (FDA)[2]. This system and others like it (e.g., Olympus ENDO-AID) act as a “second pair of eyes”, constantly watching the screen and alerting the user to any potential anomaly by highlighting the detected area while leaving the human to decide on its importance[3]. Unlike the endoscopist, the computer aid does not have a single point of focus on the screen and so provides accurate full-field-of-view observation at all times, a digital safety net. While impressive results are reported for polyp detection with this combination (a 14% absolute increase in adenoma detection rate over standard endoscopy), intraprocedural polyp characterisation remains unsupported. Detailed descriptions of AI advances in endoscopic systems have been published elsewhere[4-6].

When assessing such applications, the FDA stratifies the risk associated with an AI tool by the device’s intended use[7]. Technologies designed to treat or diagnose, or to drive clinical management, receive higher risk ratings than those that aim to inform clinical management. Furthermore, to date, the FDA has approved only AI or machine learning systems that are “locked” prior to marketing. In locked systems, the algorithm must provide the same output each time the same input is provided (i.e., these systems cannot “learn” or adapt as they accrue more data, even though this ought to be their hallmark). It is understandable, however, that these early models should function within the safety of a locked domain while the appropriate regulatory and surveillance frameworks for unlocked AI are put in place. Such oversight would need to ensure that the performance and effectiveness of an unlocked system in clinical use are maintained as it evolves through learning over time. Locking also helps to ensure that the functionality of a device can be understood by clinicians and that its abilities are not overstated.
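To make the locked/unlocked distinction concrete, the following is a minimal Python sketch built on a hypothetical one-feature logistic scorer. `ToyDetector`, its parameters and the single repeated training case are invented for illustration and bear no relation to any approved device. The locked instance ignores new data, so the same input always returns the same output; the unlocked instance takes one gradient step on each newly labelled case, so its output for the same input drifts over time, which is the behaviour post-market oversight would need to monitor.

```python
import math
from dataclasses import dataclass


@dataclass
class ToyDetector:
    """Hypothetical one-feature logistic scorer, used only to contrast locked and unlocked behaviour."""
    weight: float
    bias: float
    locked: bool
    learning_rate: float = 0.5

    def predict(self, x: float) -> float:
        # Probability of "anomaly" under the current parameters.
        return 1.0 / (1.0 + math.exp(-(self.weight * x + self.bias)))

    def learn(self, x: float, label: int) -> None:
        # Locked: parameters are frozen, so new cases change nothing.
        if self.locked:
            return
        # Unlocked: one stochastic gradient step on the log-loss for this case.
        error = self.predict(x) - label
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error


locked = ToyDetector(weight=2.0, bias=-1.0, locked=True)
unlocked = ToyDetector(weight=2.0, bias=-1.0, locked=False)
x = 0.4
baseline = locked.predict(x)

# The same confirmed-positive case encountered three times during clinical use.
for _ in range(3):
    locked.learn(x, 1)
    unlocked.learn(x, 1)

locked_after = locked.predict(x)
unlocked_after = unlocked.predict(x)
print(f"locked: {baseline:.3f} -> {locked_after:.3f}")
print(f"unlocked: {baseline:.3f} -> {unlocked_after:.3f}")
```

Because the locked model's output is reproducible, it can be validated once; the unlocked model's output depends on its history of cases, which is why its performance would need ongoing surveillance.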

As more advanced systems come online, however, it is likely that such “unlocked” systems will be approved for use. The endoscopic support systems currently approved therefore represent only a first pass at clinical entry, with subsequent iterations likely to evolve serially towards increasing degrees of data recording, analytics and self-learning and, therefore, automation.

While other areas of surgical practice, such as orthopaedics, neurosurgery and maxillofacial surgery, have embraced surgical preplanning through computer-generated models and intraoperative navigational aids, the incorporation of AI methods into gastrointestinal surgery is only in its infancy[8-10]. The lack of fixed, easily identifiable landmarks and the tendency for the operator to shift the anatomical field completely during the operation (relative to the preoperatively recorded imagery) have proven difficult to accommodate in the way that is possible for interventions on bony structures. Furthermore, the most valuable asset in the advancement of AI methods has been the ability to rapidly accumulate, store, annotate and distribute data streams. As a result, AI has been most readily applied to nonsurgical uses where enormous pre-existing archives of categorised images exist (with data points in the millions), such as screening mammography and retinal screening[11,12]. For similar impact in surgery, computer methods need to be integrated with surgical video, which is inherently more complex and from which it is therefore harder to extract meaningful data points.

Indeed, the necessary technology is only now proving sufficiently capable for such analysis to be envisaged, and the main rush right now relates to the accumulation of videos for future exploitation. The major companies are, therefore, looking at placing systems alongside their core imaging technology that allow material to be uploaded at the operator’s discretion rather than automatically. This is because, with some exceptions, the prevailing business model has been to sell imaging systems with the imagery belonging fully to the purchaser. With the current realisation that such data have real value on an aggregated basis, it is likely that the next full-service subscription models will include data sharing as a contractual clause. In this era of big data, the technical obstacles of video set-up, storage and distribution have already diminished, leaving annotation, curation and linkage to metadata as the challenges still to be addressed.

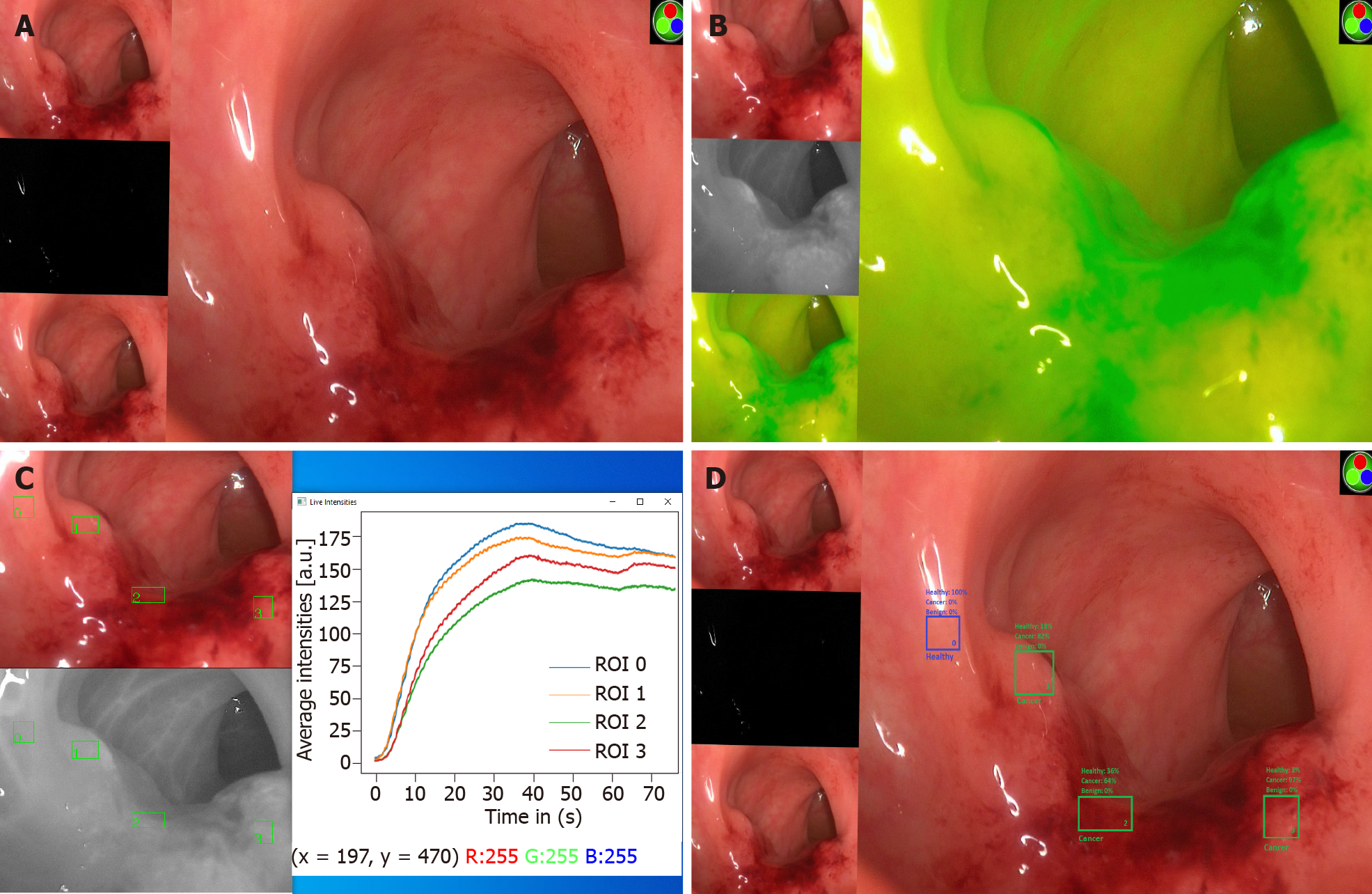

Surgical video contains more information than still images do, which means greater value but also greater complexity. Superimposing more easily interpreted signals onto these videos greatly facilitates data extraction. One method successfully used in this manner combines near infrared imaging with indocyanine green, a fluorescent dye, to disclose information regarding the perfusion status of tissues in surgical videos and, hence, to characterise their nature[13-15]. Indocyanine green binds to albumin within the vasculature, and real-time tracking of fluorescence intensity as the dye circulates can be used to create perfusion signatures of tissues. Alongside prediction of healing–nonhealing risk, this technique, combined with computer vision and AI, has been successfully piloted intraoperatively for the interrogation and classification of colorectal neoplasia with high levels of accuracy, based on biophysics-inspired principles (i.e., perfusion within malignant tissue is fundamentally altered when compared with adjacent healthy regions in the same field of view[16]) (Figure 1).
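As an illustration of what a perfusion signature might contain, the Python sketch below reduces the fluorescence time-intensity curve from one region of interest to a handful of simple descriptors: peak intensity, time to peak, steepest inflow slope and wash-out fraction. This feature set, the function name `perfusion_signature` and the sample curves are assumptions made for illustration only; the published approaches[13-16] use their own, more sophisticated biophysical modelling, and the synthetic curves below are not clinical data.

```python
from typing import Dict, Sequence


def perfusion_signature(times: Sequence[float], intensity: Sequence[float]) -> Dict[str, float]:
    """Summarise one region's fluorescence time-intensity curve (illustrative features only)."""
    if len(times) != len(intensity) or len(times) < 2:
        raise ValueError("need at least two matched time/intensity samples")
    baseline = intensity[0]                  # assume the first frame precedes dye arrival
    rel = [v - baseline for v in intensity]  # background-subtracted signal
    peak_idx = max(range(len(rel)), key=rel.__getitem__)
    peak = rel[peak_idx]
    # Slopes between consecutive samples up to the peak (inflow phase).
    inflow = [(rel[i + 1] - rel[i]) / (times[i + 1] - times[i]) for i in range(peak_idx)]
    # Fraction of the peak signal lost by the final sample (wash-out phase).
    washout = (peak - rel[-1]) / peak if peak > 0 else 0.0
    return {
        "peak": peak,
        "time_to_peak": times[peak_idx] - times[0],
        "max_inflow_slope": max(inflow) if inflow else 0.0,
        "washout_fraction": washout,
    }


# Synthetic curves (arbitrary units, one sample every 5 s) for two regions in one field of view.
times = [0, 5, 10, 15, 20, 25, 30]
region_a = [10, 40, 90, 100, 95, 85, 80]  # fast inflow, early peak, partial wash-out
region_b = [10, 20, 35, 50, 60, 62, 63]   # slow inflow, late peak, no wash-out

sig_a = perfusion_signature(times, region_a)
sig_b = perfusion_signature(times, region_b)
for name, sig in (("region A", sig_a), ("region B", sig_b)):
    print(name, {k: round(v, 3) for k, v in sig.items()})
```

A downstream classifier could then compare such descriptors between a suspicious region and adjacent tissue in the same field of view; that classification step is deliberately not shown.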

Accurate computerised identification and labelling of structures at operation, through fluorescence or otherwise, could soon lead to better, safer surgery. Automated localisation of important structures with operator prompting, such as the inferior mesenteric artery, ureter or nerve bundles during left-sided colonic surgery, would assist experienced surgeons in difficult cases and help to normalise for experience among those at the beginning of their career or with low-volume practices. Deep learning models have even been shown to identify accurately when the critical view of safety has been achieved during laparoscopic cholecystectomy[17]. Once a tissue can be accurately characterised, the next logical progression from on-screen display is the integration of such data into smart instruments to guide operative steps. This may take the form of haptic or auditory feedback or even automatic shutoff of destructive instruments, such as diathermy encountering structures that require safeguarding (this already exists with some orthopaedic instrumentation).
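A hypothetical Python sketch of the kind of rule a smart energy device could apply is shown below. A tissue-recognition model's probability that a protected structure (for example, the ureter) lies within the instrument's active zone is mapped to an enabled, warning (haptic or auditory prompt) or blocked state. The function `energy_gate`, the three states and both threshold values are invented for illustration; any real device would require validated thresholds and regulatory approval.

```python
from enum import Enum


class EnergyState(Enum):
    ENABLED = "enabled"  # activation permitted
    WARN = "warn"        # activation permitted, haptic/auditory prompt issued
    BLOCKED = "blocked"  # activation inhibited


def energy_gate(p_protected: float, warn_at: float = 0.3, block_at: float = 0.7) -> EnergyState:
    """Map P(protected structure within the active zone) to an instrument state.

    The thresholds are illustrative placeholders, not validated values.
    """
    if not 0.0 <= p_protected <= 1.0:
        raise ValueError("p_protected must lie in [0, 1]")
    if not 0.0 <= warn_at < block_at <= 1.0:
        raise ValueError("require 0 <= warn_at < block_at <= 1")
    if p_protected >= block_at:
        return EnergyState.BLOCKED
    if p_protected >= warn_at:
        return EnergyState.WARN
    return EnergyState.ENABLED


# Hypothetical per-frame probabilities as the instrument approaches a protected structure.
stream = [0.05, 0.2, 0.45, 0.8]
states = [energy_gate(p) for p in stream]
print([s.value for s in states])
```

The intermediate warning band reflects the graded options described above: the surgeon is prompted first, and activation is inhibited only when the model is more confident.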

More rudimentary systems of instrument tracking and operative step identification are already available that allow retrospective auditing of performed operations and instrument efficiency[18,19]. Parsing operations into component steps and linking these with metadata, including operative costs, standard outcome measures (e.g., length of hospital stay and complications requiring intervention) and newer patient-centred metrics (e.g., patient-reported outcome measures), could greatly facilitate our move toward value-based health care. Early-stage iterations are now emerging: automated operative phase identification in peroral endoscopic myotomy has recently been described, with the hope that further technological refinement may facilitate intraoperative decision support[20].
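The parsing step described above can be sketched in Python as follows: per-frame phase labels, such as those a video classifier might emit, are collapsed into contiguous segments with start times and durations, and per-phase totals are attached to a case record alongside outcome metadata. The phase names, the field names (`length_of_stay_days`, `complication_requiring_intervention`) and the frame rate are hypothetical placeholders, not a published schema.

```python
from dataclasses import asdict, dataclass
from itertools import groupby
from typing import Dict, List, Sequence


@dataclass
class PhaseSegment:
    phase: str
    start_s: float  # seconds from the start of the recording
    duration_s: float


def segment_phases(frame_labels: Sequence[str], fps: float) -> List[PhaseSegment]:
    """Collapse per-frame phase labels into contiguous, time-stamped segments."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    segments: List[PhaseSegment] = []
    frame = 0
    for phase, run in groupby(frame_labels):
        n_frames = sum(1 for _ in run)
        segments.append(PhaseSegment(phase, frame / fps, n_frames / fps))
        frame += n_frames
    return segments


def phase_totals(segments: Sequence[PhaseSegment]) -> Dict[str, float]:
    """Total time per phase; a phase may recur within one operation."""
    totals: Dict[str, float] = {}
    for seg in segments:
        totals[seg.phase] = totals.get(seg.phase, 0.0) + seg.duration_s
    return totals


# Hypothetical per-frame output of a phase classifier, sampled at 2 frames per second.
labels = ["access", "access", "dissection", "dissection", "dissection",
          "access", "closure", "closure"]
segments = segment_phases(labels, fps=2.0)

# Hypothetical case record linking the parsed operation to outcome metadata.
record = {
    "case_id": "demo-001",
    "phases": [asdict(s) for s in segments],
    "phase_totals_s": phase_totals(segments),
    "length_of_stay_days": 2,
    "complication_requiring_intervention": False,
}
print(record["phase_totals_s"])
```

Keeping segments (order and timing) separate from totals (time spent per phase) lets the same record support both step-sequence analysis and efficiency auditing.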

Collation of surgical outcomes and surgical performance data, whether by individuals wishing to gain insight into their own practice or as part of professional regulatory oversight, still presents potential controversies. The centralised deposition of surgical video followed by anonymised analysis of technique and outcomes, likely by other specialty practitioners initially and by AI when permissible, would provide a means for impartial appraisal and maintenance of standards (of both practitioners and, indeed, surgical instruments). However, there is a risk that the data will be used to promote commercial interests rather than the public good (this also applies to practitioners and/or institutions); as such, great transparency is needed and trust-boards should be considered. In addition, practitioners have privacy rights, as do patients, and the special status of the doctor-patient relationship precludes exploitation of this privilege; this is an important consideration beyond legal compliance alone (e.g., with frameworks such as the General Data Protection Regulation). Furthermore, aggregation of surgical video in siloed collections, however individually large, risks a lack of proper representation. Closed datasets also limit progress if others with relevant interest are excluded (frustrating the reusability element of the findability, accessibility, interoperability and reusability (FAIR) principles), which may precipitate monopolistic practices.

The relatively delayed adoption of AI within surgery permits a glimpse of potential pitfalls through the experience of other sectors. In October 2020, the report of the United States House Antitrust Subcommittee on competition in digital markets noted that, in many digital domains, a few dominant corporations controlled the channels of mass distribution in a manner that allowed them to maintain power and to absorb or remove competitors with ease[21]. This is a real concern, considering the enormous financial incentives associated with success in healthcare provision. Potential safeguards include an open/shared data repository (with appropriate regulatory compliance) upon which clinicians, academics and companies could work, as well as standardised policies for the acquisition and storage of digital data to ensure universal accessibility. Additionally, the history of AI is replete with “boom and bust” cycles, and its promised integration into surgery could prove another false dawn, especially with regard to more complex unsupervised machine learning and deep learning methods[22]. This may result from the complexity involved in fine-tuning these systems to achieve results acceptable for use in humans. Other concerns include controversies surrounding explainability (describing the settings used in the machine), interpretability (the degree to which a human can understand the decision) and bias within training datasets, as well as possible societal rejection of this form of technology in healthcare (akin to the criticisms of AI-based facial recognition software and recidivism predictors, or any perception of data harvesting for surveillance capitalism).

Nevertheless, the formative years of digital surgery have begun. As a consequence, the landscape of surgical practice as it currently stands is likely to undergo rapid and probably frequent evolution. Such changes will have far-reaching implications for the role of professional bodies, societies and institutions and, most fundamentally, clinicians. Moore’s law (the observation that the number of transistors on a chip, and with it available computing power, roughly doubles every 2 years) suggests that the limits of such changes will be neither technological nor computational. Responsibility for implementing digital surgery remains, for now, with the clinician in their duty as patient caregiver. Whether the clinician’s role will, in time, become increasingly one of early enabler and later bystander, or one of co-developer and collaborator, remains to be determined. For the latter, coordinated, purposeful cross-collegiate action is needed urgently.

Manuscript source: Invited manuscript

Corresponding Author's Membership in Professional Societies: Society of American Gastrointestinal and Endoscopic Surgeons (SAGES).

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: Ireland

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): E

P-Reviewer: Hanada E, Qiang Y, Sharma J; S-Editor: Fan JR; L-Editor: Kerr C; P-Editor: Fan JR

| 1. | European Commission. Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts. Brussels. 2021. [cited 1 June 2021]. Available from: https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:52021PC0206&from=EN. |

| 2. | US Food and Drug Administration (FDA). Gastrointestinal lesion software detection. Regulation Number 21 CFR 876.1520. 2021. [cited 1 June 2021]. Available from: https://www.accessdata.fda.gov/cdrh_docs/pdf20/DEN200055.pdf. |

| 3. | Repici A, Badalamenti M, Maselli R, Correale L, Radaelli F, Rondonotti E, Ferrara E, Spadaccini M, Alkandari A, Fugazza A, Anderloni A, Galtieri PA, Pellegatta G, Carrara S, Di Leo M, Craviotto V, Lamonaca L, Lorenzetti R, Andrealli A, Antonelli G, Wallace M, Sharma P, Rosch T, Hassan C. Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial. Gastroenterology. 2020;159:512-520.e7. |

| 4. | Yang YJ, Bang CS. Application of artificial intelligence in gastroenterology. World J Gastroenterol. 2019;25:1666-1683. |

| 5. | Abadir AP, Ali MF, Karnes W, Samarasena JB. Artificial Intelligence in Gastrointestinal Endoscopy. Clin Endosc. 2020;53:132-141. |

| 6. | El Hajjar A, Rey JF. Artificial intelligence in gastrointestinal endoscopy: general overview. Chin Med J (Engl). 2020;133:326-334. |

| 7. | US Food and Drug Administration (FDA). Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. 2021. [cited 25 June 2021]. Available from: www.fda.gov. |

| 8. | Azagury DE, Dua MM, Barrese JC, Henderson JM, Buchs NC, Ris F, Cloyd JM, Martinie JB, Razzaque S, Nicolau S, Soler L, Marescaux J, Visser BC. Image-guided surgery. Curr Probl Surg. 2015;52:476-520. |

| 9. | Myers TG, Ramkumar PN, Ricciardi BF, Urish KL, Kipper J, Ketonis C. Artificial Intelligence and Orthopaedics: An Introduction for Clinicians. J Bone Joint Surg Am. 2020;102:830-840. |

| 10. | Heo MS, Kim JE, Hwang JJ, Han SS, Kim JS, Yi WJ, Park IW. Artificial intelligence in oral and maxillofacial radiology: what is currently possible? Dentomaxillofac Radiol. 2021;50:20200375. |

| 11. | McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, Back T, Chesus M, Corrado GS, Darzi A, Etemadi M, Garcia-Vicente F, Gilbert FJ, Halling-Brown M, Hassabis D, Jansen S, Karthikesalingam A, Kelly CJ, King D, Ledsam JR, Melnick D, Mostofi H, Peng L, Reicher JJ, Romera-Paredes B, Sidebottom R, Suleyman M, Tse D, Young KC, De Fauw J, Shetty S. International evaluation of an AI system for breast cancer screening. Nature. 2020;577:89-94. |

| 12. | Ting DSW, Cheung CY, Lim G, Tan GSW, Quang ND, Gan A, Hamzah H, Garcia-Franco R, San Yeo IY, Lee SY, Wong EYM, Sabanayagam C, Baskaran M, Ibrahim F, Tan NC, Finkelstein EA, Lamoureux EL, Wong IY, Bressler NM, Sivaprasad S, Varma R, Jonas JB, He MG, Cheng CY, Cheung GCM, Aung T, Hsu W, Lee ML, Wong TY. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA. 2017;318:2211-2223. |

| 13. | Cahill RA, O'Shea DF, Khan MF, Khokhar HA, Epperlein JP, Mac Aonghusa PG, Nair R, Zhuk SM. Artificial intelligence indocyanine green (ICG) perfusion for colorectal cancer intra-operative tissue classification. Br J Surg. 2021;108:5-9. |

| 14. | Zhuk S, Epperlein JP, Nair R, Thirupati S, Mac Aonghusa P, Cahill R, O’Shea D. Perfusion Quantification from Endoscopic Videos: Learning to Read Tumor Signatures. 2020 Preprint. Available from: arXiv:2006.14321. |

| 15. | Park SH, Park HM, Baek KR, Ahn HM, Lee IY, Son GM. Artificial intelligence based real-time microcirculation analysis system for laparoscopic colorectal surgery. World J Gastroenterol. 2020;26:6945-6962. |

| 16. | Hardy NP, Mac Aonghusa P, Neary PM, Cahill RA. Intraprocedural Artificial Intelligence for Colorectal Cancer Detection and Characterisation in Endoscopy and Laparoscopy. Surg Innov. 2021;1553350621997761. |

| 17. | Mascagni P, Alapatt D, Urade T, Vardazaryan A, Mutter D, Marescaux J, Costamagna G, Dallemagne B, Padoy N. A Computer Vision Platform to Automatically Locate Critical Events in Surgical Videos: Documenting Safety in Laparoscopic Cholecystectomy. Ann Surg. 2021;274:e93-e95. |

| 18. | Aghdasi N, Bly R, White LW, Hannaford B, Moe K, Lendvay TS. Crowd-sourced assessment of surgical skills in cricothyrotomy procedure. J Surg Res. 2015;196:302-306. |

| 19. | Tulipan J, Miller A, Park AG, Labrum JT 4th, Ilyas AM. Touch Surgery: Analysis and Assessment of Validity of a Hand Surgery Simulation "App". Hand (N Y). 2019;14:311-316. |

| 20. | Ward TM, Hashimoto DA, Ban Y, Rattner DW, Inoue H, Lillemoe KD, Rus DL, Rosman G, Meireles OR. Automated operative phase identification in peroral endoscopic myotomy. Surg Endosc. 2021;35:4008-4015. |

| 21. | United States Subcommittee on Antitrust, Commercial and Administrative Law of the Committee on the Judiciary. Investigation of Competition in Digital Markets. 2020. [cited 1 June 2021]. Available from: https://judiciary.house.gov/uploadedfiles/competition_in_digital_markets.pdf. |

| 22. | Mitchell M. Why AI is Harder Than We Think. 2021 Preprint. Available from: arXiv:2104.12871. |