Copyright

©The Author(s) 2025.

World J Gastroenterol. May 21, 2025; 31(19): 104897

Published online May 21, 2025. doi: 10.3748/wjg.v31.i19.104897


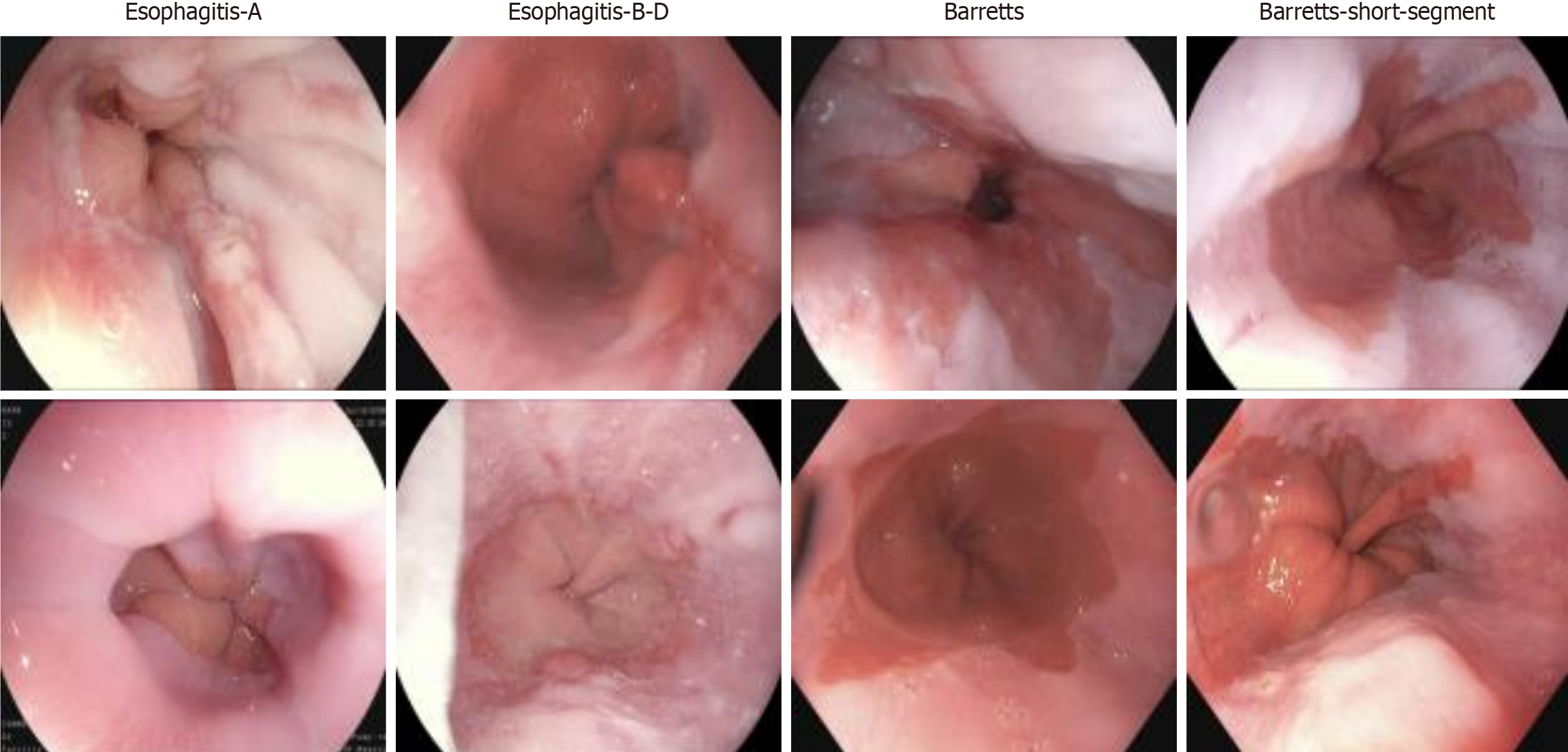

Figure 1 Display of different types of esophageal diseases.

From left to right: esophagitis type A, esophagitis types B-D, short-segment Barrett’s esophagus, and Barrett’s esophagus, in order of increasing severity. Presenting these conditions side by side illustrates the progression from esophageal inflammation to Barrett’s esophagus and the gradual deterioration of esophageal tissue as the disease advances[23].

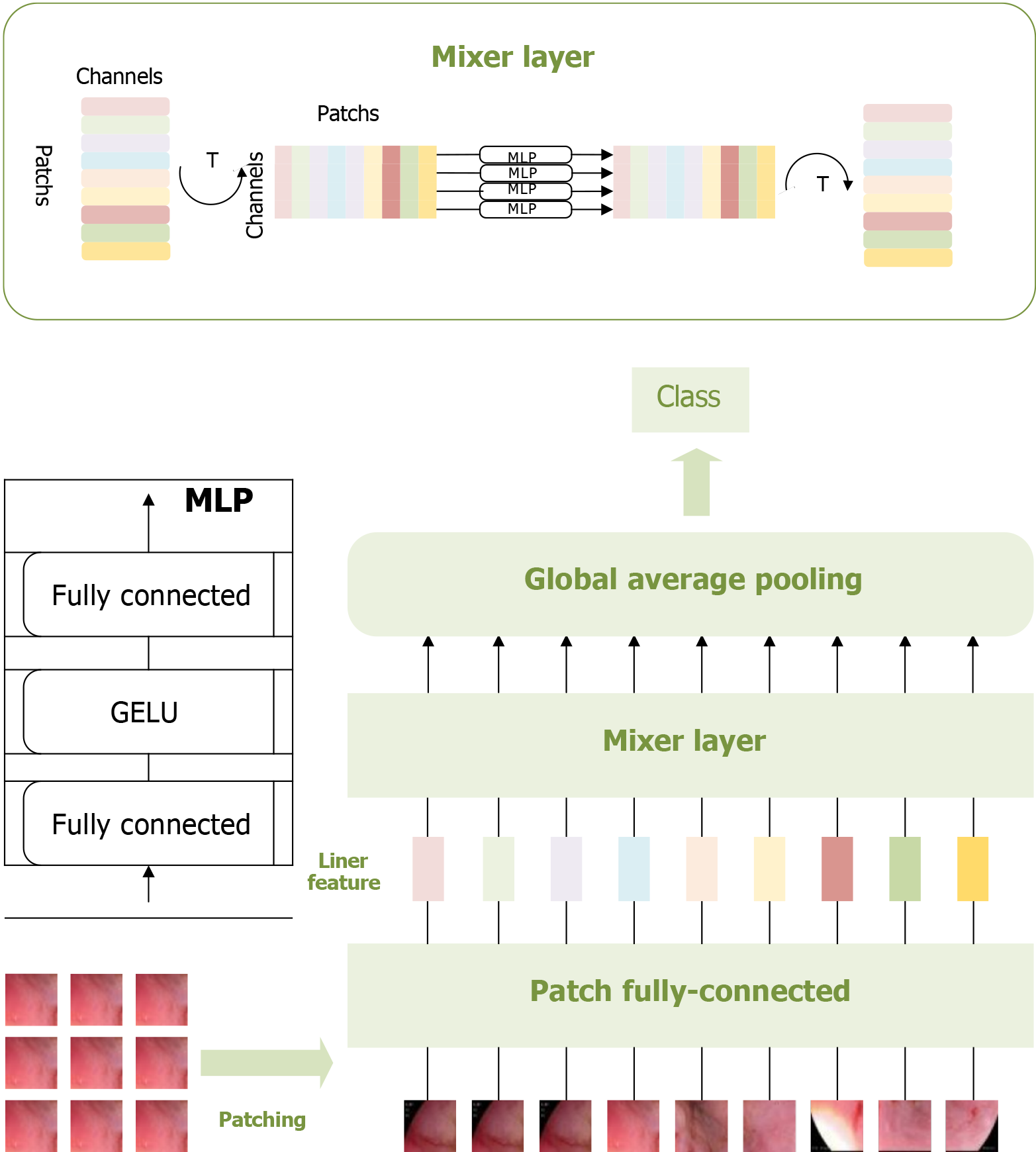

Figure 2 Multi-layer perceptron-based pathological recognition model for esophageal cancer.

This figure visualizes the patching, feature extraction, and classification steps that the multi-layer perceptron-based model applies to esophageal adenocarcinoma images. These three operations constitute the core components of the model. Visualizing them helps interpret the model’s recognition performance and supports a comprehensive analysis of its effectiveness[23]. MLP: Multi-layer perceptron.
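The three steps named in the caption can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors’ model: the function names, the 4 × 4 patch size, the hidden width, the mean pooling of patch features, the four output classes, and the random (untrained) weights are all hypothetical choices.

```python
import math
import random


def patchify(image, patch):
    """Split an H x W grayscale image (a list of rows) into non-overlapping
    patch x patch tiles, each flattened row by row into one vector."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch"
    return [
        [image[r + i][c + j] for i in range(patch) for j in range(patch)]
        for r in range(0, h, patch)
        for c in range(0, w, patch)
    ]


def linear(x, weights, bias):
    """Dense layer y = W x + b, with W stored as a list of output rows."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    """Hypothetical random initialisation; a trained model would load learned weights."""
    w = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out


def mlp_classify(image, patch=4, hidden=16, n_classes=4, seed=0):
    rng = random.Random(seed)
    w1, b1 = init_layer(patch * patch, hidden, rng)
    w2, b2 = init_layer(hidden, n_classes, rng)
    patches = patchify(image, patch)                    # 1) patching
    feats = [relu(linear(p, w1, b1)) for p in patches]  # 2) shared MLP applied to every patch
    pooled = [sum(f[k] for f in feats) / len(feats) for k in range(hidden)]
    return softmax(linear(pooled, w2, b2))              # 3) class probabilities


image = [[(r * 8 + c) / 64 for c in range(8)] for r in range(8)]  # toy 8 x 8 input
probs = mlp_classify(image)
print(len(probs), round(sum(probs), 6))  # 4 1.0
```

With trained weights in place of the random initialization, the class with the highest probability would be the prediction; here the output only demonstrates the data flow from patches to class probabilities.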

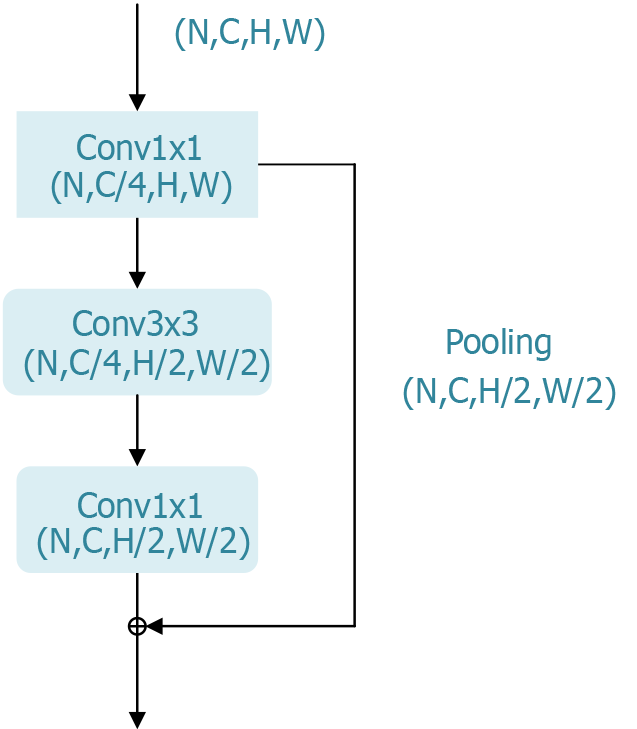

Figure 3 Bottleneck block of the residual network residual module.

The bottleneck block in the residual module of the residual network is a key architectural component of deep convolutional neural networks. It uses a 1 × 1 convolution to reduce the channel dimension, a 3 × 3 convolution at the reduced width, and a second 1 × 1 convolution to restore the original width, which substantially lowers the number of parameters and the computational cost. Combined with the identity shortcut connection of the residual module, which eases gradient flow and mitigates vanishing or exploding gradients, this design allows networks to be deepened to hundreds of layers. Consequently, it significantly improves the performance of deep learning models in image classification and other tasks.
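A quick parameter count shows why the bottleneck design is cheaper. The sketch below assumes a 256-channel block with a reduction factor of 4 (the widths of the original ResNet-50 design; this section does not state the widths used in the study) and ignores bias and batch-normalization parameters. The helper names are hypothetical.

```python
def conv_params(k, c_in, c_out):
    """Number of weights in a k x k convolution (biases and batch norm ignored)."""
    return k * k * c_in * c_out


def basic_pair_params(channels):
    # Two stacked 3x3 convolutions operating at full width
    return 2 * conv_params(3, channels, channels)


def bottleneck_params(channels, reduction=4):
    # 1x1 reduce -> 3x3 at the reduced width -> 1x1 expand back to full width
    mid = channels // reduction
    return (conv_params(1, channels, mid)
            + conv_params(3, mid, mid)
            + conv_params(1, mid, channels))


print(basic_pair_params(256))  # 1179648
print(bottleneck_params(256))  # 69632
```

Under these assumptions the bottleneck needs about 6% of the weights of two full-width 3 × 3 convolutions, which is what makes stacking many such blocks affordable.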

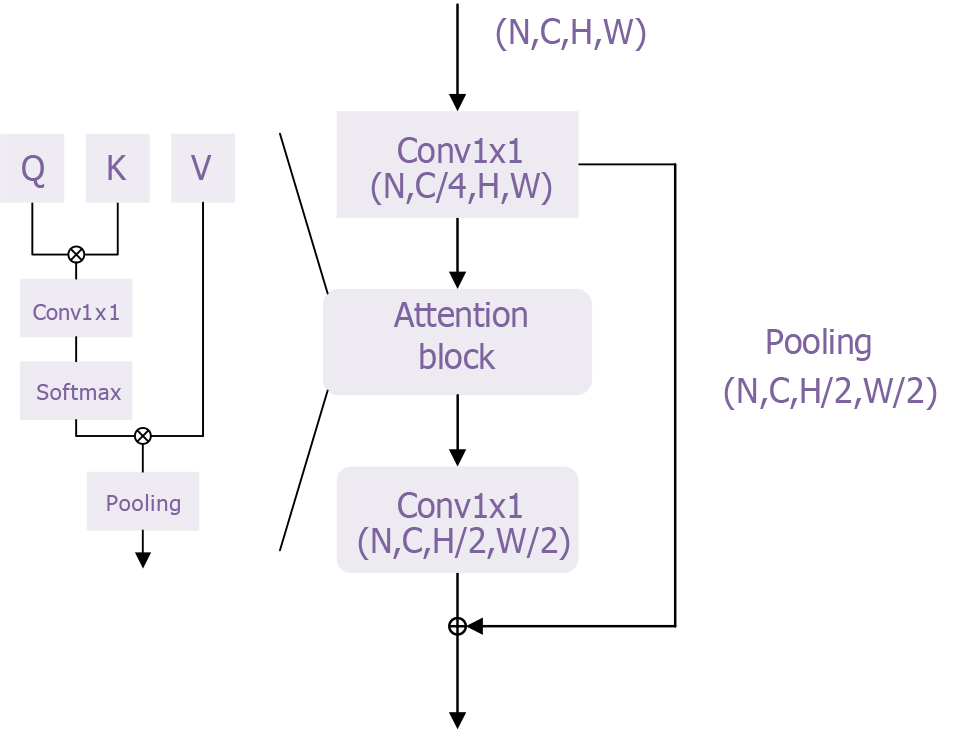

Figure 4 Transformer module of the transformer model.

This figure illustrates the core structure and function of the transformer architecture. The self-attention and multi-head attention mechanisms capture relationships among different features of the input data. This structure strengthens the model’s use of contextual information and allows all positions to be processed in parallel, which improves computational efficiency. The visual representation helps researchers and clinicians understand how the transformer model performs esophageal adenocarcinoma classification, which can increase confidence in the model’s reliability. The figure also supports the study’s findings by clarifying the structural basis of the transformer model’s effectiveness and robustness on complex medical problems, underscoring its potential for clinical application.
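The self-attention and multi-head attention described above can be sketched as scaled dot-product attention, softmax(QKᵀ / √d_k)V, computed independently in each head. This is a minimal plain-Python illustration, not the study’s implementation: the random projection matrices stand in for learned weights, and all function names and sizes are hypothetical.

```python
import math
import random


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    out = []
    for row in m:
        mx = max(row)  # subtract the row max for numerical stability
        e = [math.exp(v - mx) for v in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
    Returns (attention weights, output)."""
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    return weights, matmul(weights, v)


def rand_matrix(rows, cols, rng):
    # Hypothetical random projection standing in for a learned weight matrix
    return [[rng.gauss(0.0, 1.0 / math.sqrt(rows)) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x, n_heads, seed=0):
    """x: n_tokens x d_model list of rows. Each head attends in a d_model/n_heads subspace."""
    rng = random.Random(seed)
    d_model = len(x[0])
    assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
    d_head = d_model // n_heads
    head_outputs = []
    for _ in range(n_heads):
        wq, wk, wv = (rand_matrix(d_model, d_head, rng) for _ in range(3))
        _, out = attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
        head_outputs.append(out)
    # Concatenate head outputs along the feature axis, then apply an output projection
    concat = [[val for h in head_outputs for val in h[i]] for i in range(len(x))]
    return matmul(concat, rand_matrix(d_model, d_model, rng))


tokens = [[float(i == j) for j in range(8)] for i in range(5)]  # 5 toy tokens, d_model = 8
y = multi_head_self_attention(tokens, n_heads=2)
print(len(y), len(y[0]))  # 5 8
```

Because every token’s scores against every other token come from a single matrix product, all positions are handled at once rather than one after another, which is the source of the parallelism mentioned in the caption.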

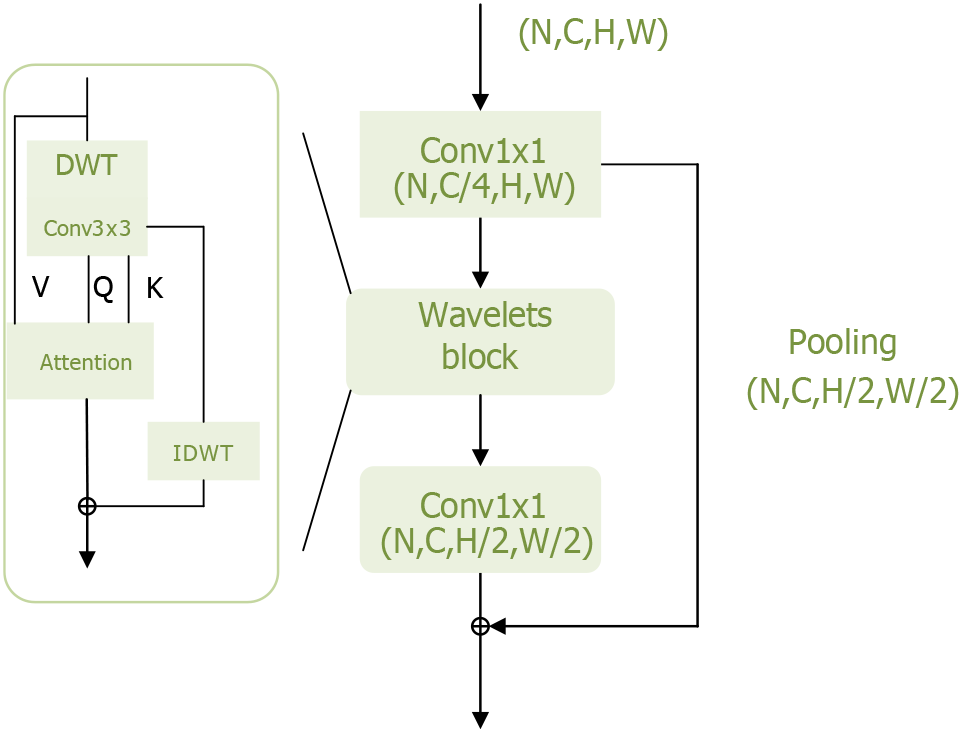

Figure 5 Wavelets module of the Wave-Vision Transformer model.

This figure illustrates the structure and function of the Wavelets module within the Wave-Vision Transformer model. The module uses the wavelet transform for multi-scale feature extraction while preserving information integrity. By integrating the wavelet transform with self-attention mechanisms, Wave-Vision Transformer achieves lossless downsampling: because the discrete wavelet transform is invertible, its sub-bands retain all of the input information, including high-frequency content such as textures and edges, which enhances the model’s sensitivity to fine details. DWT: Discrete wavelet transform.
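As a worked example of why this downsampling is lossless, assume the Haar wavelet (the section does not say which wavelet the module uses). For each 2 × 2 block with top row a, b and bottom row c, d, one level of the 2D Haar DWT produces one coefficient per sub-band; the LH and HL labels are swapped in some references.

```latex
\begin{aligned}
\mathrm{LL} &= \tfrac{1}{2}(a+b+c+d), &\quad \mathrm{LH} &= \tfrac{1}{2}(a-b+c-d),\\
\mathrm{HL} &= \tfrac{1}{2}(a+b-c-d), &\quad \mathrm{HH} &= \tfrac{1}{2}(a-b-c+d),\\[4pt]
a &= \tfrac{1}{2}(\mathrm{LL}+\mathrm{LH}+\mathrm{HL}+\mathrm{HH}), &\quad
b &= \tfrac{1}{2}(\mathrm{LL}-\mathrm{LH}+\mathrm{HL}-\mathrm{HH}),\\
c &= \tfrac{1}{2}(\mathrm{LL}+\mathrm{LH}-\mathrm{HL}-\mathrm{HH}), &\quad
d &= \tfrac{1}{2}(\mathrm{LL}-\mathrm{LH}-\mathrm{HL}+\mathrm{HH}),\\[4pt]
a^2+b^2+c^2+d^2 &= \mathrm{LL}^2+\mathrm{LH}^2+\mathrm{HL}^2+\mathrm{HH}^2 .
\end{aligned}
```

The forward map is an orthogonal 4 × 4 linear transform, so it is exactly invertible and preserves energy. Each sub-band has half the height and width of the input, so the four sub-bands together hold exactly as many values as the original: spatial resolution is halved without discarding information, and the three detail sub-bands are where textures and edges are kept.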

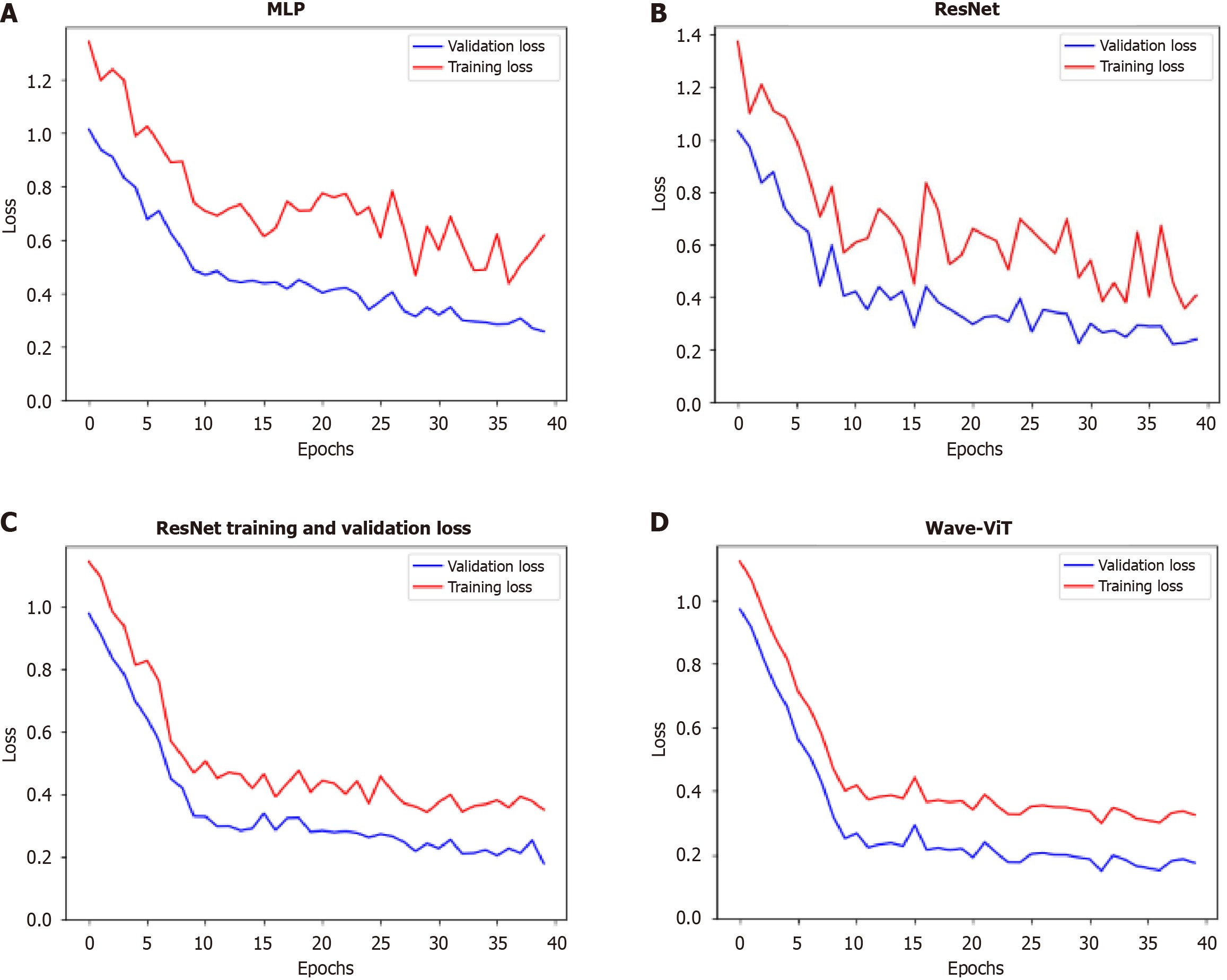

Figure 6 Variation of loss function and validation accuracy during model training.

A-D: Changes in loss function values on the training and validation sets during training of the multi-layer perceptron, residual network, transformer, and Wave-Vision Transformer (Wave-ViT) models, respectively. The curves illustrate each model’s convergence behavior, generalization ability, and risk of overfitting, and comparing them allows training stability and efficiency to be assessed. Faster convergence and lower validation loss for Wave-ViT would directly support its superiority in esophageal cancer diagnosis, indicating that it learns complex features in medical images more effectively while avoiding overfitting and providing strong evidence for its practical application. MLP: Multi-layer perceptron; ResNet: Residual network; Wave-ViT: Wave-Vision Transformer.
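Comparing curves like these often comes down to a few summary numbers: how quickly the loss reaches a target, the epoch with the lowest validation loss, and the train/validation gap at the end. The helpers below are a hypothetical sketch of that bookkeeping; the overfitting flag is a simple heuristic, and the loss values in the usage lines are made-up toy numbers, not results from this study.

```python
def epochs_to_reach(loss, threshold):
    """First (1-indexed) epoch whose loss is <= threshold, or None if never reached."""
    for epoch, value in enumerate(loss, start=1):
        if value <= threshold:
            return epoch
    return None


def summarize_curves(train_loss, val_loss):
    """Summarise per-epoch training and validation losses of equal length."""
    assert len(train_loss) == len(val_loss) > 0
    best = min(range(len(val_loss)), key=val_loss.__getitem__)
    # Heuristic: validation loss bottomed out before the last epoch while the
    # training loss kept falling, which is the classic sign of overfitting.
    overfitting = best < len(val_loss) - 1 and train_loss[-1] < train_loss[best]
    return {
        "best_epoch": best + 1,
        "best_val_loss": val_loss[best],
        "final_gap": val_loss[-1] - train_loss[-1],
        "overfitting_after_best": overfitting,
    }


# Made-up toy curves for illustration only (not values from the study)
train = [1.20, 0.80, 0.50, 0.35, 0.25, 0.18]
val = [1.25, 0.90, 0.62, 0.55, 0.58, 0.66]
summary = summarize_curves(train, val)
print(summary["best_epoch"], summary["overfitting_after_best"])  # 4 True
print(epochs_to_reach(val, 0.60))  # 4
```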

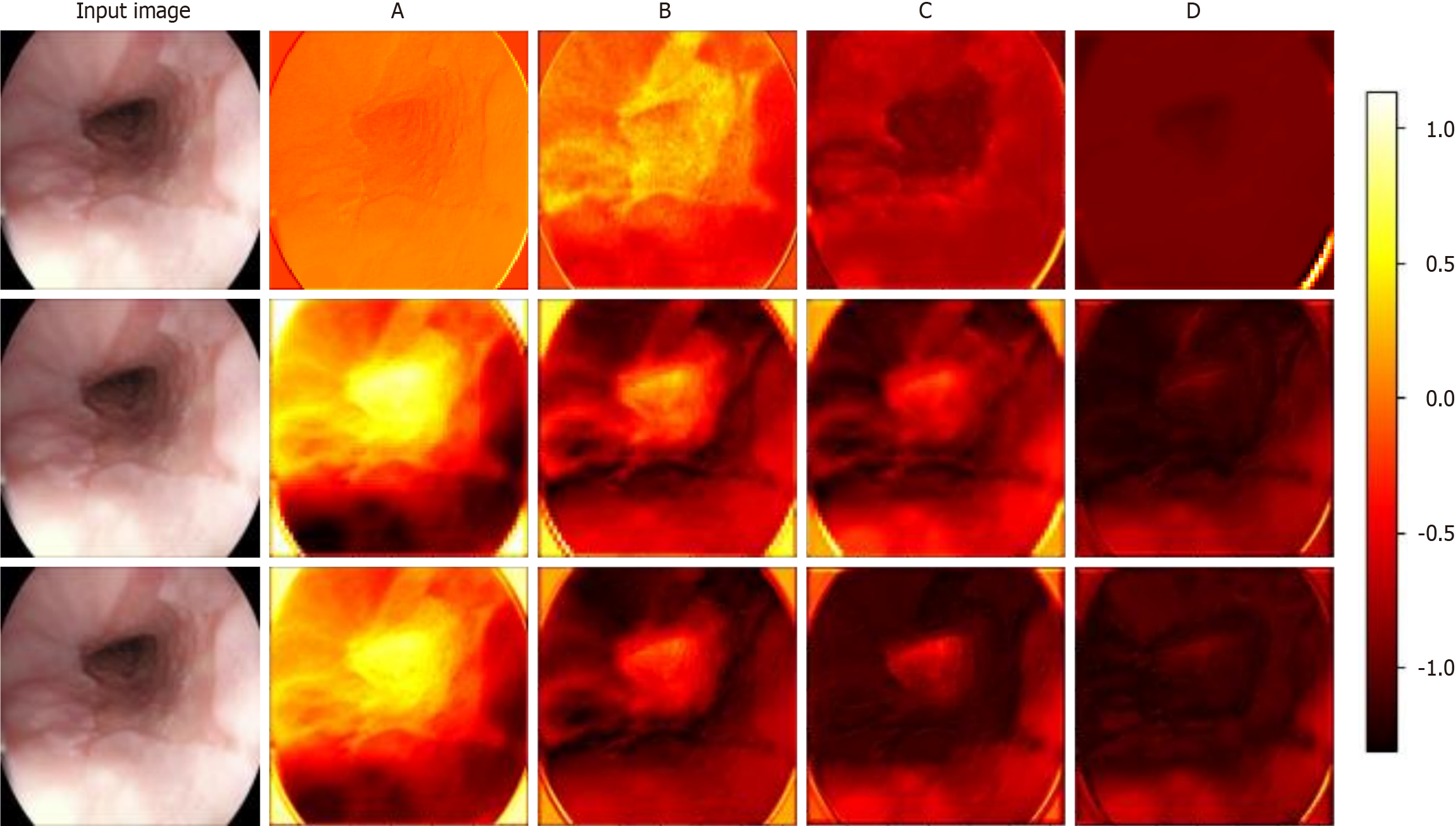

Figure 7 Visualization of feature extraction from different layers in the prognostic model.

The first to third rows show the feature maps extracted by the bottleneck block in the residual network, the transformer block in the transformer model, and the wavelet block in Wave-Vision Transformer (Wave-ViT), respectively. A-D: Feature maps extracted at the four module levels illustrated in Supplementary Figure 1. This visualization provides an intuitive comparison of the feature extraction capabilities of the three models. The contrast among the feature maps reveals Wave-ViT’s advantage in multi-scale feature extraction, particularly its balance between fine detail and global information. These results support Wave-ViT’s superiority in medical image analysis, particularly in esophageal cancer diagnosis, where it more accurately detects early-stage lesions and complex pathological features. The visualization also validates Wave-ViT’s architectural design and provides critical insights for further model optimization[23].

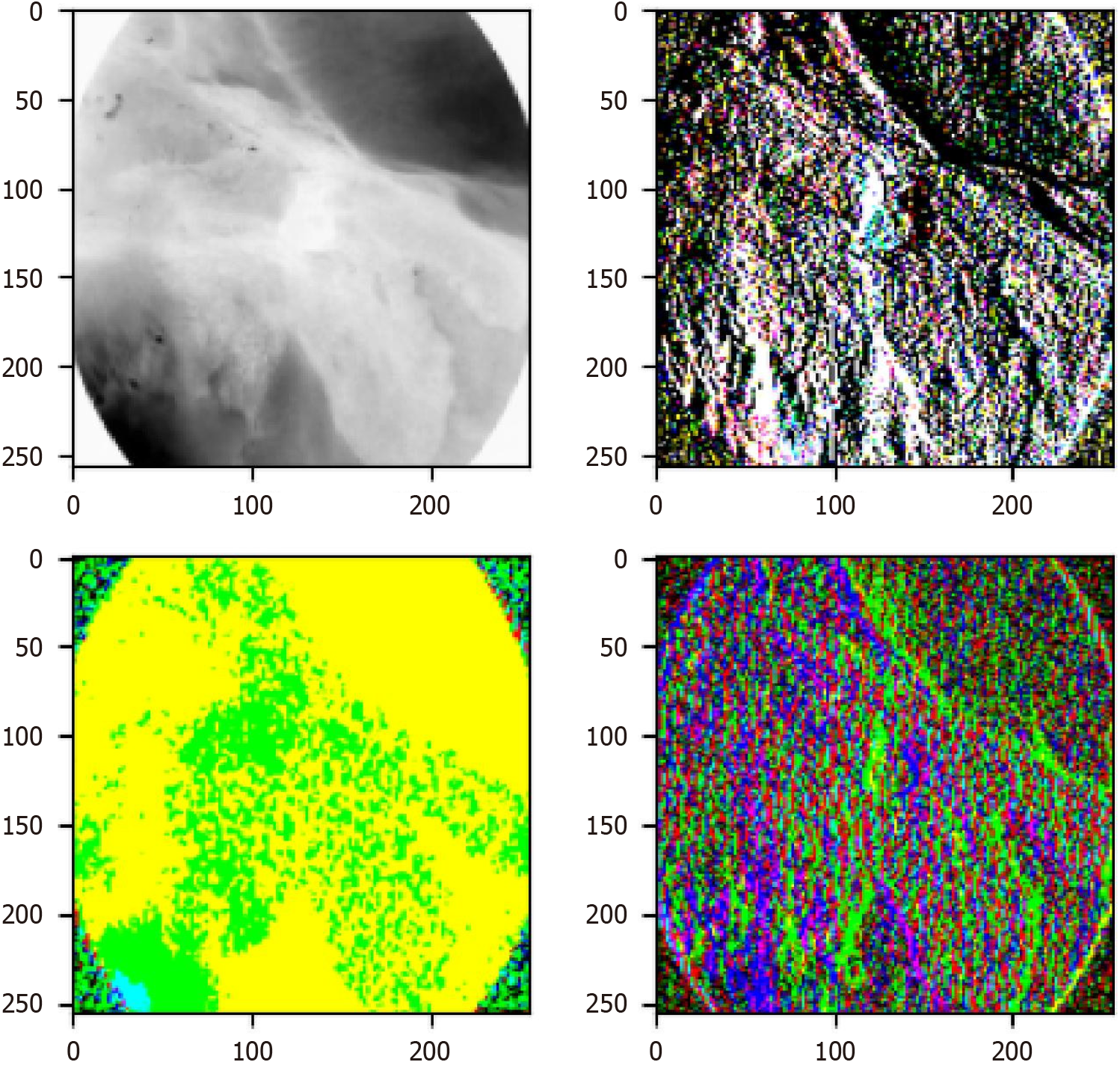

Figure 8 Visualization of wavelet block extracting different frequency domain features.

The top-left image represents the low-frequency sub-band, and the other images depict high-frequency sub-bands. The wavelet transform decomposes the original image into these low- and high-frequency sub-bands: the low-frequency sub-band preserves the primary structure and global information, while the high-frequency sub-bands capture fine details and edge features. After the different sub-bands are processed, the inverse wavelet transform recombines them into a single image, yielding a richer multi-scale feature representation without losing critical information. This process significantly improves the model’s ability to detect subtle pathological changes, which is crucial for esophageal cancer diagnosis; in early-stage esophageal cancer in particular, small and hidden morphological differences among subtypes can be better captured. The figure therefore validates the effectiveness of the wavelet transform in feature extraction and provides strong technical support for the study. The findings demonstrate that the Wave-Vision Transformer model, by integrating multi-scale feature fusion, substantially enhances the accuracy and robustness of esophageal cancer diagnosis[23].
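The decompose, process, and reconstruct cycle described in this caption can be sketched with a one-level 2D Haar transform. The Haar wavelet is an assumption (the section does not name the wavelet used), and the uniform `detail_gain` applied to the high-frequency sub-bands is a hypothetical stand-in for whatever learned processing Wave-ViT applies to each sub-band.

```python
def haar_dwt2(img):
    """One-level orthonormal 2D Haar DWT of an image with even height and width.
    Returns four half-resolution sub-bands: LL (low), LH, HL, HH (high)."""
    h, w = len(img), len(img[0])
    assert h % 2 == 0 and w % 2 == 0, "height and width must be even"
    ll, lh, hl, hh = ([[0.0] * (w // 2) for _ in range(h // 2)] for _ in range(4))
    for i in range(h // 2):
        for j in range(w // 2):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            ll[i][j] = (a + b + c + d) / 2  # local average: global structure
            lh[i][j] = (a - b + c - d) / 2  # change across columns
            hl[i][j] = (a + b - c - d) / 2  # change across rows
            hh[i][j] = (a - b - c + d) / 2  # diagonal change
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuilds the full-resolution image exactly."""
    h2, w2 = len(ll), len(ll[0])
    img = [[0.0] * (2 * w2) for _ in range(2 * h2)]
    for i in range(h2):
        for j in range(w2):
            s, x, y, z = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            img[2 * i][2 * j] = (s + x + y + z) / 2
            img[2 * i][2 * j + 1] = (s - x + y - z) / 2
            img[2 * i + 1][2 * j] = (s + x - y - z) / 2
            img[2 * i + 1][2 * j + 1] = (s - x - y + z) / 2
    return img


def process_and_reconstruct(img, detail_gain=1.0):
    """Decompose, scale the high-frequency sub-bands (hypothetical processing), rebuild."""
    ll, lh, hl, hh = haar_dwt2(img)

    def scale(band):
        return [[detail_gain * v for v in row] for row in band]

    return haar_idwt2(ll, scale(lh), scale(hl), scale(hh))


img = [[0, 8, 8, 8], [0, 8, 8, 8], [4, 4, 4, 4], [4, 4, 4, 4]]  # toy image with an edge
print(haar_dwt2(img)[1][0][0])               # -8.0: the edge shows up in a detail band
print(process_and_reconstruct(img, 0.0)[0])  # [4.0, 4.0, 8.0, 8.0]
```

With `detail_gain=1.0` the input is recovered exactly, showing that the decomposition itself loses nothing; `detail_gain=0.0` keeps only the low-frequency sub-band (each 2 × 2 block collapses to its mean), and values above 1 accentuate edges.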

- Citation: Wei W, Zhang XL, Wang HZ, Wang LL, Wen JL, Han X, Liu Q. Application of deep learning models in the pathological classification and staging of esophageal cancer: A focus on Wave-Vision Transformer. World J Gastroenterol 2025; 31(19): 104897

- URL: https://www.wjgnet.com/1007-9327/full/v31/i19/104897.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i19.104897