Copyright

©The Author(s) 2021.

World J Gastroenterol. May 28, 2021; 27(20): 2545-2575

Published online May 28, 2021. doi: 10.3748/wjg.v27.i20.2545

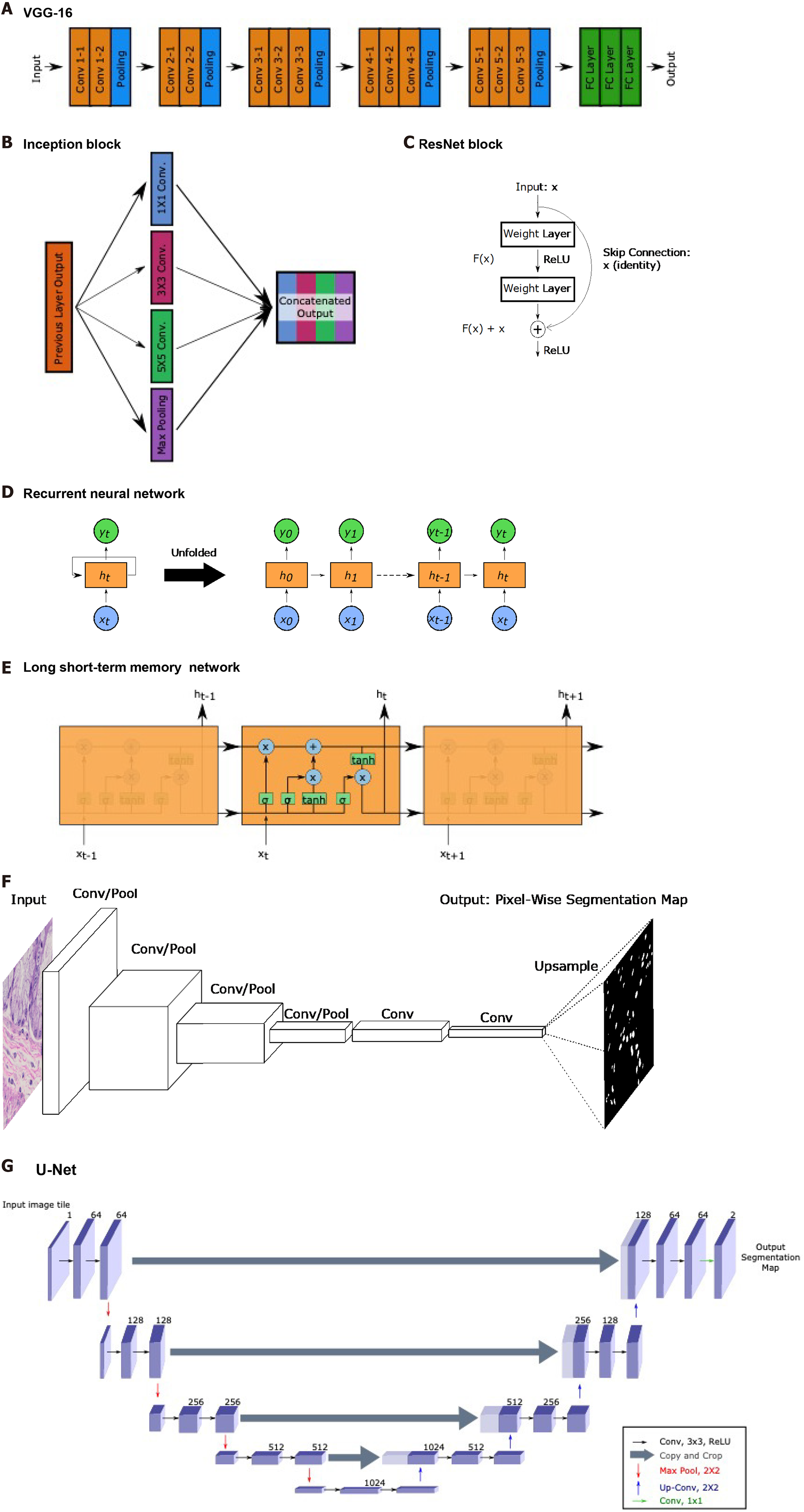

Figure 5 Common landmark network architectures.

Overviews of the landmark network architectures discussed in this paper are presented. A: The visual geometry group network stacks sequential convolutional and pooling layers that feed into fully connected layers for classification; B: The inception block used in the inception networks applies convolutions with multiple filter sizes and max pooling in parallel to the same input and concatenates the results to generate an output; C: The residual block used in ResNet networks incorporates a skip connection that adds the block input to the block output; D: Recurrent neural networks (RNNs) have repeating, sequential blocks that take previous block outputs as input. Predictions at each block are dependent on earlier block predictions; E: Long short-term memory networks also have a sequential format similar to that of RNNs. The horizontal arrow at the top of the cell represents the memory component of these networks; F: Fully convolutional networks perform a series of convolution and pooling operations but have no fully connected layer at the end. Instead, convolutional layers are added and deconvolution operations are performed to upsample and generate a segmentation map output of the same dimensions as the input. Nuclear segmentation images are included for illustration purposes; G: U-Net takes its U shape from the contracting path, which performs convolutions and pooling, and from the expanding (decoder) path, which performs deconvolutions to upsample dimensions. Horizontal arrows show concatenation of feature maps from convolutional layers to corresponding deconvolution outputs. VGG: Visual geometry group.
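The parallel-branch concatenation in panel B and the skip connection in panel C can be illustrated with a minimal NumPy sketch. This is not the implementation used by the inception or ResNet authors: the function names (`conv2d_same`, `inception_like_block`, `residual_block`), the choice of 1×1, 3×3 and 5×5 branches, the two-convolution residual body, and the omission of biases, batch normalization and training are all simplifying assumptions made only to show how the tensors flow.

```python
import numpy as np


def relu(x):
    """Element-wise rectified linear unit."""
    return np.maximum(x, 0.0)


def conv2d_same(x, kernel):
    """Stride-1, zero-padded convolution (cross-correlation, as in most
    deep learning libraries).

    x:      array of shape (C_in, H, W)
    kernel: array of shape (C_out, C_in, k, k) with odd k
    Returns an array of shape (C_out, H, W).
    """
    c_out, _, k, _ = kernel.shape
    pad = k // 2
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c_out, h, w))
    # Accumulate the contribution of each kernel offset (i, j).
    for i in range(k):
        for j in range(k):
            window = padded[:, i:i + h, j:j + w]
            out += np.einsum("oc,chw->ohw", kernel[:, :, i, j], window)
    return out


def max_pool_3x3_same(x):
    """3x3 max pooling with stride 1; padding keeps H and W unchanged."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)), constant_values=-np.inf)
    windows = [padded[:, i:i + h, j:j + w] for i in range(3) for j in range(3)]
    return np.max(np.stack(windows), axis=0)


def inception_like_block(x, k1, k3, k5):
    """Panel B (simplified): parallel branches over the same input,
    concatenated along the channel axis."""
    branches = [
        relu(conv2d_same(x, k1)),  # 1x1 convolution branch
        relu(conv2d_same(x, k3)),  # 3x3 convolution branch
        relu(conv2d_same(x, k5)),  # 5x5 convolution branch
        max_pool_3x3_same(x),      # max pooling branch
    ]
    return np.concatenate(branches, axis=0)


def residual_block(x, k_first, k_second):
    """Panel C (simplified): output = relu(F(x) + x), where the '+ x'
    term is the skip connection. Both kernels must preserve the channel
    count so that the addition is well defined."""
    out = relu(conv2d_same(x, k_first))
    out = conv2d_same(out, k_second)
    return relu(out + x)
```

For a 4-channel 8×8 input and branch kernels with 2, 3 and 1 output channels, `inception_like_block` returns an array of shape `(10, 8, 8)`: the three convolution branches contribute 2 + 3 + 1 channels and the pooling branch passes through the 4 input channels. If both residual kernels are zero, `residual_block` reduces to `relu(x)`, which shows that the skip connection carries the input forward even when the learned branch contributes nothing.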

- Citation: Kobayashi S, Saltz JH, Yang VW. State of machine and deep learning in histopathological applications in digestive diseases. World J Gastroenterol 2021; 27(20): 2545-2575

- URL: https://www.wjgnet.com/1007-9327/full/v27/i20/2545.htm

- DOI: https://dx.doi.org/10.3748/wjg.v27.i20.2545